Prasanna Kannappan


Baby Tracking and Behavior Analysis using a Network of Cameras

- Prasanna Kannappan, Elena Kokkoni, Ashkan Zehfroosh, Caili Li, Efi Mavroudi, Kristina Strother-Garcia, Navid Kermani, Dr. Herbert G. Tanner, Dr. Cole Galloway, Dr. Rene Vidal and Dr. Jeffrey Heinz @ University of Delaware (2016-2017)

Children learn by moving and manipulating objects. Motion disabilities affect this learning process, especially during the formative childhood years. The Grounded Early Adaptive Rehabilitation (GEAR) project investigates the use of robots to encourage children with disabilities to move. The project puts children through a range of fun activities such as sliding down a ramp and climbing stairs. The play area is also fitted with a harness system that provides artificial support to help a baby stand up and move. The play area is shared with the robots Nao, Dash and Dot, which actively engage with the baby and compete for its attention. The driving idea is to use the robots to encourage the baby to move and interact with the elements in the play area. The play area is monitored through a network of cameras (Kinect and Point Grey), whose data will provide researchers with a wealth of information. The future direction of this project is towards building activity recognition algorithms to track improvements in a baby's motion abilities.

Project Links: article1 article2 paper

Distance Based Global Descriptors for Multi-view Object Recognition

- Prasanna Kannappan and Dr. Herbert G. Tanner @ University of Delaware (2015-2016)

Object classification is an important component of any object recognition system. A well-designed object classifier should offer high recognition rates while avoiding false positives. Classification becomes harder in noisy images, where there is not enough information to identify an object unambiguously. This paper proposes a multi-view algorithm that combines information from multiple images of a single target object, captured from different heights, to determine the identity of the object. The use of global feature descriptors also removes the need for segmentation. The method has been assessed on a binary classification problem over an underwater image dataset.
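The idea of pooling evidence across views can be sketched as follows. This is only an illustration under stated assumptions, not the method from the paper: the gray-level histogram descriptor, the L1 distance, the class prototypes and all function names (`histogram`, `l1`, `classify_multiview`) are hypothetical choices made for the example.

```python
def histogram(image, bins=4, max_value=256):
    """Global descriptor (assumed): normalized gray-level histogram of the whole image."""
    counts = [0] * bins
    pixels = [v for row in image for v in row]
    for v in pixels:
        counts[min(v * bins // max_value, bins - 1)] += 1
    return [c / len(pixels) for c in counts]


def l1(a, b):
    """L1 distance between two descriptors of equal length."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify_multiview(views, prototypes):
    """Sum each class's descriptor distance over all views; the smallest total wins."""
    totals = {
        label: sum(l1(histogram(view), proto) for view in views)
        for label, proto in prototypes.items()
    }
    return min(totals, key=totals.get), totals


# Hypothetical class prototypes (bright target vs. dark background).
prototypes = {
    "target": [0.0, 0.0, 0.5, 0.5],
    "background": [0.5, 0.5, 0.0, 0.0],
}

# Three tiny views of the same object; the second one is noisy and,
# taken alone, is closer to the background prototype.
views = [
    [[200, 220], [150, 130]],
    [[40, 200], [100, 90]],
    [[180, 140], [250, 60]],
]

label, totals = classify_multiview(views, prototypes)
print(label, totals)
```

Because the descriptor is computed over the whole image, no segmentation step is needed, and the misleading second view is outvoted by the other two.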

Images of an orange specimen captured in an underwater environment from different physical heights

Modeling Multi-agent Systems under Uncertainty

- Prasanna Kannappan, Konstantinos Karydis, Adam Jardine, Kevin Leahy, Dr. Herbert G. Tanner, Dr. Jeffrey Heinz, Dr. Calin Belta @ University of Delaware and Boston University (2015-2016)

Interactions in multi-agent systems present interesting scenarios for study. Developing winning strategies in multi-agent games, modeling unknown adversarial agents and building leader-follower systems resilient to catastrophic failures are some of the problems addressed in this project. The underlying mathematical concepts were drawn from machine learning, automata theory, computational linguistics and control theory.

Project Links: poster paper1 paper2 code

Multi-agent game where a quadrotor (agent) models an unknown adversary (ground robot) and tries to devise a strategy to eventually win a grid-world game

|

|

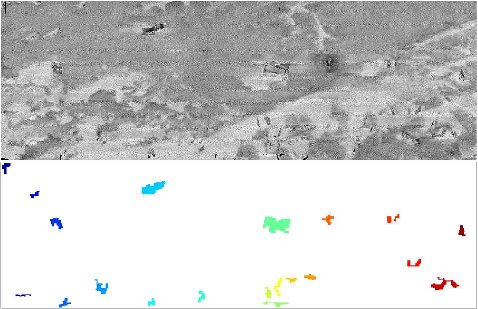

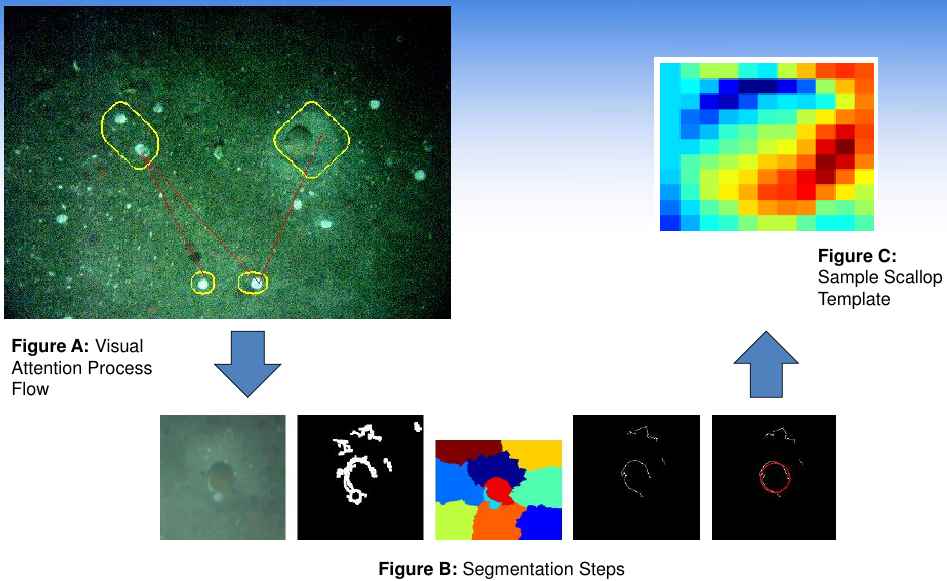

| Counting

Scallops

and Subway Cars from Underwater

Images Top↑

- Prasanna Kannappan, Justin H. Walker, Dr. Art Trembanis and Dr. Herbert G. Tanner @ University of Delaware (2012-2013)

Automating the counting of marine animals like scallops benefits marine population survey efforts. These surveys are tools for policy makers to regulate fishing activities, and sources of information for biologists and marine ecologists interested in population statistics of marine species. Counting and studying subway cars on the ocean floor as potential artificial reef sites is another interesting problem. In this project we discuss some practical difficulties that arise when detecting scallops and subway cars from visual data, and propose a solution based on top-down visual attention, graph-cut segmentation and template matching.

Project Links: ppt1 ppt2 paper poster
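To make the last stage of that pipeline concrete, here is a minimal sketch of template matching by normalized cross-correlation. It is an assumption-laden illustration: the project does not state which similarity measure or template it used, and the names `ncc` and `best_match` as well as the toy image are hypothetical. The visual attention and graph-cut stages are not shown.

```python
import math


def ncc(patch, template):
    """Normalized cross-correlation between two equally sized 2-D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p, mean_t = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = math.sqrt(
        sum((a - mean_p) ** 2 for a in p) * sum((b - mean_t) ** 2 for b in t)
    )
    return num / den if den > 0 else 0.0


def best_match(image, template):
    """Slide the template over the image; return (score, row, col) of the best NCC."""
    th, tw = len(template), len(template[0])
    best = (-2.0, -1, -1)
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, r, c)
    return best


# Toy cross-shaped template and a small image that contains it.
template = [[0, 9, 0],
            [9, 9, 9],
            [0, 9, 0]]
image = [[1, 1, 1, 1, 1],
         [1, 1, 0, 9, 0],
         [1, 1, 9, 9, 9],
         [1, 1, 0, 9, 0],
         [1, 1, 1, 1, 1]]

score, row, col = best_match(image, template)
print(score, row, col)
```

In a full pipeline the search would run only over the candidate regions produced by the attention and segmentation stages rather than over the whole image.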

Underwater image with scallops shown in red circles

Subway cars seen in sonar images of Redbird reef site

Scallop recognition process pipeline involving visual attention, segmentation and template matching

|

|

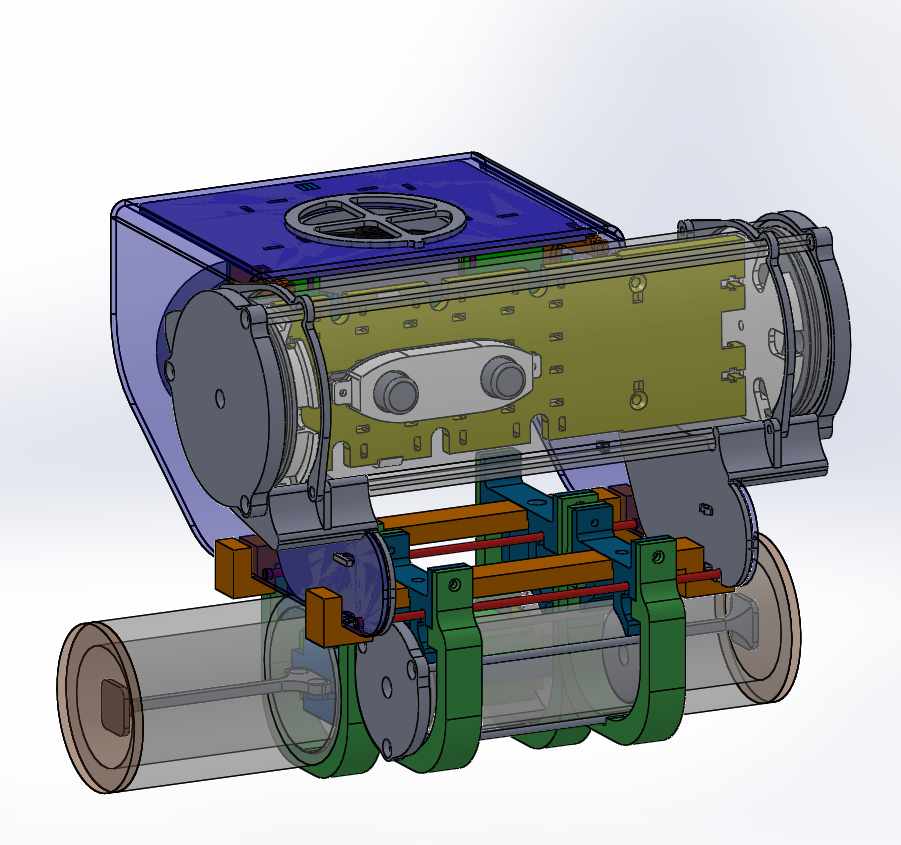

| Building

CoopROV: A Remotely Operated Vehicle for Underwater Exploration

Top↑

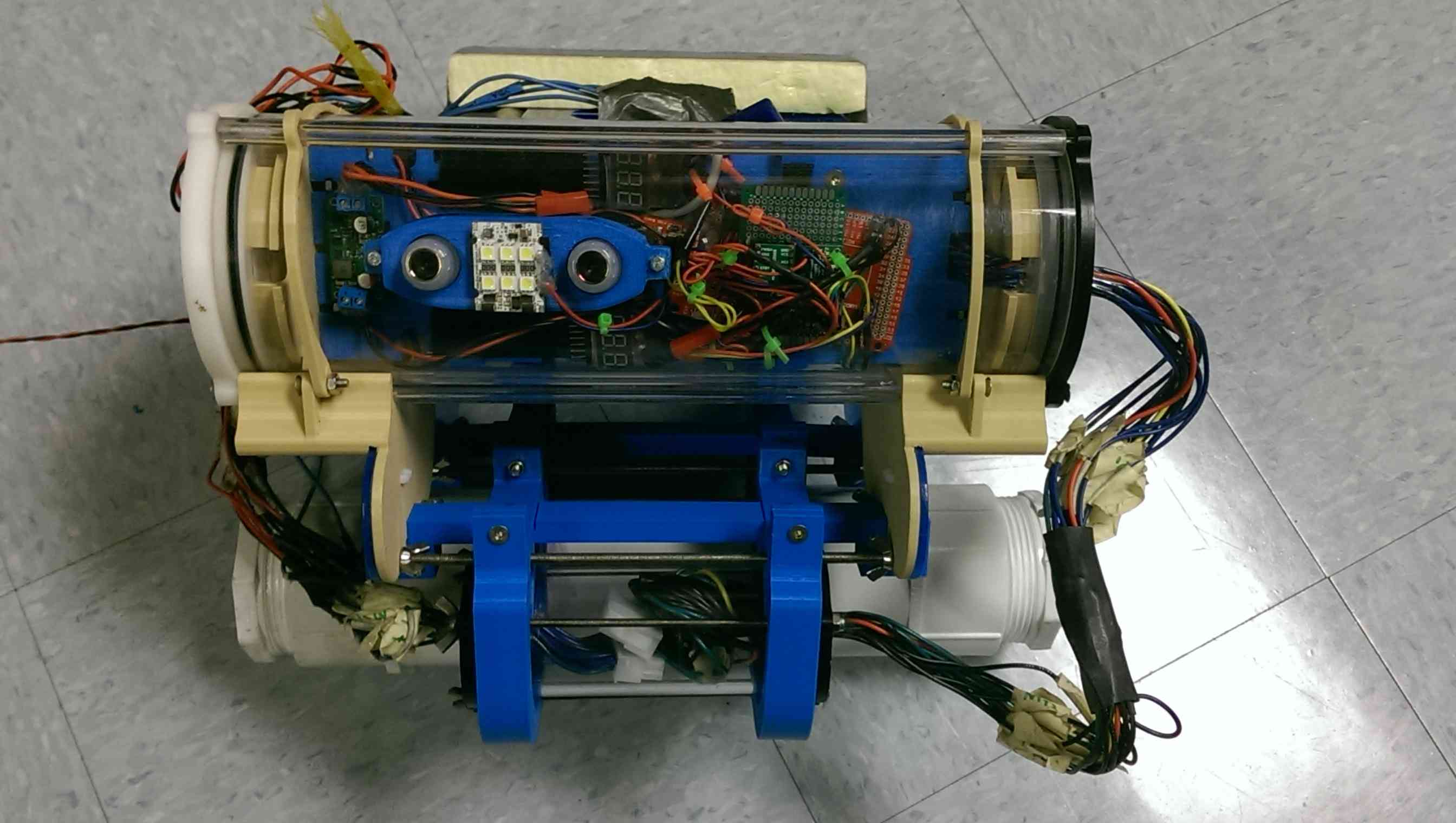

- Prasanna Kannappan and Dr. Herbert G. Tanner @ University of Delaware (2013-2014)

To study underwater environments, robotic vehicles such as Autonomous Underwater Vehicles (AUVs) and Remotely Operated Vehicles (ROVs) are commonly used. In this direction, we have been developing CoopROV, which started from the OpenROV designs and has evolved into a low-cost, advanced research vehicle. Its electronics suite includes a Raspberry Pi, an Arduino Mega, an IMU, a depth sensor and a stereo camera, all tied together through in-house software based on the Robot Operating System (ROS). In the future, this platform will serve as an implementation testbed for the object recognition algorithms that we have been developing.

Project Links: poster

CoopROV Electronics

CoopROV

Object Recognition using Eigenvalue-based Shape Descriptors

- Prasanna Kannappan and Dr. Herbert G. Tanner @ University of Delaware (2011)

Identifying objects is an important part of the decision making that robots need to be good at to solve real-world problems. Object recognition as a field draws heavily on computer vision and machine learning techniques. In this work, we use eigenvalue-based shape descriptors to classify classes of objects. Interest in these descriptors began when Kac asked the question "Can one hear the shape of a drum?". Later studies showed that these descriptors are invariant to rotation, scaling and translation, which makes them very effective for object recognition.

Project Links: ppt
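A rough sketch of such a descriptor is given below. It assumes the descriptor is built from ratios of the eigenvalues of the Laplacian with zero (Dirichlet) boundary conditions, the operator behind Kac's question; the project page does not spell out the exact construction, so the finite-difference discretization, the choice of ratios and the function name `laplacian_eigen_descriptor` are all assumptions. It requires NumPy.

```python
import numpy as np


def laplacian_eigen_descriptor(mask, k=4):
    """Ratios lambda_1 / lambda_j (j = 2..k+1) of the 5-point Dirichlet Laplacian
    defined on the foreground pixels of a binary mask."""
    mask = np.asarray(mask, dtype=bool)
    n = int(mask.sum())
    index = -np.ones(mask.shape, dtype=int)
    index[mask] = np.arange(n)              # number the foreground pixels
    lap = np.zeros((n, n))
    rows, cols = mask.shape
    for r, c in zip(*np.nonzero(mask)):
        i = index[r, c]
        lap[i, i] = 4.0
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc]:
                lap[i, index[rr, cc]] = -1.0
    eig = np.linalg.eigvalsh(lap)           # eigenvalues in ascending order
    return eig[0] / eig[1:k + 1]


# An L-shaped region, the same region rotated by 90 degrees, and translated.
shape = np.zeros((6, 6), dtype=int)
shape[0:6, 0:2] = 1                         # vertical bar of the "L"
shape[4:6, 2:5] = 1                         # horizontal foot
rotated = np.rot90(shape)
shifted = np.pad(shape, ((3, 0), (2, 0)))

d_shape, d_rot, d_shift = (
    laplacian_eigen_descriptor(s) for s in (shape, rotated, shifted)
)
print(d_shape, d_rot, d_shift)
```

Rotating by 90 degrees or translating the region only relabels the pixels, so the eigenvalues are unchanged. In the continuous setting, scaling a shape by a factor s divides every eigenvalue by s squared, so the ratios cancel the scale; on a pixel grid that invariance holds only approximately.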

Cooperative Target Acquisition, Detection and Classification

- Prasanna Kannappan, Luis Valbuena Reyes and Dr. Herbert G. Tanner @ University of Delaware (2011)

Learning the identity of a target using the data acquisition capabilities of multiple robotic agents is an interesting research paradigm. Such distributed systems help gather more detailed information about targets, enabling humans or robotic systems to infer the identity of unknown objects. As a proof-of-concept study, three Corobot ground vehicles were used to collect several images, from different points of view, of a large target that cannot fit into the field of view of any single agent. These images were then stitched together using only the position information from a Vicon motion capture system. The advantages of this method are minimal processing and inexpensive stitching compared to algorithms that find pointwise correspondences between images. One component of future study is handling uncompensated localization errors, which can adversely affect the stitching algorithm.
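Position-only stitching can be sketched as pasting each image onto a shared canvas at an offset computed from its known pose. The sketch below makes strong simplifying assumptions that are not taken from the project: a flat target, cameras that all face it with the same orientation and scale, a known pixels-per-meter factor, and gray-level images stored as nested lists. The function name `stitch_by_position` is hypothetical.

```python
def stitch_by_position(tiles, px_per_m):
    """Build a mosaic from (image, x_m, y_m) tuples.

    image is a 2-D list of gray values; (x_m, y_m) is the position of its
    top-left corner in the target plane, e.g. from motion capture.
    Overlapping pixels are averaged; uncovered pixels are None.
    """
    placed = [(img, round(y * px_per_m), round(x * px_per_m)) for img, x, y in tiles]
    r0 = min(r for _, r, _ in placed)
    c0 = min(c for _, _, c in placed)
    height = max(r - r0 + len(img) for img, r, _ in placed)
    width = max(c - c0 + len(img[0]) for img, _, c in placed)
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for img, r, c in placed:
        for i, row in enumerate(img):
            for j, value in enumerate(row):
                total[r - r0 + i][c - c0 + j] += value
                count[r - r0 + i][c - c0 + j] += 1
    return [
        [total[i][j] / count[i][j] if count[i][j] else None for j in range(width)]
        for i in range(height)
    ]


# Three 2x3 tiles taken 0.2 m apart, at 10 pixels per meter.
tile_a = [[10, 10, 10], [10, 10, 10]]
tile_b = [[20, 20, 20], [20, 20, 20]]
tile_c = [[30, 30, 30], [30, 30, 30]]
mosaic = stitch_by_position(
    [(tile_a, 0.0, 0.0), (tile_b, 0.2, 0.0), (tile_c, 0.4, 0.0)], px_per_m=10
)
for row in mosaic:
    print(row)
```

No feature detection or correspondence search is involved, which is what keeps the processing cheap. The flip side is that any error in the measured positions shifts a tile directly, with nothing to correct it.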

Corobots

Stitched image

Corobots cooperatively acquiring a large target

Visual Servoing using Kinect and AR Tags

- Prasanna Kannappan and Konstantinos Karydis (2014) @ University of Delaware (2012)

Visual servoing is a technique that uses images from an onboard camera to drive a robot to a desired target position. This concept was demonstrated using an octapod robot with an onboard Kinect sensor. The robot was required to climb a ramp marked with an AR tag. The robot localized itself using images of the AR tag from the Kinect data, and a proportional (P) controller then drove the robot to the top of the ramp. The octapod was fully 3D-printed and used a Raspberry Pi and an Arduino Nano as the onboard processor and controller. The system was implemented using the Robot Operating System (ROS) and the Point Cloud Library (PCL).

Project Links: ppt source code
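The control step can be illustrated with a small go-to-goal simulation. This is a sketch under assumptions rather than the code that ran on the robot: it takes the robot pose as already estimated (in the project it came from the AR tag), uses a simple unicycle motion model, and the gains, time step and the function name `p_control` are made up for the example.

```python
import math


def p_control(x, y, heading, goal_x, goal_y, k_lin=0.5, k_ang=2.0):
    """Proportional control: commands are proportional to distance and heading error."""
    dx, dy = goal_x - x, goal_y - y
    distance = math.hypot(dx, dy)
    heading_error = math.atan2(dy, dx) - heading
    # Wrap the error to [-pi, pi] so the robot turns the short way round.
    heading_error = math.atan2(math.sin(heading_error), math.cos(heading_error))
    return k_lin * distance, k_ang * heading_error


# Simulate a unicycle robot driving from the origin to a goal pose.
x, y, heading = 0.0, 0.0, 0.0
goal = (2.0, 1.0)          # hypothetical top-of-ramp position, in meters
dt = 0.05                  # control period in seconds (assumed)
for _ in range(600):
    v, w = p_control(x, y, heading, *goal)
    x += v * math.cos(heading) * dt
    y += v * math.sin(heading) * dt
    heading += w * dt

final_error = math.hypot(goal[0] - x, goal[1] - y)
print(final_error)
```

A pure P-controller slows down as the error shrinks, so the approach is smooth but never exactly reaches the goal in finite time; stopping once the error falls below a threshold is the usual practical fix.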

Demo: Observer point of view

Demo: Robot point of view

|

|

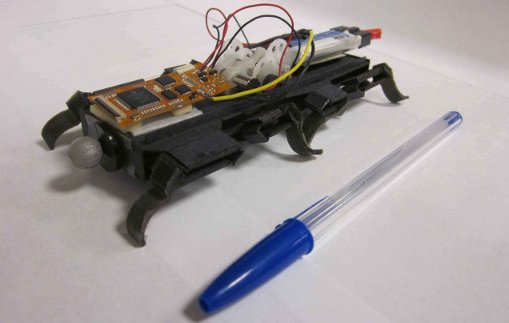

| Robotic

Leader-Follower

implementation using I-robot Create and

Octaroach Top↑

- Prasanna Kannappan and Konstantinos Karydis @ University of Delaware (2012)

Leader-follower systems are a way to coordinate the actions of multiple robots. In such a system, the leader's actions are generally directed at achieving an objective or reaching a target location. The follower robots observe the actions of the leader and generate their own sequence of actions to satisfy some group objective, such as maintaining a specific formation among the swarm members. In this implementation, an Octaroach robot played the part of the leader and an iRobot Create the follower. The Octaroach was teleoperated, and the iRobot Create used an onboard camera to track the color fiducial markers on the Octaroach and follow it. The whole system was implemented using the Robot Operating System (ROS) and the Open Computer Vision (OpenCV) library.

Project Links: ppt source code
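The follower's perception step can be sketched in plain Python as color thresholding followed by a centroid computation. The project used OpenCV for this; the version below avoids that dependency and is only an illustration. The tolerance, the marker color, the steering convention and the names `marker_centroid` and `steering` are assumptions.

```python
def marker_centroid(image, target, tol=40):
    """Return the (row, col) centroid of pixels within tol of the target color.

    image is a 2-D list of (r, g, b) tuples; returns None if nothing matches.
    """
    row_sum = col_sum = n = 0
    for r, line in enumerate(image):
        for c, pixel in enumerate(line):
            if all(abs(a - b) <= tol for a, b in zip(pixel, target)):
                row_sum += r
                col_sum += c
                n += 1
    return (row_sum / n, col_sum / n) if n else None


def steering(centroid, width, gain=1.0):
    """Turn command from the marker's horizontal offset, normalized to [-1, 1].

    Positive means the marker is right of the image center (assumed convention).
    """
    if centroid is None:
        return 0.0
    half = (width - 1) / 2
    return gain * (centroid[1] - half) / half


# A 5x7 black frame with a red marker in the upper-right region.
black, red = (0, 0, 0), (250, 10, 10)
frame = [[black] * 7 for _ in range(5)]
for r in (1, 2):
    for c in (5, 6):
        frame[r][c] = red

centroid = marker_centroid(frame, target=(255, 0, 0))
turn = steering(centroid, width=7)
print(centroid, turn)
```

Feeding the turn command to the follower at every frame keeps the marker near the image center, which is enough to make it trail the leader.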

iRobot Create

Color fiducials

Octaroach

Experiment Setup

Demo: Robot point of view

Demo: Observer point of view

Vehicle Localization using GPS and IMU

- Prasanna Kannappan, Dr. Sharon Ong and Dr. Marcelo Ang Jr. @ National University of Singapore (2009)

Vehicle localization is the task of identifying the location of a mobile vehicle on a map. Such localization systems are part of the navigation aids available in today's automobiles. These navigation aids rely heavily on the Global Positioning System (GPS) to determine a vehicle's position. In indoor environments or dense forests, where GPS availability is limited, these systems fail. In this project we developed a localization system that integrates GPS, an Inertial Measurement Unit (IMU) and accelerometers into a hybrid system that can work even in GPS-denied environments. The sensor fusion was performed using a Kalman filter.

Project Links: report ppt source code
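The fusion idea can be shown with a deliberately simplified scalar Kalman filter. It tracks position along a route in one dimension, predicts with the displacement obtained from inertial dead reckoning, and corrects with GPS whenever a fix is available. The actual system's state vector and noise models are not described here, so the one-dimensional state, the noise variances and the name `kalman_step` are assumptions.

```python
def kalman_step(x, p, u, z, q=0.05, r=4.0):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : position estimate and its variance
    u    : displacement since the last step from inertial dead reckoning
    z    : GPS position, or None when no fix is available
    q, r : process and measurement noise variances (illustrative values)
    """
    # Predict: move by the inertial displacement; uncertainty grows.
    x, p = x + u, p + q
    # Update: blend in the GPS fix, weighted by the Kalman gain.
    if z is not None:
        k = p / (p + r)
        x, p = x + k * (z - x), (1 - k) * p
    return x, p


# The vehicle truly moves 1 m per step; the inertial estimate is biased to 1.1 m.
# GPS readings carry an alternating +/-0.5 m error and drop out for steps 8 to 12.
x, p = 0.0, 1.0
variances = []
for step in range(1, 21):
    truth = float(step)
    gps = None if 8 <= step <= 12 else truth + (0.5 if step % 2 else -0.5)
    x, p = kalman_step(x, p, u=1.1, z=gps)
    variances.append(p)

print(x, p)
```

During the outage the filter keeps producing estimates from the inertial data alone while its variance grows; once GPS returns, the variance shrinks again and the accumulated drift is pulled back toward the measurements.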

Crossbow DMU RGA300CA- IMU

SerAccel V5 Triple-Axis Accelerometer

FV-M11 GPS Receiver

A bus ride from NUS Engineering building to NUS Arts building as logged by the localization system overlaid on a map