Overview

I invented, developed, and deployed a vision-based localization system for an underwater robot that performs precision inspection of nuclear reactors. A pan-tilt-zoom (PTZ) camera mounted above the robot serves as the primary sensing modality. The localization system runs at 20 Hz and localizes the robot to within several centimeters. The system is currently used in reactor inspection operations.

Summary

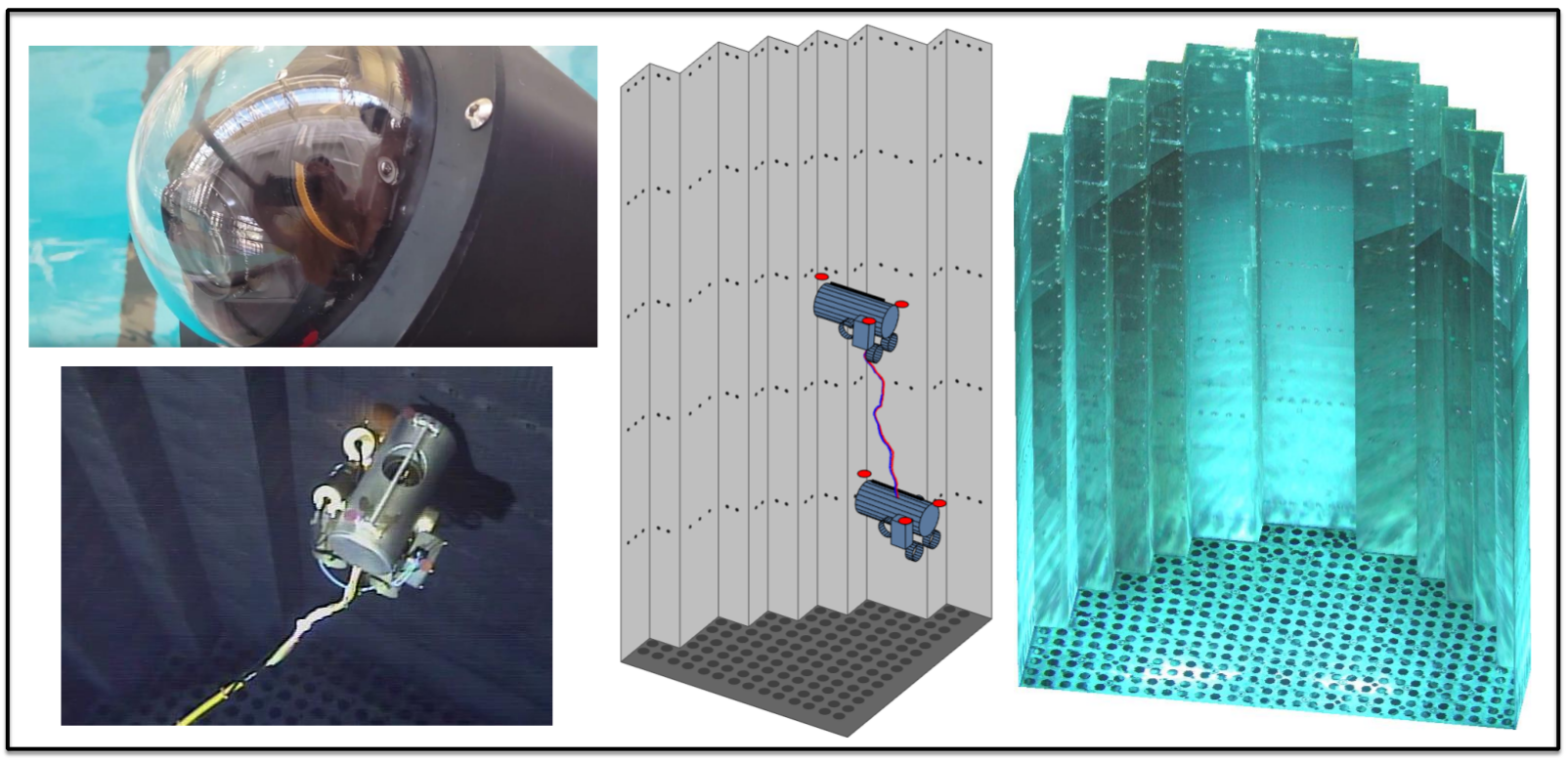

This technology (the “ROV Tracker”, where ROV stands for remotely operated vehicle) was invented to make nuclear reactor inspections more efficient. It adds utility and assurance to inspections today, and it provides the state estimation cornerstone for eventually automating reactor inspection fully through autonomous navigation. The intense radiation the robot is exposed to during inspection (approx. 30 kRad/hour closest to the reactor walls) imposed severe sensing constraints on the localization system, because even sensors with standard radiation hardening would eventually degrade and fail. A pan-tilt-zoom (PTZ) camera was therefore chosen as the primary sensing modality, which avoids placing any sensors on board the robot. The camera is rigidly mounted above the reactor and tracks the robot via visual servoing. The large distance between the camera and the robot is compensated by greater optical zoom, which preserves enough image resolution for accurate localization.

The technology uses an extended Kalman filter (EKF) to simultaneously estimate the three-dimensional pose of both the camera and the robot. As measurement inputs, the projections of reactor structural landmarks (such as flow holes and the lines formed by adjacent baffle plates) make the camera pose and focal length observable, while fiducial markers mounted on the robot provide the image observations from which the robot's three-dimensional pose is inferred. The key insight that allows three-dimensional pose to be inferred from purely two-dimensional image measurements is that the precisely known structural dimensions of the reactor and the robot serve as a prior. This prior resolves the scale ambiguity that would otherwise arise with a single camera. The technology was shown to be highly accurate when compared against ground-truth pose from high-fidelity motion capture on a subscale laboratory mockup.
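To make the scale argument concrete, the following minimal sketch uses an ideal pinhole model (no lens distortion) to project points into the image, then recovers range from two points whose metric spacing is known a priori. The function names, the 4000-pixel focal length, the 0.2 m marker spacing, and the 10 m standoff are hypothetical illustration values, not parameters of the ROV Tracker, whose EKF measurement model is not given here.

```python
def world_to_camera(p_world, R_cw, t_cw):
    """Express a world-frame point in the camera frame: p_c = R_cw * p_w + t_cw."""
    return tuple(
        sum(R_cw[i][j] * p_world[j] for j in range(3)) + t_cw[i] for i in range(3)
    )


def project(p_cam, f, cx, cy):
    """Ideal pinhole projection (no distortion); the camera z-axis points forward."""
    X, Y, Z = p_cam
    if Z <= 0.0:
        raise ValueError("point is behind the camera")
    return (f * X / Z + cx, f * Y / Z + cy)


def range_from_known_spacing(u_a, u_b, spacing_m, f):
    """Range to two fronto-parallel points whose metric spacing is known a priori."""
    return f * spacing_m / abs(u_b - u_a)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = (0.0, 0.0, 10.0)  # hypothetical: marker plane 10 m in front of the camera
    f = 4000.0            # hypothetical zoomed-in focal length, in pixels
    # Two hypothetical fiducials 0.2 m apart on the robot.
    a = project(world_to_camera((-0.1, 0.0, 0.0), identity, t), f, 960.0, 540.0)
    b = project(world_to_camera((0.1, 0.0, 0.0), identity, t), f, 960.0, 540.0)
    print(a, b, range_from_known_spacing(a[0], b[0], 0.2, f))
```

The pixel separation scales as f · spacing / Z. Doubling the camera-to-robot distance therefore halves the separation unless the focal length (zoom) is also doubled, which is the trade-off the PTZ zoom compensates for.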
Following laboratory experiments and full-scale experiments with a reactor mockup, the technology was shown to provide localization information successfully when deployed to a nuclear reactor. It includes online methods for camera calibration and EKF initialization so that inspection personnel can bootstrap the system themselves. I also explored uses beyond robot localization through which this technology could have a high impact on reactor inspection operations, such as visual inspection of high-fidelity three-dimensional reconstructions of the reactor built using homography.
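A homography maps image points between two views of a planar surface, such as a flat baffle plate. The sketch below is a hypothetical, minimal illustration of that building block. It estimates a homography from exactly four point correspondences with the direct linear transform (fixing h33 = 1), then applies it to a new point. It is not the reconstruction pipeline described above. Production code would more likely call OpenCV's `cv2.findHomography`, which accepts more than four correspondences and supports RANSAC for outlier rejection.

```python
def solve_linear(A, b):
    """Solve A x = b (A is n x n) by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. three collinear points)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4_points(src, dst):
    """DLT for exactly four (x, y) -> (u, v) correspondences, with h33 fixed to 1."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, pt):
    """Map a point through H using homogeneous coordinates."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

Fixing h33 = 1 is a common simplification. It fails only when the true h33 is zero, and no three of the four source points may be collinear.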

Project Technical Details

- Invented, developed, and deployed a vision-based tracking system (“ROV Tracker”) for a submersible robot. The ROV Tracker is the fundamental state estimation and localization system that will enable automated infrastructure inspection of nuclear reactors. The project's scope was unusually broad for master’s-level research, since both the hardware and the software had to be built from scratch. I was the full-stack developer of the robot autonomy and simulation software, which comprises more than 35 software packages and over 50,000 source lines of code.

- Developed and tested real-time, robust perception and localization capabilities in C++ and Python using the Robot Operating System (ROS) and the Eigen and OpenCV open-source libraries. These capabilities are built on validated prototype algorithms and have enabled field inspection deployments with the robot. Representative capabilities include:

  - color-based fiducial marker detection and tracking, with color-feedback adaptation for robustness to scene lighting;

  - automated control of the pan-tilt-zoom camera via visual servoing;

  - reactor landmark detection and tracking, including homography-based feedforward matching that improves data association while the camera is in motion;

  - the underlying extended Kalman filter (EKF) state estimation framework;

  - online EKF initialization and camera calibration through a human-in-the-loop startup procedure;

  - fault detection and recovery;

  - extensive software testing, including unit tests and an automated regression testing framework.

- Invented a planar geometric representation of a nuclear reactor core that serves as the map for localization.

- Developed a system simulation that models the vision-based perception system, robot dynamics, and infrastructure geometry. The simulator generates high-fidelity synthetic camera images, which enabled prototyping of the perception and estimation algorithms.
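The color-feedback adaptation in the fiducial-tracking item can be illustrated with a deliberately simple, hypothetical scheme. The sketch classifies pixels whose RGB distance to a reference color is within a tolerance and reports their centroid. It then moves the reference color toward the mean of the accepted pixels with an exponential moving average, so the reference follows gradual lighting changes. The tolerance, smoothing factor, and RGB color space are assumptions; the source does not specify how the actual detector adapts.

```python
def color_distance(c1, c2):
    """Euclidean distance between two RGB triples."""
    return sum((a - b) ** 2 for a, b in zip(c1, c2)) ** 0.5


def detect_and_adapt(pixels, ref_color, tol=40.0, alpha=0.2):
    """Detect marker pixels near ref_color and adapt ref_color toward them.

    pixels: iterable of (x, y, (r, g, b)). Returns (centroid or None, updated ref_color).
    tol and alpha are hypothetical tuning values.
    """
    hits = [(x, y, c) for x, y, c in pixels if color_distance(c, ref_color) <= tol]
    if not hits:
        return None, ref_color  # nothing detected: keep the previous reference
    n = len(hits)
    centroid = (sum(h[0] for h in hits) / n, sum(h[1] for h in hits) / n)
    mean_color = [sum(h[2][k] for h in hits) / n for k in range(3)]
    # Exponential moving average: the reference drifts with gradual lighting changes.
    new_ref = tuple((1.0 - alpha) * r + alpha * m for r, m in zip(ref_color, mean_color))
    return centroid, new_ref
```

A small `alpha` lets the reference track slow lighting drift while limiting how far a few misclassified background pixels can pull it.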
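For the visual-servoing item, one common approach is a proportional controller that converts the tracked marker's pixel offset from the image center into pan and tilt rate commands. The following is a minimal sketch of that approach. The gain, rate limit, and sign convention (positive pan toward +u, positive tilt toward +v) are hypothetical, and the source does not describe the ROV Tracker's actual control law or the camera's command interface.

```python
import math


def ptz_servo_command(marker_px, center_px, f_px, gain=1.5, max_rate=0.5):
    """Proportional pan/tilt rate command (rad/s) that re-centers a tracked marker.

    Hypothetical convention: positive pan turns toward +u (image right) and
    positive tilt toward +v (image down).
    """
    # Convert the pixel offset to an angular offset with the pinhole model, so the
    # loop gain does not change when the zoom (focal length f_px) changes.
    pan_err = math.atan2(marker_px[0] - center_px[0], f_px)
    tilt_err = math.atan2(marker_px[1] - center_px[1], f_px)

    def saturate(rate):
        return max(-max_rate, min(max_rate, rate))

    return saturate(gain * pan_err), saturate(gain * tilt_err)
```

Working in angles rather than pixels matters for a zoom camera. At high zoom, one pixel corresponds to a much smaller angle, so a gain tuned directly on pixel error would tend to overshoot when the camera is zoomed in.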
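Suppose each baffle plate in the planar map is stored as a plane n · x = d in the reactor frame. This encoding is an assumption, since the source does not describe the map format. Under it, the line landmark formed by two adjacent plates is the intersection of their planes. The sketch below computes that line as the point on it closest to the origin plus a unit direction.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_intersection(n1, d1, n2, d2):
    """Line shared by planes n1 . x = d1 and n2 . x = d2.

    Returns (point, direction): the point on the line closest to the origin and
    a unit direction vector. Normals need not be unit length.
    """
    u = cross(n1, n2)
    uu = dot(u, u)
    if uu < 1e-12:
        raise ValueError("planes are parallel or coincident")
    # p = (d1 (n2 x u) + d2 (u x n1)) / |u|^2 satisfies both plane equations
    # and is orthogonal to u.
    a, b = cross(n2, u), cross(u, n1)
    point = tuple((d1 * a[i] + d2 * b[i]) / uu for i in range(3))
    norm = uu ** 0.5
    return point, tuple(c / norm for c in u)
```

In an estimator, the projected endpoints or image-line parameters of such a 3-D line would then serve as the predicted measurement for a detected plate edge.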