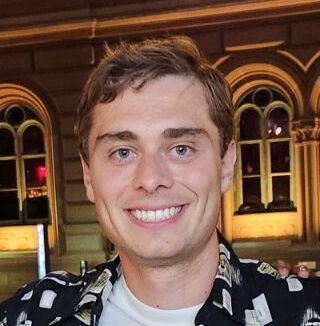

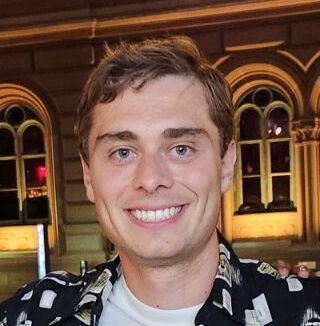

I am broadly interested in algorithms for robust sequential decision-making, drawing from reinforcement learning, search, and game theory. My long-term goal is to create an agent that can do anything that a human can do on a computer. Toward this goal, I am currently working on foundation models for decision-making and AI alignment.

I am a postdoc at CMU working with Tuomas Sandholm. I received a PhD in computer science from the University of California, Irvine, where I worked with Pierre Baldi. During my PhD, I did research scientist internships at Intel Labs and DeepMind. Before that, I received my bachelor's degree in mathematics and economics from Arizona State University in 2017. I am currently on the job market! Please reach out if you think I would be a good fit.

Research

- Toward Optimal Policy Population Growth in Two-Player Zero-Sum Games
- Illusory Attacks: Detectability Matters in Adversarial Attacks on Sequential Decision-Makers
- Game-Theoretic Robust Reinforcement Learning Handles Temporally-Coupled Perturbations
- Llemma: An Open Language Model For Mathematics
- Confronting Reward Model Overoptimization with Constrained RLHF
- Language Models can Solve Computer Tasks
- Team-PSRO for Learning Approximate TMECor in Large Team Games via Cooperative Reinforcement Learning
- Policy Space Diversity for Non-Transitive Games
- Computing Optimal Equilibria and Mechanisms via Learning in Zero-Sum Extensive-Form Games
- Algorithms and Complexity for Computing Nash Equilibria in Adversarial Team Games
- Regret-Minimizing Double Oracle for Extensive-Form Games
- MANSA: Learning Fast and Slow in Multi-Agent Systems
- A Game-Theoretic Framework for Managing Risk in Multi-Agent Systems
- ESCHER: Eschewing Importance Sampling in Games by Computing a History Value Function to Estimate Regret
- Mastering the Game of Stratego With Model-Free Multiagent Reinforcement Learning
- Towards Human-Level Bimanual Dexterous Manipulation with Reinforcement Learning
- Reducing Variance in Temporal-Difference Value Estimation via Ensemble of Deep Networks
- Proving Theorems using Incremental Learning and Hindsight Experience Replay
- Independent Natural Policy Gradient Always Converges in Markov Potential Games
- XDO: A Double Oracle Algorithm for Extensive-Form Games
- Discovering Multi-Agent Auto-Curricula in Two-Player Zero-Sum Games
- Online Double Oracle
- Pipeline PSRO: A Scalable Approach for Finding Approximate Nash Equilibria in Large Games
- Evolutionary Reinforcement Learning for Sample-Efficient Multiagent Coordination
- Solving the Rubik's Cube with Deep Reinforcement Learning and Search
- Solving the Rubik's Cube With Approximate Policy Iteration

Teaching

Tuomas and I co-taught a course on computational game solving last semester. The first half focused on fundamental concepts in game theory, and the second half covered state-of-the-art methods for large games such as Stratego and Diplomacy. We emphasized how these methods draw on concepts from both reinforcement learning and game theory.
