Achieving parallel performance and scalability involves making compromises between parallel and sequential computation. If not contained, the overheads of parallelism can easily outweigh its benefits, sometimes by orders of magnitude. Today, we expect programmers to implement this compromise by optimizing their code manually. This process is labor intensive, requires deep expertise, and reduces code quality. Recent work on heartbeat scheduling shows a promising approach that manifests the potentially vast amounts of available, latent parallelism, at a regular rate, based on even beats in time. The idea is to amortize the overheads of parallelism over the useful work performed between the beats. Heartbeat scheduling is promising in theory, but the reality is complicated: it has no known practical implementation.

In this paper, we propose a practical approach to heartbeat scheduling that involves equipping the assembly language with a small set of primitives. These primitives leverage existing kernel and hardware support for interrupts to allow parallelism to remain latent, until a heartbeat, when it can be manifested with low cost. Our Task Parallel Assembly Language (TPAL) is a compact, RISC-like assembly language. We specify TPAL through an abstract machine and implement the abstract machine as compiler transformations for C/C++ code and a specialized run-time system. We present an evaluation on both the Linux and the Nautilus kernels, considering a range of heartbeat interrupt mechanisms. The evaluation shows that TPAL can dramatically reduce the overheads of parallelism without compromising scalability.

Follow the instructions in the README.txt file, in our

project git

repository.

The long version of our paper presents, in the appendix, a dynamic semantics for our TPAL. We prototyped the semantics in PLT Redex, and make available the source code tpal.rkt here. You can use this file to write your own TPAL programs, modify or extend the semantics, and analyze the performance of our scheduler using sample programs.

Using the semantics of TPAL, we can analyze performance by using a language-based cost model. A language-based cost model defines a mapping from the execution of a source program, e.g., a TPAL program, to its cost. In particular, our semantics specifies a cost model for TPAL in terms of work and span. The work of a TPAL program denotes the total number of operations it performs, and the span the length of the longest chain of dependencies in the computation.

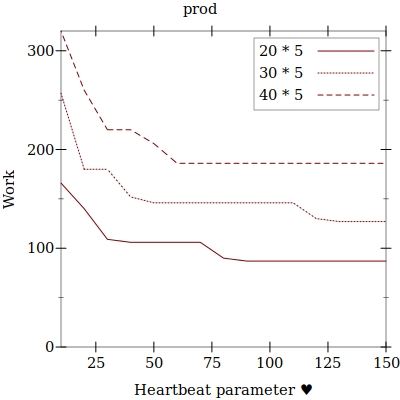

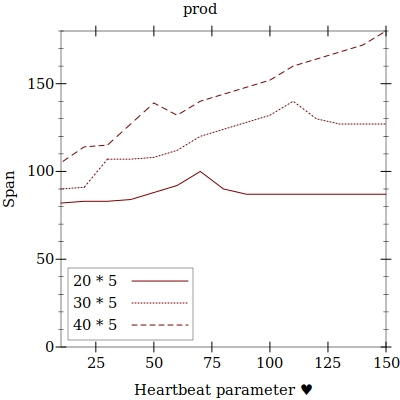

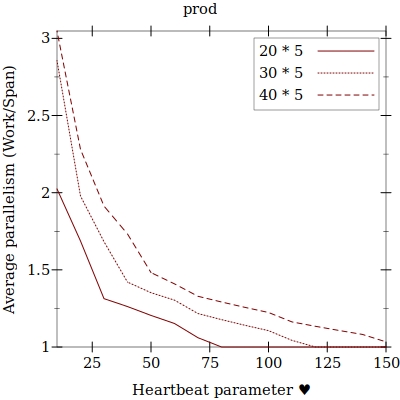

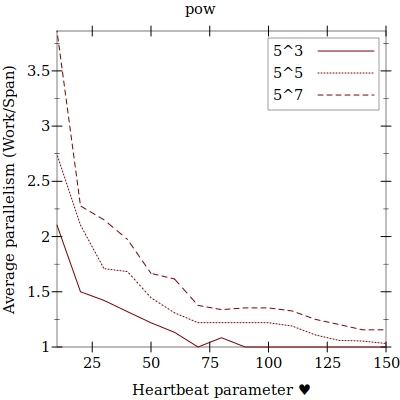

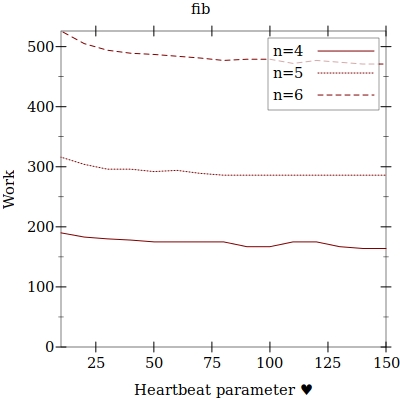

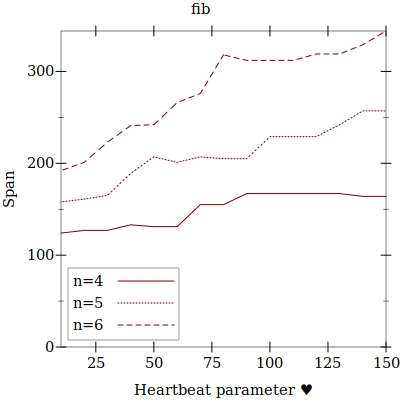

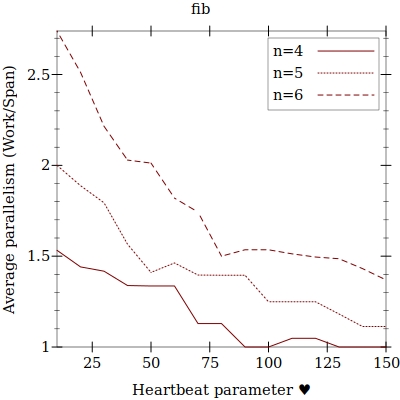

Having these abstract costs is useful for analyzing the behavior of the TPAL scheduling policy in a setting that is free from the complications of modern chip architectures. Here, we show plots that help us to see how performance is affected as we vary the heartbeat parameter of TPAL, denoted by the “heart” symbol. The heartbeat parameter controls the pace at which promotions are performed by our programs. In particular, suppose we set the heartbeat parameter to, e.g., 100. Then, for every 100 instructions it performs, a task in our program will receive a heartbeat interrupt, handle the promotion (a little bit later), and, if the promotion is successful, spawn a new task. The actual rate is therefore one new task for every 100 instructions issued by the program, approximatey. The rate is approximate because there may be some delay between the moment a task has issued at least 100 instructions and when the task subsequently reaches a promotion-ready program point, where the task may then promote any of its latent parallelism.

The first plot shows that the work of our prod program

starts out high, due to task-creation overheads, when the heartbeat

parameter is small, and decreases sharply before the heartbeat parameter

gets to the setting 75. Once the heartbeat setting gets to 125, the

task-creation overhead is well amortized, thanks to the spacing out of

task creations.

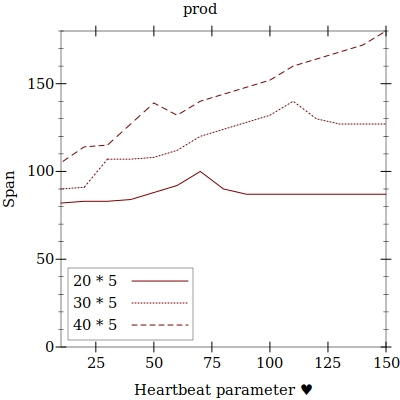

The second plot shows that the span of our prod program

starts out low, because the small setting of the heartbeat parameter

leads to the creation of abundant parallelism. The span increases in a

linear fashion, in proportion to the heartbeat parameter. Note that the

linear increase is not a problem for TPAL, because TPAL programs

typically use a single, fixed setting of the heartbeat parameter, which

is picked once per machine, to ensure task overheads are well amortized,

by a simple tuning process.

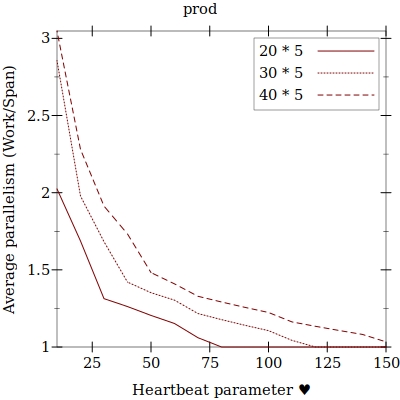

The third plot shows the average parallelism, which is the ratio of work and span. Average parallelism decreases as we increase the heartbeat parameter. For this reason, we always pick a heartbeat parameter that is large enough to amortize task creation, but no larger. However, as the curves show, as we increase the problem size, and therefore total amount of work, we see less impact from the increasing heartbeat parameter. The impact is particularly noticeable for the small input sizes we consider here. But for larger ones, and in general, for any program that features parallel slackness, the impact is negligible. See the formal analysis of Heartbeat Scheduling for details.

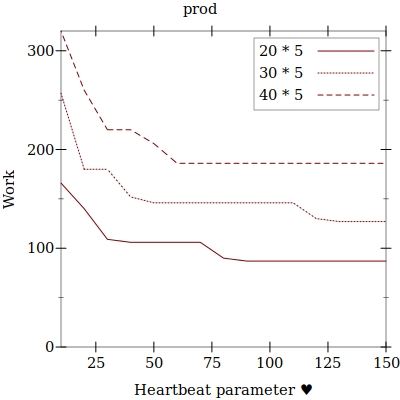

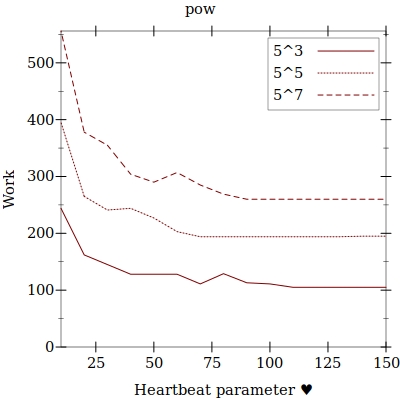

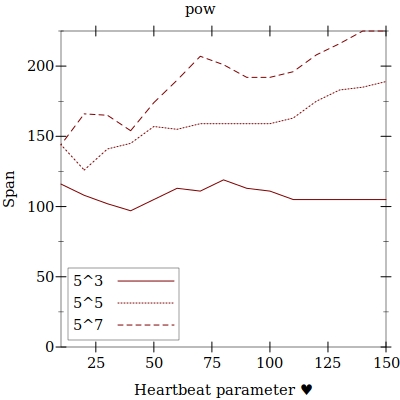

We see similar trends for our pow program, which

features a parallel loop nest. In particular, the outer loop contains a

copy of the prod loop.

Finally, we see a similar trend again, but this time with recursively

nested parallelism in the form of our recursive fib

program.

Get the bibtex file used to generate these references.