95-865: Unstructured Data Analytics

(Spring 2023 Mini 4)

Lectures:

Note that the current plan is for Section B4 to be recorded.

- Section A4: Mondays and Wednesdays 5pm-6:20pm, HBH 1206

- Section B4: Mondays and Wednesdays 3:30pm-4:50pm, HBH 1206

Recitations (shared across Sections A4/B4): Fridays 5pm-6:20pm, HBH A301

Instructor: George Chen (email: georgechen ♣ cmu.edu; replace "♣" with the "at" symbol)

Teaching assistants:

- Zekai Fan (zekaifan ♣ andrew.cmu.edu)

- Xiyang Hu (xiyanghu ♣ andrew.cmu.edu)

- Shahriar Noroozizadeh (snoroozi ♣ andrew.cmu.edu)

- Omar Sanchez Granados (ols ♣ andrew.cmu.edu)

- Kelly Zhang (yizhang4 ♣ andrew.cmu.edu)

Office hours (starting the second week of class): Check the course Canvas homepage for office hour times and locations.

Contact: Please use Piazza (follow the link to it within Canvas) and, whenever possible, post so that everyone can see (if you have a question, chances are other people can benefit from the answer as well!).

Course Description

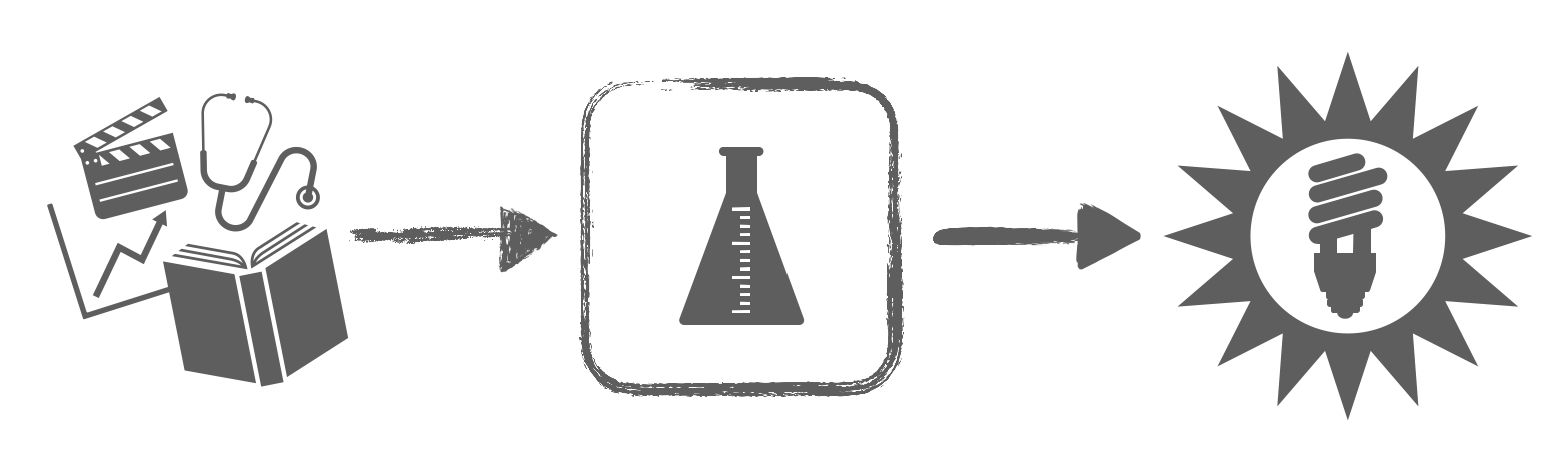

Companies, governments, and other organizations now collect massive amounts of data such as text, images, audio, and video. How do we turn this heterogeneous mess of data into actionable insights? A common problem is that we often do not know ahead of time what structure underlies the data, which is why such data are often referred to as "unstructured". This course takes a practical, two-step approach to unstructured data analysis:

- We first examine how to identify possible structure present in the data via visualization and other exploratory methods.

- Once we have clues for what structure is present in the data, we turn toward exploiting this structure to make predictions.

We will be coding a lot in Python and dabbling a bit in GPU computing (Google Colab).

Prerequisite: If you are a Heinz student, then you must have taken 95-888 "Data-Focused Python" or 90-819 "Intermediate Programming with Python". If you are not a Heinz student and would like to take the course, please contact the instructor and clearly state what Python courses you have taken/what Python experience you have.

Helpful but not required: Math at the level of calculus and linear algebra may help you appreciate some of the material more.

Grading: Homework (30%), Quiz 1 (35%), Quiz 2 (35%*)

*Students with the most instructor-endorsed posts on Piazza will receive a slight bonus at the end of the mini, which will be added directly to their Quiz 2 score (a maximum of 10 bonus points, so that it is possible to get 110 out of 100 points on Quiz 2).

Letter grades are determined based on a curve.

Calendar for Sections A4/B4 (tentative)

Previous version of the course (including lecture slides and demos): 95-865 Fall 2022 mini 2

| Date | Topic | Supplemental Material |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Mon Mar 13 | Lecture 1: Course overview, analyzing text using frequencies<br>[slides] Please install Anaconda Python 3 and spaCy by following this tutorial (needed for HW1 and the demo next lecture): [slides] Note: Anaconda Python 3 includes support for Jupyter notebooks, which we use extensively in this class | |
| Tue Mar 14 | HW1 released (check Canvas) | |
| Wed Mar 15 | Lecture 2: Basic text analysis demo (requires Anaconda Python 3 & spaCy), co-occurrence analysis<br>[slides] [Jupyter notebook (basic text analysis)] | |
| Fri Mar 17 | Recitation: Python review<br>[Jupyter notebook] | |
| Mon Mar 20 | Lecture 3: Co-occurrence analysis (cont'd), visualizing high-dimensional data<br>[slides] [Jupyter notebook (co-occurrence analysis)] | Additional reading (technical):<br>[Notes from CMU 10-704 "Information Processing and Learning" Lecture 1 (Fall 2016) that provide some intuition on the "information content" of random outcomes and on "entropy"] |
| Wed Mar 22 | Lecture 4: Continuous outcomes, PCA, manifold learning<br>[slides] [Jupyter notebook (PCA)] | Additional reading (technical):<br>[Abdi and Williams's PCA review] |
| Fri Mar 24 | Recitation: More on PCA, practice with argsort<br>[Jupyter notebook] | |
| Mon Mar 27 | Lecture 5: Manifold learning (Isomap, MDS, t-SNE)<br>[slides] [Jupyter notebook (manifold learning)]<br>HW1 due 11:59pm | Python examples for manifold learning:<br>[scikit-learn example (Isomap, t-SNE, and many other methods)]<br>Additional reading (technical):<br>[The original Isomap paper (Tenenbaum et al 2000)] [some technical slides on t-SNE by George for 95-865] [Simon Carbonnelle's much more technical t-SNE slides] [t-SNE webpage] |
| Wed Mar 29 | Lecture 6: t-SNE (cont'd), dimensionality reduction for images, intro to clustering<br>[slides] [required reading: "How to Use t-SNE Effectively" (Wattenberg et al, Distill 2016)] [Jupyter notebook (dimensionality reduction with images)***] ***For the demo on t-SNE with images to work, you will need to install some packages: pip install torch torchvision<br>[Jupyter notebook (dimensionality reduction and clustering with drug data)]<br>HW2 released (check Canvas) | A promising new manifold learning method (PaCMAP):<br>[paper (Wang et al 2021) (technical)] [code (github repo)]<br>Clustering additional reading (technical):<br>[see Section 14.3 of the book "Elements of Statistical Learning" on clustering] |
| Fri Mar 31 | Lecture 7: Clustering (cont'd)<br>[slides] We continue using the same demo from last time: [Jupyter notebook (dimensionality reduction and clustering with drug data)] | See the supplemental clustering reading posted for the previous lecture |
| Mon Apr 3 | Lecture 8: Clustering (cont'd)<br>[slides] We continue using the same demo from last time: [Jupyter notebook (dimensionality reduction and clustering with drug data)] | |
| Tue Apr 4 | Quiz 1 review session: 3:30pm-4:30pm HBH 1005 | |
| Wed Apr 5 | Lecture 9: Topic modeling<br>[slides] [Jupyter notebook (topic modeling with LDA)] | Topic modeling reading:<br>[David Blei's general intro to topic modeling] |
| Fri Apr 7 | Quiz 1 (80-minute exam) | |
| Part II. Predictive data analysis | | |
| Mon Apr 10 | Lecture 10: Wrap up topic modeling and clustering, intro to predictive data analysis<br>[slides] [Jupyter notebook (clustering on images)] | |
| Wed Apr 12 | Lecture 11: Hyperparameter tuning, decision trees & forests, classifier evaluation<br>[slides] [Jupyter notebook (prediction and model validation)]<br>HW2 due 11:59pm | Some nuanced details on cross-validation (technical):<br>[Andrew Ng's article Preventing "Overfitting" of Cross-Validation Data] [Braga-Neto and Dougherty's article Is cross-validation valid for small-sample microarray classification? (this article applies more generally rather than only to microarray data from biology)] [Bias and variance as we change the number of folds in k-fold cross-validation] |
| Fri Apr 14 | No class (CMU Spring Carnival) | |
| Mon Apr 17 | Lecture 12: Intro to neural nets & deep learning<br>[slides] We didn't get around to covering the basic neural net demo, which is instead covered in Lecture 13 | PyTorch tutorial (at the very least, go over the first page of this tutorial to familiarize yourself with going between NumPy arrays and PyTorch tensors, and also understand the basic explanation of how tensors can reside on either the CPU or a GPU): [PyTorch tutorial]<br>Additional reading: [Chapter 1 "Using neural nets to recognize handwritten digits" of the book Neural Networks and Deep Learning]<br>Video introduction on neural nets: ["But what *is* a neural network? \| Chapter 1, deep learning" by 3Blue1Brown] |
| Wed Apr 19 | Lecture 13: Wrap up neural net basics; image analysis with convolutional neural nets (also called CNNs or convnets)<br>[slides] For the neural net demo below to work, you will need to install some packages: pip install torch torchvision torchaudio torchtext torchinfo<br>[Jupyter notebook (handwritten digit recognition with neural nets; be sure to scroll to the bottom to download UDA_pytorch_utils.py)] | Additional reading:<br>[Stanford CS231n Convolutional Neural Networks for Visual Recognition] |
| Fri Apr 21 | Recitation: More on classifier evaluation<br>[slides] [Jupyter notebook] | |
| Mon Apr 24 | Lecture 14: Wrap up convnets; time series analysis with recurrent neural nets (RNNs)<br>[slides] [Jupyter notebook (sentiment analysis with IMDb reviews; requires UDA_pytorch_utils.py from the previous demo)] | Additional reading:<br>[(technical) Richard Zhang's fix for max pooling] [Christopher Olah's "Understanding LSTM Networks"] |
| Wed Apr 26 | Lecture 15: Wrap up RNNs; additional deep learning topics and course wrap-up<br>[slides] [slides (extended version; PDF pages 58-71 were not covered in lecture due to lack of time)] | Additional reading:<br>[A tutorial on word2vec word embeddings] [A tutorial on BERT word embeddings]<br>Software for explaining neural nets: [Captum]<br>Some articles on being careful with explanation methods (technical): ["The Disagreement Problem in Explainable Machine Learning: A Practitioner's Perspective" (Krishna et al 2022)] ["Do Feature Attribution Methods Correctly Attribute Features?" (Zhou et al 2022)] ["The false hope of current approaches to explainable artificial intelligence in health care" (Ghassemi et al 2021)] |
| Fri Apr 28 | Recitation slot: Quiz 2 review | |
| Mon May 1 | HW3 due 11:59pm | |
| Fri May 5 | Quiz 2 (80-minute exam): 1pm-2:20pm HBH A301 | |
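The Lecture 12 supplemental note asks you to get comfortable going between NumPy arrays and PyTorch tensors, and with the idea that a tensor lives on either the CPU or a GPU. The following is a minimal sketch of those two ideas, assuming NumPy and PyTorch are installed (for example via the pip command listed for Lecture 13). The helper names `pick_device` and `affine_on_device` are hypothetical, made up for illustration; the PyTorch tutorial remains the authoritative reference.

```python
import numpy as np
import torch


def pick_device() -> torch.device:
    """Use a CUDA GPU if one is visible (e.g., a Colab GPU runtime), else the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


def affine_on_device(x_np: np.ndarray, device: torch.device) -> np.ndarray:
    """Hypothetical helper: compute 2*x + 1 with PyTorch on `device`, return NumPy."""
    # NumPy -> PyTorch: from_numpy shares memory with x_np (no copy is made)
    x_t = torch.from_numpy(x_np)
    # Move the tensor to the chosen device (a copy is made only if the device differs)
    x_dev = x_t.to(device)
    # The arithmetic runs on whichever device x_dev lives on
    y_dev = x_dev * 2 + 1
    # PyTorch -> NumPy: .numpy() only works on CPU tensors, so move back first
    return y_dev.cpu().numpy()


if __name__ == "__main__":
    device = pick_device()
    x_np = np.arange(6, dtype=np.float32).reshape(2, 3)
    print(device)
    print(affine_on_device(x_np, device))

    # Memory sharing: editing the NumPy array is visible through the tensor
    a = np.zeros(3, dtype=np.float32)
    t = torch.from_numpy(a)
    a[0] = 5.0
    print(t)  # the first entry of t is now 5.0 as well
```

On a machine without a GPU, `pick_device()` simply returns the CPU, so the same code runs unchanged there and on a Colab GPU runtime; the final `.cpu()` call is what keeps `.numpy()` safe in both cases.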