95-865: Unstructured Data Analytics (Spring 2020 Mini 3)

Lectures, time and location:

- Section A3: Mondays and Wednesdays 4:30pm-5:50pm, HBH 1204

- Section B3: Mondays and Wednesdays 3:00pm-4:20pm, HBH 1204

Recitations: Fridays 3:00pm-4:20pm, HBH A301

Instructor: George Chen (email: georgechen ♣ cmu.edu; replace "♣" with the "at" symbol)

Teaching assistants: Tianyu Huang (tianyuhu ♣ andrew.cmu.edu), Xiaobin Shen (xiaobins ♣ andrew.cmu.edu), Sachin Kalayathankal Sunny (ssunny ♣ andrew.cmu.edu)

Office hours:

- George: Wednesdays 11:30am-1:00pm, HBH 2216

- Sunny: Wednesdays 2:00pm-3:30pm, HBH 1109

- Tianyu: Thursdays 10:30am-12:00pm, HBH 1109

- Xiaobin: Thursdays 2:00pm-3:30pm, HBH 1109

Contact: Please use Piazza (follow the link to it within Canvas) and, whenever possible, post so that everyone can see (if you have a question, chances are other people can benefit from the answer as well!).

course description

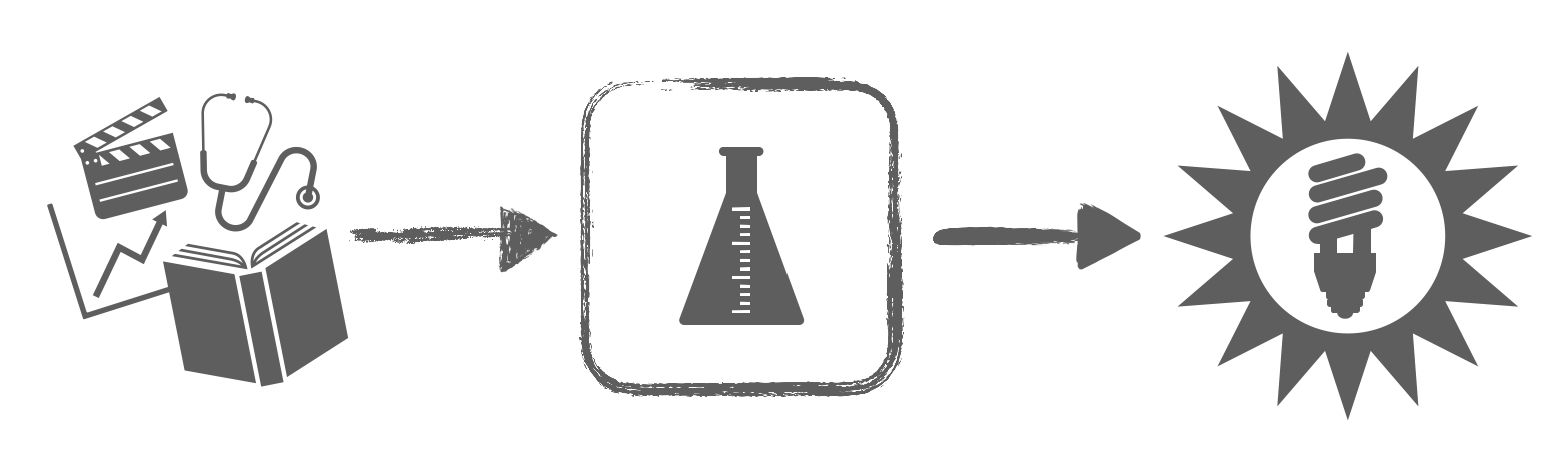

Companies, governments, and other organizations now collect massive amounts of data such as text, images, audio, and video. How do we turn this heterogeneous mess of data into actionable insights? A common problem is that we often do not know ahead of time what structure underlies the data, which is why such data are often called "unstructured". This course takes a practical, two-step approach to unstructured data analysis:

- We first examine how to identify possible structure present in the data via visualization and other exploratory methods.

- Once we have clues for what structure is present in the data, we turn toward exploiting this structure to make predictions.

We will be writing a lot of Python code and working with Amazon Web Services (AWS) for cloud computing (including using GPUs).

Prerequisite: If you are a Heinz student, then you must have either (1) passed the Heinz Python exemption exam, or (2) taken 95-888 "Data-Focused Python" or 90-819 "Intermediate Programming with Python". If you are not a Heinz student and would like to take the course, please contact the instructor and clearly state what Python courses you have taken/what Python experience you have.

Helpful but not required: Math at the level of calculus and linear algebra may help you appreciate some of the material more.

Grading: Homework 20%, quiz 1 40%, quiz 2 40%

Syllabus: [pdf]

calendar (subject to revision)

Previous version of course (including lecture slides and demos): 95-865 Fall 2019 mini 2

| Date | Topic | Supplemental Material |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Week 1: Jan 13-17 | Lecture 1 (Jan 13): Course overview, analyzing text using frequencies<br>Recitation 1 (Jan 17): Basic Python review<br>HW1 released (check Canvas)! | |
| Week 2: Jan 20-24 | No class on Monday (MLK holiday)<br>Lecture 3 (Jan 22): Finding possibly related entities<br>Recitation 2 (Jan 24): Python practice with sorting and NumPy | What is the maximum value of phi-square/chi-square value? (technical)<br>Causality additional reading: |
| Week 3: Jan 27-31 | HW1 due Monday 11:59pm<br>HW2 released start of the week<br>Lecture 4 (Jan 27): Wrap up finding possibly related entities, visualizing high-dimensional data (PCA)<br>Lecture 5 (Jan 29): Manifold learning (Isomap, t-SNE)<br>Lecture 6 (during Jan 31 recitation slot): Wrap up manifold learning, begin clustering (k-means) | Python examples for dimensionality reduction:<br>Some details on t-SNE including code (from a past UDA recitation):<br>Additional dimensionality reduction reading (technical):<br>Additional clustering reading (technical): |
| Week 4: Feb 3-7 | Lecture 7 (Feb 3): Clustering (k-means, GMMs, CH index)<br>Lecture 8 (Feb 5): More clustering (DP-GMMs, DP-means), topic modeling (LDA)<br>Quiz 1 during Feb 7 recitation slot | Python cluster evaluation:<br>Topic modeling reading: |
| Part II. Predictive data analysis | | |
| Week 5: Feb 10-14 | HW2 due Monday 11:59pm<br>HW3 released early in the week<br>Lecture 9 (Feb 10): Wrap up topic modeling, intro to predictive data analytics (some terminology, k-NN classification, model evaluation)<br>Lecture 10 (Feb 12): More on model evaluation (including confusion matrices, ROC curves), decision trees & forests<br>Recitation 3 (Feb 14): Practice with ROC curves | Some nuanced details on cross-validation (technical): |
| Week 6: Feb 17-21 | Lecture 11 (Feb 17): Intro to neural nets and deep learning<br>Mike Jordan's Medium article on where AI is at (April 2018):<br>Lecture 12 (Feb 19): Image analysis with convolutional neural nets<br>Lecture 13 (during Feb 21 recitation slot): Time series analysis with recurrent neural nets | PyTorch tutorial (at the very least, go over the first page of this tutorial to familiarize yourself with going between NumPy arrays and PyTorch tensors, and also understand the basic explanation of how tensors can reside on either the CPU or a GPU):<br>Additional reading:<br>Video introduction on neural nets: |
| Week 7: Feb 24-28 | Lecture 14 (Feb 24): More deep learning, wrap-up<br>Wednesday Feb 26 lecture slot: Quiz 2 review<br>Quiz 2 during Feb 28 recitation slot | Additional reading: |
| Week 8: Mar 2-6 | HW3 due Thursday Mar 5, 11:59pm | |