95-865: Unstructured Data Analytics (Spring 2019 Mini 3)

Lectures, time and location:

- Section A3: Mondays and Wednesdays 4:30pm-5:50pm HBH 1206

- Section B3: Mondays and Wednesdays 3:00pm-4:20pm HBH 1206

- Section C3: Tuesdays and Thursdays 4:30pm-5:50pm HBH 1206

Recitations for all three sections: Fridays 3pm-4:20pm HBH A301

Instructor: George Chen (georgechen [at symbol] cmu.edu)

Teaching assistants: Emaad Manzoor (emaad [at symbol] cmu.edu), Yucheng Huang (huangyucheng [at symbol] cmu.edu)

Office hours (starting second week of class):

- Emaad (these office hours are for both 94-775 and 95-865): Tuesdays and Thursdays 11:30am-1pm, HBH 3rd floor Heinz PhD lounge

- Yucheng (these office hours are only for 95-865): Wednesdays and Fridays 1pm-2:30pm, HBH A007J, except on Feb 20 and 22, when they are in A007A; Feb 15 location TBA

- George (these office hours are for both 94-775 and 95-865): Tuesdays 1pm-2:30pm, HBH 2216

Contact: Please use Piazza (follow the link to it within Canvas) and, whenever possible, post so that everyone can see (if you have a question, chances are other people can benefit from the answer as well!).

Course Description

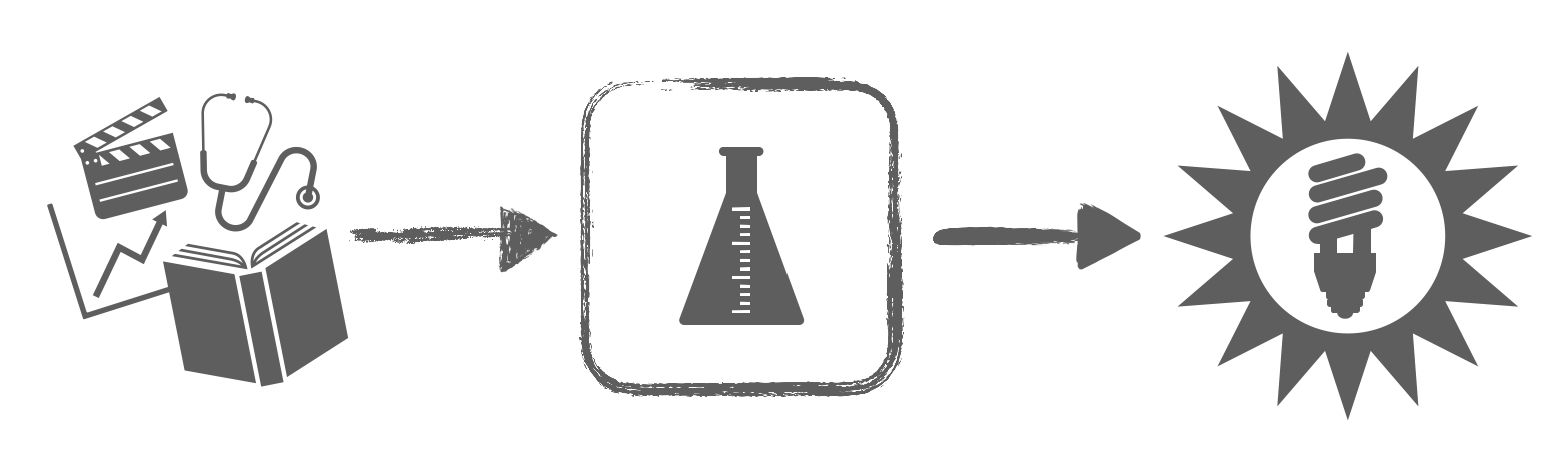

Companies, governments, and other organizations now collect massive amounts of data such as text, images, audio, and video. How do we turn this heterogeneous mess of data into actionable insights? A common problem is that we often do not know ahead of time what structure underlies the data, which is why such data are often referred to as "unstructured". This course takes a practical, two-step approach to unstructured data analysis:

- We first examine how to identify possible structure present in the data via visualization and other exploratory methods.

- Once we have clues for what structure is present in the data, we turn toward exploiting this structure to make predictions.

We will be coding lots of Python and working with Amazon Web Services (AWS) for cloud computing (including using GPUs).

Prerequisite: If you are a Heinz student, then you must have either (1) passed the Heinz Python exemption exam, or (2) taken 95-888 "Data-Focused Python" or 16-791 "Applied Data Science". If you are not a Heinz student and would like to take the course, please contact the instructor and clearly state which Python courses you have taken and what Python experience you have.

Helpful but not required: Math at the level of calculus and linear algebra may help you appreciate some of the material more.

Grading: Homework 20%, mid-mini quiz 35%, final exam 45%. If you do better on the final exam than the mid-mini quiz, then your final exam score clobbers your mid-mini quiz score (thus, the quiz does not count for you, and instead your final exam counts for 80% of your grade).
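As an unofficial illustration of the clobbering rule, here is a minimal sketch that computes the weighted grade. It assumes all three scores are on the same 0-100 scale; the function name `final_grade` is hypothetical and not part of any course tooling. Taking `max(quiz, final_exam)` for the quiz slot is what makes the final exam count for 20% + 35% + 45% worth of weight minus homework, i.e. 80%, whenever it beats the quiz.

```python
def final_grade(homework: float, quiz: float, final_exam: float) -> float:
    """Weighted course grade on a 0-100 scale (hypothetical helper).

    Assumes homework, quiz, and final exam scores share a 0-100 scale.
    Weights: homework 20%, mid-mini quiz 35%, final exam 45%.
    If the final exam score is higher than the quiz score, it replaces
    ("clobbers") the quiz score, so the final effectively counts for 80%.
    """
    # The quiz slot uses whichever of the two exam scores is higher.
    effective_quiz = max(quiz, final_exam)
    return 0.20 * homework + 0.35 * effective_quiz + 0.45 * final_exam


if __name__ == "__main__":
    # Final (85) beats quiz (70): grade is 0.20*90 + 0.80*85.
    print(final_grade(homework=90, quiz=70, final_exam=85))
    # Quiz (95) beats final (80): the original 20/35/45 weights apply.
    print(final_grade(homework=90, quiz=95, final_exam=80))
```

For the first call, the arithmetic is 0.20·90 + 0.35·85 + 0.45·85 = 18 + 29.75 + 38.25 = 86; for the second, 0.20·90 + 0.35·95 + 0.45·80 = 18 + 33.25 + 36 = 87.25 (printed values may differ in the last floating-point digits).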

Syllabus: [pdf]

Calendar (tentative)

🔥 Previous version of course (including lecture slides and demos): 95-865 Fall 2018 mini 2 🔥

| Date | Topic | Supplemental Material |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Mon-Tue Jan 14-15 | Reminder: Sections A3 and B3 meet Mondays and Wednesdays; Section C3 meets Tuesdays and Thursdays<br>Lecture 1: Course overview, basic text processing, and frequency analysis<br>HW1 released (check Canvas)! | |
| Wed-Thur Jan 16-17 | Lecture 2: Basic text analysis demo, co-occurrence analysis | |
| Fri Jan 18 | Recitation 1: Basic Python review | |
| Mon-Tue Jan 21-22 | No class due to MLK Jr. Day (even though Tuesday is not a holiday, there will be no class on Tuesday for Section C3, to keep the three sections synchronized) | |
| Wed-Thur Jan 23-24 | Lecture 3: Finding possibly related entities, PCA | Causality additional reading:<br>PCA additional reading (technical): |
| Fri Jan 25 | Recitation 2: More practice with PCA<br>HW1 due 11:59pm, HW2 released | |
| Mon-Tue Jan 28-29 | Lecture 4: t-SNE | Python examples for dimensionality reduction:<br>Additional dimensionality reduction reading (technical): |
| Wed-Thur Jan 30-31 | Class cancelled due to polar vortex | |
| Fri Feb 1 | Lecture 5 (this is not a typo): Introduction to clustering, k-means, Gaussian mixture models<br>Recitation 3: t-SNE (the recorded video is in Canvas; look for "UDAP 01/25 Recitation", which covers the same material presented one week earlier for the policy version of UDA) | Additional clustering reading (technical): |
| Mon-Tue Feb 4-5 | Lecture 6: Clustering and clustering interpretation demo, automatic selection of k with CH index | Additional clustering reading (technical):<br>Python cluster evaluation: |
| Wed-Thur Feb 6-7 | Lecture 7: Hierarchical clustering, topic modeling | Additional reading (technical): |
| Fri Feb 8 | Recitation 4: Quiz review session | |
| Mon-Tue Feb 11-12 | Lecture 8: Topic modeling (wrap-up; note that this material is fair game for the quiz), introduction to predictive analytics (this is the official start of the second half of the course, on predictive data analysis), nearest neighbors, evaluating prediction methods | |
| Part II. Predictive data analysis | | |
| Wed-Thur Feb 13-14 | Lecture 9: Model validation, decision trees/forests | |
| Fri Feb 15 | Mid-mini quiz (same time/place as recitation; in case of space issues, we do have an overflow room booked: HBH 1002)<br>HW2 due 11:59pm, HW3 released | |
| Mon-Tue Feb 18-19 | Lecture 10: Introduction to neural nets and deep learning<br>Mike Jordan's Medium article on where AI is at (April 2018):<br>Pre-recorded recitation for support vector machines, ROC curves (this material is also fair game for the final exam even though there is no live recitation for it) | Video introduction on neural nets:<br>Additional reading: |
| Wed-Thur Feb 20-21 | Lecture 11: Image analysis with CNNs (also called convnets) | CNN reading: |
| Fri Feb 22 | Recitation 5: Final exam review | |
| Mon-Tue Feb 25-26 | Lecture 12: Time series analysis with RNNs | LSTM reading: |
| Wed-Thur Feb 27-28 | Lecture 13: Interpreting what a deep net is learning, other deep learning topics, wrap-up<br>Gary Marcus's Medium article on limitations of deep learning and his heated debate with Yann LeCun (December 2018): | Videos on learning neural nets (warning: the loss function used is not the same as what we are using in 95-865):<br>Recent heuristics/theory on gradient descent variants for deep nets (technical):<br>Some interesting reads (technical): |
| Fri Mar 1 | No class<br>HW3 due 11:59pm | |
| Thur Mar 7 | Final exam, 4:30pm-5:50pm HBH A301 | |