94-775: Practical Unstructured Data Analytics

(Spring 2025 Mini 4; listed as 94-475 for undergrads)

Class time and location:

- Lectures: Tuesdays and Thursdays 5pm-6:20pm, HBH 2008

- Recitations: Fridays 11am-12:20pm, HBH 2008

Instructor: George Chen (email: georgechen ♣ cmu.edu; replace "♣" with the "at" symbol)

Teaching assistant: Johnna Sundberg (jsundber ♣ andrew.cmu.edu)

Office hours (starting the second week of class): Check the course Canvas homepage for office hour times and locations.

Contact: Please use Piazza (follow the link to it within Canvas) and, whenever possible, post so that everyone can see (if you have a question, chances are other people can benefit from the answer as well!).

Course Description

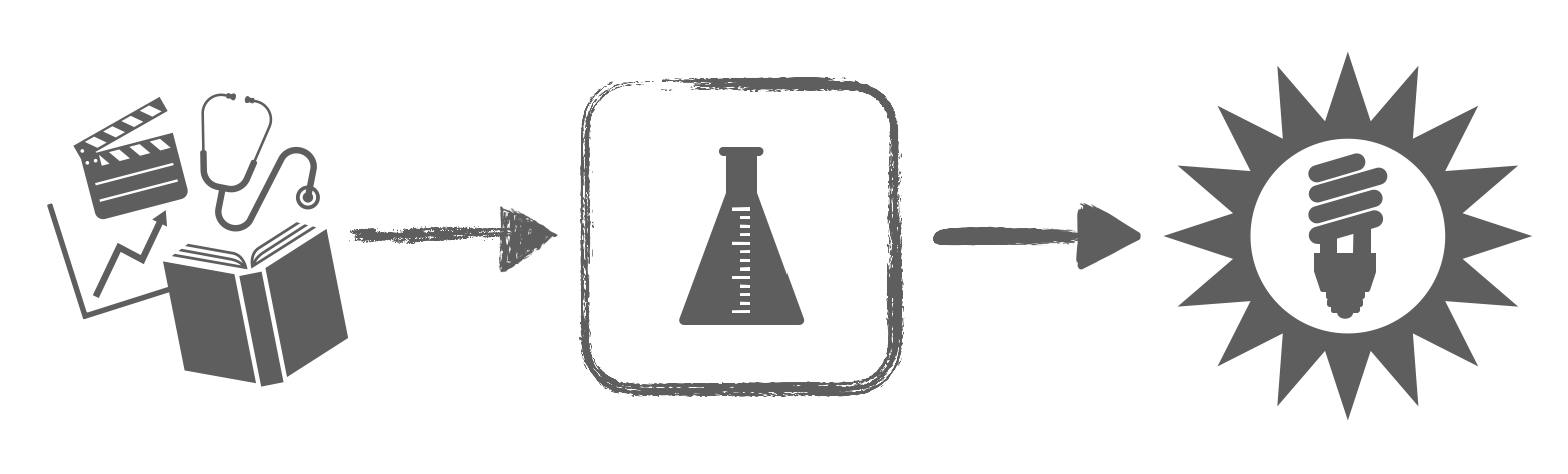

Companies, governments, and other organizations now collect massive amounts of data such as text, images, audio, and video. How do we turn this heterogeneous mess of data into actionable insights? A common problem is that we often do not know ahead of time what structure underlies the data, which is why such data are often referred to as "unstructured". This course takes a practical, two-step approach to unstructured data analysis:

- We first examine how to identify possible structure present in the data via visualization and other exploratory methods.

- Once we have clues for what structure is present in the data, we turn toward exploiting this structure to make predictions.

Prerequisite: If you are a Heinz student, then you must have already completed 90-803 "Machine Learning Foundations with Python". If you are not a Heinz student and would like to take the course, please contact the instructor and clearly state which Python and machine learning courses you have taken (or describe your relevant experience).

Helpful but not required: Math at the level of calculus and linear algebra may help you appreciate some of the material more.

Grading:

- HW1 (15%)

- HW2 (15%)

- Quiz 1 (15%)

- Quiz 2 (15%*)

- Final Project Proposal (10%)

- Final Project (30%)

Letter grades are determined based on a curve.

Calendar (tentative)

| Date | Topic | Supplemental Materials |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Week 1 | | |
| Tue Mar 11 | Lecture 1: Course overview<br>[slides]<br>Please install Anaconda Python 3 and spaCy by following this tutorial (needed for HW1 and the demo next lecture): [slides]<br>Note: Anaconda Python 3 includes support for Jupyter notebooks, which we use extensively in this class. | |
| Wed Mar 12 | HW1 released | |
| Thur Mar 13 | Lecture 2: Basic text analysis (requires Anaconda Python 3 & spaCy)<br>[slides] [Jupyter notebook (basic text analysis)] | |
| Fri Mar 14 | Recitation slot, Lecture 3: Basic text analysis (cont'd), co-occurrence analysis<br>[slides] [Jupyter notebook (basic text analysis using arrays)] [Jupyter notebook (co-occurrence analysis toy example)] | As we saw in class, pointwise mutual information (PMI) is defined in terms of log probabilities. Here's additional reading that provides some intuition on log probabilities (technical):<br>[Section 1.2 of lecture notes from CMU 10-704 "Information Processing and Learning" Lecture 1 (Fall 2016) discusses the "information content" of random outcomes, which is defined in terms of log probabilities] |
| Week 2 | | |
| Tue Mar 18 | Lecture 4: Co-occurrence analysis (cont'd), visualizing high-dimensional data with PCA<br>[slides] [Jupyter notebook (text generation using n-grams)] | Additional reading (technical):<br>[Abdi and Williams's PCA review]<br>Supplemental videos: [StatQuest: PCA main ideas in only 5 minutes!!!] [StatQuest: Principal Component Analysis (PCA) Step-by-Step (note that this is a more technical introduction than mine, using SVD/eigenvalues)] [StatQuest: PCA - Practical Tips] [StatQuest: PCA in Python (note that this video is more pandas-focused, whereas 94-775 is taught in a more NumPy-focused manner to better prepare you for working with PyTorch later)] |
| Thur Mar 20 | Lecture 5: PCA (cont'd), manifold learning (Isomap, MDS)<br>[slides] [Jupyter notebook (PCA)] [Jupyter notebook (manifold learning)] | Additional reading (technical):<br>[The original Isomap paper (Tenenbaum et al 2000)] |
| Fri Mar 21 | Recitation slot: More on dimensionality reduction<br>[slides (how to save a Jupyter notebook as PDF)] [Jupyter notebook (more on PCA, argsort)] [Jupyter notebook (analyzing the 20 Newsgroups dataset)] | |
| Week 3 | | |
| Tue Mar 25 | HW1 due 11:59pm<br>Lecture 6: Wrap up manifold learning, intro to clustering<br>[slides] [we wrap up the demo from last lecture: Jupyter notebook (manifold learning)] [Jupyter notebook (PCA and t-SNE with images)***]<br>***For the demo on PCA and t-SNE with images to work, you will need to install some packages: `pip install torch torchvision` | Python examples for manifold learning:<br>[scikit-learn example (Isomap, t-SNE, and many other methods)]<br>Supplemental video: [StatQuest: t-SNE, clearly explained]<br>Additional reading (technical): [some technical slides on t-SNE by George for 94-775] [Simon Carbonnelle's much more technical t-SNE slides] [t-SNE webpage]<br>A promising newer manifold learning method (PaCMAP): [paper (Wang et al 2021) (technical)] [code (GitHub repo)] |
| Wed Mar 26 | Quiz 1 review session 5pm-6pm over Zoom, run by your TA Johnna (check Canvas for the Zoom link) | |
| Thur Mar 27 | Lecture 7: Clustering<br>[slides] [Jupyter notebook (preprocessing 20 Newsgroups dataset)] [Jupyter notebook (clustering 20 Newsgroups dataset)] | Clustering additional reading (technical):<br>[see Section 14.3 of the book "The Elements of Statistical Learning"] |
| Fri Mar 28 | Recitation slot: Quiz 1 (material coverage: everything up to and including Fri Mar 21, i.e., weeks 1-2) | |
| Week 4 | | |
| Tue Apr 1 | Lecture 8: Clustering (cont'd)<br>[slides] [we resume the demo from last time: Jupyter notebook (clustering 20 Newsgroups dataset)] | Same supplemental materials as the previous lecture |
| Wed Apr 2 | Final project proposals due 11:59pm (1 email per group) | |
| Thur Apr 3 & Fri Apr 4 | No class (CMU Spring Carnival) 🎪 | |
| Week 5 | | |
| Tue Apr 8 | Lecture 9: Clustering (cont'd); topic modeling<br>[slides] [Jupyter notebook (clustering on text revisited using TF-IDF, normalizing using Euclidean norm)] [Jupyter notebook (topic modeling with LDA)] | Topic modeling reading:<br>[David Blei's general intro to topic modeling] [Maria Antoniak's practical guide for using LDA] |
| Thur Apr 10 | Lecture 10: Wrap up topic modeling and clustering; intro to predictive data analytics<br>[slides] [Jupyter notebook (LDA: choosing the number of topics)] | |
| Fri Apr 11 | Recitation slot: Quiz 2 (material coverage: Tue Mar 25 up to and including Tue Apr 8, i.e., weeks 3-4 as well as Lecture 9) | |
| Part II. Predictive data analysis | | |
| Week 6 | | |
| Tue Apr 15 | HW2 due 11:59pm<br>Lecture 11: Hyperparameter tuning; intro to neural nets & deep learning<br>[slides]<br>For the neural net demo below to work, you will need to install some packages: `pip install torch torchvision torchaudio torchinfo`<br>[Jupyter notebook (handwritten digit recognition with neural nets; be sure to scroll to the bottom to download UDA_pytorch_utils.py)] | PyTorch tutorial (at the very least, go over the first page of this tutorial to familiarize yourself with going between NumPy arrays and PyTorch tensors, and to understand the basic explanation of how tensors can reside on either the CPU or a GPU):<br>[PyTorch tutorial]<br>Additional reading on basic neural networks: [Chapter 1 "Using neural nets to recognize handwritten digits" of the book Neural Networks and Deep Learning]<br>Video introduction on neural nets: ["But what *is* a neural network? \| Chapter 1, deep learning" by 3Blue1Brown]<br>StatQuest series of videos on neural nets and deep learning: [YouTube playlist (note: there are a lot of videos in this playlist, some of which go into more detail than you're expected to know for 94-775; make sure that you understand concepts at the level at which they are presented in 94-775 lectures/recitations)] |
| Thur Apr 17 | Lecture 12: Wrap up neural net basics; brief overview of word embeddings; image analysis with convolutional neural nets<br>[slides] [we resume the demo from last time: Jupyter notebook (handwritten digit recognition with neural nets; be sure to scroll to the bottom to download UDA_pytorch_utils.py)] | BERT word embeddings (technical):<br>[A tutorial on BERT word embeddings]<br>Supplemental reading and video for convolutional neural networks (CNNs): [Stanford CS231n Convolutional Neural Networks for Visual Recognition] [(technical) Richard Zhang's fix for max pooling]<br>The StatQuest YouTube playlist listed for Lecture 11 also includes a video on CNNs. |
| Fri Apr 18 | Recitation slot: How to use BERT word embeddings (the basics, along with applying them to sentiment analysis and topic modeling)<br>[Jupyter notebook (quick intro to using BERT word embeddings)] [Jupyter notebook (applying BERT to sentiment analysis and topic modeling)] | See BERT supplemental material for Lecture 12 |
| Week 7 | | |
| Tue Apr 22 | Lecture 13: Text generation with generative pretrained transformers (GPTs)<br>[slides] [Jupyter notebook (text generation with neural nets)] | Additional reading/videos:<br>[Andrej Karpathy's "Neural Networks: Zero to Hero" lecture series (including a more detailed GPT lecture)] |
| Thur Apr 24 | Lecture 14: More on transformers; a few more deep learning concepts; course wrap-up<br>[slides] [Jupyter notebook (sentiment analysis with IMDb reviews using BERT-Tiny)] | Software for explaining neural nets:<br>[Captum]<br>Some articles on being careful with explanation methods (technical): ["The Disagreement Problem in Explainable Machine Learning: A Practitioner's Perspective" (Krishna et al 2022)] ["Do Feature Attribution Methods Correctly Attribute Features?" (Zhou et al 2022)] ["The false hope of current approaches to explainable artificial intelligence in health care" (Ghassemi et al 2021)] |
| Fri Apr 25 | Recitation slot: Final project presentations | |
| Final exam week | | |
| Mon Apr 28 | Final project slide decks + Jupyter notebooks due 11:59pm by email (1 email per group) | |
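
The Fri Mar 14 materials note that pointwise mutual information (PMI) is defined in terms of log probabilities: for two words a and b, PMI(a, b) = log[P(a, b) / (P(a) P(b))]. The following is a minimal sketch, not the course's own notebook code. It assumes that "co-occurrence" means two words appearing in the same document, estimates each probability as a fraction of documents, and uses the natural log (a different base only rescales the scores). The function name `pmi_table` and the toy documents are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def pmi_table(documents):
    """Return PMI scores for every word pair that co-occurs in at least one document.

    Assumption (for illustration): P(w) is the fraction of documents containing w,
    and P(a, b) is the fraction of documents containing both a and b.
    """
    # Treat each document as a set of lowercase whitespace-separated tokens,
    # so a word repeated within one document is counted once.
    doc_sets = [set(doc.lower().split()) for doc in documents]
    n_docs = len(doc_sets)

    word_counts = Counter()  # number of documents containing each word
    pair_counts = Counter()  # number of documents containing each (sorted) word pair
    for words in doc_sets:
        word_counts.update(words)
        pair_counts.update(combinations(sorted(words), 2))

    pmi = {}
    for (a, b), joint in pair_counts.items():
        p_ab = joint / n_docs
        p_a = word_counts[a] / n_docs
        p_b = word_counts[b] / n_docs
        # PMI(a, b) = log( P(a, b) / (P(a) * P(b)) ), using the natural log
        pmi[(a, b)] = math.log(p_ab / (p_a * p_b))
    return pmi


if __name__ == "__main__":
    docs = ["the cat sat", "the cat ran", "a dog ran", "a dog sat"]
    table = pmi_table(docs)
    print(round(table[("cat", "the")], 4))  # 0.6931: "cat" and "the" always appear together
    print(round(table[("cat", "ran")], 4))  # 0.0: they co-occur exactly as often as independence predicts
```

Pairs that never co-occur (such as "cat" and "dog" above) are left out of the table, since their PMI would be log 0, i.e., negative infinity. A positive score means two words appear together more often than they would if they were independent; a score of 0 means exactly as often.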