Introduction and Motivation

VecPy (Vectorizing Python) exists to facilitate the development of high-performance data-parallel modules. Python is a programmer-friendly, high-level language well suited for rapid prototyping, and as such it is widely considered to be one of the most productive programming languages1,2. Unfortunately, Python is slow3. VecPy reads Python source code and emits multi-threaded, vectorized C++ code using compiler intrinsics, which is subsequently compiled into a native shared library. The library contains language bindings for C++, Java, and Python, allowing a programmer to rapidly develop a kernel in Python and then efficiently execute that kernel in a production setting.

Usage and Features

The primary design goals of VecPy are simplicity, utility, flexibility, and efficiency. In just a few lines of code, VecPy translates and compiles a Python function into an efficient, data-parallel native library. The generated library can then be loaded as a Python module, allowing the optimized function to be used as a drop-in replacement for the original Python function. The following program (Listing 1) illustrates how simple it can be to use VecPy to significantly improve Python program performance.

#Define the kernel

def shapes(radius, edge, vol_sphere, vol_icos):

"""Calculates sphere and an icosahedron volumes."""

vol_sphere = (4/3 * math.pi) * (radius ** 3)

vol_icos = (5/12) * (3 + math.sqrt(5)) * (edge ** 3)

return (vol_sphere, vol_icos)

#Generate some data

from array import array

from random import uniform

def rand():

return array('f', [uniform(0, 1) for i in range(10)])

radii, edges, spheres, icosahedrons = rand(), rand(), rand(), rand()

#Call VecPy to generate the native, vectorized module

from parser import Parser

from compiler import Compiler

from compiler_constants import *

krnl = Parser.parse(shapes)

opts = Options(Architecture.sse4, DataType.float)

Compiler.compile(krnl, opts)

#Import the newly-minted module and execute the data-parallel kernel

import VecPy_shapes

VecPy_shapes.shapes(radii, edges, spheres, icosahedrons)

#Use the results!

print(spheres, icosahedrons)Listing 1. Hello, world!

VecPy aims to implement a sufficiently large feature set to be useful for meaningful, real-world applications. Conditional operations within if-elif-else blocks and while loops are fully implemented; all comparison, bit-wise, and arithmetic operators are supported; and most built-in math functions and constants are available. Data type targets include 32-bit floats and 32-bit unsigned integers; invocation targets include C++, Java, and Python; and architecture targets include SSE4.2 and AVX2.

Walkthrough: The Mandelbrot Set

In the context of data-parallel kernel development tools, the problem of rendering the Mandelbrot set is perhaps one of the most widely showcased examples. This problem is an ideal example for such tools for several reasons: each pixel can be processed independently, the work is CPU-intensive, the algorithm utilizes a reasonable set of language features, and the resulting images are captivating. Below, VecPy is applied to the rendering the Mandelbrot set in real-time.

As a preliminary step, I created a Java program to display an image on the screen in real-time. The source code is available, but in the interest of brevity is not shown here.

First, I implemented a pure-Java, sequential version of the Mandelbrot function. I followed the standard escape time algorithm with an extension for smooth shading4 (Listing 2).

private static boolean mandelbrot(FloatBuffer row_, FloatBuffer col_, FloatBuffer count_,

float max, float w_m1, float h_m1,

float left, float right, float top,float bottom) {

for (int index = 0; index < N; index++) {

float row = row_.get();

float col = col_.get();

float x0 = left + col * (right - left) / w_m1;

float y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1;

float x = 0, y = 0;

float count = 0;

float xx = x * x;

float yy = y * y;

//Standard escape time

while (count < max && xx + yy < 16) {

float temp = xx - yy + x0;

y = 2 * x * y + y0;

x = temp;

xx = x * x;

yy = y * y;

++count;

}

//Smooth shading

if (count < max) {

count += 1 - Math.log(Math.log(xx + yy) / LOG2 / 2.0) / LOG2;

}

count_.put(count);

}

count_.rewind();

return true;

}

Listing 2. Sequential Java Mandelbrot implementation.

Next, I wanted to optimize this function by taking advantage of SIMD execution and multi-threading. I wrote the same function in Python and used VecPy to generate a JNI library to replace the pure-Java version (Listing 3,4). Python function annotations are used to declare that some arguments are "uniform", meaning that all execution instances will receive the same immutable value. Some useful Python features used here include multi-assignment, while loops, if branches, and calls to built-in math functions.

def mandelbrot(row, col, count,

max: 'uniform', w_m1: 'uniform', h_m1: 'uniform',

left: 'uniform', right: 'uniform', top: 'uniform', bottom: 'uniform'):

x0 = left + col * (right - left) / w_m1

y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1

x = y = count = 0

xx, yy = (x * x), (y * y)

#Standard escape time

while (count < max and xx + yy < 16):

x, y = (xx - yy + x0), (2 * x * y + y0)

xx, yy = (x * x), (y * y)

count += 1

#Smooth shading

if count < max:

count += 1 - math.log2(math.log2(xx + yy) / 2)

return (row, col, count)

Listing 3. Sequential Python Mandelbrot implementation.

from parser import Parser

from compiler import Compiler

from compiler_constants import *

krnl = Parser.parse(mandelbrot)

opts = Options(Architecture.sse4, DataType.float, threads=2)

Compiler.compile(krnl, opts)Listing 4. Invoking VecPy to compile the Mandelbrot kernel for use in Java.

One of the files VecPy generates is an annotated, vectorized version of the mandelbrot function (Listing 5). It's long; thanks to VecPy I didn't have to manually write it. VecPy also includes Python source code as comments before each block of generated code. This can be helpful for debugging, optimization, or even learning to use SIMD intrinsics.

/*******************************************************************************

* Target Architecture: SSE4.2 (float) *

*******************************************************************************/

//Includes

#include <x86intrin.h>

//Kernel function: mandelbrot

static void mandelbrot_vector(KernelArgs* args) {

//Setup

const __m128 MASK_FALSE = _mm_setzero_ps();

const __m128 MASK_TRUE = _mm_cmpeq_ps(MASK_FALSE, MASK_FALSE);

//Uniforms

const __m128 max = _mm_set1_ps(args->max);

const __m128 w_m1 = _mm_set1_ps(args->w_m1);

const __m128 h_m1 = _mm_set1_ps(args->h_m1);

const __m128 left = _mm_set1_ps(args->left);

const __m128 right = _mm_set1_ps(args->right);

const __m128 top = _mm_set1_ps(args->top);

const __m128 bottom = _mm_set1_ps(args->bottom);

//Literals

const __m128 lit023 = _mm_set1_ps(0.0000000f);

const __m128 lit045 = _mm_set1_ps(1.0000000f);

const __m128 lit041 = _mm_set1_ps(2.0000000f);

const __m128 lit033 = _mm_set1_ps(16.0000000f);

//Stack variables

__m128 row, col, count, var012, var013, var014, var015, x0, var017, var018, var019, var020, var021, y0, x, y, var026, xx, var028, yy, mask030, mask031, var032, mask034, mask035, var036, var037, var038, var039, var040, var042, var043, var044, mask046, mask047, var048, var049, var050, var051, var052, var053;

//Loop over input

for(unsigned int index = 0; index < args->N; index += 4) {

//Inputs

row = _mm_load_ps(&args->row[index]);

col = _mm_load_ps(&args->col[index]);

count = _mm_load_ps(&args->count[index]);

//Begin kernel logic

{

//>>> x0 = left + col * (right - left) / w_m1

var015 = _mm_sub_ps(right, left);

var014 = _mm_mul_ps(col, var015);

var013 = _mm_div_ps(var014, w_m1);

var012 = _mm_add_ps(left, var013);

x0 = var012;

//>>> y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1

var020 = _mm_sub_ps(h_m1, row);

var021 = _mm_sub_ps(top, bottom);

var019 = _mm_mul_ps(var020, var021);

var018 = _mm_div_ps(var019, h_m1);

var017 = _mm_add_ps(bottom, var018);

y0 = var017;

//>>> x = y = count = 0

x = lit023;

y = lit023;

count = lit023;

//>>> xx, yy = (x * x), (y * y)

var026 = _mm_mul_ps(x, x);

var028 = _mm_mul_ps(y, y);

xx = var026;

yy = var028;

//>>> while (count < max and xx + yy < 16):

mask031 = _mm_cmplt_ps(count, max);

var032 = _mm_add_ps(xx, yy);

mask034 = _mm_cmplt_ps(var032, lit033);

mask030 = _mm_and_ps(mask031, mask034);

mask035 = _mm_and_ps(mask030, MASK_TRUE);

while(_mm_movemask_ps(mask035)) {

//>>> x, y = (xx - yy + x0), (2 * x * y + y0)

var037 = _mm_sub_ps(xx, yy);

var036 = _mm_add_ps(var037, x0);

var040 = _mm_mul_ps(lit041, x);

var039 = _mm_mul_ps(var040, y);

var038 = _mm_add_ps(var039, y0);

x = _mm_or_ps(_mm_and_ps(mask035, var036), _mm_andnot_ps(mask035, x));

y = _mm_or_ps(_mm_and_ps(mask035, var038), _mm_andnot_ps(mask035, y));

//>>> xx, yy = (x * x), (y * y)

var042 = _mm_mul_ps(x, x);

var043 = _mm_mul_ps(y, y);

xx = _mm_or_ps(_mm_and_ps(mask035, var042), _mm_andnot_ps(mask035, xx));

yy = _mm_or_ps(_mm_and_ps(mask035, var043), _mm_andnot_ps(mask035, yy));

//>>> count += 1

var044 = _mm_add_ps(count, lit045);

count = _mm_or_ps(_mm_and_ps(mask035, var044), _mm_andnot_ps(mask035, count));

//>>> while (count < max and xx + yy < 16):

mask031 = _mm_cmplt_ps(count, max);

var032 = _mm_add_ps(xx, yy);

mask034 = _mm_cmplt_ps(var032, lit033);

mask030 = _mm_and_ps(mask031, mask034);

mask035 = _mm_and_ps(mask030, MASK_TRUE);

}

//>>> if count < max:

mask046 = _mm_cmplt_ps(count, max);

mask047 = _mm_and_ps(mask046, MASK_TRUE);

{

//>>> count += 1 - math.log2(math.log2(xx + yy) / 2)

var053 = _mm_add_ps(xx, yy);

var052[0] = log2(var053[0]);

var052[1] = log2(var053[1]);

var052[2] = log2(var053[2]);

var052[3] = log2(var053[3]);

var051 = _mm_div_ps(var052, lit041);

var050[0] = log2(var051[0]);

var050[1] = log2(var051[1]);

var050[2] = log2(var051[2]);

var050[3] = log2(var051[3]);

var049 = _mm_sub_ps(lit045, var050);

var048 = _mm_add_ps(count, var049);

count = _mm_or_ps(_mm_and_ps(mask047, var048), _mm_andnot_ps(mask047, count));

}

//>>> return (row, col, count)

}

//End kernel logic

//Outputs

_mm_store_ps(&args->count[index], count);

}

}

//End of kernel function

Listing 5. VecPy generated Mandelbrot kernel in C++.

Of course, VecPy immediately calls g++ to compile this code, so the final output is native shared library called "VecPy_mandelbrot.so" containing the entry points below (Listing 6).

nm VecPy_mandelbrot.so | grep " T "

0000000000001a60 T _fini

0000000000000a58 T _init

0000000000001710 T Java_vecpy_VecPy_mandelbrot

00000000000016a0 T mandelbrot

00000000000016f0 T PyInit_VecPy_mandelbrot

Listing 6. Library "VecPy_mandelbrot.so" entry points for C++, Java, and Python.

Additionally, VecPy generates a Java wrapper class for allocating buffers and invoking the native kernel (Listing 7).

/*******************************************************************************

* VecPy generated API *

*******************************************************************************/

package vecpy;

import java.nio.*;

public class VecPy {

//Helper function to allocate a direct buffer

public static FloatBuffer getFloatBuffer(int N) {

return ByteBuffer.allocateDirect(N * 4).order(ByteOrder.nativeOrder()).asFloatBuffer();

}

//JNI wrapper

public static native boolean mandelbrot(FloatBuffer row, FloatBuffer col, FloatBuffer count,

float max, float w_m1, float h_m1,

float left, float right, float top, float bottom);

}

Listing 7. VecPy generated wrapper class for invoking the native kernel.

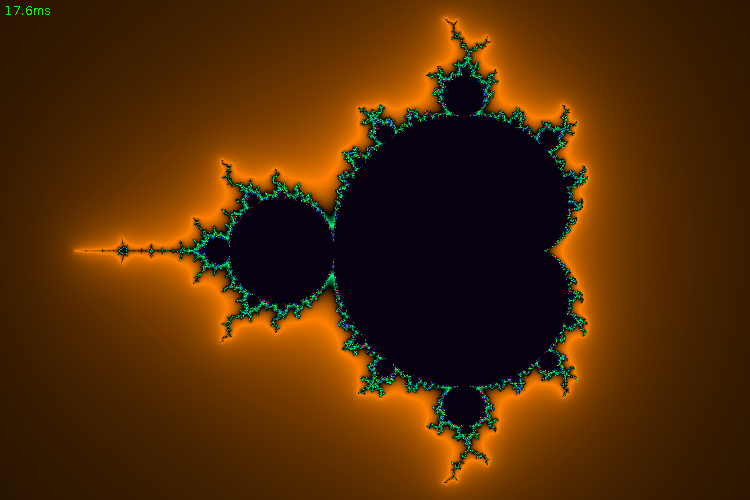

At this point, the call to the pure-Java kernel can be swapped out for a call to the native kernel. After making the change and adding a call to load the native library, the Mandelbrot set is rendered interactively in real-time (Figure 1).

Figure 1. The Mandelbrot set, computed with VecPy-generated kernel.

Finally, I quantify performance in terms of rendering time and speedup on a 1920x1280 image of the Mandelbrot set. Detailed results are shown below (Table 1).

| Test | Speedup (Runtime) | Details |

|---|---|---|

| Original | 1.00x (491ms) |

Sequential (1x) This is the original, pure-Java implementation of the Mandelbrot kernel in Listing 2. |

| Generic C++ | 1.14x (430ms) |

Sequential (1x) VecPy; Neither multi-threading nor SIMD is used. opts = Options(Architecture.generic, DataType.float, threads=1) |

| Multi-threading (2xMT) | 2.10x (234ms) |

Parallel (2x) VecPy; 2-way multi-threading is used, but SIMD is not used. opts = Options(Architecture.generic, DataType.float, threads=2) |

| SSE4.2 SIMD (4xSIMD) | 1.99x (247ms) |

Parallel (4x) VecPy; Multi-threading is not used, but 4-wide SIMD (targeting SSE4.2) is used. opts = Options(Architecture.sse4, DataType.float, threads=1) |

| 2xMT + 4xSIMD | 3.64x (135ms) |

Parallel (8x) VecPy; 2-way multi-threading is used, and 4-wide SIMD (targeting SSE4.2) is used. opts = Options(Architecture.sse4, DataType.float, threads=2) |

Table 1. Speedups on the Mandelbrot set; VecPy works.

Conclusion

VecPy enables a programmer to use a very friendly and productive language to write blazing-fast SIMD-optimized kernels without ever having to write a single compiler intrinsic. It also handles the tedious process of generating language bindings, and the code it generates is documented to assist in debugging, optimization, and learning.

I hope you found my working to be interesting, and thank you for checking out my showcase!

References

-

Prechelt, Lutz. "An empirical comparison of C, C++, Java, Perl, Python, Rexx and Tcl." IEEE Computer 33.10 (2000): 23-29.

PDF -

Barnes, Connelly. "Programming Language Productivity" (2006)

PDF -

Fulgham, Brent and Isaac Gouy. "The Computer Language Benchmarks Game"

Link -

Wikipedia. "Mandelbrot set: Escape time algorithm"

Link