Summary

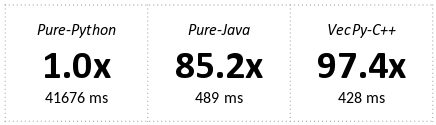

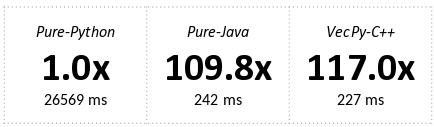

VecPy converts kernels written in Python into equivalent C++ that leverages multi-threading and vector intrinsics for SIMD. VecPy then invokes g++ to compile the generated code into a native shared library which can be subsequently called from C/C++, Python, and Java. Efficiency, one of the primary design goals of VecPy, is demonstrated by analyzing sequential and parallel speedups on several test kernels.

Background

Python is a popular1, programmer-friendly language well suited for rapid prototyping2, and as such it is widely considered to be one of the most productive programming languages3,4. Unfortunately, Python is slow5. There are at least three reasons why Python (as implemented in CPython) is slower than corresponding C/C++ code. First is that Python code isn't compiled to machine code; instead, it's compiled to an intermediate byte-code which is then executed at run-time in a virtual machine6, which is a source of execution overhead. Second, Python provides the illusion of concurrency within a single process, but multi-threading across CPU cores is not possible within a single Python process7,8. Third, Python does not use SIMD vector operations. To write efficient code that utilizes parallelism across and within CPU cores typically requires the programmer to abandon Python and use a lower-level language like C/C++.

For data-parallel processing, VecPy exists to provide the best of both worlds. By converting Python code to equivalent - but multi-threaded and vectorized - C++ code, VecPy enables the programmer to use Python and still take advantage of hardware parallelism to achieve maximum performance. This process of automatic translation of sequential kernels into efficient parallelized kernels has been implemented in other projects, like ISPC9 which operates on kernels written in C. Unlike ISPC however, the libraries generated by VecPy are intended to be usable from C++, Python, and Java.

Approach

Upon invoking VecPy within a Python program, two main steps take place. First, the Parser is given a handle (or a string containing source code) to a kernel function written in Python, and it generates an abstract, language-independent description of the operations performed in that kernel. Second, the Compiler generates several C++ source files based on the following logical components (each of which is described in detail below): the Kernel, API Bindings for each language target, and the Core. Additionally, a build script is created to call g++ to compile the library, and a Java source file is generated which contains the necessary native method definitions for use via the Java Native Interface (JNI). Finally, after the build script has been executed, program control returns to the Python caller where the nascent module can optionally be immediately imported and executed.

- The Parser begins by calling the built-in Python "AST" and "inspect" modules, which parse Python code and return an abstract syntax tree. The Parser then traverses this tree and stores a language-independent set of operations that encapsulate the functionality of the core. Along the way, it keeps track of all variables in the original code and generates new variables when needed. Each variable is either numeric or boolean; literal or derived; a function argument or a local variable; uniform or varying; read from or not read from; written to or not written to. (Numeric if float or integer; literal if appears as a constant in the code; argument if part of the function definition; uniform if annotated with the 'uniform' keyword; read from and written to if value loaded and stored, respectively.) These boolean attributes later determine how the variables are defined by and used by the Compiler.

-

The Compiler is responsible for generating all output files and is split into separate modules to handle code generation for different targets. The most salient of these components are the "generic" and "intel" sub-compilers. These two are responsible for the bulk of VecPy's code generation and both translate the Parser's abstract set of operations into valid C++ code retaining the original functionality of the Python kernel. As mentioned above, the following files are generated during compilation.

- The kernel file ("*kernel.h") contains three primary components: the KernelArgs struct, a sequential kernel implementation, and a vectorized kernel implementation. The KernelArgs struct is simply a collection of all vector and scalar arguments passed to the kernel by the Core and contains the number of elements that should be processed. The sequential kernel is generated by the "generic" sub-compiler and uses standard C++ with no vector intrinsics to operate on individual elements at time. The vectorized kernel is generated by the "intel" sub-compiler and uses C++ with compiler intrinsics for SIMD to operate on 4- or 8-wide vectors of consecutive elements at a time (depending on the target architecture). The reason for including both kernel implementations is to support data inputs which are not a multiple of the vector size on the current architecture.

- The API Binding files ("*cpp.h","*python.h","*java.h",) define the requisite interfaces for invocation from each respective programming language. The C++ binding is the simplest of these bindings, essentially being a wrapper around the Core entry point. The Python and Java bindings are more substantial wrappers for the Core; both require interoperation with the calling VM to get the memory addresses of the kernel arguments. These files include extra sanity checking to prevent the Core from attempting to execute on null or otherwise invalid pointers, and both perform length checking on input arrays as an extra safety measure. The Java interface also requires helper functions to allocate data arrays on proper boundaries, which is required for some SSE4.2 and AVX2 loads and stores. (Python also requires these helper functions, but unlike Java, they are implemented in pure-Python within VecPy's runtime module.) The Python interface must also release the data arrays after the Core finishes execution. In all three interfaces, memory is accessed directly with no need to copy values to and from the caller and the VecPy library.

- The Core file ("*core.cpp") tries together all of the files mentioned above. When the core is called, it first checks to make sure that all data arrays are properly aligned. If so, it begins the process of executing the kernel. It spawns pthreads (by default using the number of execution contexts, but can be set to a specific number at compile-time) and begins to assign work to these threads. It breaks the input arrays into equal sized chunks and passes the a KernelArgs struct to each thread for multi-threaded, vectorized kernel execution. Any remaining elements at the end of the array that did not fit evenly into one of the large chunks are then executed sequentially by calling the scalar implementation of the kernel.

Results

The primary design goals of VecPy were simplicity, flexibility, utility, and efficiency. In just a few lines of code, VecPy translates and compiles a Python function into an efficient, data-parallel native library. The generated library can then be loaded as a Python module, allowing the optimized function to be used as a drop-in replacement for the original Python function. The following program (Listing 1) illustrates how simple it can be to use VecPy to significantly improve Python program performance.

#Import VecPy

from vecpy.runtime import *

from vecpy.compiler_constants import *

#Define the kernel

def volume(radius, volume):

volume = (4/3 * math.pi) * (radius ** 3)

#Generate some data

def data():

array = get_array('f', 10)

for i in range(len(array)): array[i] = (.1 + i/10)

return array

radii, volumes = data(), data()

#Call VecPy to generate the native module

vectorize(volume, Options(Architecture.avx2, DataType.float))

#Import the newly-minted module and execute kernel

from vecpy_volume import volume

volume(radii, volumes)

#Print the results!

print('Radius:', ', '.join('%.3f'%(r) for r in radii))

print('Volume:', ', '.join('%.3f'%(v) for v in volumes))

Listing 1. Hello, world!

VecPy aims to be very flexible to be of benefit to as many projects as as possible. The following data types, architectures, and language bindings are supported:

-

Data Types:

- 32-bit float

- 32-bit unsigned integer

-

Architectures:

- Generic (no SIMD)

- SSE4.2

- AVX2

-

Language Bindings:

- C/C++

- Python

- Java

VecPy aims to implement a sufficiently large feature set to be useful for meaningful, real-world applications. Particularly useful syntax that is supported includes conditional operations within if-elif-else blocks and while loops. All common and Python-specific assignments are also supported: simple (a = x), augmenting (a op= x), multiple (a, b = x, y), and swizzle (a, b = b, a). The following operators, function, and constants are supported:

- Operators: + - * / // % ** == != > >= < <= & | ^ ~ << >> and or not

- Functions: abs, acos, acosh, asin, asinh, atan, atan2, atanh, ceil, copysign, cos, cosh, erf, erfc, exp, expm1, fabs, floor, fmod, gamma, hypot, lgamma, log, log10, log1p, log2, max, min, pow, pow, round, sin, sinh, sqrt, tan, tanh, trunc

- Constants: pi, e

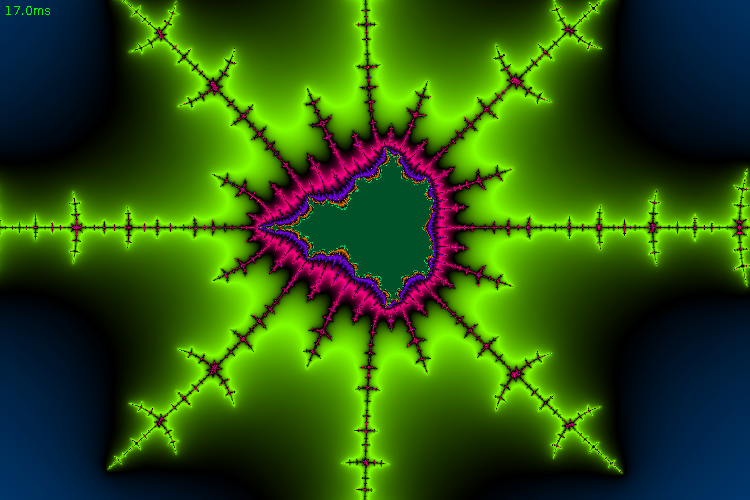

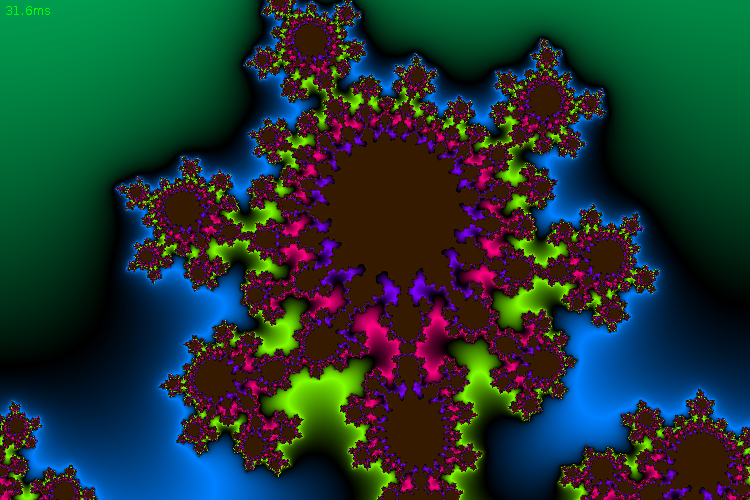

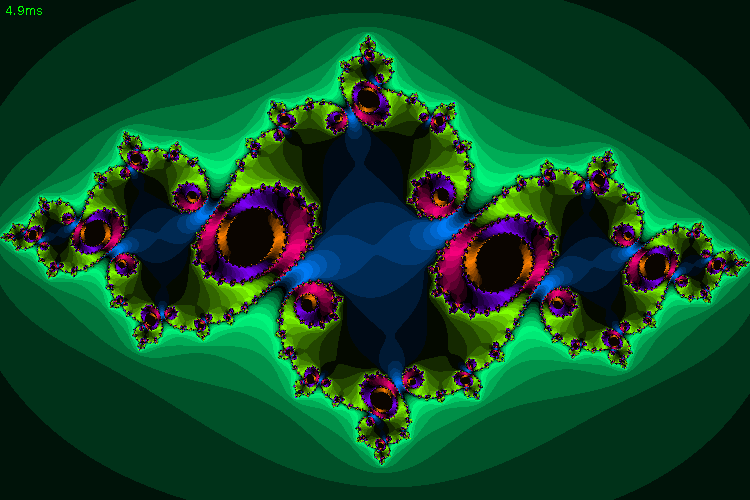

The efficiency of VecPy is demonstrated by analysis of performance on rendering the Mandelbrot and Julia sets. In the context of data-parallel kernel development tools, these mathematical objects are perhaps some of the most widely showcased examples. Rendering these sets is an ideal example for such tools for several reasons: each pixel can be processed independently, the work is CPU-intensive, the algorithms utilize a reasonable set of language features, and the resulting images are captivating. Below, VecPy is applied to the rendering the Mandelbrot set in real-time.

As a preliminary step, I created a Java program to display an image on the screen in real-time. The source code is available, but in the interest of brevity is not shown here.

First, I implemented a pure-Java, sequential version of the Mandelbrot function. I followed the standard escape time algorithm with an extension for smooth shading10 (Listing 2).

private static boolean mandelbrot(FloatBuffer row_, FloatBuffer col_, FloatBuffer count_,

float max, float w_m1, float h_m1,

float left, float right, float top,float bottom) {

for (int index = 0; index < N; index++) {

float row = row_.get();

float col = col_.get();

float x0 = left + col * (right - left) / w_m1;

float y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1;

float x = 0, y = 0;

float count = 0;

float xx = x * x;

float yy = y * y;

//Standard escape time

while (count < max && xx + yy < 16) {

float temp = xx - yy + x0;

y = 2 * x * y + y0;

x = temp;

xx = x * x;

yy = y * y;

++count;

}

//Smooth shading

if (count < max) {

count += 1 - Math.log(Math.log(xx + yy) / LOG2 / 2.0) / LOG2;

}

count_.put(count);

}

count_.rewind();

return true;

}

Listing 2. Sequential Java Mandelbrot set implementation.

Next, I wanted to optimize this function by taking advantage of SIMD execution and multi-threading. I wrote the same function in Python and used VecPy to generate a JNI library to replace the pure-Java version (Listing 3,4). Python function annotations are used to declare that some arguments are "uniform", meaning that all execution instances will receive the same immutable value. Some useful Python features used here include multi-assignment, while loops, if branches, and calls to built-in math functions.

def mandelbrot(row, col, count,

max: 'uniform', w_m1: 'uniform', h_m1: 'uniform',

left: 'uniform', right: 'uniform', top: 'uniform', bottom: 'uniform'):

x0 = left + col * (right - left) / w_m1

y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1

x = y = count = 0

xx, yy = (x * x), (y * y)

#Standard escape time

while (count < max and xx + yy < 16):

x, y = (xx - yy + x0), (2 * x * y + y0)

xx, yy = (x * x), (y * y)

count += 1

#Smooth shading

if count < max:

count += 1 - math.log2(math.log2(xx + yy) / 2)

Listing 3. Sequential Python Mandelbrot set implementation.

from vecpy.runtime import *

from vecpy.compiler_constants import *

vectorize(mandelbrot, Options(Architecture.avx2, DataType.float))Listing 4. Invoking VecPy to compile the Mandelbrot kernel for use in Java.

One of the files VecPy generates is an annotated, vectorized version of the mandelbrot function (Listing 5). It's long; thanks to VecPy I didn't have to manually write it. VecPy also includes Python source code as comments before each block of generated code. This can be helpful for debugging, optimization, or even learning to use SIMD intrinsics.

/*******************************************************************************

* Target Architecture: SSE4.2 (float) *

*******************************************************************************/

//Includes

#include <x86intrin.h>

//Kernel function: mandelbrot

static void mandelbrot_vector(KernelArgs* args) {

//Setup

const __m128 MASK_FALSE = _mm_setzero_ps();

const __m128 MASK_TRUE = _mm_cmpeq_ps(MASK_FALSE, MASK_FALSE);

//Uniforms

const __m128 max = _mm_set1_ps(args->max);

const __m128 w_m1 = _mm_set1_ps(args->w_m1);

const __m128 h_m1 = _mm_set1_ps(args->h_m1);

const __m128 left = _mm_set1_ps(args->left);

const __m128 right = _mm_set1_ps(args->right);

const __m128 top = _mm_set1_ps(args->top);

const __m128 bottom = _mm_set1_ps(args->bottom);

//Literals

const __m128 lit023 = _mm_set1_ps(0.0000000f);

const __m128 lit045 = _mm_set1_ps(1.0000000f);

const __m128 lit041 = _mm_set1_ps(2.0000000f);

const __m128 lit033 = _mm_set1_ps(16.0000000f);

//Stack variables

__m128 row, col, count, var012, var013, var014, var015, x0, var017, var018, var019, var020, var021, y0, x, y, var026, xx, var028, yy, mask030, mask031, var032, mask034, mask035, var036, var037, var038, var039, var040, var042, var043, var044, mask046, mask047, var048, var049, var050, var051, var052, var053;

//Loop over input

for(unsigned int index = 0; index < args->N; index += 4) {

//Inputs

row = _mm_load_ps(&args->row[index]);

col = _mm_load_ps(&args->col[index]);

count = _mm_load_ps(&args->count[index]);

//Begin kernel logic

{

//>>> x0 = left + col * (right - left) / w_m1

var015 = _mm_sub_ps(right, left);

var014 = _mm_mul_ps(col, var015);

var013 = _mm_div_ps(var014, w_m1);

var012 = _mm_add_ps(left, var013);

x0 = var012;

//>>> y0 = bottom + (h_m1 - row) * (top - bottom) / h_m1

var020 = _mm_sub_ps(h_m1, row);

var021 = _mm_sub_ps(top, bottom);

var019 = _mm_mul_ps(var020, var021);

var018 = _mm_div_ps(var019, h_m1);

var017 = _mm_add_ps(bottom, var018);

y0 = var017;

//>>> x = y = count = 0

x = lit023;

y = lit023;

count = lit023;

//>>> xx, yy = (x * x), (y * y)

var026 = _mm_mul_ps(x, x);

var028 = _mm_mul_ps(y, y);

xx = var026;

yy = var028;

//>>> while (count < max and xx + yy < 16):

mask031 = _mm_cmplt_ps(count, max);

var032 = _mm_add_ps(xx, yy);

mask034 = _mm_cmplt_ps(var032, lit033);

mask030 = _mm_and_ps(mask031, mask034);

mask035 = _mm_and_ps(mask030, MASK_TRUE);

while(_mm_movemask_ps(mask035)) {

//>>> x, y = (xx - yy + x0), (2 * x * y + y0)

var037 = _mm_sub_ps(xx, yy);

var036 = _mm_add_ps(var037, x0);

var040 = _mm_mul_ps(lit041, x);

var039 = _mm_mul_ps(var040, y);

var038 = _mm_add_ps(var039, y0);

x = _mm_or_ps(_mm_and_ps(mask035, var036), _mm_andnot_ps(mask035, x));

y = _mm_or_ps(_mm_and_ps(mask035, var038), _mm_andnot_ps(mask035, y));

//>>> xx, yy = (x * x), (y * y)

var042 = _mm_mul_ps(x, x);

var043 = _mm_mul_ps(y, y);

xx = _mm_or_ps(_mm_and_ps(mask035, var042), _mm_andnot_ps(mask035, xx));

yy = _mm_or_ps(_mm_and_ps(mask035, var043), _mm_andnot_ps(mask035, yy));

//>>> count += 1

var044 = _mm_add_ps(count, lit045);

count = _mm_or_ps(_mm_and_ps(mask035, var044), _mm_andnot_ps(mask035, count));

//>>> while (count < max and xx + yy < 16):

mask031 = _mm_cmplt_ps(count, max);

var032 = _mm_add_ps(xx, yy);

mask034 = _mm_cmplt_ps(var032, lit033);

mask030 = _mm_and_ps(mask031, mask034);

mask035 = _mm_and_ps(mask030, MASK_TRUE);

}

//>>> if count < max:

mask046 = _mm_cmplt_ps(count, max);

mask047 = _mm_and_ps(mask046, MASK_TRUE);

{

//>>> count += 1 - math.log2(math.log2(xx + yy) / 2)

var053 = _mm_add_ps(xx, yy);

var052[0] = log2(var053[0]);

var052[1] = log2(var053[1]);

var052[2] = log2(var053[2]);

var052[3] = log2(var053[3]);

var051 = _mm_div_ps(var052, lit041);

var050[0] = log2(var051[0]);

var050[1] = log2(var051[1]);

var050[2] = log2(var051[2]);

var050[3] = log2(var051[3]);

var049 = _mm_sub_ps(lit045, var050);

var048 = _mm_add_ps(count, var049);

count = _mm_or_ps(_mm_and_ps(mask047, var048), _mm_andnot_ps(mask047, count));

}

//>>> return (row, col, count)

}

//End kernel logic

//Outputs

_mm_store_ps(&args->count[index], count);

}

}

//End of kernel function

Listing 5. VecPy generated Mandelbrot set kernel in C++.

Of course, VecPy immediately calls g++ to compile this code, so the final output is native shared library called "VecPy_mandelbrot.so" containing the entry points below (Listing 6).

nm VecPy_mandelbrot.so | grep " T "

0000000000001f18 T _fini

0000000000000b30 T _init

0000000000001d70 T Java_vecpy_VecPy_allocate

0000000000001df0 T Java_vecpy_VecPy_free

0000000000001aa0 T Java_vecpy_VecPy_mandelbrot

0000000000001a20 T mandelbrot

0000000000001a80 T PyInit_vecpy_mandelbrot

Listing 6. Library "VecPy_mandelbrot.so" entry points for C++, Java, and Python.

Additionally, VecPy generates a Java wrapper class for allocating buffers and invoking the native kernel (Listing 7).

/*******************************************************************************

* VecPy generated API *

*******************************************************************************/

package vecpy;

import java.nio.*;

public class VecPy {

//JNI wrappers

public static native boolean mandelbrot(FloatBuffer row, FloatBuffer col, FloatBuffer count,

float max, float w_m1, float h_m1,

float left, float right, float top, float bottom);

private static native ByteBuffer allocate(long N);

private static native boolean free(Buffer buffer);

//Helper functions to allocate and free aligned direct buffers

public static FloatBuffer newBuffer(long N) {

return allocate(N * 4).order(ByteOrder.nativeOrder()).asFloatBuffer();

}

public static boolean deleteBuffer(Buffer buffer) {

return free(buffer);

}

}

Listing 7. VecPy generated wrapper class for invoking the native kernel.

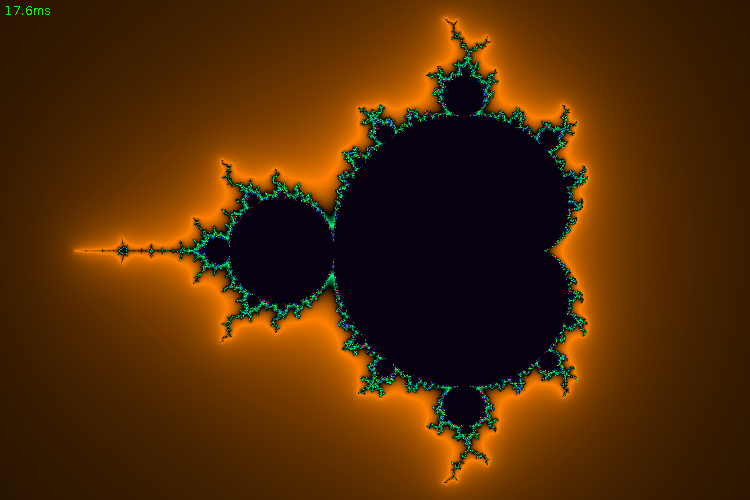

At this point, the call to the pure-Java kernel can be swapped out for a call to the native kernel. After making the change and adding a call to load the native library, the Mandelbrot set is rendered interactively in real-time (Figure 1).

Figure 1. The Mandelbrot set, computed with VecPy-generated kernel (4 threads, AVX2).

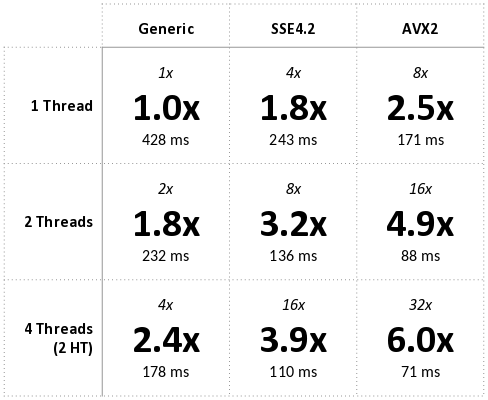

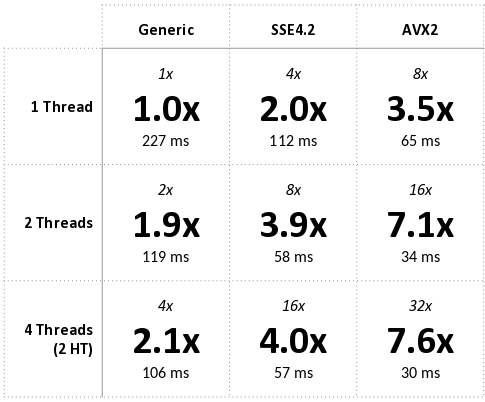

Performance on the Mandelbrot set is quantified in terms of rendering time and speedup on a 1920x1280 image of the Mandelbrot set on a dual core 1.80GHz i7-4500U CPU. Detailed results are shown below (Table 1). In addition to a sequential speedup, VecPy also gives speedups across both axes of parallelism: increasing thread count and increasing vector width.

Table 1. VecPy gives sequential and parallel speedup. Overall speedup: ~582x.

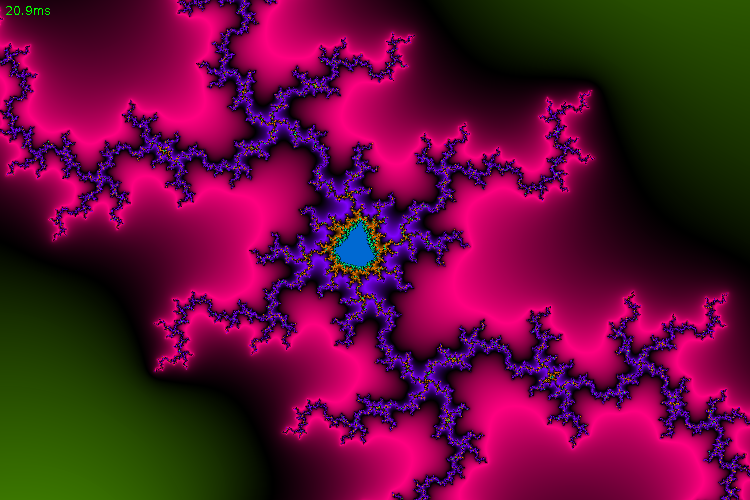

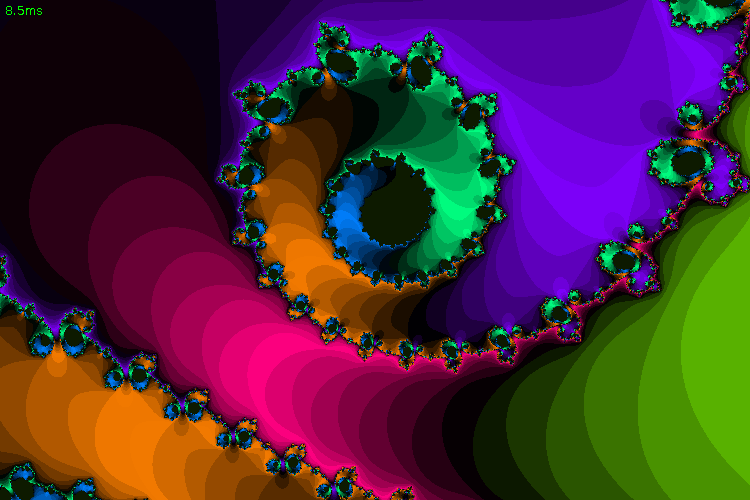

Next, performance is quantified on a related mathematical structure - a Julia set. The code is similar (Listing 8), except here the extension for smooth shading has been removed to illustrate additional speedup when the kernel isn't forced to make scalar-mode calls to the base-two log function. The renderings show clear banding (Figure 2), and as a consequence of avoiding the extra scalar-mode calls, the resulting speedups are greater than those observerd for the Mandelbrot set (Table 2).

def julia(row, col, count, max: 'uniform', w_m1: 'uniform', h_m1: 'uniform',

left: 'uniform', right: 'uniform', top: 'uniform',

bottom: 'uniform', cr: 'uniform', ci: 'uniform'):

r = left + col * (right - left) / w_m1

i = bottom + (h_m1 - row) * (top - bottom) / h_m1

count = 0

rr, ii = r * r, i * i

while count < max and rr + ii < 4:

r, i = (rr - ii + cr), (2 * r * i + ci)

rr, ii = r * r, i * i

count += 1

Listing 8. Sequential Python Julia set implementation.

Figure 2. The Julia set, computed with VecPy-generated kernel (4 threads, AVX2).

Table 2. VecPy gives greater sequential and parallel speedup when scalar-mode math is removed. Overall speedup: ~889x.

Although the speedups described above are significant, they are not perfect. As the number of threads is doubled from 1 to 2, there is nearly a perfect speedup. However, as it is doubled again from 2 to 4, the speedup is abysmal. The reason is that these tests were performed on a dual-core machine, and the additional two threads were, in the best case, scheduled with hyper-threading (HT). In HT, Intel's implementation of simultaneous multi-threading, two threads can execute simultaneously on a single core if they are not using the same parts of the CPU at the same time. In these simple compute-bound kernels, the CPU is nearly constantly busy with vector arithmetic. HT appears to give the Mandelbrot kernel a slightly better speedup than the Julia kernel, and I hypothesize that it is because of the Mandelbrot reliance on scalar-mode log calculation. Two Mandelbrot threads may be able to execute simultaneously if one is calculating scalar-mode log and the other is doing vector-mode arithmetic. In both examples, speedups were far from perfect as the width of vector lanes was increased from 1 (scalar) to 4 (SSE4.2) to 8 (AVX2). Because these kernels were implemented with the escape time algorithm, they are susceptible to divergence. Even if 7 of 8 elements break out of the while loop after a single iteration, they'll be unable to progress until the final element leaves the loop. I suspect that there is some divergence happening here, becoming more severe as the vector widths of the increase.

It is important to note that the purpose of this project was not to implement the world's fastest CPU-based fractal renderer. Rather, VecPy aims to fill a real need in Python computing: to provide a means to the Python programmer to generate efficient data-parallel code. It is a non-trivial task to find a balance between maximizing program efficiency while minimizing programmer effort, but VecPy provides a reasonable approach and achieves the previously described goals of simplicity, flexibility, utility, and efficiency of data-parallel computing on modern CPUs in Python.

References

- Black Duck Software, Inc. "Python Programming Language Statistics."

Link - Garey, Diane and Steve Lang. "High Performance Development with Python."

Link - Prechelt, Lutz. "An empirical comparison of C, C++, Java, Perl, Python, Rexx and Tcl." IEEE Computer 33.10 (2000): 23-29

PDF - Barnes, Connelly. "Programming Language Productivity." (2006)

PDF - Fulgham, Brent and Isaac Gouy. "The Computer Language Benchmarks Game."

Link - CPython source code. "Python/ceval.c."

Code - Python Wiki. "Global Interpreter Lock."

Link - Wikipedia. "Global Interpreter Lock."

Link - Intel Corporation. "Intel SPMD Program Compiler."

Link - Wikipedia. "Mandelbrot set: Escape time algorithm."

Link