Overview

In this assignment, you will implement a few different techniques that require you to manipulate images on the manifold of natural images. First, we will invert a pre-trained generator to find a latent variable that closely reconstructs the given real image. In the second part of the assignment, we will take a hand-drawn sketch and generate an image that fits the sketch accordingly.

Part 1: Inverting the Generator

Inverting the generator of a pretrained network involves solving solving a nonconvex optimization problem where we constrain the output of the

generator (given some optimized latent) to be close to the true image according to some loss function. The choice of loss function here is an important design decision which heavily influences the later

parts of this assignment. First, we investigate our inversion results using a simple L2 loss.

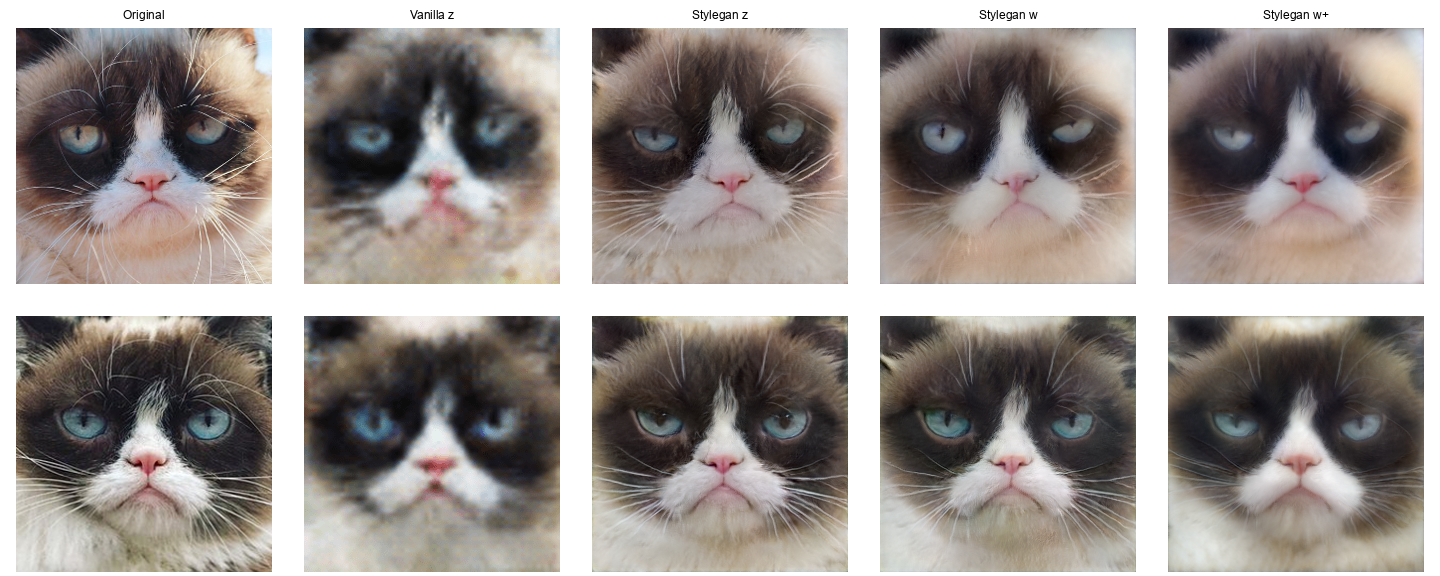

The above results show the reconstruction obtained from our inverted latents for 2 different input images. We show results for inverting a vanilla DCGAN in z space as well as

StyleGAN in z, w, w+ space. Notably, the vanilla GAN has the blurriest reconstruction but this is because we upscale the original output (64 x 64) to match the resolutions of

the higher dimensional pre-trained StyleGAN. Qualitatively, inversion using the z space gives the best reconstruction with the results in w and w+ space

being slightly blurrier as well as not matching the shape of the eyes and pupils as well. The coloration for w space appears to be the most accurate as well. The close similarity

between the rightmost images is likely a result of the simplicity of the loss function. Now, we introduce perceptual loss term based on the first convolutional layer of a pre-trained

VGG network (weighted equally with our prior L2 loss).

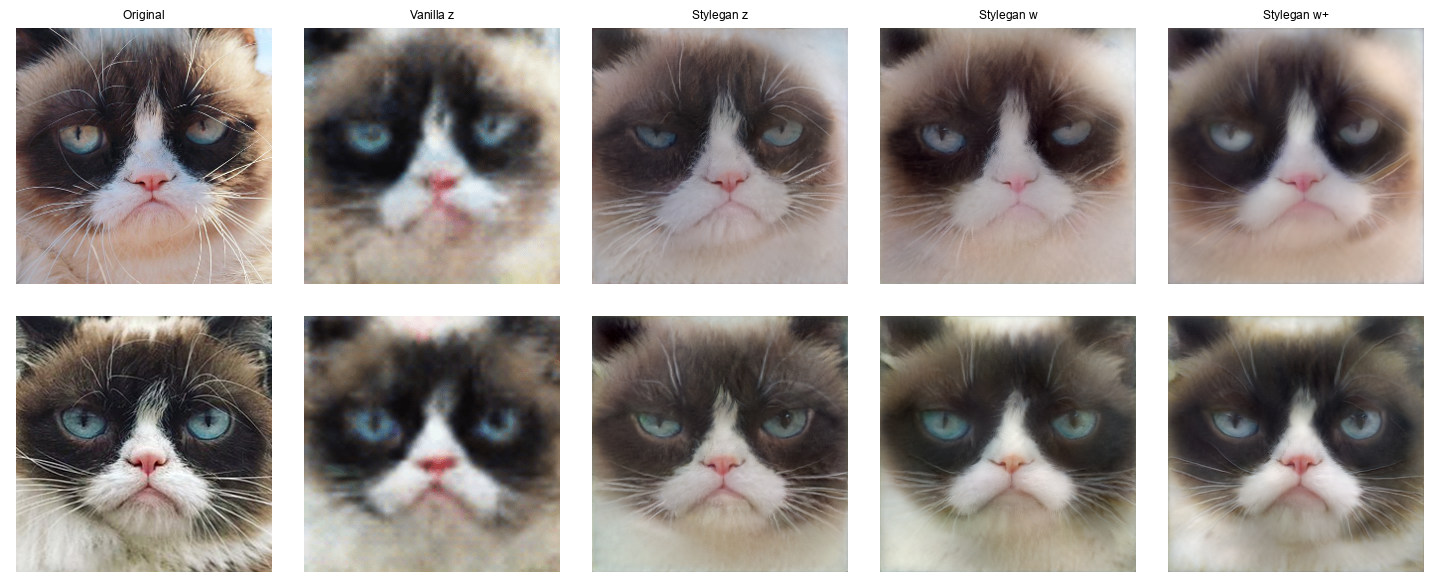

The above results show the reconstruction obtained from our inverted latents for 2 different input images. We show results for inverting a vanilla DCGAN in z space as well as

StyleGAN in z, w, w+ space. Notably, the vanilla GAN has the blurriest reconstruction but this is because we upscale the original output (64 x 64) to match the resolutions of

the higher dimensional pre-trained StyleGAN. Qualitatively, inversion using the z space gives the best reconstruction with the results in w and w+ space

being slightly blurrier as well as not matching the shape of the eyes and pupils as well. The coloration for w space appears to be the most accurate as well. The close similarity

between the rightmost images is likely a result of the simplicity of the loss function. Now, we introduce perceptual loss term based on the first convolutional layer of a pre-trained

VGG network (weighted equally with our prior L2 loss).

The blurry results with the vanilla GAN persist, but we see some clear differences between the results of the StyleGAN outputs. In particular, inverting from z space

results in general grumpy cats which fail to capture some clear physical features like the left pupil and eye. However, inverting from w and w+ space allows the model to

capture these features throughout optimization. In this sense, we see the usefulness of a mapping network as simply inverting from z space can get stuck in these local optima

which are "general" grumpy cats. The results from w and w+ space are quite similar apart from some slight colorization differences. Finally, the actual optimization was done using

a quasi-newton optimizer (LBFGS) for 1000 iterations. For a 256 x 256 resolution image on a Nvidia P4 GPU, this took around 1 minute on average.

In the remaining parts of this assignment, we choose to invert from w and w+ space using this combined loss with StyleGAN.

The blurry results with the vanilla GAN persist, but we see some clear differences between the results of the StyleGAN outputs. In particular, inverting from z space

results in general grumpy cats which fail to capture some clear physical features like the left pupil and eye. However, inverting from w and w+ space allows the model to

capture these features throughout optimization. In this sense, we see the usefulness of a mapping network as simply inverting from z space can get stuck in these local optima

which are "general" grumpy cats. The results from w and w+ space are quite similar apart from some slight colorization differences. Finally, the actual optimization was done using

a quasi-newton optimizer (LBFGS) for 1000 iterations. For a 256 x 256 resolution image on a Nvidia P4 GPU, this took around 1 minute on average.

In the remaining parts of this assignment, we choose to invert from w and w+ space using this combined loss with StyleGAN.

Part 2: Cat Interpolation

Using our inversion from Part 1, we can now interpolate between images by simply taking a linear combination of the latents and passing the resulting vector through the pre-trained generator. First, we present results where we invert from w space. The leftmost and rightmost images are the true images while the middle GIF depicts a progressive 31 frame transition.

Overall, the interpolation is quite smooth and looks impressive! The GIF clearly shows how major features like the shape of the cat, ears, eyes, and pose are gradually shifting from the source to the target image. While some of the initial inverted images like the middle row look more like a generic grumpy cat than the source image, the interpolation still smoothly shifts to a replication of the target image. The next results are interpolation in w+ space.

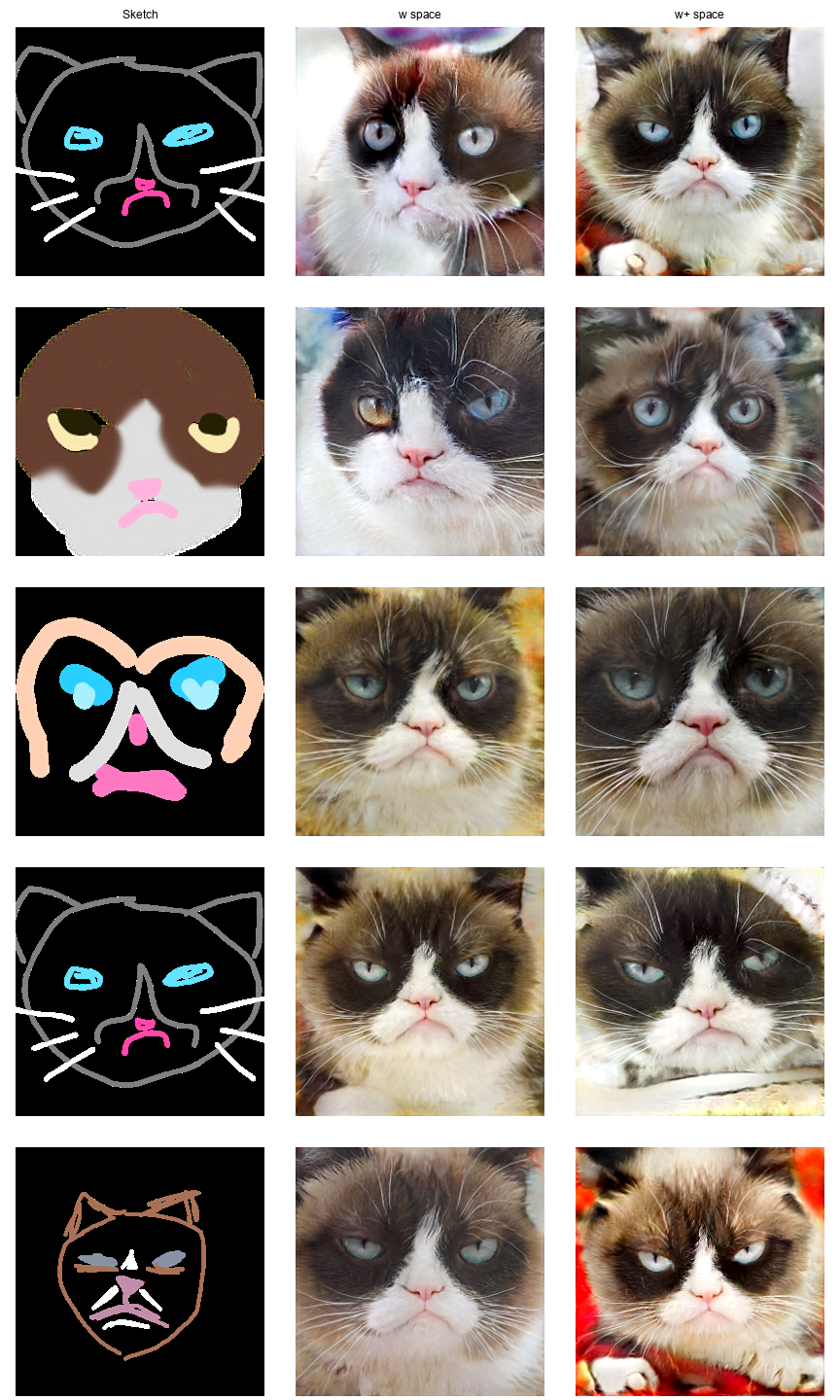

Part 3: Scribble to Image

Finally, we can apply a similar strategy to Part 1 in order to generate a realistic grumpy cat from a sparse scribble. In particular, we can

generate a mask from the scribble and constrain the difference between the Hadamard product of the mask and sketch and the mask and generated

image from our optimized latent. We also add L2 regularization with a factor of 0.001 to

avoid divergent results too far from the natural image manifold. For particularly dense sketches, we encounter failure cases occasionally but

our model can generate some impressive images! We showcase results below from both w and w+ space.

It's quite interesting to see how similar the facial structure of the grumpy cats are across the bottom 3 images. The w+

model also introduced additional color into the background of the generated images in several cases. Some minor failure

cases can be seen such as the yellow-ish coloring over the w space cat's eye in the second row. Regardless, it's amazing

that such a simple approach can produce such detailed results!

It's quite interesting to see how similar the facial structure of the grumpy cats are across the bottom 3 images. The w+

model also introduced additional color into the background of the generated images in several cases. Some minor failure

cases can be seen such as the yellow-ish coloring over the w space cat's eye in the second row. Regardless, it's amazing

that such a simple approach can produce such detailed results!