In an era where dynamic visual storytelling is increasingly sought after, our work "GIF-Tune: One-Shot Tuning for Continuous Text-to-GIF Synthesis" introduces a method for text-guided GIF generation. Whereas conventional methods require large video datasets, our approach follows a one-shot tuning paradigm that needs only a single text-GIF pair for training, thereby avoiding the computational burden of traditional text-to-video (T2V) frameworks. Our method works with any text-to-image (T2I) diffusion architecture pre-trained on a large image dataset. Within the GIF-Tune model, we combine spatiotemporal attention and background regularization with the one-shot tuning strategy to produce temporally coherent and depth-consistent GIFs. At inference time, we use denoising diffusion implicit models (DDIM) for structural guidance, which also improves sampling efficiency. Our qualitative and quantitative experiments show that the method adapts to a diverse range of applications for personalized, text-driven GIF generation.

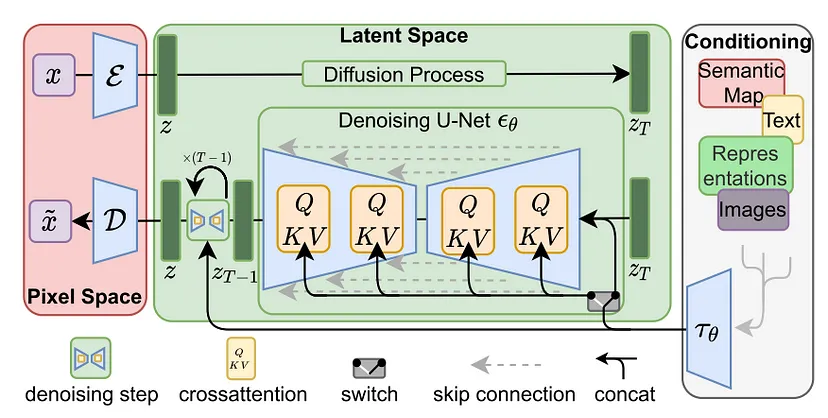

Latent Diffusion Models (LDMs), introduced by Rombach et al., build on likelihood-based Diffusion Probabilistic Models (DPMs), which capture fine data detail for high-fidelity image generation. Traditionally, DPMs operated in pixel space, which demands substantial compute and time and makes inference slow and sequential. LDMs address these problems with a two-phase training strategy. First, an autoencoder compresses the image into a perceptually equivalent latent representation of much lower dimensionality. Second, a DPM is trained on this compressed latent space instead of the high-dimensional pixel space, and the decoder maps generated latents back to detailed images. This makes high-resolution image generation feasible on limited hardware and speeds up the whole process while retaining the quality and flexibility of the original DPM approach.
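For reference, the two phases can be written compactly. This follows the notation of Rombach et al.; the symbols $\mathcal{E}$ (encoder), $\mathcal{D}$ (decoder), $\epsilon_\theta$ (noise predictor) and $z_t$ (noised latent at step $t$) are not defined elsewhere on this page and are introduced here only for illustration.

$$
z = \mathcal{E}(x), \qquad \tilde{x} = \mathcal{D}(z), \qquad
L_{LDM} = \mathbb{E}_{\mathcal{E}(x),\ \epsilon \sim \mathcal{N}(0, 1),\ t}\left[ \lVert \epsilon - \epsilon_\theta(z_t, t) \rVert_2^2 \right]
$$

The first two expressions describe the autoencoder of phase one; the loss is the denoising objective of phase two, evaluated on latents rather than pixels.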

Stable Diffusion, introduced in 2022, generates photorealistic images and animations from text and image prompts by running the diffusion process in latent space rather than pixel space. This dramatically reduces the computational load and allows it to run efficiently on standard desktop GPUs. It uses a variational autoencoder (VAE) that compresses images with little perceptible loss of detail, and it is trained on large-scale datasets from LAION. Stable Diffusion combines a forward diffusion process, a reverse diffusion process, and a noise predictor, all conditioned on text. These components allow detailed image generation and can be extended to animate the images into GIFs, which suits personalized text-to-GIF synthesis that requires temporal coherence and visual richness.
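As a rough illustration of the forward process and of what the noise predictor is trained to recover, the sketch below noises a latent in closed form and then inverts that step given the noise. It is a minimal sketch, not Stable Diffusion's implementation: the function names are hypothetical, and the linear beta schedule uses commonly quoted DDPM defaults rather than Stable Diffusion's actual settings.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule (assumed values)."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def forward_diffuse(z0, t, alpha_bars, rng):
    """Sample z_t = sqrt(a_bar_t) * z_0 + sqrt(1 - a_bar_t) * eps in one shot."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a) * z + math.sqrt(1.0 - a) * e for z, e in zip(z0, eps)]
    return zt, eps


def predict_z0(zt, eps_hat, t, alpha_bars):
    """Recover the clean latent from z_t and a noise estimate eps_hat."""
    a = alpha_bars[t]
    return [(z - math.sqrt(1.0 - a) * e) / math.sqrt(a) for z, e in zip(zt, eps_hat)]


if __name__ == "__main__":
    alpha_bars = make_alpha_bars(1000)
    z0 = [0.5, -1.0, 2.0]  # toy latent; real latents are image-shaped tensors
    zt, eps = forward_diffuse(z0, 500, alpha_bars, random.Random(0))
    # With the true noise, the clean latent is recovered up to rounding error.
    print(predict_z0(zt, eps, 500, alpha_bars))
```

In a trained model, `eps_hat` would come from the text-conditioned noise predictor instead of the true noise used in this toy example.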

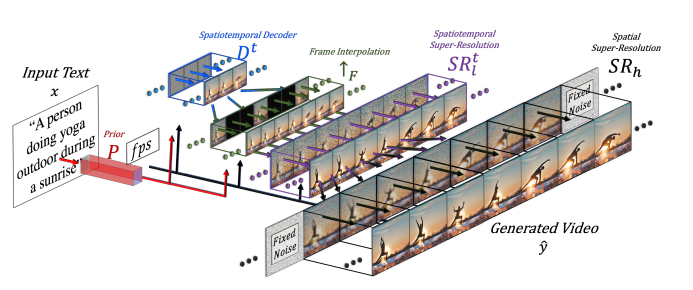

Make-A-Video builds on advances in T2I generation to produce T2V content: it learns what the world looks like and how it is described from paired image-text data, and learns motion from unlabelled video data. This gives three major benefits: it speeds up T2V model training because the visual and multimodal representations do not have to be learned from scratch, it removes the dependency on paired text-video datasets, and it lets the generated videos inherit the diversity of modern image synthesis. Its architecture extends T2I models with spatial-temporal components, decomposing and approximating the temporal U-Net and attention tensors across space and time, which enables it to produce videos of high resolution and frame rate. Make-A-Video's faithfulness to text prompts and the quality of its videos set new benchmarks in the T2V domain.

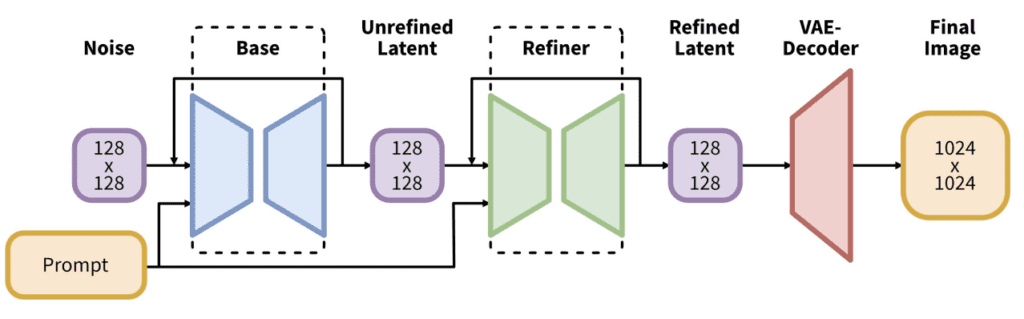

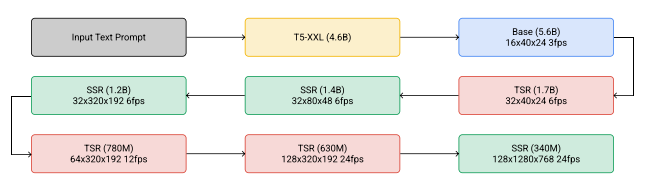

Imagen Video is a text-conditional video generation system that uses a cascade of video diffusion models to produce high-definition videos from text prompts. The system combines a base video generation model with a series of interleaved spatial and temporal video super-resolution models that enhance video quality. The architecture uses fully convolutional temporal and spatial super-resolution models at several resolutions and adopts the v-parameterization of the diffusion process, which lets the model scale effectively to high-definition outputs. By adapting techniques from diffusion-based image generation and applying progressive distillation with classifier-free guidance, Imagen Video achieves not only high fidelity and fast sampling but also a high degree of controllability and world knowledge. This allows it to create stylistically diverse videos and text animations that demonstrate an understanding of 3D objects.
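The two techniques named above have compact standard definitions, given here for reference. The symbols ($\alpha_t$, $\sigma_t$, $x$, $\epsilon$, the conditioning $c$ and the guidance weight $w$) are not defined elsewhere on this page and are introduced only for illustration. With a noised sample $z_t = \alpha_t x + \sigma_t \epsilon$, the v-parameterization has the network predict

$$
v_t = \alpha_t \epsilon - \sigma_t x
$$

instead of predicting $\epsilon$ directly, and classifier-free guidance combines the conditional and unconditional predictions as

$$
\tilde{\epsilon}_\theta(z_t, c) = (1 + w)\, \epsilon_\theta(z_t, c) - w\, \epsilon_\theta(z_t).
$$

A larger $w$ pushes samples towards the text condition at the cost of diversity.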

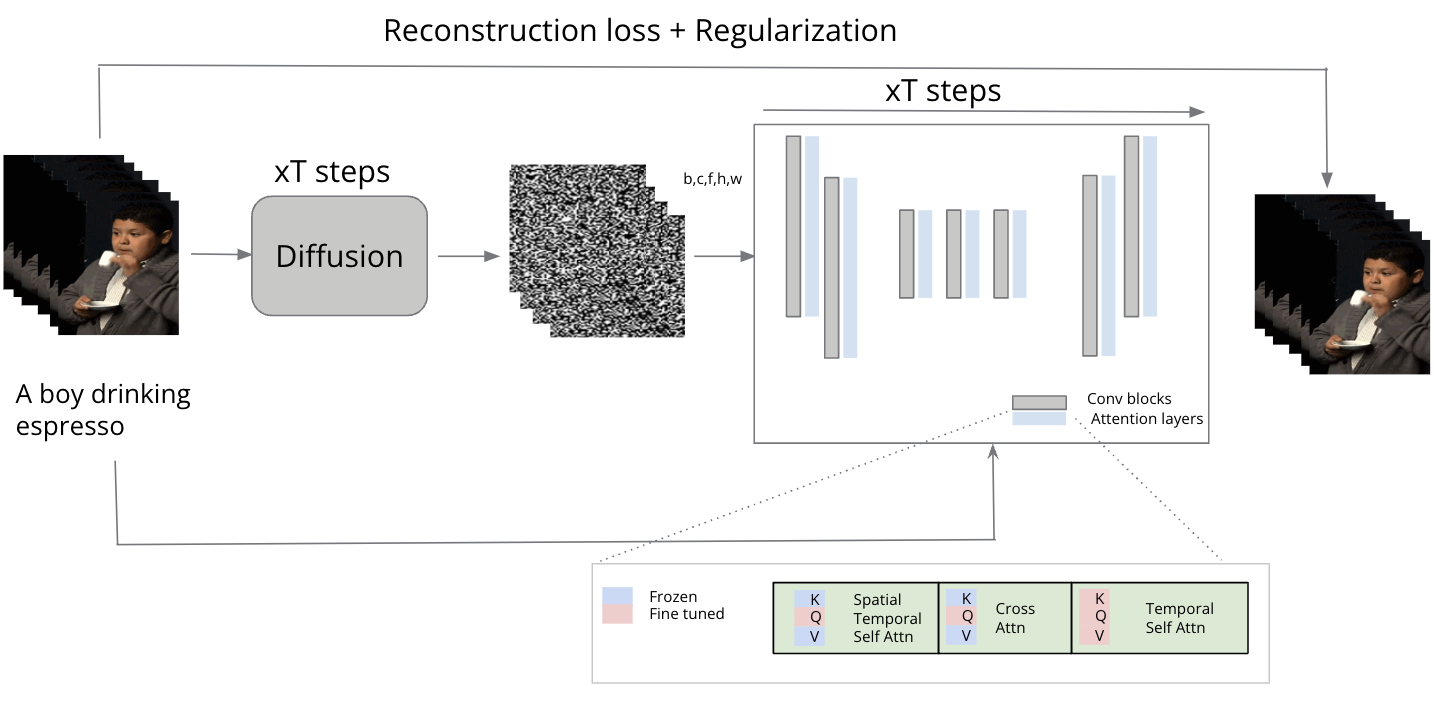

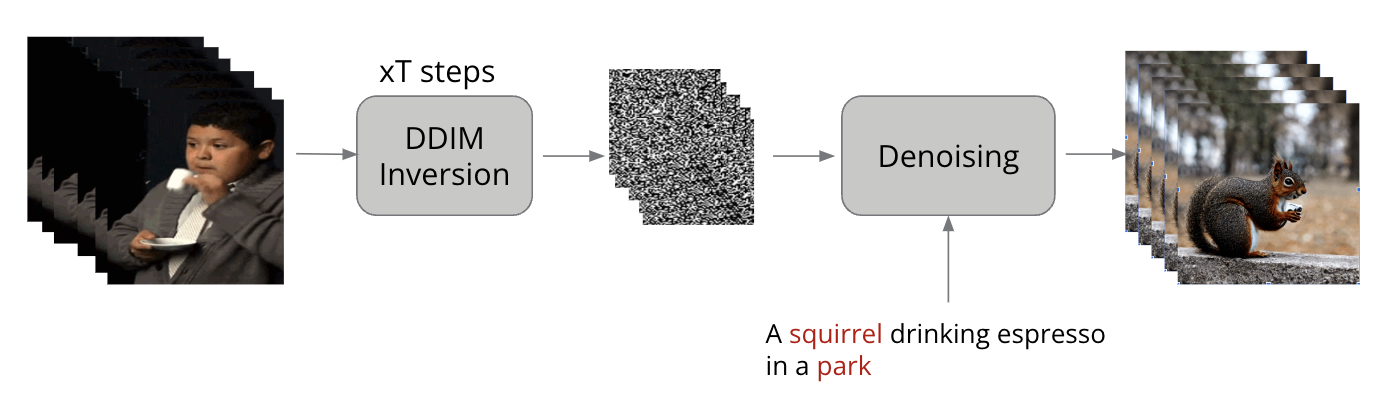

Our proposed method, GIF-Tune, is a one-shot tuning strategy for continuous text-to-GIF synthesis. The model is trained on a single text-GIF pair and can generate GIFs from any text prompt.

Architecture. A T2I model is extended to the spatiotemporal domain by inflating its 2D convolutional layers to pseudo-3D convolutional layers, replacing the 2D kernels with 3D kernels, and by appending a temporal self-attention layer to each transformer block to model temporal dependencies. We use causal self-attention, computing the attention matrix between frame $z_i$ and two previous frames, $z_{i-1}$ and $z_{1}$; that is, the query features are derived from $z_i$ and the key and value features are derived from $z_{i-1}$ and $z_{1}$. We call these layers spatiotemporal attention layers.

Fine-tuning pipeline. The model is then fine-tuned on the input GIF and its text prompt. In the spatiotemporal attention layers, we fine-tune the query matrix while keeping the key and value matrices fixed. In the cross-attention layers of the transformer blocks, we likewise fine-tune only the query matrix, which controls how the visual features attend to the text prompt; the key and value matrices, which are computed from the text prompt, are kept frozen.

Inference pipeline. During inference, the model generates GIFs from any text prompt. We obtain the latent noise of the input GIF using DDIM inversion, which keeps the network from producing static GIFs. The latent noise is then fed into the GIF-Tune model along with the edited text prompt to generate the output GIF. The model can generate GIFs with diverse and realistic 3D scenes across different categories. DDIM inversion needs to be performed only once per input GIF.

Regularization. Along with the standard reconstruction loss, we introduce a background regularization loss, which uses depth as a supervision signal, to ensure that the model does not ignore the background while generating the GIF. We also add a temporal consistency loss, which minimizes the difference between consecutive frames, so that the generated GIF is temporally coherent.
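The causal attention pattern described above, with queries from frame $z_i$ and keys and values from $z_{1}$ and $z_{i-1}$, can be sketched in plain Python. This is a minimal single-head sketch under stated assumptions and not the GIF-Tune implementation: the function and parameter names (`sparse_causal_attention`, `W_q`, `W_k`, `W_v`) are hypothetical, frames are lists of token vectors rather than tensors, and the first frame, which has no predecessor, is assumed to attend to itself.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sparse_causal_attention(frames, W_q, W_k, W_v):
    """frames[i] is a list of token vectors for frame i (0-indexed).

    Queries come from frame i; keys and values come from the first frame and
    frame i-1 (assumption: the index is clamped to 0 for the first frame).
    """
    d_k = len(W_k)  # key dimension equals the number of rows of W_k
    outputs = []
    for i, frame in enumerate(frames):
        context = frames[0] + frames[max(i - 1, 0)]
        keys = [matvec(W_k, tok) for tok in context]
        values = [matvec(W_v, tok) for tok in context]
        frame_out = []
        for tok in frame:
            q = matvec(W_q, tok)
            scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
            weights = softmax(scores)
            frame_out.append(
                [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
            )
        outputs.append(frame_out)
    return outputs


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    toy_frames = [
        [[1.0, 0.0], [0.0, 1.0]],
        [[0.5, 0.5], [1.0, 1.0]],
        [[2.0, 0.0], [0.0, 2.0]],
    ]
    print(sparse_causal_attention(toy_frames, identity, identity, identity))
```

Under the fine-tuning scheme described above, only the query projection (`W_q` in this sketch) would receive gradient updates, while `W_k` and `W_v` stay frozen. Because each frame only reads from earlier frames, changing a later frame leaves the outputs of earlier frames unchanged.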

Here are the results of our proposed method. On the left are the input GIF and text prompt. On the right are the GIFs generated for the novel edited text prompts.

The results below were generated using Stable-Diffusion-v1-5.

Below is an example that demonstrates the need for temporal smoothing in GIF generation. The left GIF is generated without temporal smoothing and the right GIF is generated with it. The GIF on the right is more coherent and visually appealing.

Generated without temporal smoothing

Generated with temporal smoothing

We qualitatively compare our method with several zero-shot text-to-video models on a complex text prompt that involves occlusion during motion. The results are shown below.

AnimateLCM accelerates the animation of personalized diffusion models and adapters through decoupled consistency learning. Text2Video-Zero is a zero-shot video generator built on text-to-image diffusion models. The results show that all of these models struggle to model motion when the main objects in motion are occluded, for instance the cubs here, which can be seen merging into the mother panda. Our model, however, keeps the cubs as separate entities for most of the duration of the GIF.

Rombach, Robin, et al. "High-resolution image synthesis with latent diffusion models." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.

Singer, Uriel, et al. "Make-a-video: Text-to-video generation without text-video data." arXiv preprint arXiv:2209.14792 (2022).

Ho, Jonathan, et al. "Imagen video: High definition video generation with diffusion models." arXiv preprint arXiv:2210.02303 (2022).

Wu, Jay Zhangjie, et al. "Tune-a-video: One-shot tuning of image diffusion models for text-to-video generation." Proceedings of the IEEE/CVF International Conference on Computer Vision. 2023.

Wang, Fu-Yun, et al. "AnimateLCM: Accelerating the animation of personalized diffusion models and adapters with decoupled consistency learning." arXiv preprint arXiv:2402.00769 (2024).

Khachatryan, Levon, et al. "Text2video-zero: Text-to-image diffusion models are zero-shot video generators." Proceedings of the IEEE/CVF International Conference on Computer Vision. 2023.
