16726 Final Project: Multi-Modal Instruction Image Editing

Tiancheng Zhao (andrewid: tianchen), Chia-Chun Hsieh (andrewid: chiachun)

In this project, we explore the use of multi-modal instructions to edit images.

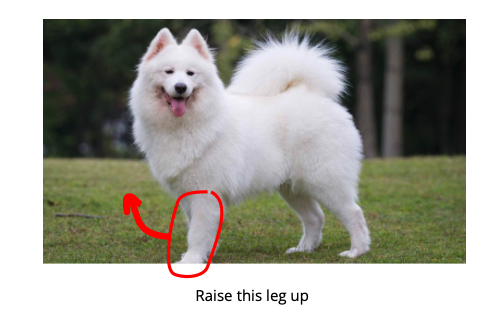

Object-deforming (structural-change) image editing is under-explored in the current diffusion-based image editing literature (see Fig. 1).

We hypothesize that multi-modal instructions are a natural way to address this, because we can use:

- sketches/masks to designate object boundaries

- text to designate object attributes
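To make this division of labor concrete, here is a minimal NumPy sketch of a multi-modal instruction. It assumes the mask is a binary H x W array aligned with the image. `MultiModalInstruction` and `composite_edit` are hypothetical names for illustration and are not part of any of the models listed below. The text carries the attributes, and the mask limits where pixels may change through a simple pixel-space blend. Real editing pipelines may instead apply a mask in latent space or inside attention, so treat this as an illustration of the idea rather than our method.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class MultiModalInstruction:
    """Hypothetical container pairing a text edit with a spatial mask."""
    text: str          # describes what the edited region should look like (attributes)
    mask: np.ndarray   # H x W array in {0, 1}; 1 marks the region allowed to change


def composite_edit(original: np.ndarray, generated: np.ndarray,
                   instruction: MultiModalInstruction) -> np.ndarray:
    """Keep original pixels outside the mask and generated pixels inside it."""
    if original.shape != generated.shape:
        raise ValueError("original and generated images must have the same shape")
    mask = instruction.mask.astype(np.float64)
    if original.ndim == 3:
        # Broadcast the H x W mask across the channel axis of an H x W x C image.
        mask = mask[..., None]
    return mask * generated + (1.0 - mask) * original


# Usage: a 4x4 RGB image where only the top-left 2x2 block may change.
original = np.zeros((4, 4, 3))
generated = np.ones((4, 4, 3))
mask = np.zeros((4, 4))
mask[:2, :2] = 1
instr = MultiModalInstruction(text="make the cup taller", mask=mask)  # example text only
edited = composite_edit(original, generated, instr)
print(edited[..., 0])
```

The blend is a hard constraint: anything outside the mask is copied from the input, so the text alone cannot move object boundaries there.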

We experimented with three models:

- Instruct-Pix2Pix (text-only baseline)

- Stable Diffusion (img2img translation with a text prompt)

- Pix2Pix-Zero (with masks)
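The Stable Diffusion img2img setting listed above follows the SDEdit idea: the input (in practice its VAE latent) is partially noised to an intermediate timestep set by a `strength` parameter, then denoised under the text prompt. The sketch below shows only the noising step. It assumes a 1000-step "scaled linear" beta schedule (the values commonly used for Stable Diffusion v1) and, for simplicity, indexes the training timesteps directly instead of a sampler's inference schedule. The function names are hypothetical, and this is not the exact pipeline we ran.

```python
from typing import Tuple

import numpy as np


def scaled_linear_alpha_bars(num_train_steps: int = 1000,
                             beta_start: float = 0.00085,
                             beta_end: float = 0.012) -> np.ndarray:
    """Cumulative products alpha_bar_t for a 'scaled linear' beta schedule (assumed values)."""
    betas = np.linspace(beta_start ** 0.5, beta_end ** 0.5, num_train_steps) ** 2
    return np.cumprod(1.0 - betas)


def img2img_start(x0: np.ndarray, strength: float, alpha_bars: np.ndarray,
                  rng: np.random.Generator) -> Tuple[np.ndarray, int]:
    """Noise a clean input x0 to the timestep implied by `strength` (SDEdit-style).

    Returns the noised sample x_t and its timestep index t; a text-conditioned
    denoiser would then run from t back to 0 (not shown here).
    """
    if not 0.0 < strength <= 1.0:
        raise ValueError("strength must be in (0, 1]")
    # Higher strength -> later start timestep -> more noise -> more freedom to change.
    t = max(int(round(strength * len(alpha_bars))) - 1, 0)
    noise = rng.standard_normal(x0.shape)
    # Closed-form forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, t


# Usage: compare a low-strength and a high-strength starting point.
rng = np.random.default_rng(0)
alpha_bars = scaled_linear_alpha_bars()
latent = np.zeros((4, 64, 64))  # stand-in for a VAE latent
x_low, t_low = img2img_start(latent, 0.3, alpha_bars, rng)
x_high, t_high = img2img_start(latent, 0.9, alpha_bars, rng)
print(t_low, t_high)
```

A higher `strength` starts denoising from a noisier sample. This leaves more room for structural change but preserves less of the input layout, which is the trade-off a text-only prompt has to navigate without a mask.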