This project is part of the Algonauts 2023 competition, which evaluates computational models that predict human brain activity while people view images of objects. Understanding how the human brain works is a major challenge for both science and society. With every blink, we are exposed to a vast number of photons, yet we perceive the visual world as organized and meaningful. The central goal of this project is to predict human brain responses to complex natural visual scenes using the most comprehensive brain dataset available for this purpose.

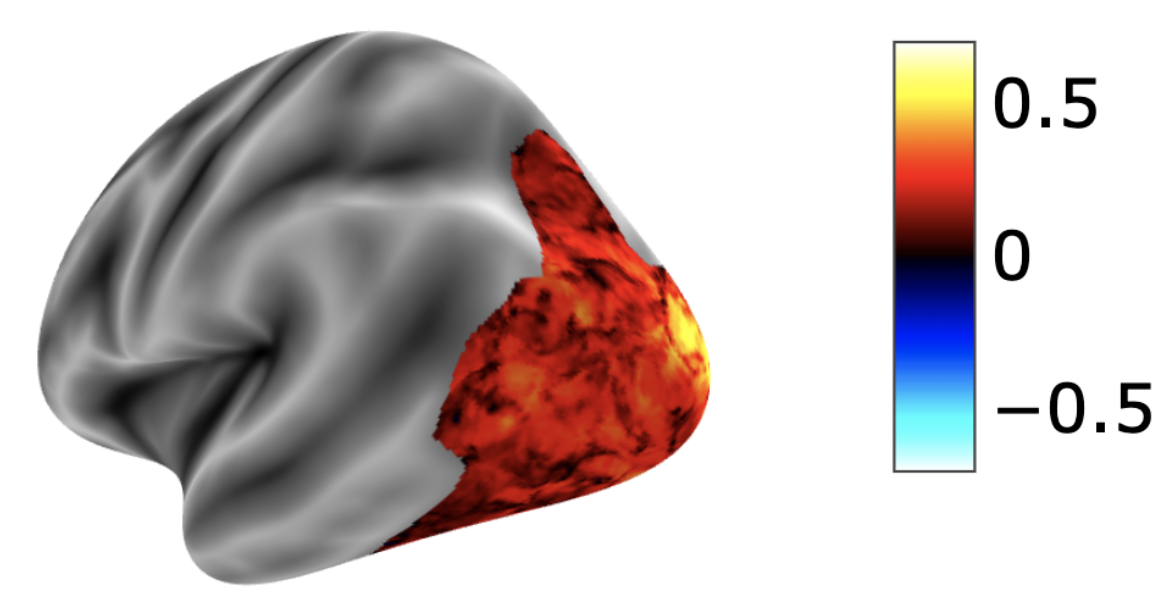

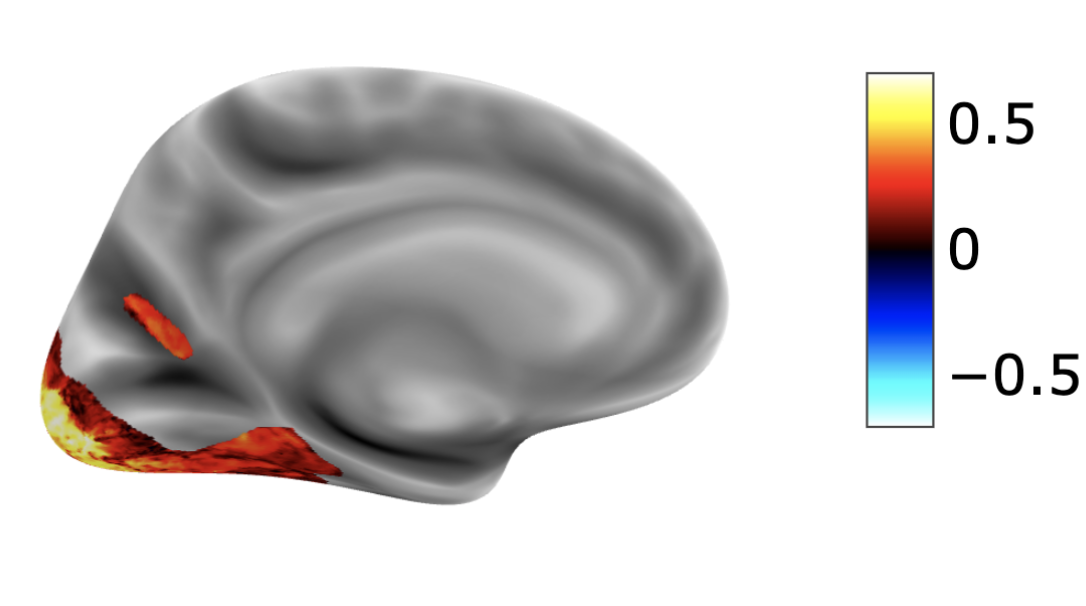

Region-of-Interest (ROI):

The visual cortex is divided into multiple areas with different functional properties, referred to as regions-of-interest (ROIs).

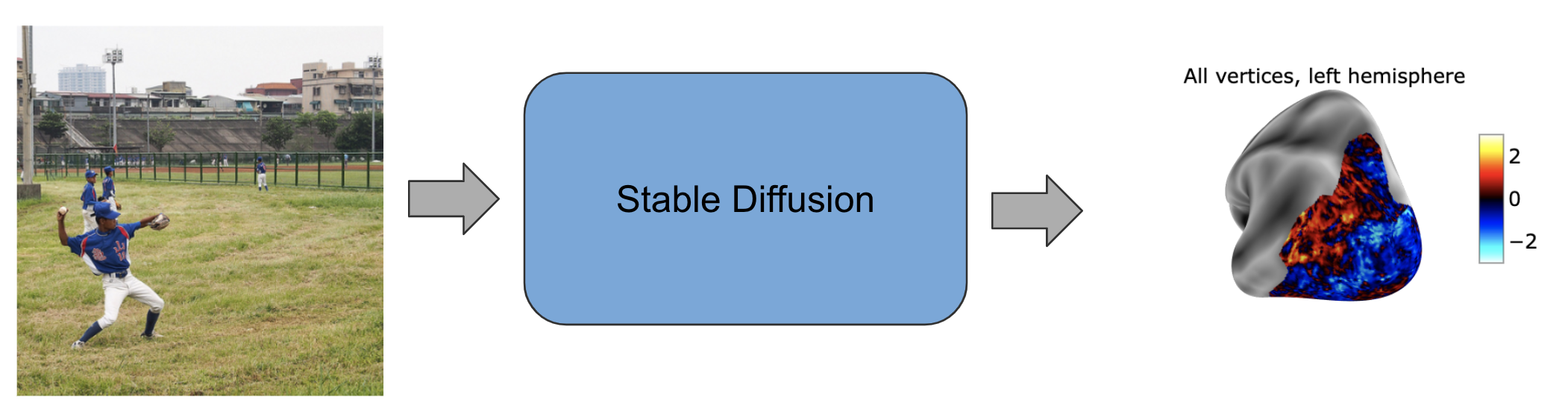

My initial approach was inspired by a related study (Link), in which the authors reconstructed visual images from subjects' fMRI responses. They applied a pre-trained diffusion model to specific ROIs of the brain, as described above, and conditioned it on the remaining ROIs to infer the subject's thoughts. The following diagrams give insight into their methodology and findings:

I attempted to fine-tune the diffusion model to predict fMRI responses from a given image and subject ID. The initial results were promising: the correlation score between the predicted and actual fMRI data increased with each epoch. However, due to computational limitations, I was unable to fully explore this approach. The final correlation score achieved with the diffusion model was 0.24.
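To make the correlation score concrete, here is a minimal sketch of one way it could be computed. It assumes the score is the Pearson correlation between predicted and measured responses, calculated per vertex across images and then averaged; the challenge's official scoring may apply additional normalization. The function name, array shapes, and synthetic data are illustrative assumptions, not taken from my training code.

```python
import numpy as np

def vertexwise_correlation(pred, target, eps=1e-8):
    """Pearson correlation for each vertex, computed across images.

    pred, target: arrays of shape (n_images, n_vertices).
    Returns an array of shape (n_vertices,).
    """
    # Center each vertex's responses across the image axis.
    pred_c = pred - pred.mean(axis=0, keepdims=True)
    target_c = target - target.mean(axis=0, keepdims=True)
    # Covariance and product of standard deviations (up to a shared factor).
    cov = (pred_c * target_c).sum(axis=0)
    denom = np.sqrt((pred_c ** 2).sum(axis=0) * (target_c ** 2).sum(axis=0))
    return cov / (denom + eps)

# Synthetic stand-in data (hypothetical): 200 images, 50 vertices.
rng = np.random.default_rng(0)
target = rng.standard_normal((200, 50))
pred = target + rng.standard_normal((200, 50))  # noisy "predictions"

scores = vertexwise_correlation(pred, target)
mean_score = float(scores.mean())  # a single summary number per model
```

A score of 1 would mean the predictions track the measured responses perfectly for every vertex, which is why the results table lists 1 as the maximum.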

One benefit of GANs over diffusion or autoregressive models is their quicker training and inference times. As a result, I was able to explore several different approaches.

To keep things brief, I will only discuss the most promising approaches for fMRI prediction through GAN training:

| Model | Loss | Epochs | Pre-trained | Config | More training | Time per epoch | Final Correlation Score (Max: 1) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Dreambooth-Stable-Diffusion | Mean Squared Error | 2 | Yes | Standard | Can improve further | 1 day | 0.24 |
| Vanilla GANs | L1 + GAN loss | 25 | No | Spectral Norm | Cannot be improved | 5 min | 0.15 |
| Vision Transformer GANs | L1 + GAN loss | 50 | Yes | Single ViT discriminator | Can improve further | 2 hrs | 0.54 |
| Vanilla GANs | Correlation + L1 + GAN loss | 6 | No | Two discriminators, Spectral Norm | Cannot be improved further | 8 min | 0.30 |
| U-Net | Correlation + L1 + GAN loss | 1 | No | Two discriminators, Spectral Norm | Need to stabilize correlation loss | 20 min | 0.45 |
| Vanilla GANs | Correlation + L1 + GAN loss | 25 | No | Custom Patch Multi-discriminators, Spectral Norm | Running... | 23 min | Pending |
| Vision Transformer GANs | Correlation + L1 + GAN loss | 25 | Yes | Custom Patch Multi-discriminators | Running... | 3 hrs | Pending |
| SOTA | Unknown | Unknown | Unknown | Unknown | Unknown | Unknown | 0.61 |
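The "Spectral Norm" entries in the Config column presumably refer to spectral normalization, which rescales a weight matrix by its largest singular value to keep the network's Lipschitz constant in check. The sketch below shows the idea with plain power iteration in NumPy. The function name, matrix shape, and iteration count are assumptions for illustration; deep learning frameworks ship their own implementations, which typically run one power-iteration step per training step rather than many at once.

```python
import numpy as np

def spectral_normalize(weight, n_iters=100, eps=1e-12, seed=0):
    """Divide `weight` by an estimate of its largest singular value.

    The estimate comes from power iteration on weight @ weight.T.
    Returns (normalized_weight, sigma_estimate).
    """
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(weight.shape[0])
    u /= np.linalg.norm(u) + eps
    for _ in range(n_iters):
        # Alternate between right and left singular vector estimates.
        v = weight.T @ u
        v /= np.linalg.norm(v) + eps
        u = weight @ v
        u /= np.linalg.norm(u) + eps
    sigma = float(u @ weight @ v)
    return weight / sigma, sigma

# Hypothetical discriminator layer weight: 64 outputs, 32 inputs.
rng = np.random.default_rng(1)
W = rng.standard_normal((64, 32))
W_sn, sigma = spectral_normalize(W)

# After normalization the largest singular value should be close to 1.
top_singular_value = float(np.linalg.svd(W_sn, compute_uv=False)[0])
```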

The results of the above experiments suggest that using multiple discriminators, adding a correlation loss, and using a vision transformer can each lead to a significant improvement in the output. At present, I am running an experiment that combines all three enhancements in a single architecture. I am also experimenting with customized Patch Multi-discriminators.

Currently, the state of the art (SOTA) for the Algonauts competition is a correlation score of 0.61, and my ViT-based approach achieved a score of 0.54. With all three techniques integrated and a few more epochs of training, the results may surpass the SOTA. The outcome should be available in a week.
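The "Correlation + L1 + GAN loss" entries in the table combine three terms into one generator objective. The sketch below shows the arithmetic in NumPy, assuming the correlation term is one minus the mean per-vertex Pearson correlation, the GAN term is the non-saturating generator loss, and the adversarial terms from several discriminators are averaged. The weights `w_corr`, `w_l1`, and `w_adv` are hypothetical placeholders rather than the values I used, and actual training needs an autodiff framework instead of NumPy.

```python
import numpy as np

def correlation_loss(pred, target, eps=1e-8):
    """1 - mean per-vertex Pearson correlation (0 when perfectly correlated)."""
    pred_c = pred - pred.mean(axis=0, keepdims=True)
    target_c = target - target.mean(axis=0, keepdims=True)
    cov = (pred_c * target_c).sum(axis=0)
    denom = np.sqrt((pred_c ** 2).sum(axis=0) * (target_c ** 2).sum(axis=0))
    return 1.0 - float((cov / (denom + eps)).mean())

def l1_loss(pred, target):
    """Mean absolute error between predicted and measured responses."""
    return float(np.abs(pred - target).mean())

def generator_adversarial_loss(fake_logits):
    """Non-saturating GAN loss: -log(sigmoid(logits)) = softplus(-logits)."""
    return float(np.logaddexp(0.0, -fake_logits).mean())

def generator_loss(pred, target, fake_logits_per_disc,
                   w_corr=1.0, w_l1=1.0, w_adv=0.1):
    """Weighted sum of the three terms; adversarial term averaged over discriminators."""
    adv = float(np.mean([generator_adversarial_loss(l) for l in fake_logits_per_disc]))
    return (w_corr * correlation_loss(pred, target)
            + w_l1 * l1_loss(pred, target)
            + w_adv * adv)

# Synthetic stand-in batch (hypothetical): 32 images, 100 vertices, 2 discriminators.
rng = np.random.default_rng(0)
target = rng.standard_normal((32, 100))
pred = target + 0.5 * rng.standard_normal((32, 100))
logits = [rng.standard_normal(32), rng.standard_normal(32)]

noisy_loss = generator_loss(pred, target, logits)
perfect_loss = generator_loss(target, target, logits)
```

Because the correlation term ignores scale and offset while the L1 term does not, the two complement each other: one rewards getting the response pattern right, the other keeps the predicted amplitudes close to the measured ones.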