Training Generative Adversarial Networks (GANs). Part 1: DCGAN for generating grumpy cats from random noise.

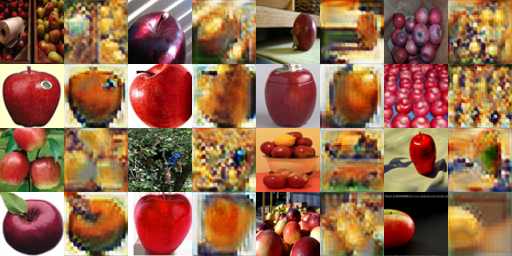

Part 2: CycleGAN for image-to-image translation between Grumpy and Russian Blue cats and between apples and oranges.

GANs are deep learning models used for generative modelling, which involves learning patterns in data to generate new examples.

GANs use two sub-models, a generator and a discriminator, trained together in a zero-sum game to generate plausible examples.

GANs excel at image-to-image translation and photorealistic image generation. The least-squares loss is used to train the DCGAN.
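As a rough illustration of the least-squares objective, the sketch below computes both losses from plain lists of discriminator scores. It assumes the common convention of target 1 for real images, target 0 for generated images, and a 0.5 weight on each discriminator term; the write-up does not state the exact scaling, and the function names are hypothetical.

```python
def mean_squared(values, target):
    """Mean of (v - target)^2 over a list of discriminator scores."""
    return sum((v - target) ** 2 for v in values) / len(values)


def discriminator_ls_loss(d_real, d_fake):
    # Assumed targets: real scores are pushed toward 1, fake scores toward 0.
    return 0.5 * mean_squared(d_real, 1.0) + 0.5 * mean_squared(d_fake, 0.0)


def generator_ls_loss(d_fake):
    # The generator is rewarded when its samples are scored as real (1).
    return mean_squared(d_fake, 1.0)
```

A discriminator that scores every image perfectly gets a loss of zero, while the generator's loss is largest when all of its samples are scored as 0.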

Implementation of DCGAN involves three things: 1) Generator network, 2) Discriminator network, and 3) Adversarial training loop.

The generator creates new examples similar to the real examples (training data).

The discriminator tries to distinguish between real and generated examples.

Note: The final discriminator implementation uses LeakyReLU activations with a negative slope of 0.2, which improved the discriminator.
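For readers unfamiliar with the negative slope, here is a minimal framework-free sketch of the activation on a single value. The function name is hypothetical; a deep learning framework would apply the same rule element-wise to a tensor.

```python
def leaky_relu(x, negative_slope=0.2):
    """Pass positive values through; scale negative values by the slope."""
    return x if x >= 0 else negative_slope * x
```

Unlike a plain ReLU, negative inputs are scaled rather than zeroed, so they still carry a gradient during training.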

The two models are trained together in a zero-sum game until the generator can produce plausible examples that fool the discriminator.

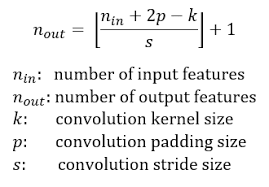

Each convolutional layer downsamples its input by a factor of 2 using kernel size K=4, stride S=2, and padding P=1. This follows from the formula below:

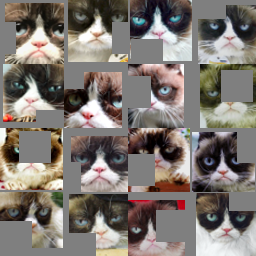
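The downsampling factor can be checked numerically with the standard convolution output-size formula, floor((N + 2P - K) / S) + 1. The sketch below is only an illustration: the function name and the 64-pixel starting size are assumptions, not values taken from the write-up.

```python
def conv_output_size(n, kernel=4, stride=2, padding=1):
    """Spatial output size of a convolution for an input of size n."""
    return (n + 2 * padding - kernel) // stride + 1


# Assumed 64-pixel input, halved by four successive layers.
size = 64
sizes = [size]
for _ in range(4):
    size = conv_output_size(size)
    sizes.append(size)
# sizes is [64, 32, 16, 8, 4]
```

With K=4, S=2, and P=1, any even input size N maps to N/2, which is why each layer halves the spatial resolution.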

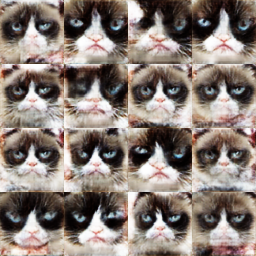

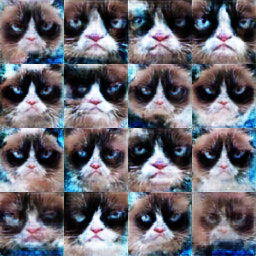

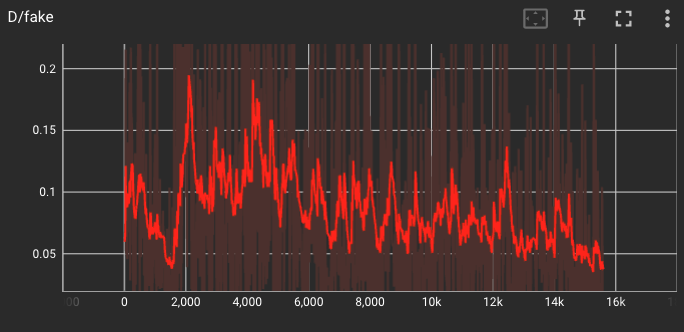

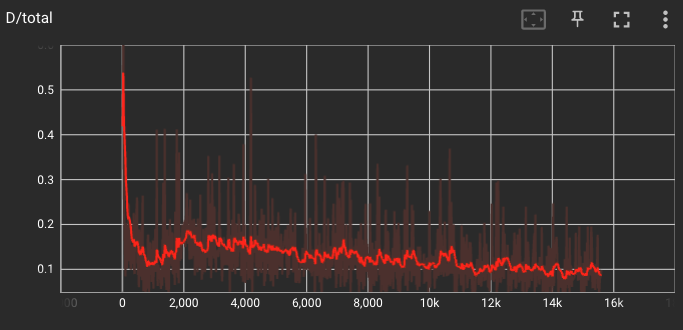

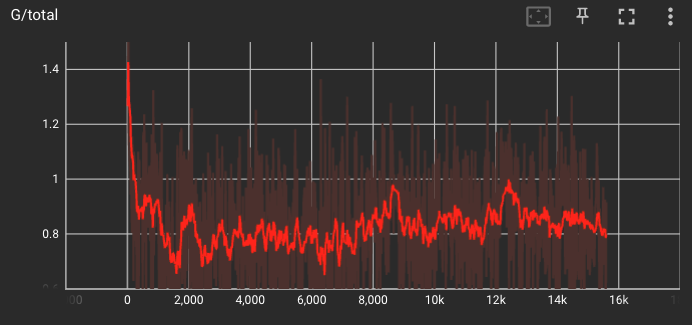

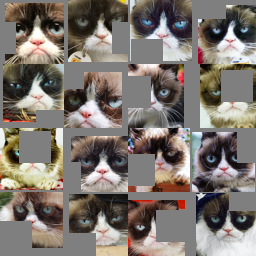

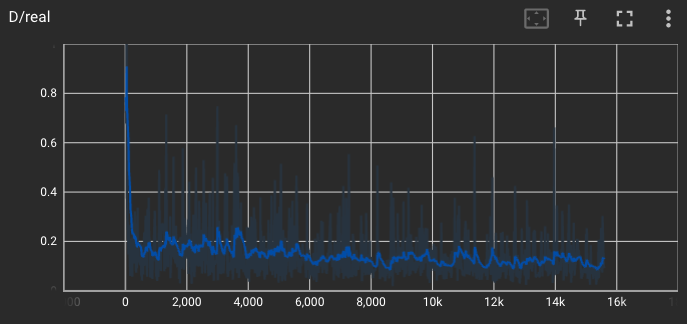

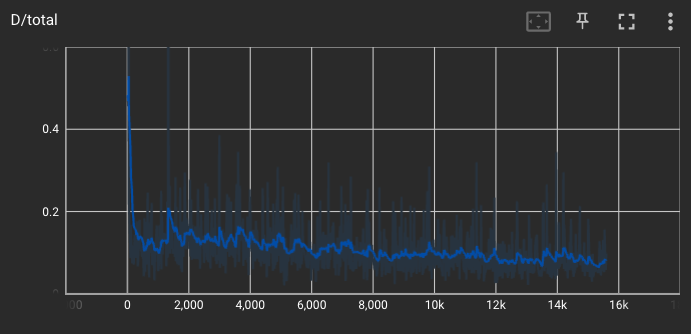

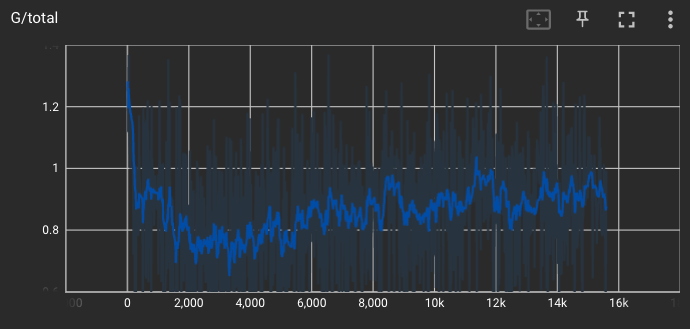

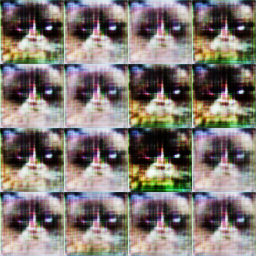

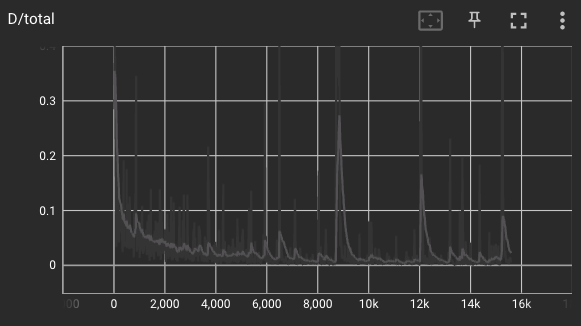

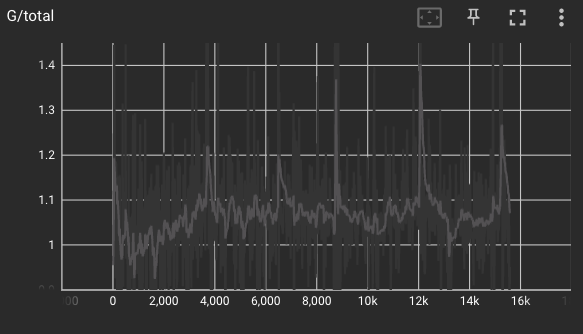

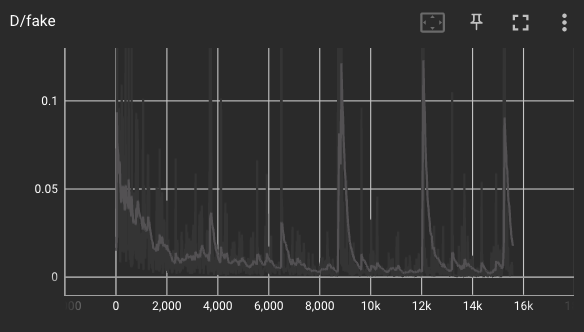

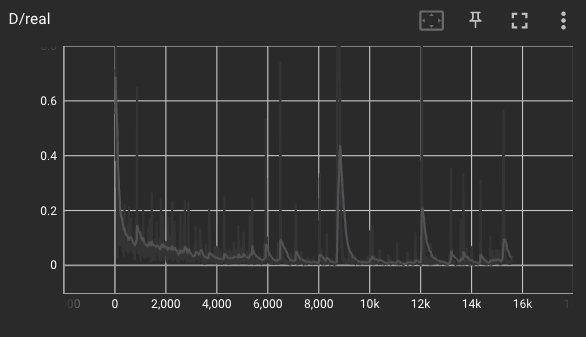

Below are the results of four DCGAN training experiments, ordered from the most realistic generated outputs to the least realistic.

DCGAN needs data augmentation, specifically Differentiable Augmentation, to perform well on small datasets. Without it, the discriminator can easily overfit to the real dataset.

The deluxe setting applies standard augmentation (random crop, random horizontal flip, and color jittering) to the real images during training.

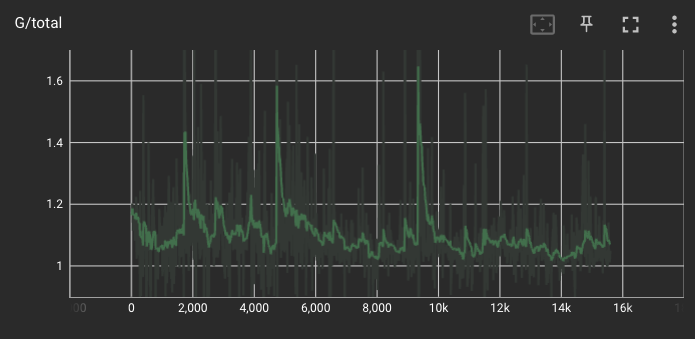

The results show that the GAN produces more realistic outputs as the number of iterations increases. The differentiable augmentations selected for training are random translation and cutout.
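To make the two selected augmentations concrete, the sketch below applies them to a single-channel image stored as nested lists. It is a framework-free illustration, not the training code: the function names, the maximum shift of 1/8 of the image size, and the cutout of half the image size are all assumptions. In training, the same kind of transform is applied to both real and generated images before they reach the discriminator, and because it consists only of indexing and masking, an autodiff framework can pass gradients through it to the generator.

```python
import random


def translate(image, dy, dx):
    """Shift the image by (dy, dx) pixels, filling uncovered pixels with zeros."""
    h, w = len(image), len(image[0])
    return [[image[r - dy][c - dx] if (0 <= r - dy < h and 0 <= c - dx < w) else 0.0
             for c in range(w)] for r in range(h)]


def cutout(image, top, left, size):
    """Zero a size x size square, equivalent to multiplying by a 0/1 mask."""
    h, w = len(image), len(image[0])
    return [[0.0 if (top <= r < top + size and left <= c < left + size) else image[r][c]
             for c in range(w)] for r in range(h)]


def diff_augment(image, rng):
    """Random translation followed by random cutout (assumed ratios)."""
    side = min(len(image), len(image[0]))
    max_shift = max(1, side // 8)   # assumption: shift up to 1/8 of the image
    size = max(1, side // 2)        # assumption: cutout covers half the side
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return cutout(translate(image, dy, dx), top, left, size)
```

For example, `diff_augment(image, random.Random(0))` returns an image of the same size with a shifted copy of the content and one square region zeroed out.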

Note: Non-differentiable augmentation leads to augmentation leakage: the effects of the augmentation can be seen in the generated images.

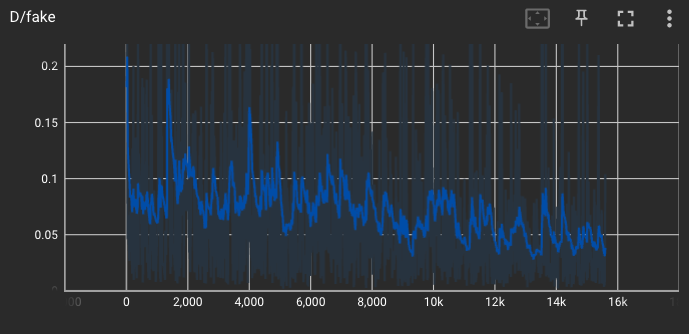

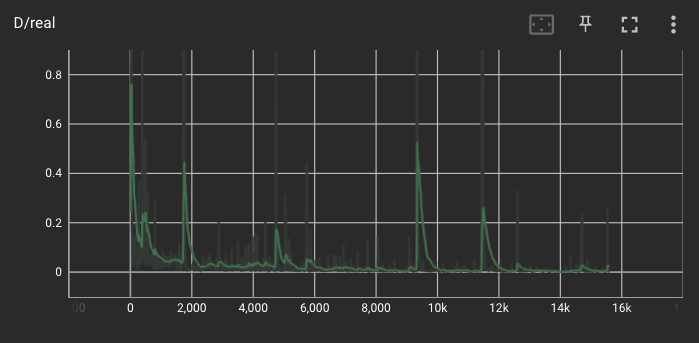

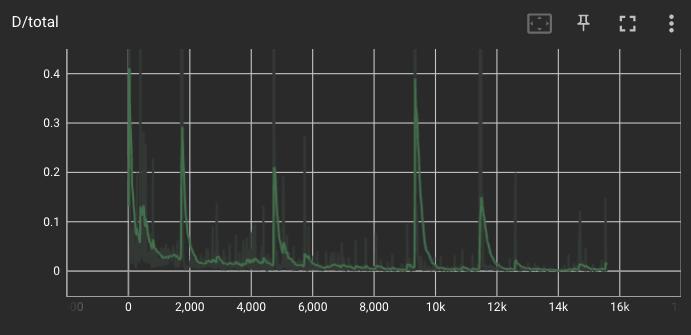

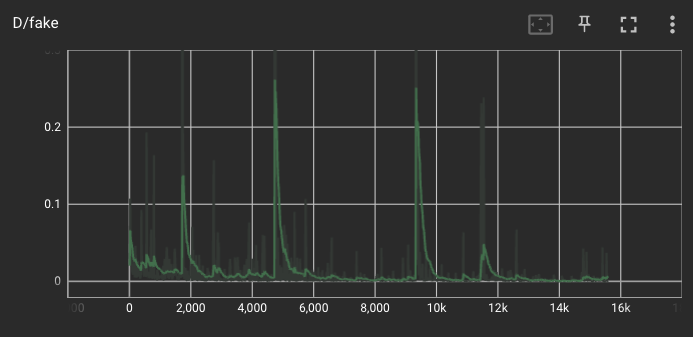

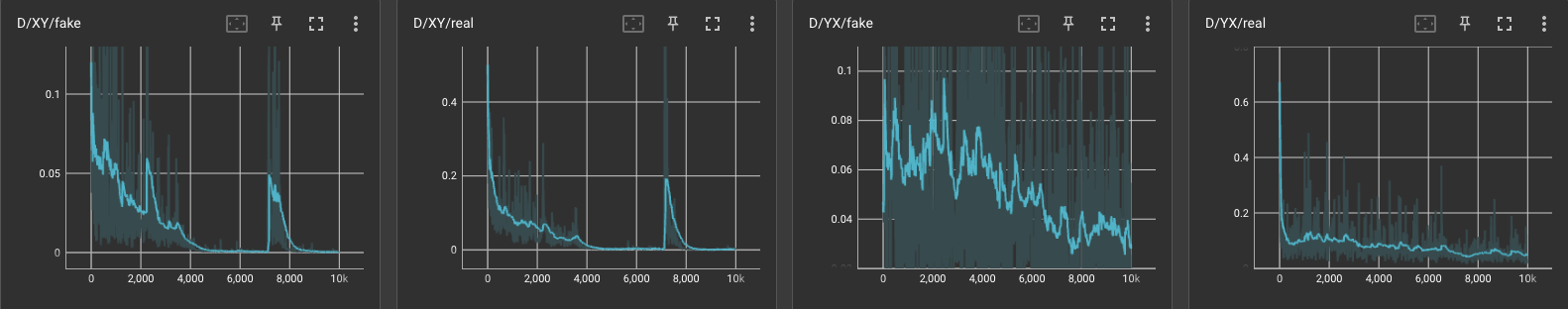

Note: When the DCGAN is trained on limited data without data augmentation, the discriminator loss goes to zero.

This means the discriminator is dominating and has overfitted to the training samples. The discriminator loss is slightly higher when training with data augmentation (deluxe).

The best option is to use differentiable augmentation, as it improves the quality of the generated images and also reduces overfitting of the discriminator.

The CycleGAN generator has three stages: encoding, transformation via residual blocks, and decoding. The residual blocks preserve the characteristics of the input image.
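The reason a residual block tends to keep image characteristics is its skip connection: the output is the input plus a learned correction. The sketch below shows this on a flat list of feature values; `residual_block` and `transform` are hypothetical names, and `transform` stands in for the block's convolutional layers.

```python
def residual_block(x, transform):
    """Return x + transform(x), element-wise, for a flat list of features."""
    fx = transform(x)
    return [xi + fi for xi, fi in zip(x, fx)]


# If the learned correction is zero, the input passes through unchanged.
features = [0.5, -1.0, 2.0]
unchanged = residual_block(features, lambda v: [0.0] * len(v))
```

Because the block only has to learn the difference from its input, content such as object shape can flow through largely untouched.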

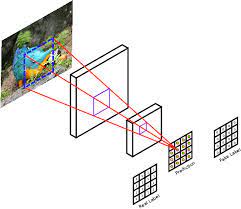

The patch-based discriminator classifies image patches rather than the whole image, which models local structures better, so it produces a spatial map of outputs instead of a single score. The cycle consistency loss improves performance and convergence speed.
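As an illustration of the cycle consistency idea, the sketch below translates a sample to the other domain and back, then penalizes the reconstruction error in both directions. It assumes an L1 (mean absolute) penalty, which the write-up does not specify; the function names and the toy generators `g_xy` and `g_yx` are hypothetical.

```python
def l1_mean(a, b):
    """Mean absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_xy, g_yx):
    # X -> Y -> X should reproduce x, and Y -> X -> Y should reproduce y.
    x_reconstructed = g_yx(g_xy(x))
    y_reconstructed = g_xy(g_yx(y))
    return l1_mean(x, x_reconstructed) + l1_mean(y, y_reconstructed)


# Toy generators that are exact inverses give zero cycle loss.
def double(v):
    return [2.0 * t for t in v]


def halve(v):
    return [t / 2.0 for t in v]


loss = cycle_consistency_loss([1.0, 2.0], [4.0], double, halve)
```

In training, this term is added to the adversarial losses so that each generator is discouraged from discarding content the other generator would need to recover the original image.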

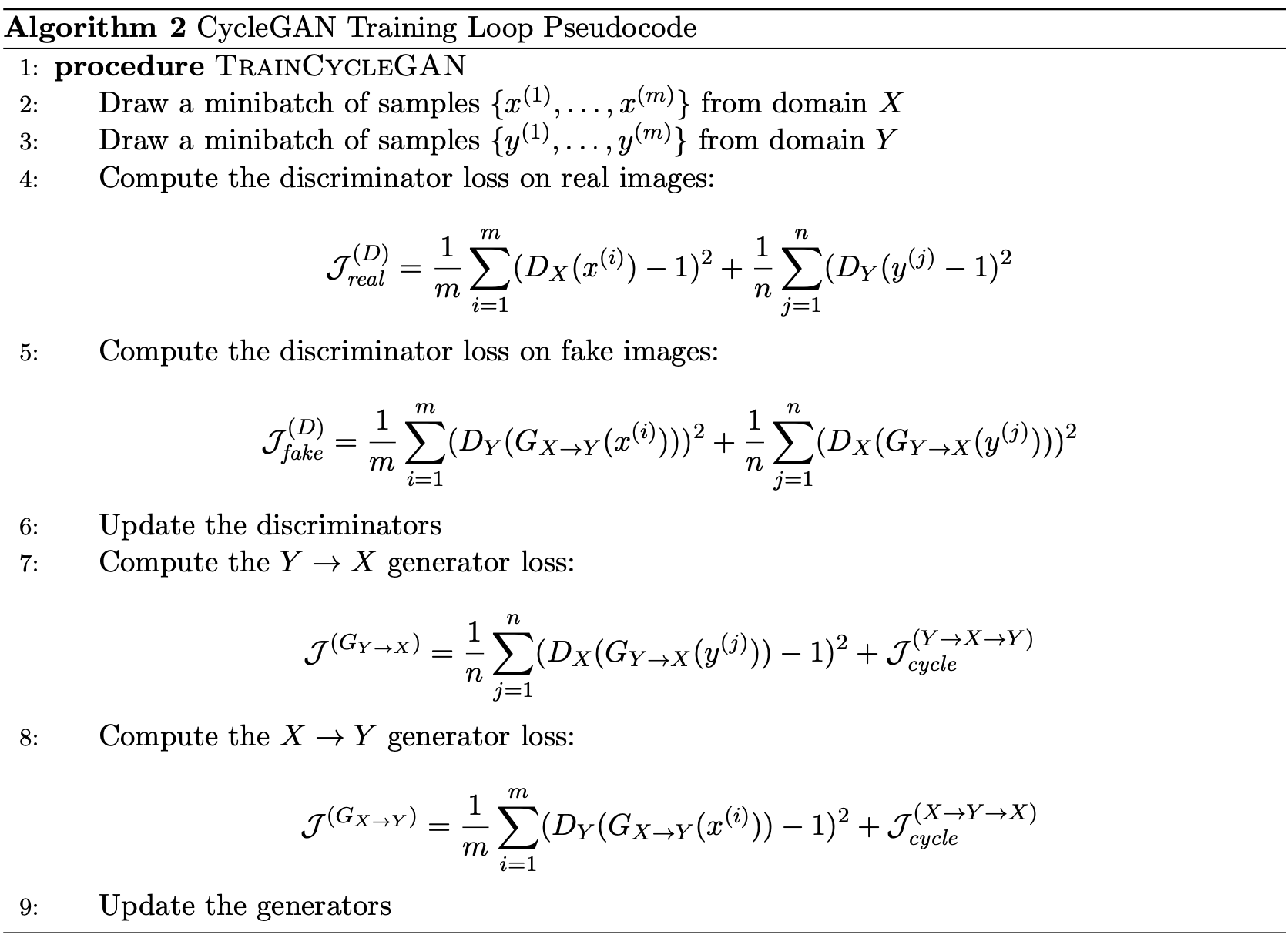

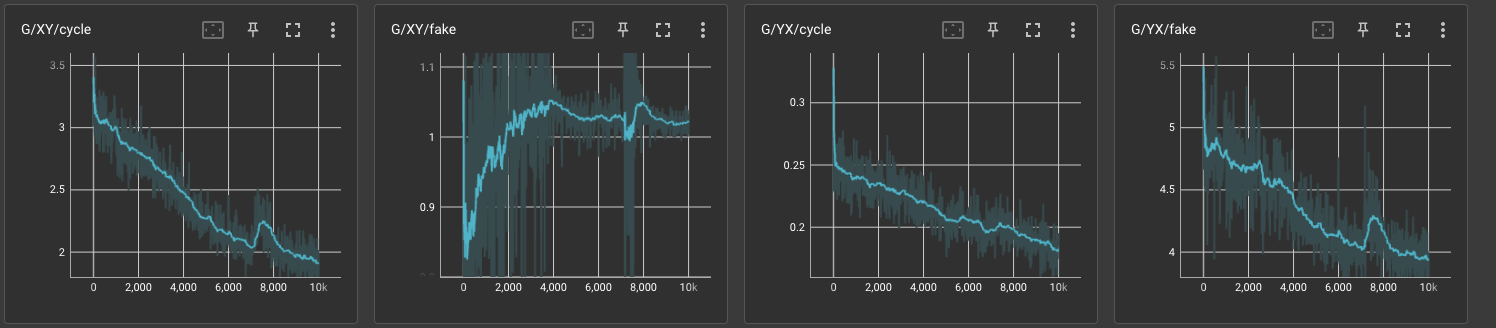

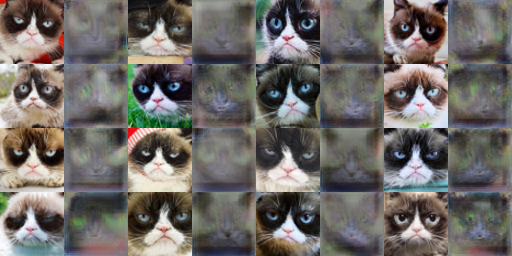

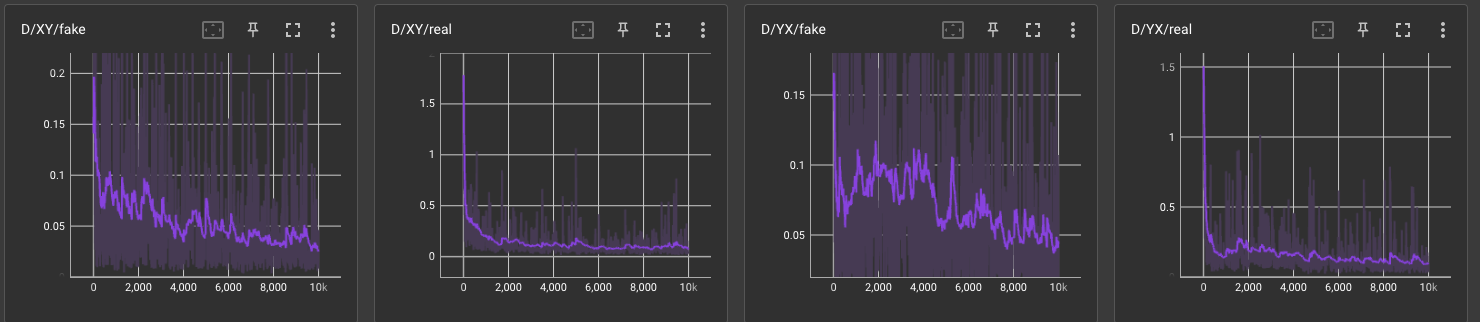

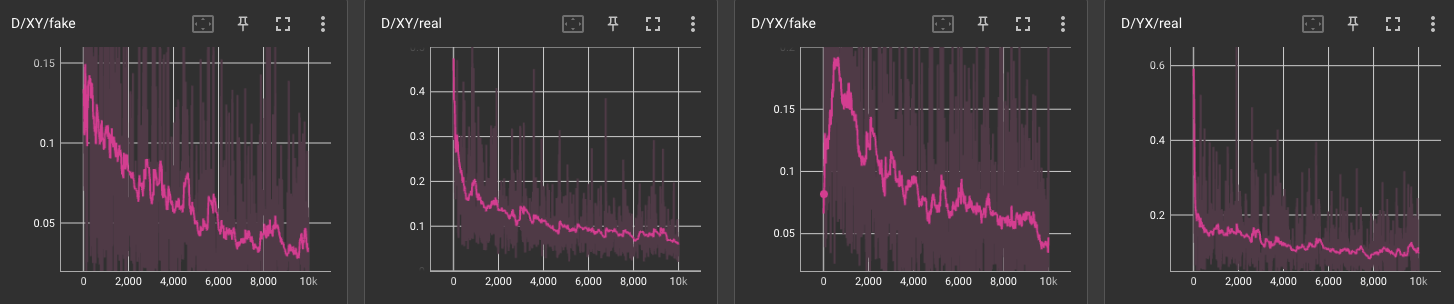

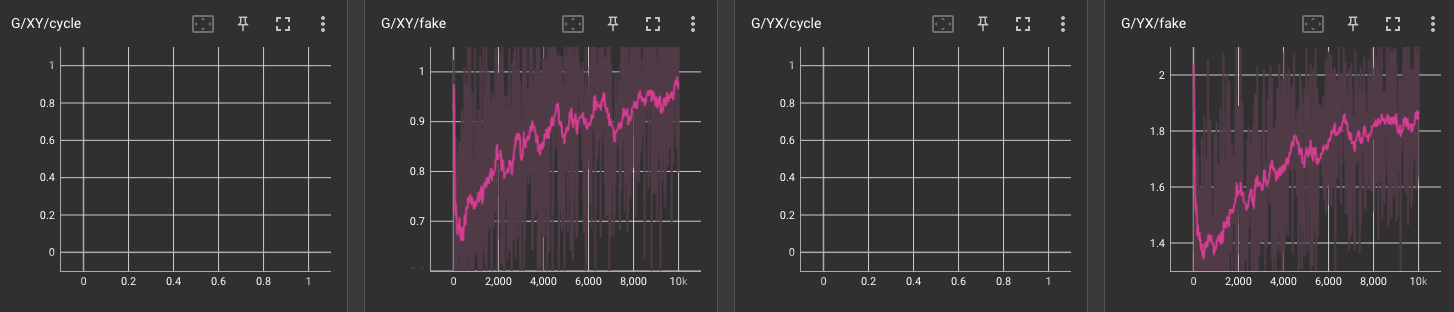

Below are the results on the grumpifyCat and apple2orange datasets.

The deluxe setting applies standard augmentation (random crop and random horizontal flip). The differentiable augmentations selected for training are random translation and cutout.

The images below are from iterations 800 to 1000.

Note: The model tries to preserve the shape of the object while changing its style/domain.
