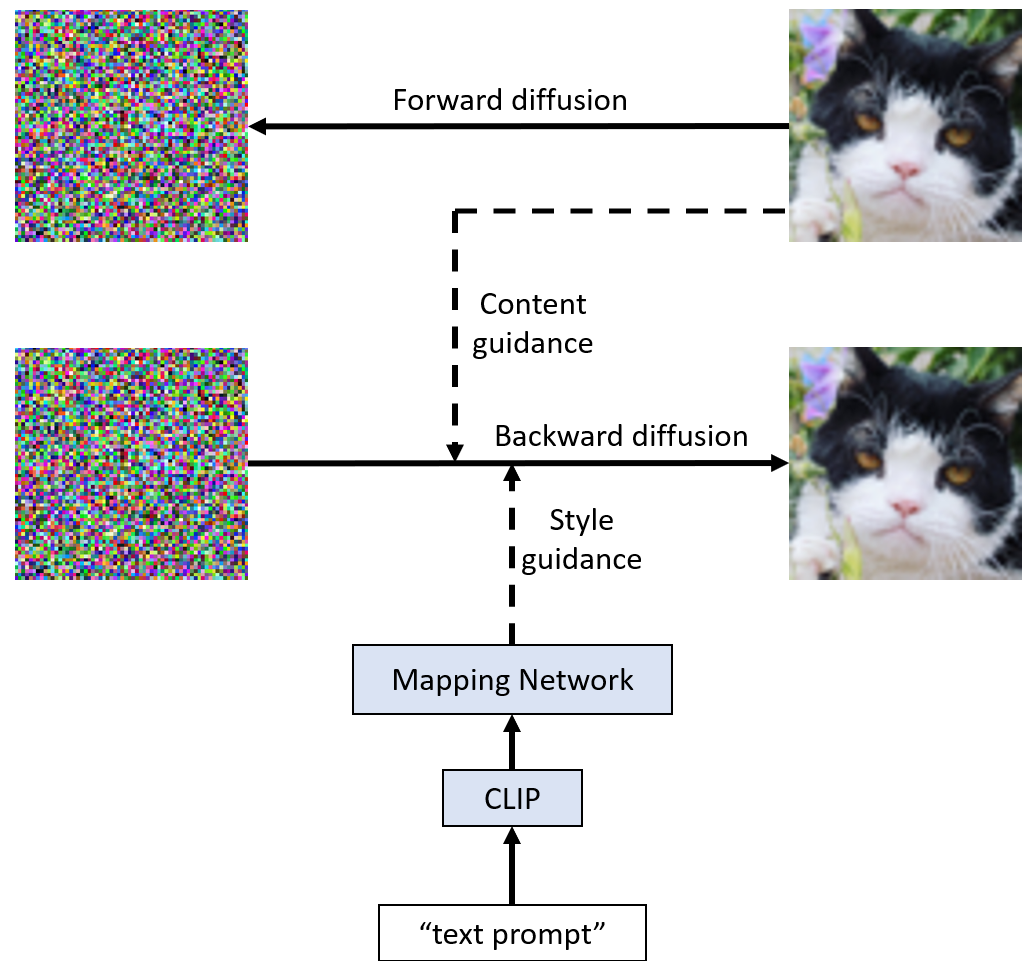

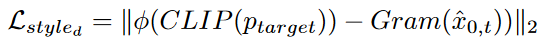

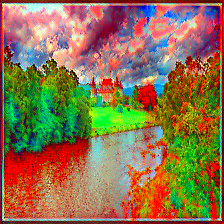

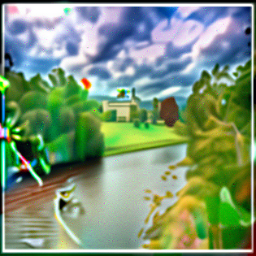

Artistic style transfer synthesizes artwork-like images by combining the content of one image with the artistic style of another. By leveraging CLIP (Contrastive Language-Image Pre-training) [Radford et al., 2021], previous work such as [Bai et al., 2023] and [Yang et al., 2023] demonstrated successful text-guided artistic style transfer. However, current methods have several limitations. A method based on arbitrary style transfer and AdaIN [Bai et al., 2023] achieves text-based style transfer, but because it involves no randomness, it can generate only one output for a given image and text prompt. In addition, although CLIP-guided models such as DiffusionCLIP [Kim et al., 2022] and CLIPstyler [Kwon and Ye, 2022] are trained with a CLIP-based style loss, they sometimes mistakenly transfer the content described by the text guidance into the synthesized image. This paper proposes diffusion-based text-guided style transfer using a style extraction network.
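To make the determinism limitation concrete, the following is a minimal sketch of adaptive instance normalization (AdaIN), the operation that the AdaIN-based approach builds on. It is an illustration under stated assumptions, not the implementation of any cited paper: feature maps are represented here as plain Python lists with one flat list per channel, and the name `adain` and the toy inputs are hypothetical. Each content channel is normalized and then rescaled to the mean and standard deviation of the matching style channel.

```python
from statistics import fmean, pstdev


def adain(content, style, eps=1e-5):
    """Align per-channel statistics of `content` to those of `style`.

    Both arguments are lists of channels, and each channel is a flat list
    of floats (an assumed stand-in for an encoder feature map).
    """
    stylized = []
    for c_chan, s_chan in zip(content, style):
        c_mean, c_std = fmean(c_chan), pstdev(c_chan)
        s_mean, s_std = fmean(s_chan), pstdev(s_chan)
        # Normalize the content channel, then apply the style scale and shift.
        stylized.append(
            [s_std * (x - c_mean) / (c_std + eps) + s_mean for x in c_chan]
        )
    return stylized


# Toy two-channel "feature maps" (made-up values for illustration only).
content = [[0.0, 1.0, 2.0, 3.0], [10.0, 10.5, 11.0, 11.5]]
style = [[5.0, 7.0, 9.0, 11.0], [-1.0, 0.0, 1.0, 2.0]]

stylized = adain(content, style)
```

Because the function contains no sampling step, calling it again with the same `content` and `style` returns exactly the same result, which is the single-output behavior described above. A diffusion-based generator, by contrast, starts from sampled noise and can therefore produce different outputs for the same inputs.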

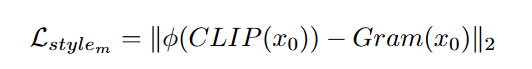

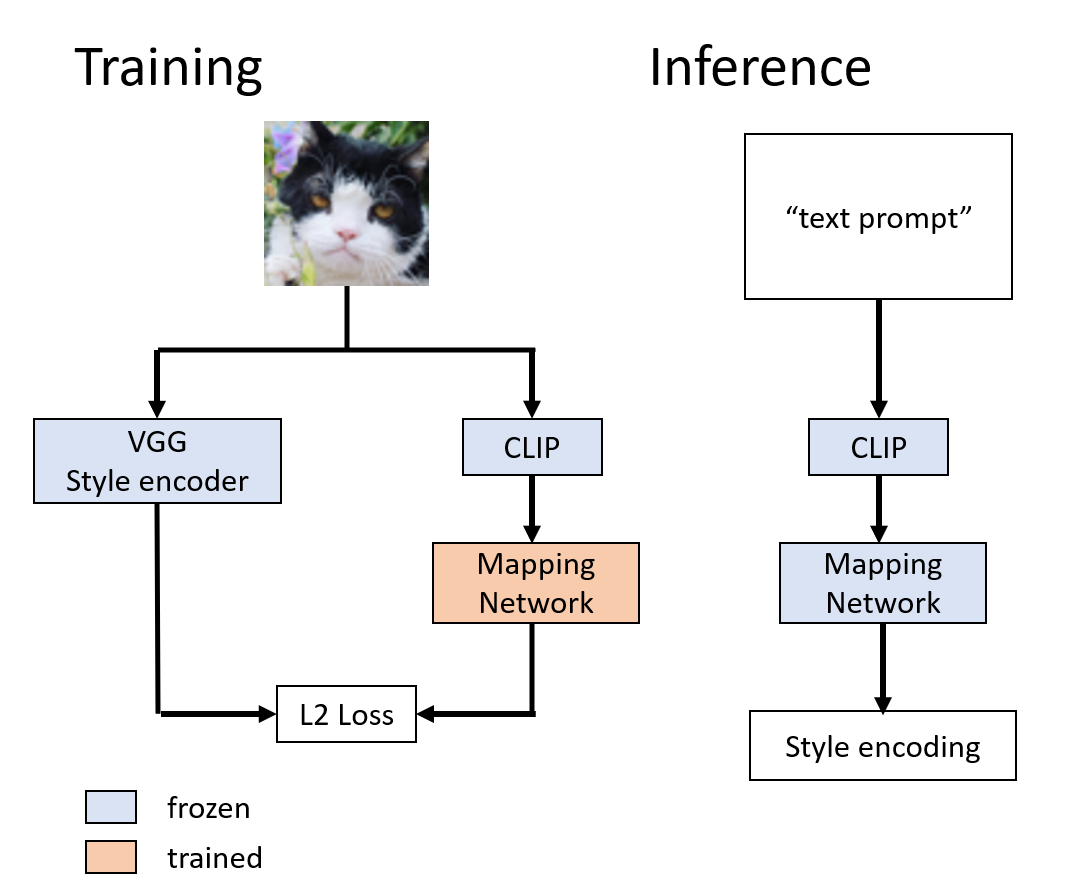

The novel contribution of this project is to use the style extraction model proposed in ITstyler [Bai et al., 2023] within a diffusion-based style transfer model. The style extraction model would enable the model to derive a style embedding from the CLIP encoding of the text input, which should reduce the erroneous transfer of the input text's content into the synthesized image.