This project focuses on domain adaptation: the goal is to train a model on a labeled source domain so that it generalizes well to a sparsely labeled target domain with a different data distribution.

The dataset consists of timestamped images of a stream network in the United States, captured hourly between mid-2018 and the end of 2021. Each image is associated with a stream flow value, which is the rate at which water flows through the river at a specific location and time. For some locations, however, stream flow values are unavailable for most of the recorded times.

The input to the problem is a set of labeled images from a source domain and a set of sparsely labeled images from a target domain. The aim is to learn a model that accurately predicts the labels of the unlabeled data in the target domain.

To transfer both images and labels from one domain to the other and adapt them to the new domain, I extended the CycleGAN model with a label prediction module. This module predicts the labels of the input images and maps them to the target domain. It is composed of a pretrained ResNet and fully connected layers.

For label prediction, I used the L1 loss, which measures the absolute difference between the predicted and ground-truth labels. Additionally, to ensure that the adapted labels can be transformed back to the source domain while staying close to the target label, I used a cycle-consistency loss for labels.
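The two label losses can be illustrated with a minimal sketch. It assumes one common reading of cycle consistency: a label is mapped source to target and back, and the round trip is compared with the original using L1. The functions `to_target` and `to_source` are hypothetical stand-ins for the learned label mappings, chosen only so the example runs; they are not the project's actual modules.

```python
from typing import Callable, Sequence


def l1_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute difference between predictions and ground truth."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def label_cycle_loss(
    labels: Sequence[float],
    to_target: Callable[[float], float],
    to_source: Callable[[float], float],
) -> float:
    """L1 distance between labels and their source -> target -> source round trip."""
    round_trip = [to_source(to_target(y)) for y in labels]
    return l1_loss(round_trip, labels)


# Hypothetical label mappers (assumptions for illustration only).
def to_target(y: float) -> float:
    return 2.0 * y + 1.0


def to_source(y: float) -> float:
    # Deliberately imperfect inverse (off by 0.5) so the cycle loss is non-zero.
    return (y - 1.0) / 2.0 + 0.5


source_flows = [1.0, 2.0, 4.0]       # made-up ground-truth flow values
predicted_flows = [1.5, 2.0, 3.0]    # made-up predictions

prediction_loss = l1_loss(predicted_flows, source_flows)
cycle_loss = label_cycle_loss(source_flows, to_target, to_source)
```

In training, terms like these would typically be added to the CycleGAN image losses with weighting factors; the weights used in this project are not stated here.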

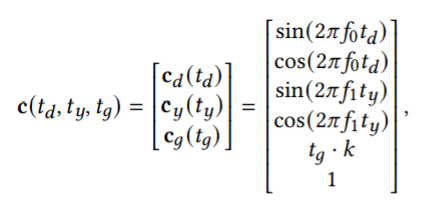

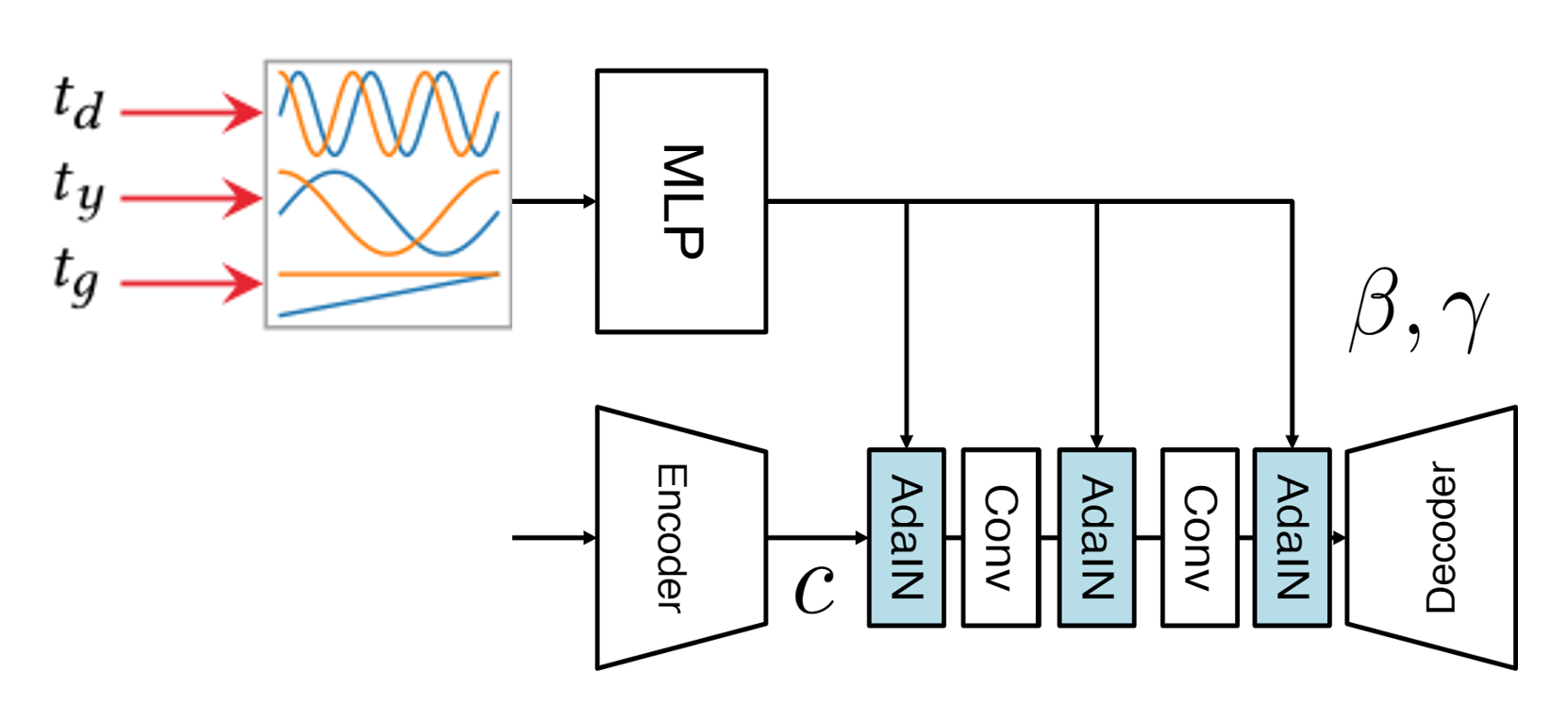

To generate an image for a specific time of day 𝑐_𝑑, day of year 𝑐_𝑦, and global trend 𝑐_𝑔, the model is conditioned on time signals. The encoding derives three conditioning signals from the input time: 𝑡_𝑑 (time of day), 𝑡_𝑦 (day of year), and 𝑡_𝑔 (global trend). In this encoding, 𝑓_0 matches the day cycle, 𝑓_1 = 𝑓_0/365.25 matches the year cycle, and the scaling constant is 𝑘 = 1×10^−2. The conditioning signals are then passed through an MLP, and the result is injected into the generator via AdaIN (adaptive instance normalization).
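As a rough illustration of this conditioning path, the sketch below encodes a timestamp and applies AdaIN to a feature map. The exact encoding expressions are not reproduced in this text, so the forms used here are assumptions: time is measured in days, each cyclic signal is a (sin, cos) pair, and the global trend is a linear ramp scaled by 𝑘. The MLP is a hypothetical one-hidden-layer network with random weights, not the trained one.

```python
import numpy as np

F0 = 2.0 * np.pi      # one cycle per day, assuming t is measured in days
F1 = F0 / 365.25      # one cycle per year
K = 1e-2              # scaling constant for the global trend


def encode_time(t_days: float) -> np.ndarray:
    """Return [day sin, day cos, year sin, year cos, trend] for a time in days."""
    return np.array([
        np.sin(F0 * t_days), np.cos(F0 * t_days),
        np.sin(F1 * t_days), np.cos(F1 * t_days),
        K * F1 * t_days,  # assumed linear form of the global trend
    ])


def adain(features: np.ndarray, gamma: np.ndarray, beta: np.ndarray,
          eps: float = 1e-5) -> np.ndarray:
    """Adaptive instance normalization on a (C, H, W) feature map."""
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)
    # Per-channel scale and shift come from the conditioning signal.
    return gamma[:, None, None] * normalized + beta[:, None, None]


rng = np.random.default_rng(0)
channels = 4
# Hypothetical MLP weights (random, for illustration only).
w1 = rng.normal(size=(16, 5))
w2 = rng.normal(size=(2 * channels, 16))


def style_from_time(t_days: float):
    """Map the time encoding to per-channel AdaIN scale and shift."""
    hidden = np.maximum(w1 @ encode_time(t_days), 0.0)  # ReLU
    gamma, beta = np.split(w2 @ hidden, 2)
    return gamma, beta


features = rng.normal(size=(channels, 8, 8))  # stand-in generator activations
gamma, beta = style_from_time(180.5)
styled = adain(features, gamma, beta)
```

With this form, two timestamps exactly one day apart share the same day-cycle components and differ only slightly in the year-cycle and trend components, which is what lets the generator separate daily from seasonal effects.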

I conducted my experiments using data from the following areas within the stream network: "West Brook Lower", which I will refer to as X, and "Avery Brook_Bridge", which I will refer to as Y.

I conducted an ablation study to evaluate the effect of temporal conditioning, comparing the time-conditioned model with a model that does not incorporate temporal information.

The generated images for different iterations of a fixed image are presented below:

The generated images for different times are displayed through the following interface. The real image is shown on the left, with its associated time and flow value displayed above it. A slider selects the time for which an image is generated. The generated image and its predicted flow are displayed on the right. Note that for the model without temporal conditioning, the results are not influenced by the selected time.

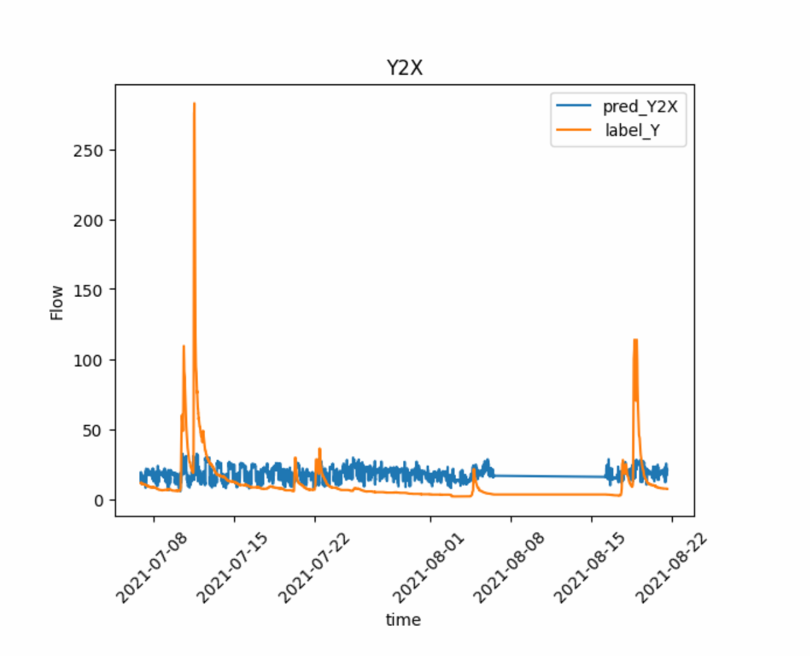

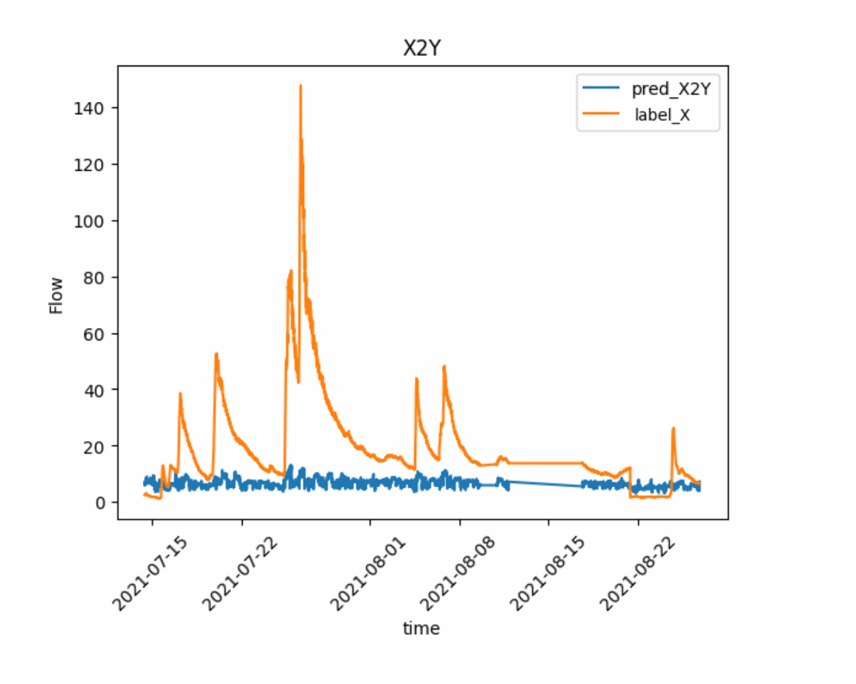

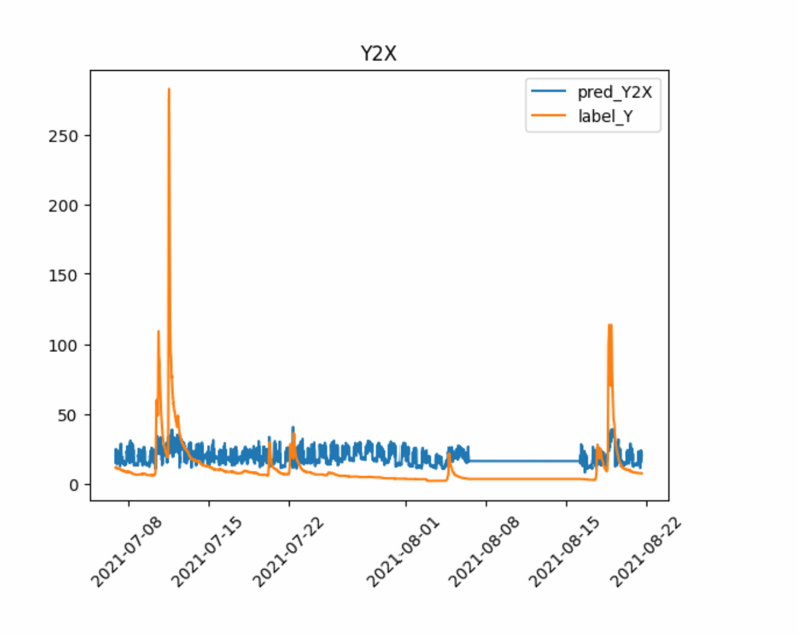

The predicted labels for each domain are as follows:

The generated images for different iterations of a fixed image are presented below:

The generated images for different times are displayed below:

As can be observed, the results of the time-conditioned model are influenced by the input time. For instance, the generated image for June has a green background, while the generated image for December has a background with fall colors.

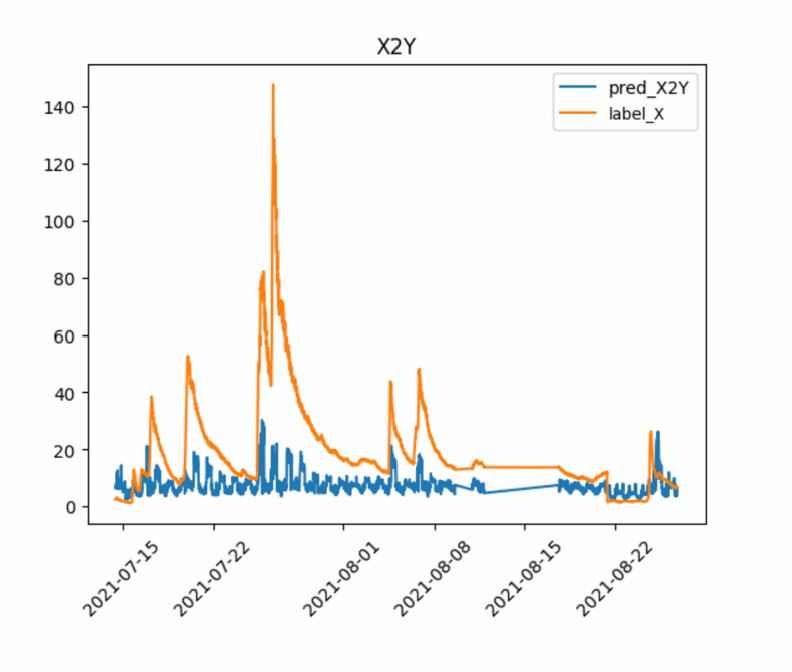

The predicted labels for each domain are as follows:

As can be observed, the model conditioned on the input time signals predicts the labels more accurately and captures the trends more effectively than the non-conditioned model.

Hoffman, Judy, Eric Tzeng, Taesung Park, Jun-Yan Zhu, Phillip Isola, Kate Saenko, Alexei Efros, and Trevor Darrell. "CyCADA: Cycle-consistent adversarial domain adaptation." In International Conference on Machine Learning, pp. 1989-1998. PMLR, 2018.

Härkönen, Erik, Miika Aittala, Tuomas Kynkäänniemi, Samuli Laine, Timo Aila, and Jaakko Lehtinen. "Disentangling random and cyclic effects in time-lapse sequences." ACM Transactions on Graphics (TOG) 41, no. 4 (2022): 1-13.