UIFusion: Stable Diffusion for UI Generation

Faria Huq

Dataset Description

I used the Rico and Screen2words datasets for this project. Rico is a dataset containing 70k+ screenshots of mobile applications. Screen2words is an extension of Rico that contains human-annotated summaries of the screens in the Rico dataset. I chose to work with Screen2words because of its rich human annotation. Because it was labelled by MTurk workers, it contains the abstract descriptions people usually use to describe screens. Hence, it captures a more natural style of interaction.
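As a rough illustration of how such summaries could be used to gather screens for one UI category, the sketch below filters (screen id, summary) pairs by keyword. The keyword lists, the `select_screens` helper, and the in-memory data layout are all assumptions made for this example; this report does not describe how screens were actually assigned to categories.

```python
# Hypothetical keyword lists per category (not taken from the project).
CATEGORY_KEYWORDS = {
    "sign up": ("sign up", "signup", "register", "create account"),
    "settings": ("settings", "preferences"),
    "cart": ("cart", "shopping bag"),
}


def select_screens(captions, category):
    """Return sorted screen ids whose summary mentions a keyword of `category`.

    `captions` is an iterable of (screen_id, summary) pairs; a screen may
    appear several times because each screen can have several summaries.
    """
    keywords = CATEGORY_KEYWORDS[category]
    selected = set()
    for screen_id, summary in captions:
        text = summary.lower()
        # Plain substring matching: simple, but it can over-match
        # (for example "cart" inside "cartoon").
        if any(keyword in text for keyword in keywords):
            selected.add(screen_id)
    return sorted(selected)


# Toy captions in the style of human-written screen summaries.
captions = [
    (101, "page displaying the status of cart"),
    (102, "page showing settings"),
    (103, "sign up page"),
    (103, "screen to create account for the app"),
    (104, "display of a music player"),
]

cart_ids = select_screens(captions, "cart")
signup_ids = select_screens(captions, "sign up")
```

Keyword matching is only one option; any such filter would need manual inspection, since abstract summaries can describe the same screen type in many different ways.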

Method & Configuration

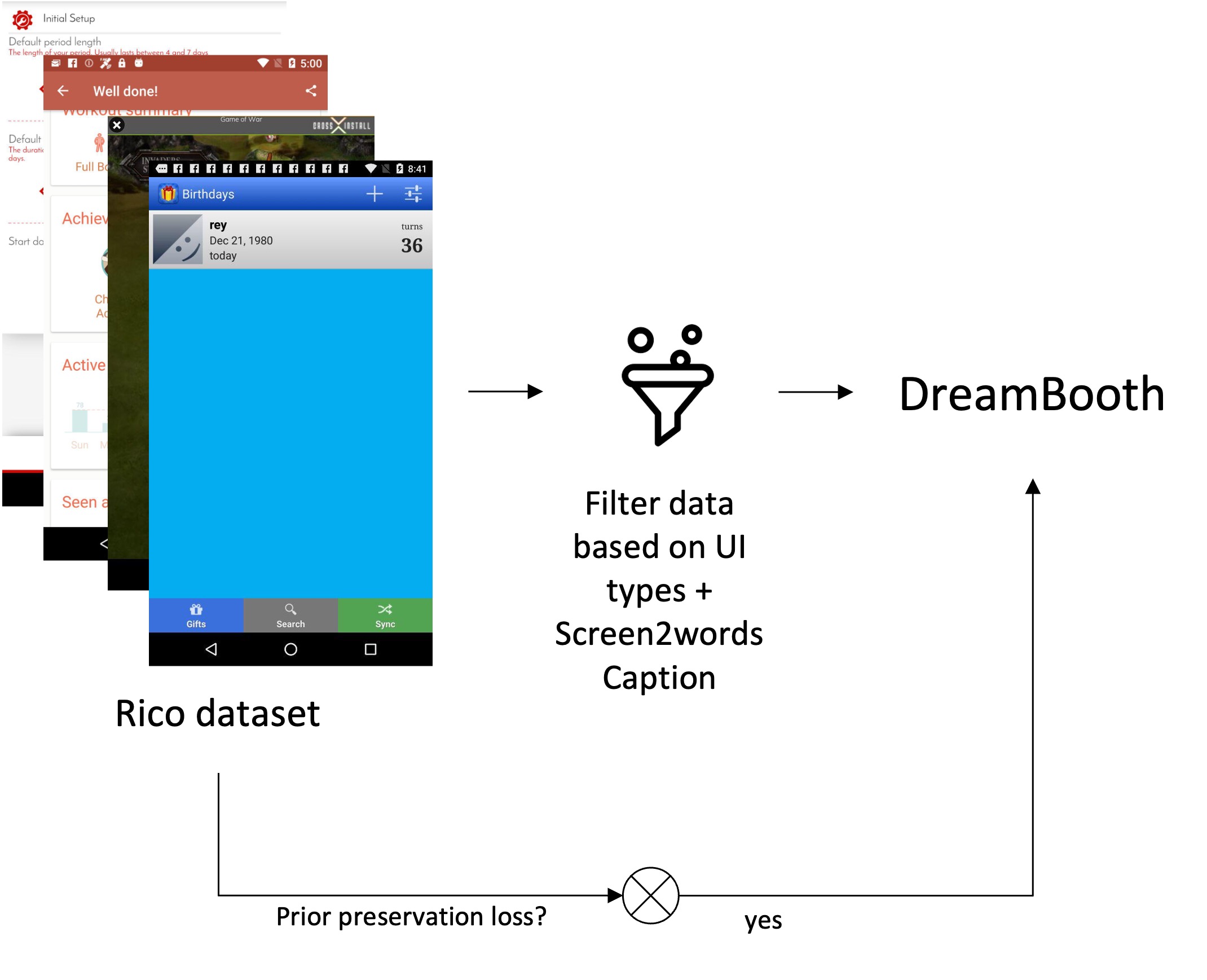

I used Dreambooth to finetune Stable Diffusion (Version 1.5, RunwayML) on specific UI categories (e.g: sign up, settings, cart screens) to analyze the result. The pipeline is shown below:

I experimented with both the basic setting and the prior preservation loss setting to understand the effect of language drift. For each configuration, the model was finetuned for 100 epochs with a batch size of 1.
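The difference between the two settings can be sketched as follows. This is a simplified illustration of the Dreambooth objective, not the training code used in this project: predictions and noise targets are flat lists of floats rather than tensors, and the names `mse`, `dreambooth_loss`, and `prior_weight` are chosen for this example. In the basic setting only the instance term is used; with prior preservation, a second term computed on generic class images generated by the original model is added to discourage language drift.

```python
def mse(pred, target):
    """Mean squared error between two equal-length lists of floats."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equal in length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def dreambooth_loss(instance_pred, instance_noise,
                    class_pred=None, class_noise=None, prior_weight=1.0):
    """Noise-prediction loss with an optional prior preservation term.

    instance_*: predicted and true noise for the finetuning images.
    class_*:    predicted and true noise for generic class images
                (omit both to get the basic setting).
    prior_weight: assumed weighting factor for the prior term.
    """
    loss = mse(instance_pred, instance_noise)
    if class_pred is not None and class_noise is not None:
        loss += prior_weight * mse(class_pred, class_noise)
    return loss


# Basic setting: instance term only.
basic = dreambooth_loss([1.0, 2.0], [0.0, 0.0])

# Prior preservation setting: instance term plus weighted class term.
with_prior = dreambooth_loss([1.0, 2.0], [0.0, 0.0],
                             class_pred=[1.0, 1.0], class_noise=[0.0, 0.0],
                             prior_weight=0.5)
```

A larger `prior_weight` pulls the finetuned model toward the original model's notion of the class, at the cost of fitting the new images less closely.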

Result

A few examples for each category are shown below. More results can be accessed at this folder. The finetuned model checkpoints can be accessed from this Google Drive: link

Input Prompt: "page displaying the status of cart"

Input Prompt: "page showing settings"

Input Prompt: "sign up page"

Conclusion

As the results show, finetuning Stable Diffusion with Dreambooth did not improve the model's accuracy for UI generation by a significant margin. In the best case, it learned the layout organization a little better, but the results were still far from perfect. There are several possible reasons:

1) The prompts were short and abstract. I have not tested more granular descriptions, which might yield better results.
2) Dreambooth requires multiple images of the same object as input, whereas I used images from the same category, which does not necessarily map to the same region of the model's embedding space. This may be another reason the model did not perform better even after finetuning.
3) The model might be underfitted.
4) UI images contain a higher percentage of textual information, and Stable Diffusion still struggles to generate good images when they contain text.

In conclusion, Stable Diffusion is still not well equipped to generate UI images. It requires some modification, probably in conjunction with code understanding, to generate UI images that can be useful to professional designers.