Joshua Cao | Carnegie Mellon University

About Course

16-726 Learning-Based Image Synthesis / Spring 2022 is led by Professor Jun-yan Zhu, and assisted by TAs Sheng-Yu Wang and Zhi-Qiu Lin.

This course introduces machine learning methods for image and video synthesis. The objectives of synthesis research vary from modeling statistical distributions of visual data, through realistic picture-perfect recreations of the world in graphics, and all the way to providing interactive tools for artistic expression. Key machine learning algorithms will be presented, ranging from classical learning methods (e.g., nearest neighbor, PCA, Markov Random Fields) to deep learning models (e.g., ConvNets, deep generative models, such as GANs and VAEs). We will also introduce image and video forensics methods for detecting synthetic content. In this class, students will learn to build practical applications and create new visual effects using their own photos and videos.

Assignment Summary

| Assignment | Topic | Abstract | Reference |
|---|---|---|---|
| A1 | Colorizing the Prokudin-Gorskii Photo Collection | Implement SSD, pyramid structure, USM, auto crop, contrast methods | Dataset, USM, Hough Transform |
| A2 | Gradient Domain Fusion | | |
| A3 | When Cats meet GANs | | |
| A4 | Neural Style Transfer | | |
| A5 | GAN Photo Editing | | |

Copyright

All datasets, teaching resources and trained networks on this page are copyrighted by Carnegie Mellon University and published under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. This means that you must attribute the work in the manner specified by the authors, you may not use this work for commercial purposes, and if you alter, transform, or build upon this work, you may distribute the resulting work only under the same license.

Assignment #1

Introduction

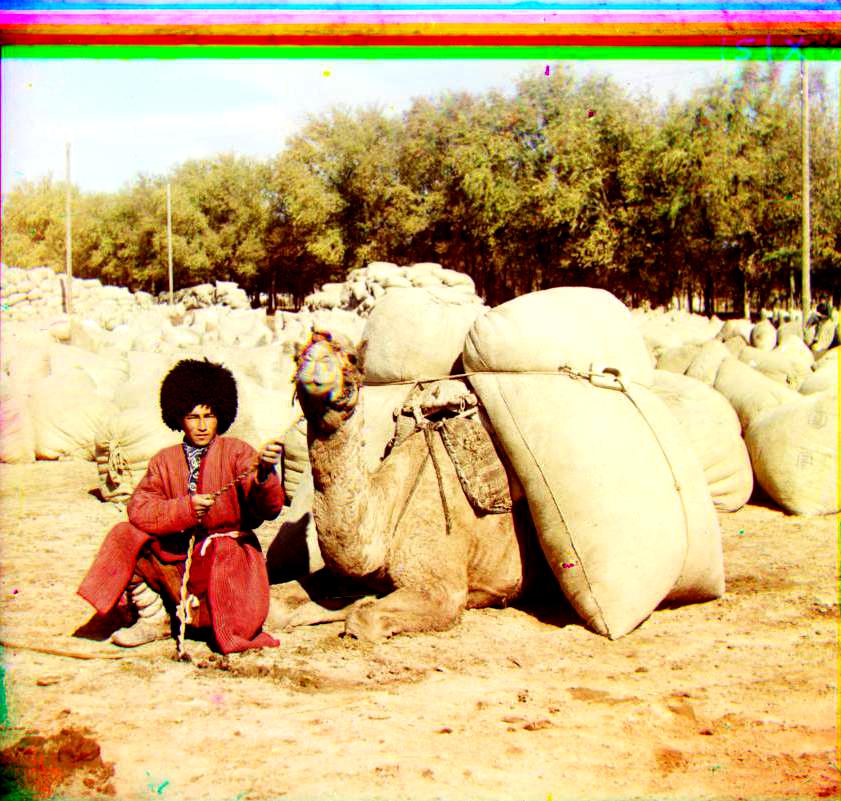

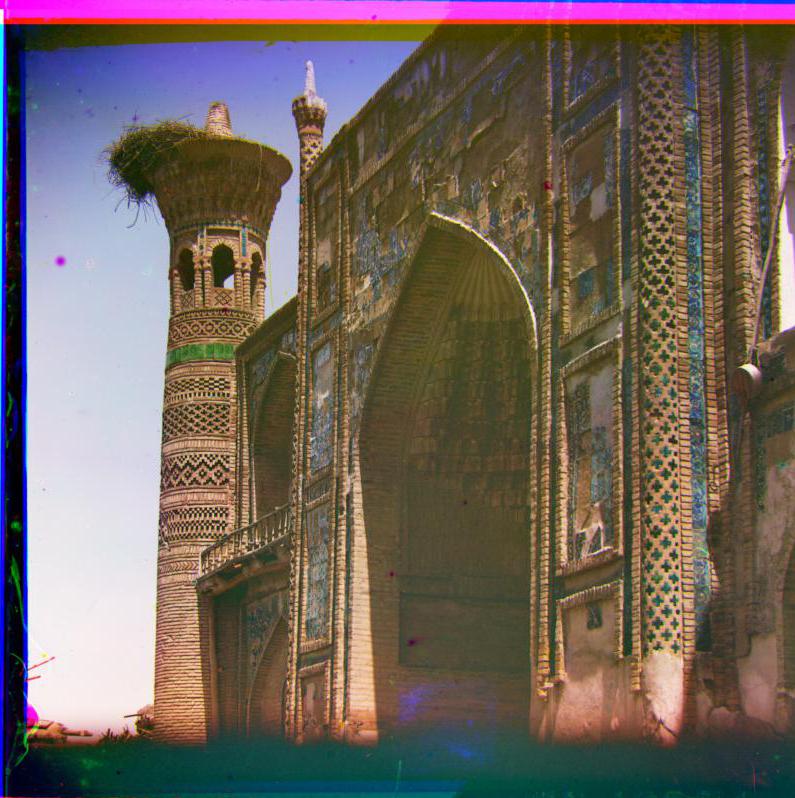

The Prokudin-Gorskii image collection from the Library of Congress is a series of glass plate negative photographs taken by Sergei Mikhailovich Prokudin-Gorskii. To view these photographs in color digitally, one must overlay the three images and display them in their respective RGB channels. However, due to the technology used to take these images, the three photos are not perfectly aligned. The goal of this project is to automatically align, clean up, and display a single color photograph from a glass plate negative.

Direct Method

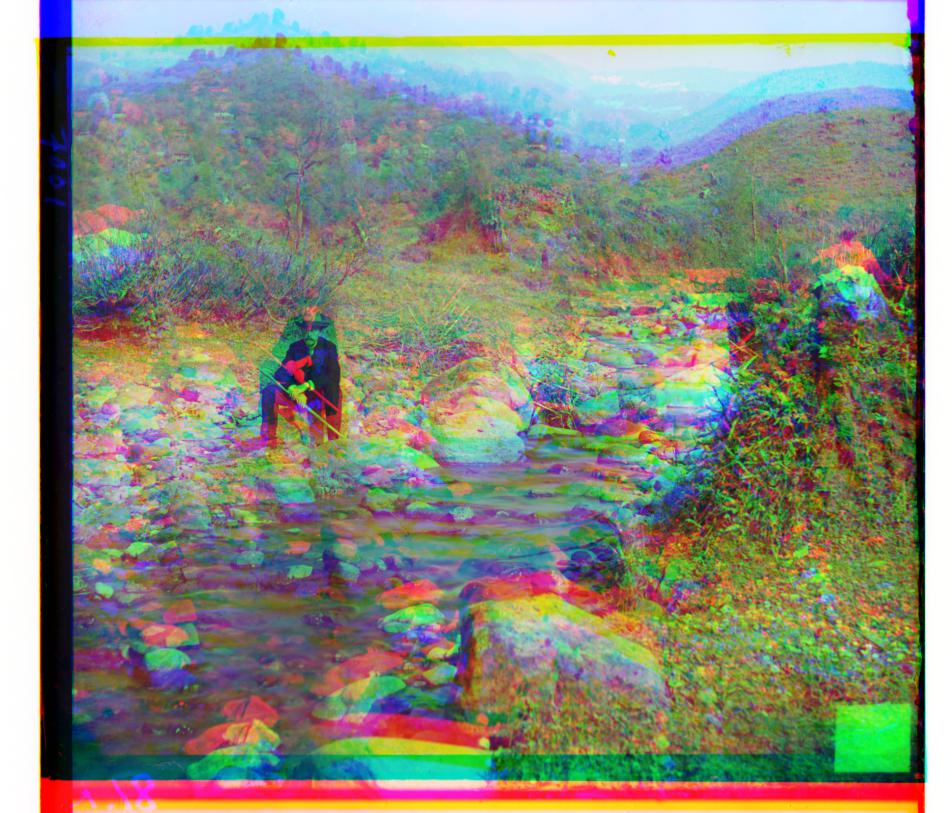

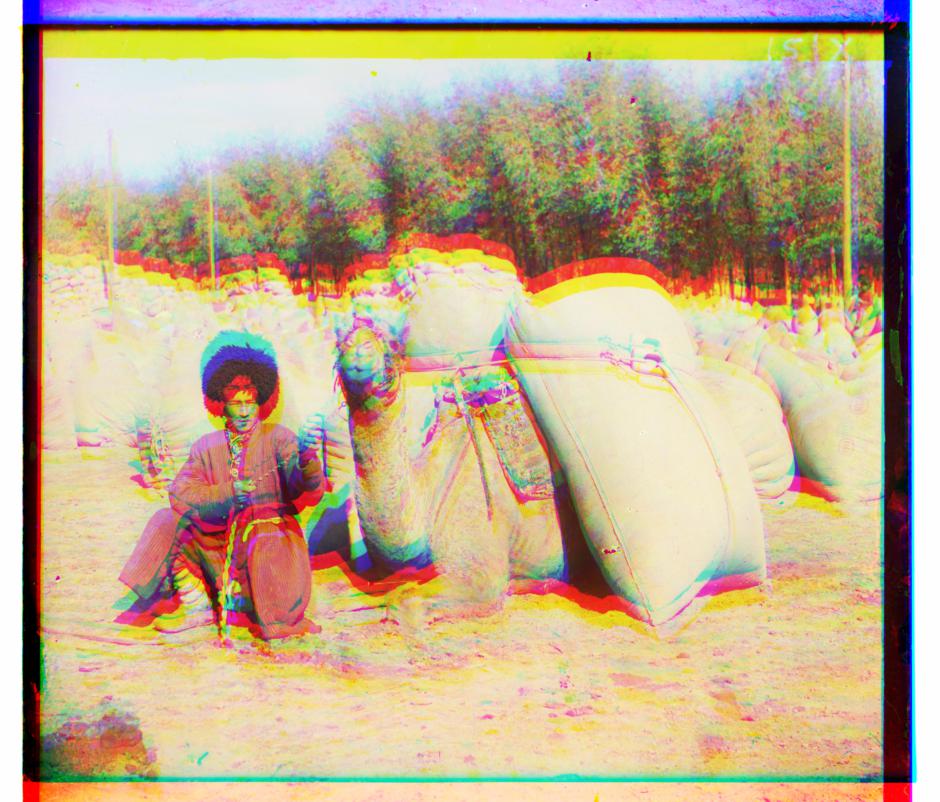

Before diving into alignment algorithms, I first stacked the R, G and B images directly to get an intuitive feel for the task; this also gives a baseline for judging how much the algorithm improves on it. The dataset contains 1 small JPEG image and 9 large TIFF images. Their directly stacked results are shown below:
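A minimal sketch of this direct-stacking baseline, assuming each scan is a 2-D grayscale NumPy array whose three equal-height thirds are the blue, green and red exposures from top to bottom (the usual layout of the Prokudin-Gorskii plates). The function names are illustrative, not the original code.

```python
import numpy as np


def split_plate(plate: np.ndarray):
    """Split a glass-plate scan into its three exposures (assumed B, G, R from the top)."""
    h = plate.shape[0] // 3          # height of one exposure; leftover rows are dropped
    b = plate[:h]
    g = plate[h:2 * h]
    r = plate[2 * h:3 * h]
    return b, g, r


def stack_direct(plate: np.ndarray) -> np.ndarray:
    """Baseline: put the three exposures into R, G, B channels without any alignment."""
    b, g, r = split_plate(plate)
    return np.dstack([r, g, b])      # shape (h, w, 3), channel order R, G, B
```

Because nothing is shifted, any misregistration between the exposures shows up directly as color fringes, which is what makes this a useful reference point.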

SSD & NCC Alignment

I implemented both SSD (sum of squared differences) and NCC (normalized cross-correlation) as similarity metrics for alignment, with a search range of [-15, 15] pixels, and both work well on the small JPEG image, as shown below. To speed up the computation and avoid comparing the noisy edges, I also crop 20% from each side of the image before scoring. For the larger images, however, this search range is not large enough, and the exhaustive search takes extremely long. This is where the pyramid structure comes in.
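The exhaustive search can be sketched as follows. This is an illustrative version, not the original implementation: `np.roll` is assumed for shifting (it wraps pixels around, which is harmless as long as the 20% interior crop is wider than the search radius), and the SSD score is negated so that "larger is better" holds for both metrics.

```python
import numpy as np


def crop_interior(img, frac=0.2):
    """Drop `frac` of the height and width from every side."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def ssd(a, b):
    """Sum of squared differences (lower is better)."""
    return np.sum((a - b) ** 2)


def ncc(a, b):
    """Normalized cross-correlation of zero-mean patches (higher is better)."""
    a = a - a.mean()
    b = b - b.mean()
    return np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12)


def align_exhaustive(moving, ref, radius=15, metric="ssd"):
    """Return the (dy, dx) in [-radius, radius]^2 that best aligns `moving` to `ref`."""
    ref_c = crop_interior(ref)
    best_shift, best_score = (0, 0), -np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            cand = crop_interior(np.roll(moving, (dy, dx), axis=(0, 1)))
            # negate SSD so that "larger is better" holds for both metrics
            score = -ssd(cand, ref_c) if metric == "ssd" else ncc(cand, ref_c)
            if score > best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift
```

With B as the reference, `g_shift = align_exhaustive(g, b)` gives the shift, and `np.roll(g, g_shift, axis=(0, 1))` is the aligned green channel. The cost grows with (2·radius+1)² full-image comparisons, which is why this alone becomes too slow for the large TIFF scans.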

Pyramid Alignment

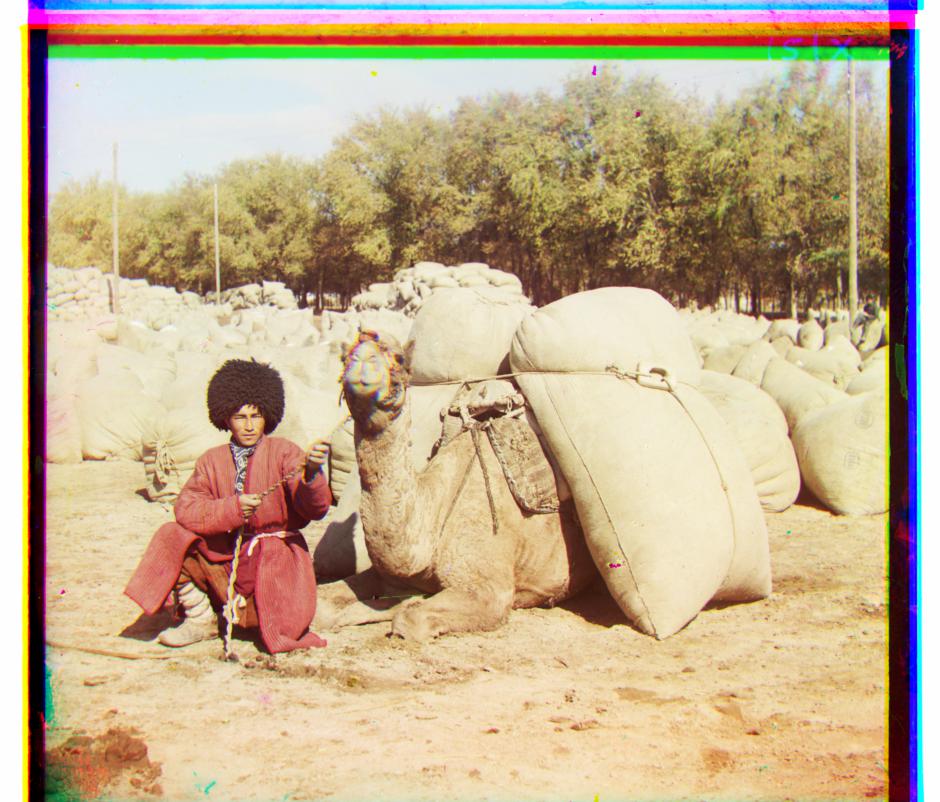

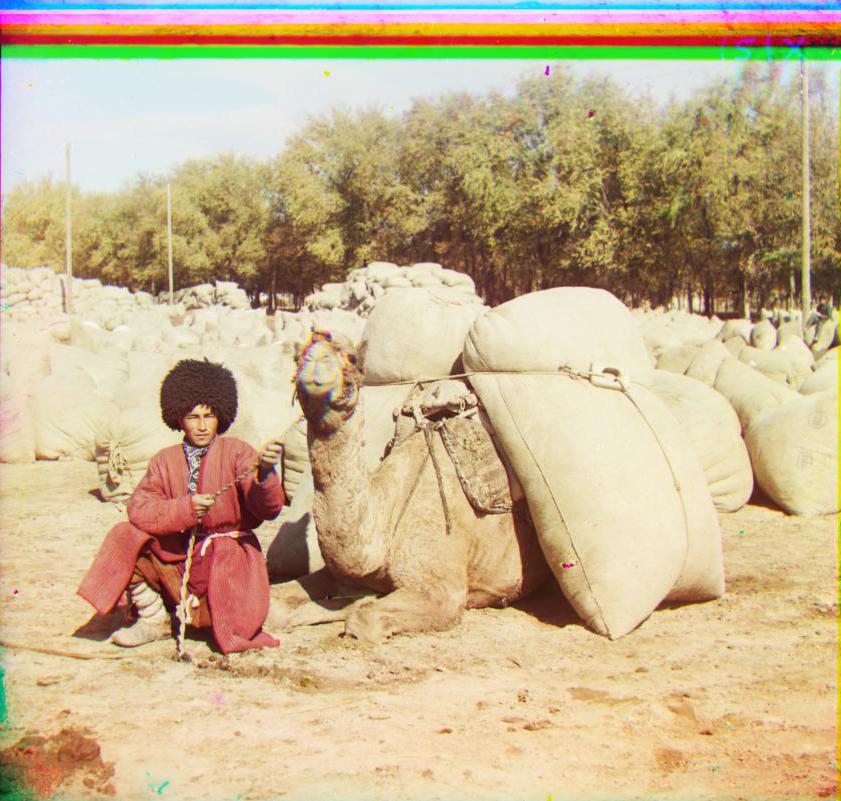

I use log2(min(image.shape)) to determine the maximum number of pyramid levels an image can have, and only apply the pyramid to images larger than 512×512; small images directly use the [-15, 15] SSD search. For large images, the coarsest level is around 256 pixels (2^8), and the search continues all the way up to the original resolution, because I found that skipping the final level always leaves a visible color bias (misalignment between color channels is easy to notice even when it is only a few pixels).

My first implementation took 180 s per image. To speed it up, I shrink the search range by 2 at each finer level, which brings the time down to 55 s per image. This works because the center of the search window is set by the previous, coarser level, so the deeper the search goes, the smaller the range it needs to find the best alignment. The output is shown below. Most images are aligned quite well, but some, such as the piece of Sergei Mikhailovich Prokudin-Gorskii, come out even worse than direct stacking. This is because the brightness differs across the channels, so I use a USM (Unsharp Mask) algorithm to fix the issue.
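A coarse-to-fine sketch under a few assumptions: each level halves the resolution by 2×2 averaging (a simple stand-in for blur plus subsampling), the coarsest level has its short side near 256 pixels, "shrink by 2" is read as halving the radius down to a hypothetical floor `min_radius`, and the shift found at one level is doubled to center the search at the next. The helper names are hypothetical.

```python
import numpy as np


def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (stand-in for blur + subsample)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def ssd_search(moving, ref, center, radius, frac=0.2):
    """Exhaustive SSD search in a (2*radius+1)^2 window around `center`, on the cropped interior."""
    h, w = ref.shape
    dh, dw = int(h * frac), int(w * frac)
    ref_c = ref[dh:h - dh, dw:w - dw]
    best, best_err = center, np.inf
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            cand = np.roll(moving, (dy, dx), axis=(0, 1))[dh:h - dh, dw:w - dw]
            err = np.sum((cand - ref_c) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


def pyramid_align(moving, ref, coarse_size=256, radius=15, min_radius=2):
    """Coarse-to-fine SSD alignment; returns the (dy, dx) shift at full resolution."""
    if min(ref.shape) <= 512:                       # small images: single-level search
        return ssd_search(moving, ref, (0, 0), radius)
    # number of halvings that bring the short side down to roughly coarse_size
    levels = int(np.log2(min(ref.shape))) - int(np.log2(coarse_size))
    pyr_m, pyr_r = [moving], [ref]
    for _ in range(levels):
        pyr_m.append(downsample(pyr_m[-1]))
        pyr_r.append(downsample(pyr_r[-1]))
    shift, r = (0, 0), radius
    for level in range(levels, -1, -1):             # coarsest level first
        shift = ssd_search(pyr_m[level], pyr_r[level], shift, r)
        if level > 0:
            shift = (2 * shift[0], 2 * shift[1])    # carry the estimate to the finer level
            r = max(r // 2, min_radius)             # finer levels only need to refine
    return shift
```

Searching ±15 at a level downsampled by 2^k covers roughly ±15·2^k pixels at full resolution, while the finest levels only pay for a small window.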

Extra Credits

USM (Unsharp Mask)

The USM algorithm sharpens or softens the edges of an image, which makes the SSD difference more reliable and leads to better alignment. It is applied at every recursion of the pyramid alignment. It first applies a Gaussian blur to the single-channel (grayscale) image and subtracts the blurred result from the original. It then takes the regions where this difference exceeds a threshold, multiplies them by a constant, and subtracts them from the original image. I subtract rather than add because some edges need to be softened instead of sharpened: a few distracting edges stand out so strongly in the original image that they lead SSD to the wrong alignment. USM noticeably improves the result for this image:
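A sketch of the thresholded unsharp mask described above, assuming float images and SciPy's `gaussian_filter`. The values of `sigma`, `amount` and `threshold` are hypothetical placeholders, and comparing `|detail|` against the threshold is an assumption; `soften=True` subtracts the scaled detail as in the text, while `soften=False` gives classic sharpening.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask(img, sigma=2.0, amount=0.5, threshold=0.05, soften=True):
    """Threshold-gated unsharp mask on a single-channel float image."""
    detail = img - gaussian_filter(img, sigma=sigma)   # high-frequency (edge) content
    mask = np.abs(detail) > threshold                  # only touch strong edges
    sign = -1.0 if soften else 1.0                     # subtract to soften, add to sharpen
    return img + sign * amount * detail * mask
```

Flat regions have near-zero detail and pass through unchanged; only edges whose detail exceeds the threshold are attenuated (or boosted), which is what changes the SSD landscape.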

Crop Image

To remove the borders, I use two cropping methods. The first keeps only the area where all three channels have content, removing the blank regions introduced by alignment; this is done using the shift of each channel. However, this method cannot handle regions that were already black or white at the edges of the original plate. For those, I use an MSE (mean squared error) method: I compute an error for each row and each column, and when three adjacent rows or columns all fall below a threshold, that area is treated as border and cropped. The result is shown below:
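Both cropping strategies can be sketched as below. The shift-based crop assumes each channel was moved with a wrap-around shift of `(dy, dx)` relative to a reference channel. For the MSE crop, the text does not say what each row's error is measured against, so this sketch assumes the mean squared deviation of a row (or column) from its own mean, which is near zero for uniformly black or white borders; the threshold and the three-line run length are parameters, and all names are hypothetical.

```python
import numpy as np


def crop_by_shifts(img, shifts):
    """Keep only the region where every channel has real content.

    `shifts` lists the wrap-around (dy, dx) applied to each non-reference channel;
    the reference channel is treated as (0, 0).
    """
    dys = [dy for dy, _ in shifts] + [0]
    dxs = [dx for _, dx in shifts] + [0]
    top, bottom = max(dys), -min(dys)    # positive dy wraps rows into the top, negative into the bottom
    left, right = max(dxs), -min(dxs)
    h, w = img.shape[:2]
    return img[top:h - bottom, left:w - right]


def border_width(errors, threshold, run=3):
    """Width of the leading run of low-error lines, counted only if at least `run` long."""
    n = 0
    while n < len(errors) and errors[n] < threshold:
        n += 1
    return n if n >= run else 0


def crop_uniform_borders(img, threshold=1e-3, run=3):
    """Crop nearly constant (e.g. black or white) rows and columns at the image edges."""
    gray = img.mean(axis=2) if img.ndim == 3 else img
    row_err = gray.var(axis=1)   # assumed error: MSE of each row about its own mean
    col_err = gray.var(axis=0)   # same for each column
    top = border_width(row_err, threshold, run)
    bottom = border_width(row_err[::-1], threshold, run)
    left = border_width(col_err, threshold, run)
    right = border_width(col_err[::-1], threshold, run)
    h, w = gray.shape
    return img[top:h - bottom, left:w - right]
```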

Add Contrast

The contrast method is straightforward: I compute the cumulative histogram of the image, map the intensities at 5% and 95% to 0 and 255 respectively, and linearly stretch the values in between, which increases the contrast of the main image.
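The stretch can be written directly from the cumulative histogram. This sketch assumes a `uint8` image and uses a single histogram over all channels; the 5% / 95% cutoffs come from the text, while the rounding and clipping details are assumptions.

```python
import numpy as np


def stretch_contrast(img, low=0.05, high=0.95):
    """Linearly map the intensities at the `low`/`high` points of the cumulative
    histogram to 0 and 255, clipping everything outside that range."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist) / img.size
    lo = int(np.searchsorted(cdf, low))    # first intensity whose CDF reaches `low`
    hi = int(np.searchsorted(cdf, high))   # first intensity whose CDF reaches `high`
    if hi <= lo:                           # (nearly) constant image: nothing to stretch
        return img.copy()
    out = (img.astype(np.float64) - lo) * 255.0 / (hi - lo)
    return np.clip(np.round(out), 0, 255).astype(np.uint8)
```

Clipping the darkest and brightest 5% means a few extreme pixels (dust, plate edges) cannot prevent the rest of the range from being stretched.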

Other Datasets

I also found some other similar datasets with fairly large TIFF images to test the algorithm on. The results are shown below:

Assignment #2

Introduction

This project explores gradient-domain processing for image blending, tone mapping and non-photorealistic rendering, focusing mainly on Poisson blending. The tasks include a toy gradient-minimization problem, Poisson blending over 4-connected neighbours, mixed-gradient Poisson blending, and a Color2Gray method that preserves grayscale intensity. The whole project is implemented in Python.

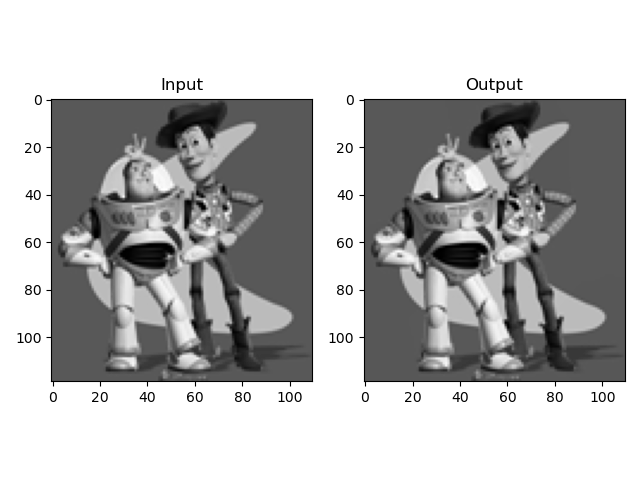

Toy Problem

The toy problem is a simplified version of Poisson blending, so it is a good way to understand the full algorithm. It consists of three objectives: match the x-gradients and the y-gradients of the source image s, and pin the top-left pixel to s so that the solution is unique (gradients alone only determine the image up to a constant):

$$\big((v(x+1,y)-v(x,y)) - (s(x+1,y)-s(x,y))\big)^2$$

$$\big((v(x,y+1)-v(x,y)) - (s(x,y+1)-s(x,y))\big)^2$$

$$\big(v(1,1)-s(1,1)\big)^2$$

We loop over every pixel of the source image and use these equations to build A and b. Solving Av = b in the least-squares sense gives the synthesized image v. For an H×W grayscale image there are H·W unknowns and at most 2·H·W+1 equations (one x-gradient and one y-gradient term per pixel, plus the corner constraint), so A is (2·H·W+1) × (H·W) and b is (2·H·W+1) × 1. The result is essentially a test of whether we can recover the original image from its gradients plus a single pixel value, as shown below:
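A loop-based sketch of the toy problem, assuming SciPy's sparse matrices. Rows are allocated for 2·H·W+1 equations; the rows that end up unused (pixels on the right and bottom edges have no neighbour in that direction) simply stay all-zero. As an assumption, the least-squares problem is solved through the normal equations with `spsolve`; `scipy.sparse.linalg.lsqr` would be an equally valid choice.

```python
import numpy as np
from scipy.sparse import lil_matrix
from scipy.sparse.linalg import spsolve


def toy_reconstruct(s):
    """Rebuild a grayscale image v from the x/y gradients of s plus its top-left pixel."""
    H, W = s.shape
    idx = np.arange(H * W).reshape(H, W)       # pixel (y, x) -> column index in A
    A = lil_matrix((2 * H * W + 1, H * W))
    b = np.zeros(2 * H * W + 1)
    e = 0                                      # next free equation (row) index
    for y in range(H):
        for x in range(W):
            if x + 1 < W:   # (v(x+1,y) - v(x,y)) should match (s(x+1,y) - s(x,y))
                A[e, idx[y, x + 1]] = 1
                A[e, idx[y, x]] = -1
                b[e] = s[y, x + 1] - s[y, x]
                e += 1
            if y + 1 < H:   # (v(x,y+1) - v(x,y)) should match (s(x,y+1) - s(x,y))
                A[e, idx[y + 1, x]] = 1
                A[e, idx[y, x]] = -1
                b[e] = s[y + 1, x] - s[y, x]
                e += 1
    A[e, idx[0, 0]] = 1                        # pin the top-left pixel: v = s there
    b[e] = s[0, 0]
    A = A.tocsr()
    # least squares via the normal equations A^T A v = A^T b (all-zero rows add nothing)
    v = spsolve((A.T @ A).tocsc(), A.T @ b)
    return v.reshape(H, W)
```

If the construction is right, `toy_reconstruct(s)` returns `s` up to floating-point error, which is exactly the check the toy problem is meant to provide.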

I implemented this with both a loop and a non-loop (vectorized) method. Interestingly, the loop version takes only about 0.4 s, whereas the non-loop version, which one would expect to be faster in Python, takes about 10 s. I suspect the main reason is that arithmetic on sparse matrices is more expensive than directly assigning values to coordinates: in the non-loop version I build the sparse matrix with lil_matrix and construct A using np.roll and np.transpose. In the end I used the loop method for the rest of the assignment.
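For comparison, one common way to vectorize the construction without doing arithmetic on sparse matrices is to build the row, column and value index arrays with NumPy and hand them to `scipy.sparse.coo_matrix` in a single call. This is an alternative sketch, not the non-loop method described above; it produces the same set of toy-problem equations, in a different row order and without empty rows.

```python
import numpy as np
from scipy.sparse import coo_matrix


def toy_system_vectorized(s):
    """Build the toy-problem A and b from index arrays instead of per-element assignment."""
    H, W = s.shape
    idx = np.arange(H * W).reshape(H, W)
    # x-gradient equations: one per horizontally adjacent pair (left, right)
    left, right = idx[:, :-1].ravel(), idx[:, 1:].ravel()
    bx = (s[:, 1:] - s[:, :-1]).ravel()
    # y-gradient equations: one per vertically adjacent pair (top, bottom)
    top, bottom = idx[:-1, :].ravel(), idx[1:, :].ravel()
    by = (s[1:, :] - s[:-1, :]).ravel()
    nx, ny = left.size, top.size
    rows = np.concatenate([np.arange(nx), np.arange(nx),
                           nx + np.arange(ny), nx + np.arange(ny),
                           [nx + ny]])                     # last row: corner constraint
    cols = np.concatenate([right, left, bottom, top, [idx[0, 0]]])
    vals = np.concatenate([np.ones(nx), -np.ones(nx), np.ones(ny), -np.ones(ny), [1.0]])
    A = coo_matrix((vals, (rows, cols)), shape=(nx + ny + 1, H * W)).tocsr()
    b = np.concatenate([bx, by, [s[0, 0]]])
    return A, b
```

The matrix is assembled once from plain NumPy arrays, so no intermediate sparse matrices are added, rolled or transposed along the way.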

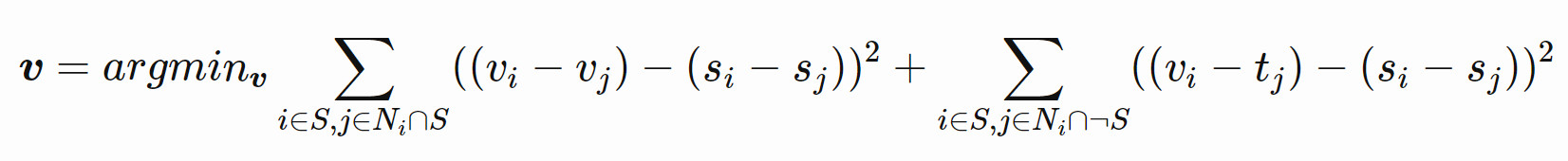

Poisson Blending

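Building on the toy problem, Poisson blending considers the four neighbours of each pixel and solves the objective below, where v is the vector of unknown output pixels, s is the source image placed in the target frame (only its masked region S matters), t is the target image, and N_i is the set of four neighbours of pixel i:

$$\mathbf{v} = \arg\min_{\mathbf{v}} \sum_{i \in S,\ j \in N_i \cap S} \big((v_i - v_j) - (s_i - s_j)\big)^2 + \sum_{i \in S,\ j \in N_i \cap \neg S} \big((v_i - t_j) - (s_i - s_j)\big)^2$$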

In the equation, each pixel i in the mask contributes one term for each of its four neighbours j. The left sum covers neighbours j that lie inside the mask, and the right sum covers neighbours that lie outside it. In code, the difference is that for the right sum the known target value $t_j$ goes into b, whereas for the left sum $v_j$ is an unknown and gets a coefficient in A.
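Written out per neighbour pair, each term of the objective becomes one row of the least-squares system $A\mathbf{v} = \mathbf{b}$, so a pixel whose four neighbours all lie in the mask contributes four rows of the first kind:

$$
\text{row for the pair } (i, j):\quad
\begin{cases}
v_i - v_j = s_i - s_j, & j \in S \quad (+1 \text{ at } v_i,\ -1 \text{ at } v_j \text{ in } A)\\[4pt]
v_i = s_i - s_j + t_j, & j \notin S \quad (+1 \text{ at } v_i \text{ in } A;\ t_j \text{ moves into } b)
\end{cases}
$$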

Since the images are now RGB, each of the three channels has to be solved separately. A is the same for all three channels while b differs per channel, so I build A once and b three times to speed things up. In addition, because we only need to solve for pixels under the source mask, I loop only over that area. Finally, the three solved channels are merged into the output RGB image. The running time depends on the image size: for the provided example, with a 130×107 source image and a 250×333 target image, it takes around 20 s.
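A compact sketch of this scheme, assuming float images of equal size with the source already positioned in the target frame, and a boolean mask that does not touch the image border. `A` is built once from the mask and, as an extra assumption beyond the text, its normal equations are factored once with `splu` so that each channel only needs a cheap solve; only `b` is rebuilt per channel. Names are hypothetical.

```python
import numpy as np
from scipy.sparse import lil_matrix
from scipy.sparse.linalg import splu

NEIGHBOURS = ((0, 1), (0, -1), (1, 0), (-1, 0))   # 4-connected neighbourhood


def poisson_blend(source, target, mask):
    """Blend `source` into `target` inside `mask` (float arrays of shape (H, W, C))."""
    ys, xs = np.nonzero(mask)                 # only pixels under the mask are unknowns
    n = ys.size
    idx = -np.ones(mask.shape, dtype=int)
    idx[ys, xs] = np.arange(n)
    A = lil_matrix((4 * n, n))
    pairs = []                                # (y, x, ny, nx, j_inside) for each row
    row = 0
    for k in range(n):
        y, x = ys[k], xs[k]
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            inside = bool(mask[ny, nx])
            A[row, k] = 1                     # coefficient of v_i
            if inside:
                A[row, idx[ny, nx]] = -1      # v_j is also unknown
            pairs.append((y, x, ny, nx, inside))
            row += 1
    A = A.tocsr()
    lu = splu((A.T @ A).tocsc())              # A is shared by all channels: factor once
    out = target.astype(np.float64).copy()
    for c in range(target.shape[2]):
        s, t = source[..., c], target[..., c]
        # b_row = s_i - s_j, plus the known t_j when neighbour j lies outside the mask
        b = np.array([s[y, x] - s[ny, nx] + (0.0 if inside else t[ny, nx])
                      for y, x, ny, nx, inside in pairs])
        out[ys, xs, c] = lu.solve(A.T @ b)
    return out
```

A quick sanity check: if the source is the target plus a constant offset, its gradients equal the target's, so the blend should reproduce the target exactly.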

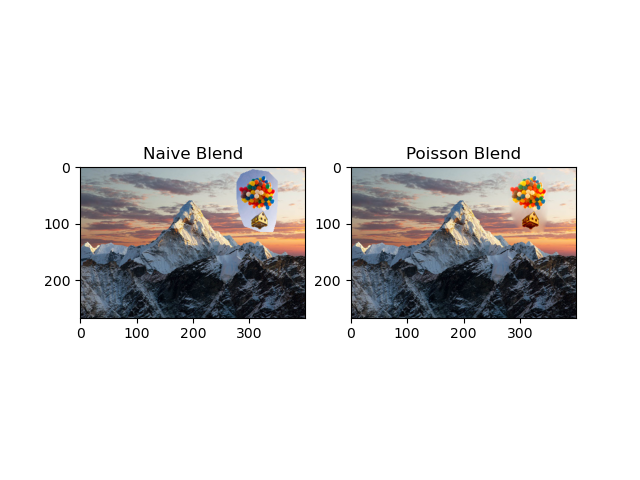

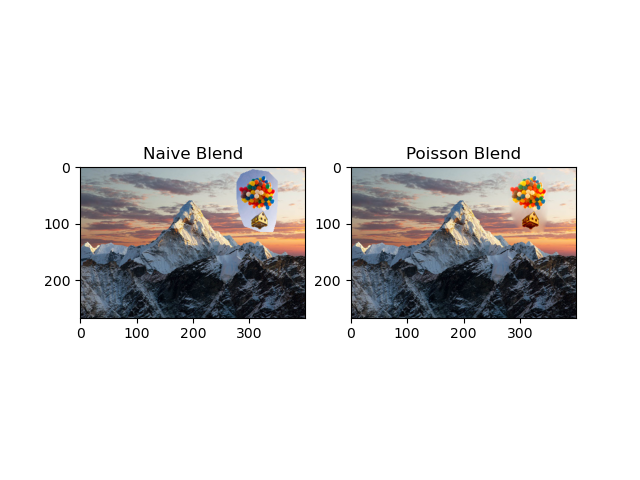

However, naive Poisson blending does not always produce a seamless result: when the source and target images differ strongly, following only the source gradients is not enough. As you can see in the image below, the interior of the balloon house is slightly blurry and does not blend well with the background. This motivates mixed-gradient blending.

Extra Credits

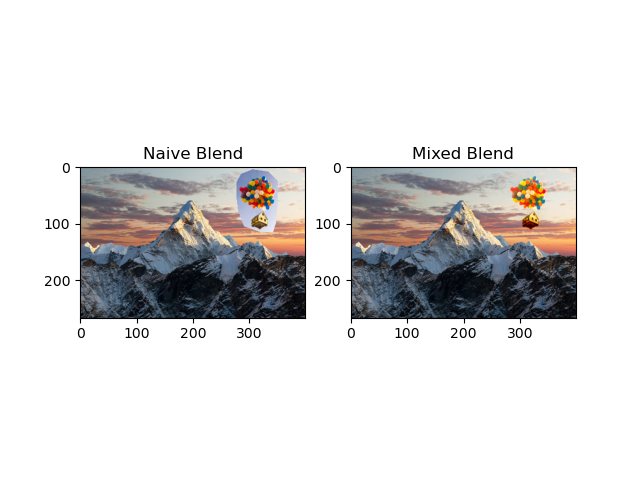

Mixed Gradients

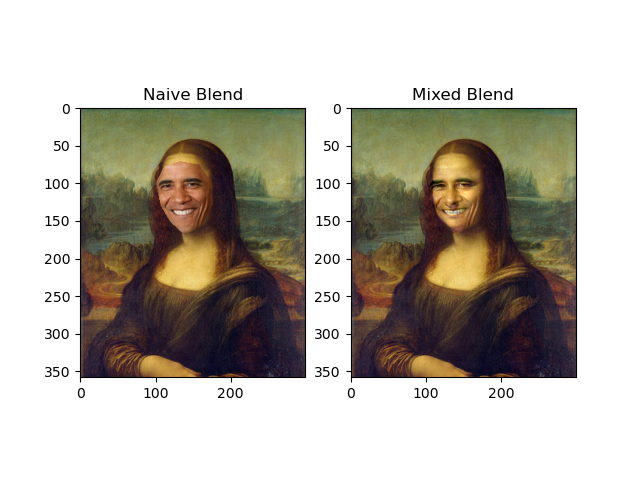

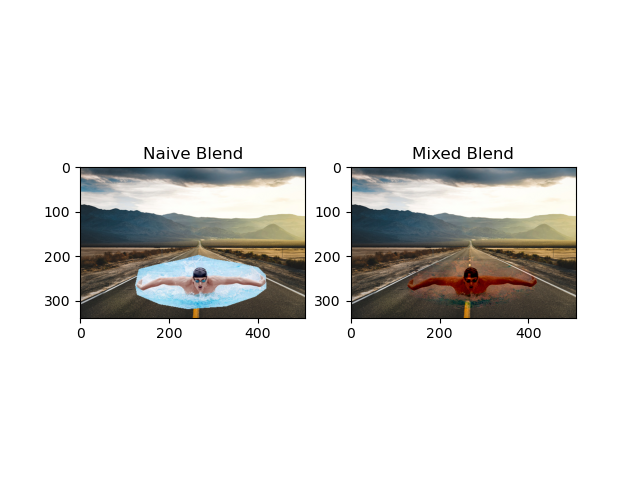

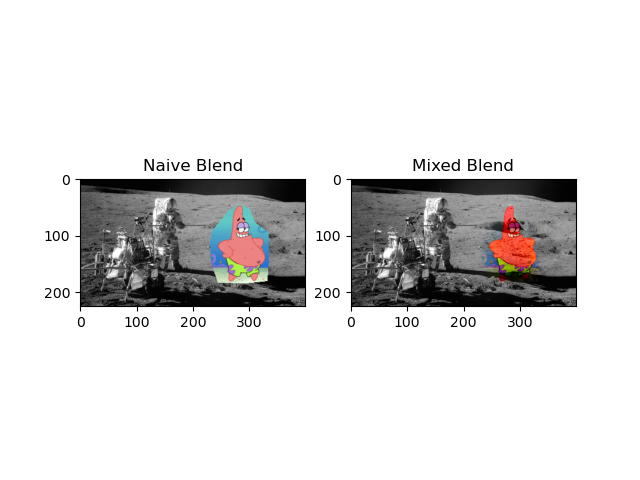

Mixed gradients is a small extension of Poisson blending: instead of always using the gradient of the source image s, we compare the gradients of s and of the target t and keep the one with the larger magnitude, which gives a better blend when the source and target differ a lot. A comparison on the same image is shown below (left: blended without mixed gradients):
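The per-pair selection can be sketched as follows. In a Poisson-blending system it replaces the guidance term $s_i - s_j$ in $\mathbf{b}$, while the $t_j$ term for neighbours outside the mask stays as before. The function name is hypothetical, and ties are assumed to go to the source gradient.

```python
import numpy as np


def mixed_guidance(s_i, s_j, t_i, t_j):
    """Per neighbour pair, keep whichever gradient (source or target) has the larger magnitude."""
    ds = np.asarray(s_i) - np.asarray(s_j)    # source gradient s_i - s_j
    dt = np.asarray(t_i) - np.asarray(t_j)    # target gradient t_i - t_j
    return np.where(np.abs(ds) >= np.abs(dt), ds, dt)
```

Because strong target texture wins wherever the source is smooth, background detail can show through the pasted region instead of being overwritten by it.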

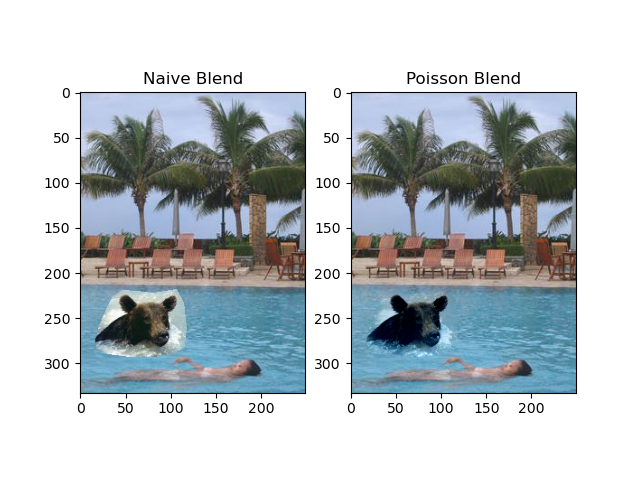

The provided bear-and-pool example is shown below:

Here are some more blended results:

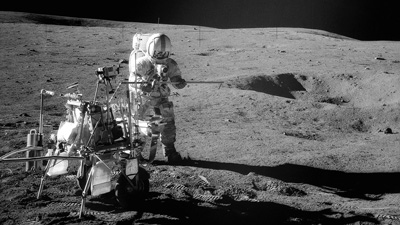

Here is a failure case, in which the colorful Patrix does not blend well with the nearly grayscale moon image:

This is mainly because the blending algorithm can only adjust colors so far: when the source and target images differ too much, even the least-squares solution that best fits the gradient constraints cannot produce a convincing result.

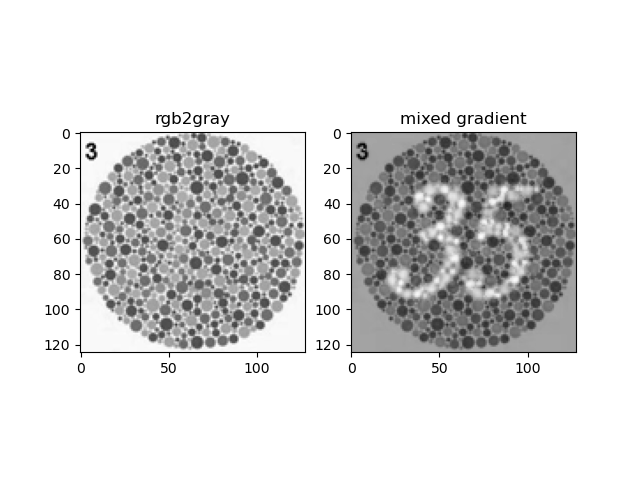

Color2Gray

The Color2Gray method first converts the RGB image to the HSV color space and keeps only the S and V channels, which represent color contrast and intensity respectively. This way, the grayscale output keeps the color contrast of the RGB image while also preserving its intensity. The algorithm works like mixed-gradient blending, with S and V playing the roles of the source and target images. The result is shown below:
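A sketch of this idea, under stated assumptions: S and V are computed directly from the HSV definitions (V = max(R, G, B), S = (V - min(R, G, B)) / V), each neighbour pair keeps whichever of the S or V gradients has the larger magnitude, and the solution is anchored to V at the top-left pixel, mirroring the toy problem. These choices and the function names are illustrative rather than the original code.

```python
import numpy as np
from scipy.sparse import lil_matrix
from scipy.sparse.linalg import spsolve


def rgb_to_sv(rgb):
    """HSV saturation and value of a float RGB image in [0, 1]."""
    v = rgb.max(axis=2)
    mn = rgb.min(axis=2)
    s = np.where(v > 0, (v - mn) / np.where(v > 0, v, 1.0), 0.0)   # S = 0 where V = 0
    return s, v


def color2gray(rgb):
    """Gray image whose gradients follow the larger of the S or V gradients."""
    S, V = rgb_to_sv(rgb)
    H, W = V.shape
    idx = np.arange(H * W).reshape(H, W)
    A = lil_matrix((2 * H * W + 1, H * W))
    b = np.zeros(2 * H * W + 1)
    e = 0
    for y in range(H):
        for x in range(W):
            for ny, nx in ((y, x + 1), (y + 1, x)):      # right and bottom neighbours
                if ny < H and nx < W:
                    ds = S[ny, nx] - S[y, x]
                    dv = V[ny, nx] - V[y, x]
                    A[e, idx[ny, nx]] = 1
                    A[e, idx[y, x]] = -1
                    b[e] = ds if abs(ds) > abs(dv) else dv   # mixed gradient; ties go to V
                    e += 1
    A[e, idx[0, 0]] = 1                                   # anchor intensity to V
    b[e] = V[0, 0]
    A = A.tocsr()
    gray = spsolve((A.T @ A).tocsc(), A.T @ b)
    return gray.reshape(H, W)
```

For an image that is already gray, S is zero everywhere, so every pair takes the V gradient and the output reduces to V; the mixed selection only matters where saturation changes faster than brightness.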

Assignment #3


Assignment #4


Assignment #5
