Part 1: HCI Motivation

I am currently working on an HCI research project motivated by the following problem: in an augmented reality (AR) setting in a small room, a user can easily have too many AR user interfaces open and floating in mid-air. This overcrowds the room and cognitively overloads the user, especially since we tend to multitask nowadays.

Therefore, we want to give these AR UIs "chameleon effects" so that some of them can sit at the user's periphery, reducing the user's overall cognitive load.

The simplest chameleon effect would be to warp the AR UI onto a physical object in the room and adjust its transparency. More interestingly, we could even replace the UI with a virtual object that semantically matches both the UI's meaning and the room's setting.

After learning about image synthesis in our class, I became motivated to try to accomplish this task with algorithms and networks.

Part 2: Deep Image Analogy

After I initially proposed my project idea, it was recommended that I look at the work "Deep Image Analogy". It is a technique for visual attribute transfer across images that may have very different appearances but perceptually similar semantic structure. It finds semantically meaningful dense correspondences between two input images by adapting the notion of "image analogy" to features extracted from a deep convolutional network for matching.
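To make "dense correspondences" concrete, here is a minimal sketch of the matching idea only, not the actual Deep Image Analogy implementation, which is considerably more involved. It assumes two feature maps have already been extracted by some convolutional network (random arrays stand in for them here) and uses a brute-force cosine-similarity search. The function name `nearest_neighbor_field` is hypothetical.

```python
import numpy as np

def nearest_neighbor_field(feat_a, feat_b):
    """For every location in feat_a (H, W, C), return the (row, col) of the
    location in feat_b (H2, W2, C) with the highest cosine similarity."""
    h, w, c = feat_a.shape
    h2, w2, _ = feat_b.shape
    a = feat_a.reshape(-1, c).astype(np.float64)
    b = feat_b.reshape(-1, c).astype(np.float64)
    # L2-normalise so that a dot product equals cosine similarity.
    a /= np.linalg.norm(a, axis=1, keepdims=True) + 1e-8
    b /= np.linalg.norm(b, axis=1, keepdims=True) + 1e-8
    best = np.argmax(a @ b.T, axis=1)            # flat index into feat_b
    rows, cols = np.unravel_index(best, (h2, w2))
    return np.stack([rows, cols], axis=-1).reshape(h, w, 2)

# Stand-in "deep features"; real ones would come from a pretrained network.
rng = np.random.default_rng(0)
features_object = rng.normal(size=(4, 5, 8))
features_ui = features_object[:, ::-1, :]        # mirrored copy as a toy case
field = nearest_neighbor_field(features_ui, features_object)
print(field.shape)
```

Brute-force search is quadratic in the number of locations, so it is only practical for small feature maps; it is used here purely to illustrate what a correspondence field is.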

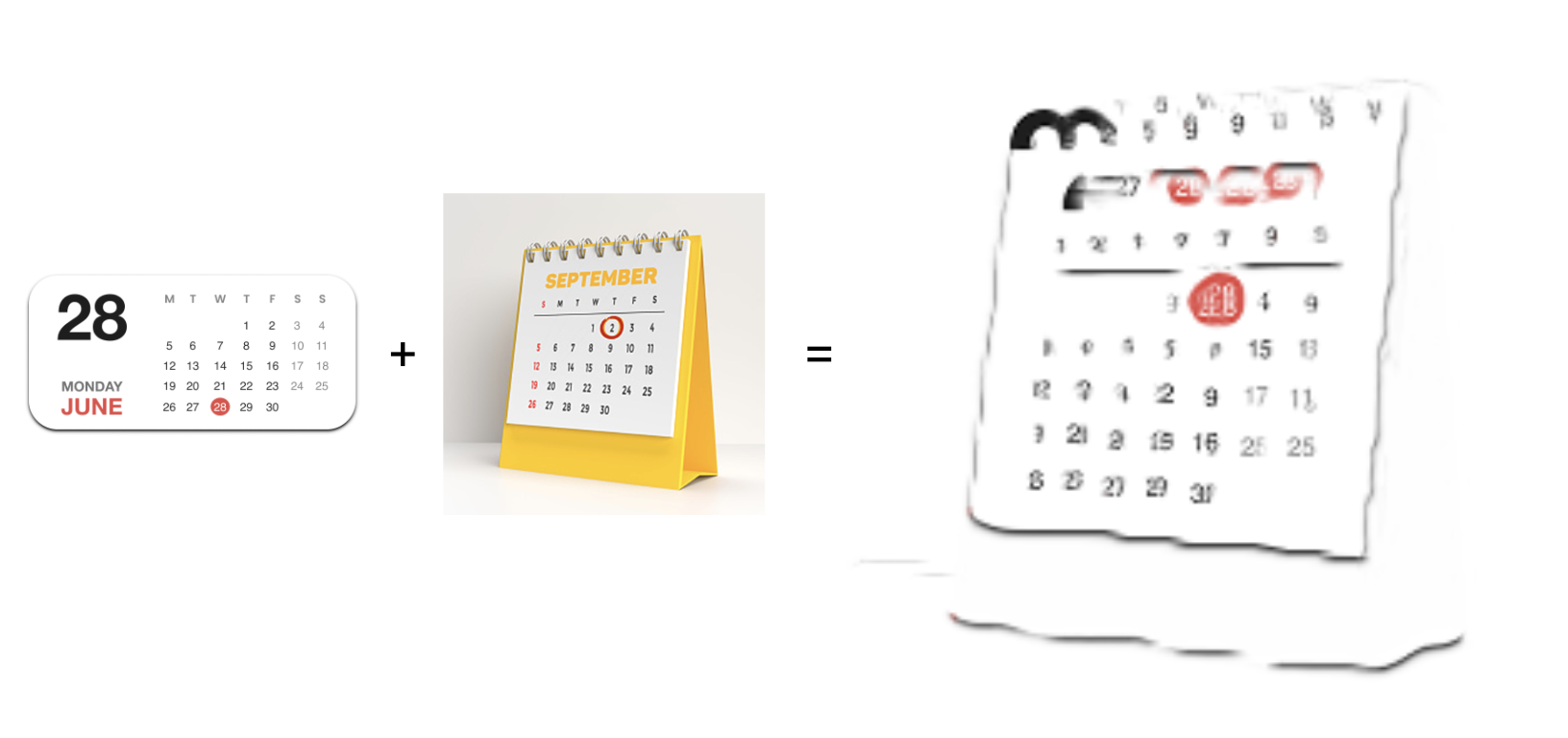

Experiments

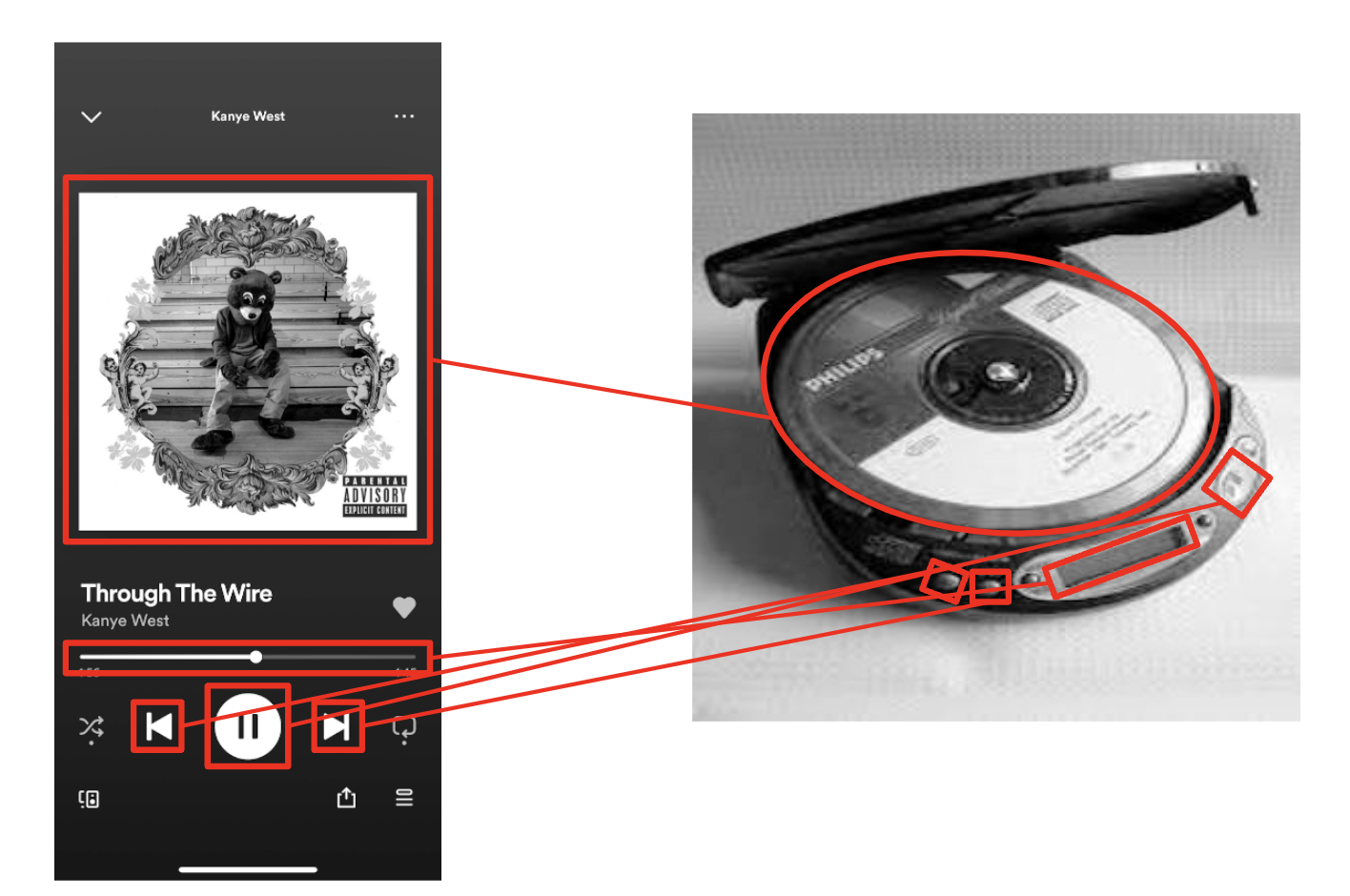

As an initial experiment, I deliberately chose a pair of UI and object images that have the same semantic meaning and are perceptually similar, shown below.

The result here makes sense: it almost directly maps elements/features from the calendar UI to the calendar object, while still maintaining the general appearance of the calendar object.

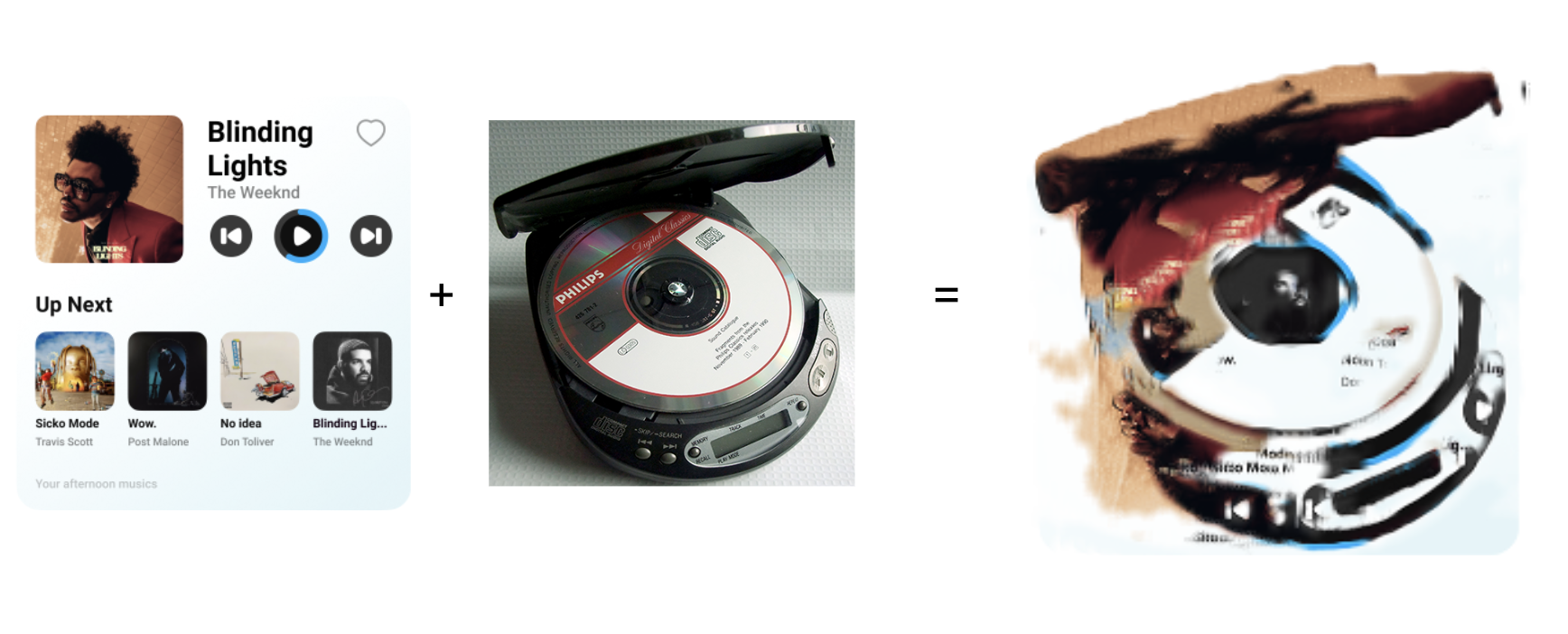

However, when I tried another image pair that is less perceptually similar, the visual attribute transfer from Deep Image Analogy made little sense. Interestingly, some features, such as the play button, did get mapped correctly, probably because these features have a similar appearance in both images.

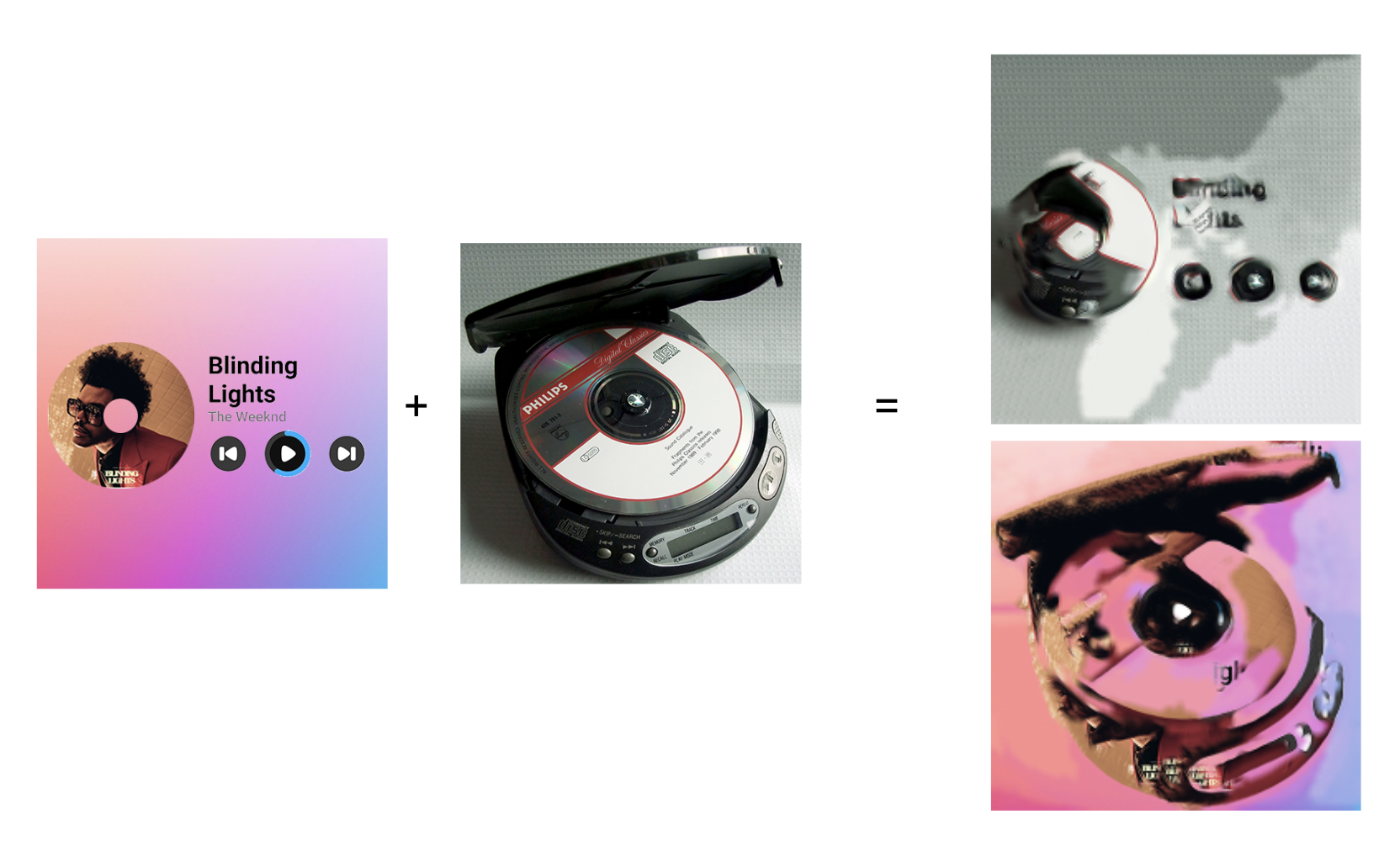

In an attempt to get a result closer to what I wanted, I edited my input image (specifically the UI image) in Photoshop before feeding it to the program: I removed the features that I did not want to map (e.g. other albums up next); I changed the shape of the album being played to resemble the CD in the object image, hoping it would get mapped to the CD; and I changed the background color of the UI to resemble the color theme of Apple Music, hoping to also achieve color transfer.

The preliminary result from this experiment is shown below. The transfer from the object to the UI made more sense semantically than the transfer from the UI to the object.

Though Deep Image Analogy did not work out, it inspired me to think about how UI designs often have analogies to real objects with the same semantic label. For example, a music UI and a music object (e.g. a CD player) both have album covers, play and control buttons, etc. Hence, maybe I could define a feature tracking and mapping problem and solve it with computer vision techniques.

Part 3: Computer Vision Approach without Deep Learning

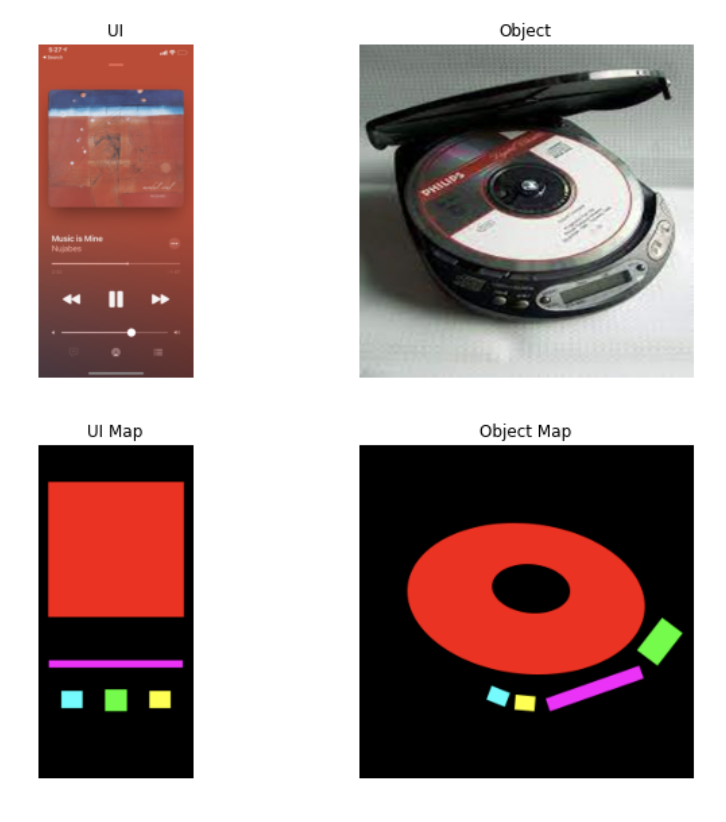

I tested the idea with the following CV process:

1. Given an image pair (UI and object) of the same semantic meaning, create feature maps to map out corresponding features

2. Match detected features across the image pair

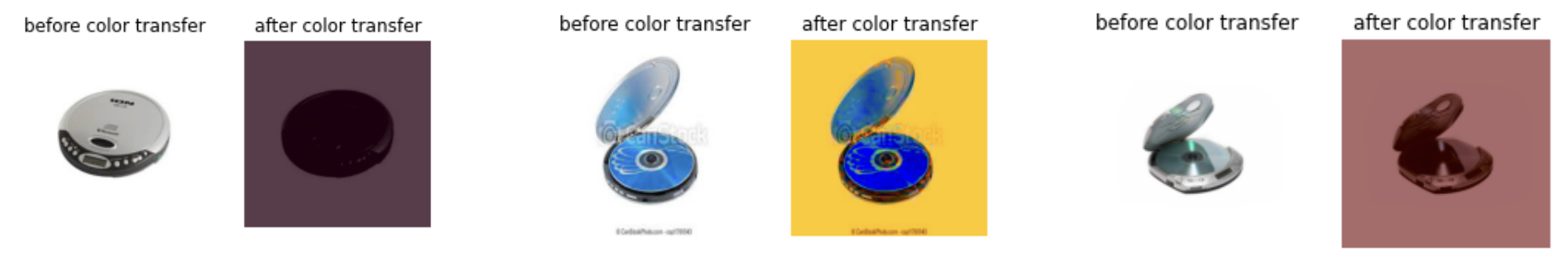

3. Calculate and apply color transfer from UI to object image

4. Apply geometric transformations to features and transfer from UI image to object image
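Steps 3 and 4 above can be sketched roughly as follows. This is a simplified illustration under my own assumptions, not the exact code used for the results: color transfer is done by matching per-channel mean and standard deviation directly in RGB, and the geometric transformation is assumed to be an affine map fitted by least squares to already-matched feature points. The names `transfer_color_stats`, `fit_affine`, and `apply_affine` are hypothetical.

```python
import numpy as np

def transfer_color_stats(source, target):
    """Re-color `target` so its per-channel mean/std match those of `source`.
    Both are float arrays of shape (H, W, 3) with values in [0, 1]."""
    src = source.reshape(-1, 3)
    tgt = target.reshape(-1, 3)
    src_mean, src_std = src.mean(axis=0), src.std(axis=0)
    tgt_mean, tgt_std = tgt.mean(axis=0), tgt.std(axis=0)
    out = (target - tgt_mean) / (tgt_std + 1e-8) * src_std + src_mean
    return np.clip(out, 0.0, 1.0)

def fit_affine(src_pts, dst_pts):
    """Least-squares 2x3 affine matrix A with dst ~= A @ [x, y, 1]."""
    src_pts = np.asarray(src_pts, dtype=np.float64)
    dst_pts = np.asarray(dst_pts, dtype=np.float64)
    ones = np.ones((src_pts.shape[0], 1))
    X = np.hstack([src_pts, ones])                       # (N, 3)
    sol, *_ = np.linalg.lstsq(X, dst_pts, rcond=None)    # (3, 2)
    return sol.T                                         # (2, 3)

def apply_affine(A, pts):
    """Map (N, 2) points through the 2x3 affine matrix A."""
    pts = np.asarray(pts, dtype=np.float64)
    return pts @ A[:, :2].T + A[:, 2]

# Toy stand-ins for the UI and object images.
rng = np.random.default_rng(0)
ui_img = 0.4 + 0.2 * rng.random((8, 8, 3))
obj_img = 0.2 + 0.6 * rng.random((6, 7, 3))
recolored_obj = transfer_color_stats(ui_img, obj_img)    # UI colors -> object

# Hypothetical matched feature points (x, y) in the UI and the object image.
ui_pts = [[0, 0], [1, 0], [0, 1], [1, 1]]
obj_pts = [[3, -1], [5, -1], [3, 1], [5, 1]]
A = fit_affine(ui_pts, obj_pts)
print(apply_affine(A, ui_pts))
```

Matching statistics in RGB is the crudest option; doing the same in a decorrelated color space usually gives more natural colors. An affine fit needs at least three non-collinear matches, and a more flexible model (e.g. a homography) may be needed when the object is seen at an angle.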

Given the initial success of my computer vision approach, I could mass-produce "target chameleon" images. Then, given a dataset of input UI images and a dataset of produced chameleon images, I could train a GAN to perform this domain transfer task for me. But first I would need a dataset of CD player images and a dataset of music UI images.

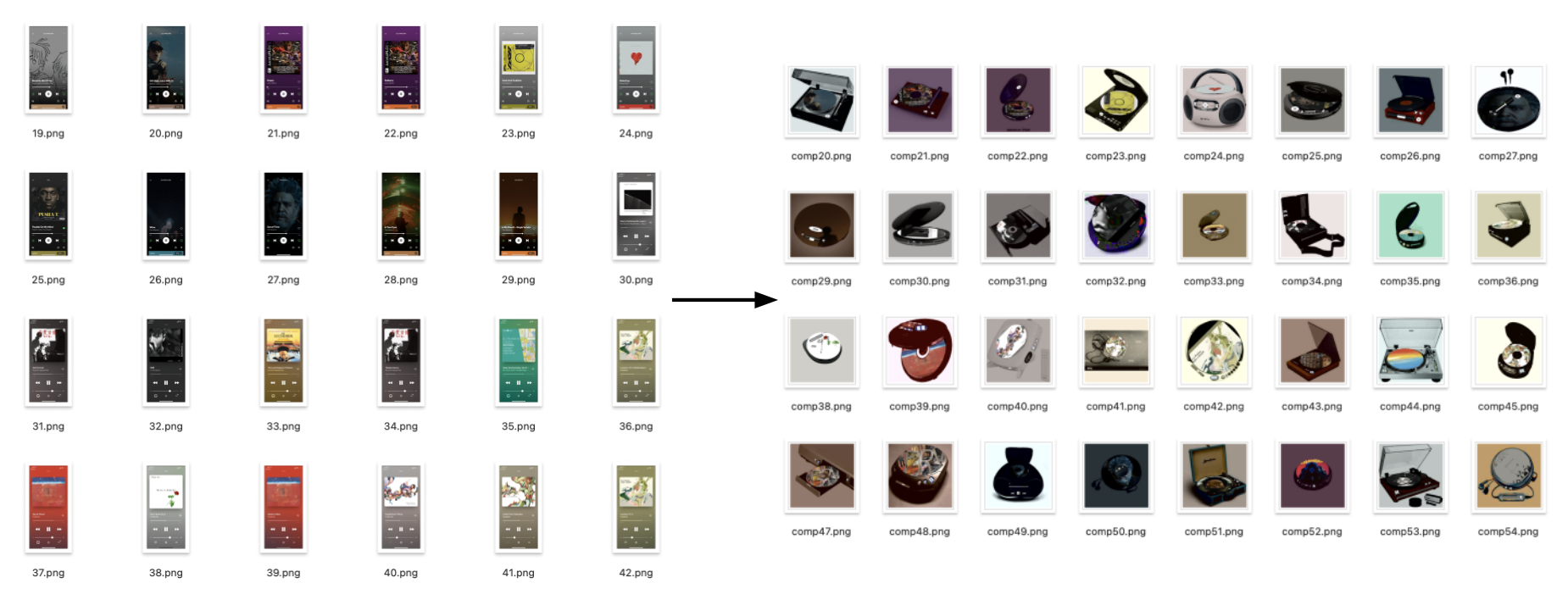

Part 4: Dataset Curation

The music UI dataset was curated simply by taking screenshots of different music apps.

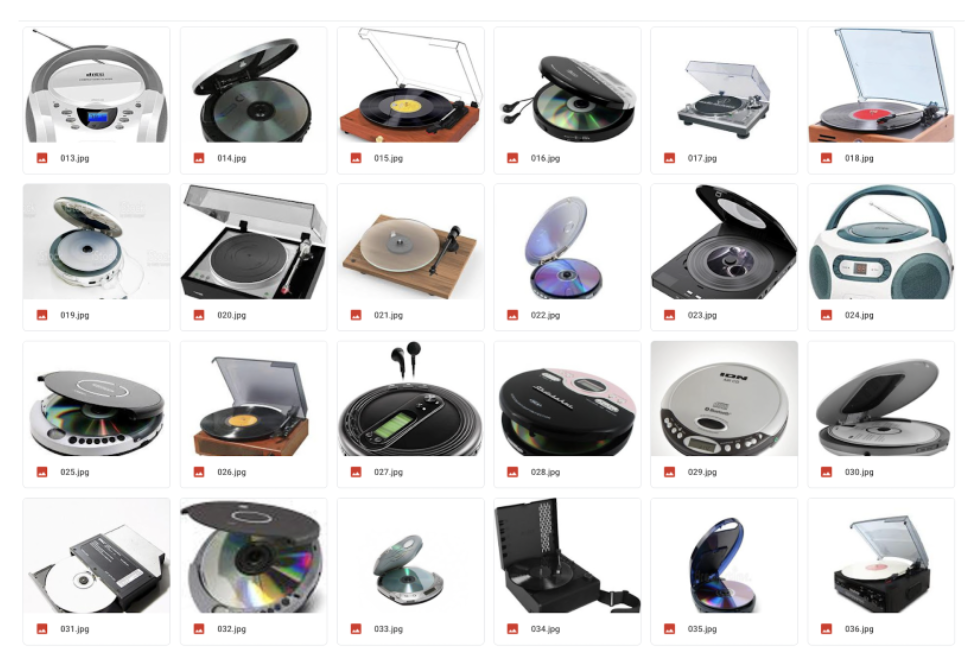

Curating the music object image dataset was a bit more complicated. I curated two such datasets.

For the first dataset, I hand-collected 300+ CD player images. The CD players are mostly consistent in shape, mostly on white backgrounds, and mostly shown in full with minimal occlusion.

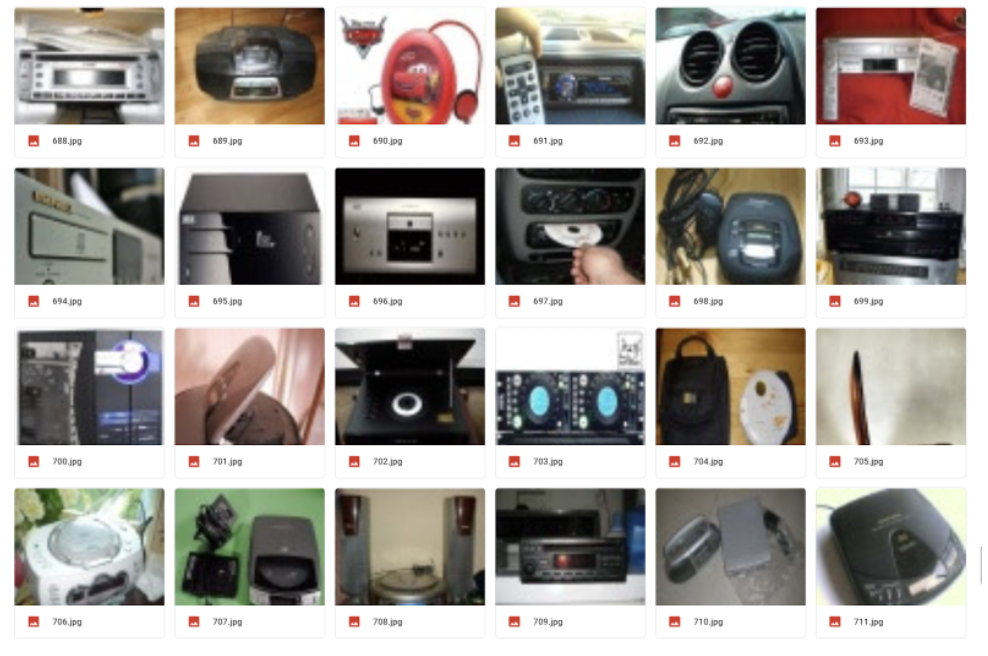

The second dataset consists of 1500+ CD player images scraped from the web. However, the objects in these images have very different shapes and are on random backgrounds, and the dataset contains many occlusions, including other objects (e.g. human hands) appearing in the frame.

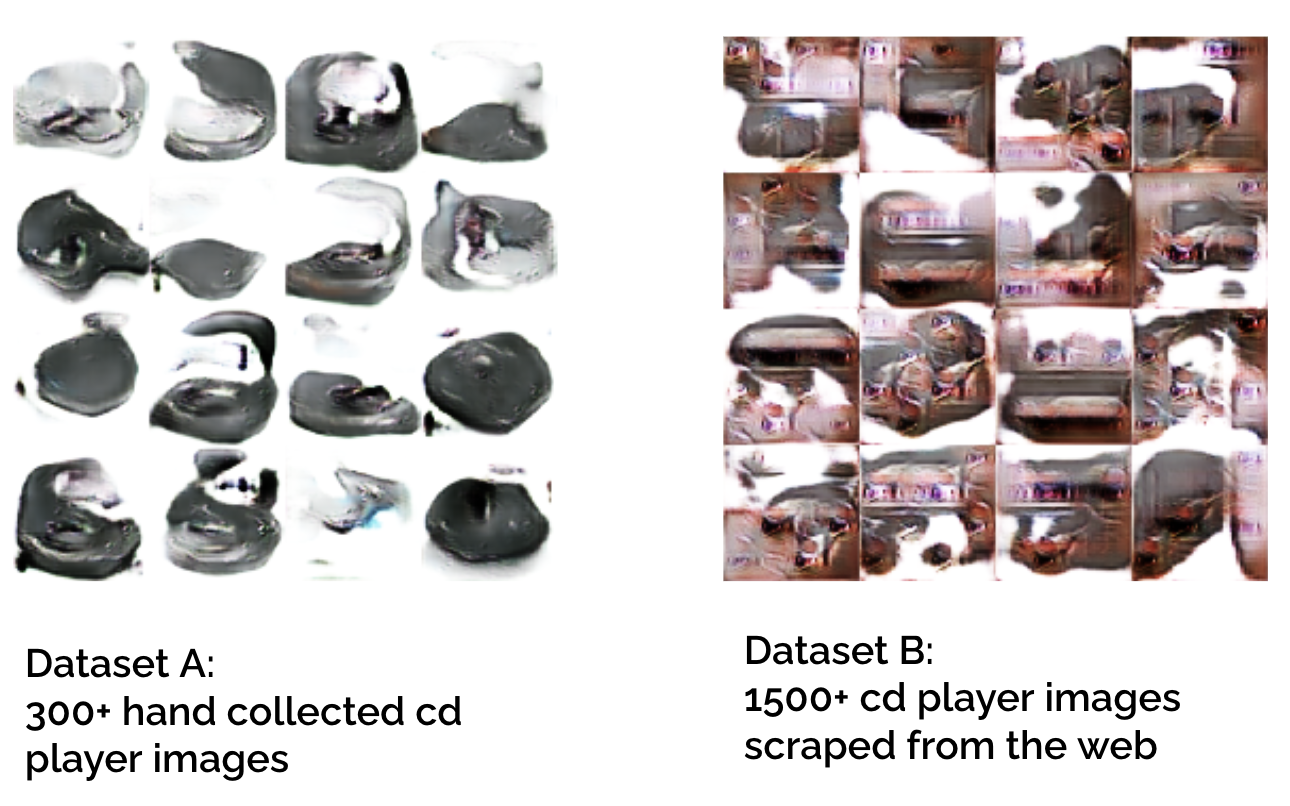

I tested the two datasets on the DCGAN we implemented for HW3. Below are generated outputs from both at the 8600th iteration. As seen, the hand-curated dataset of 300+ images generated better outputs than the 1500+ scraped images.

Part 5: CycleGAN

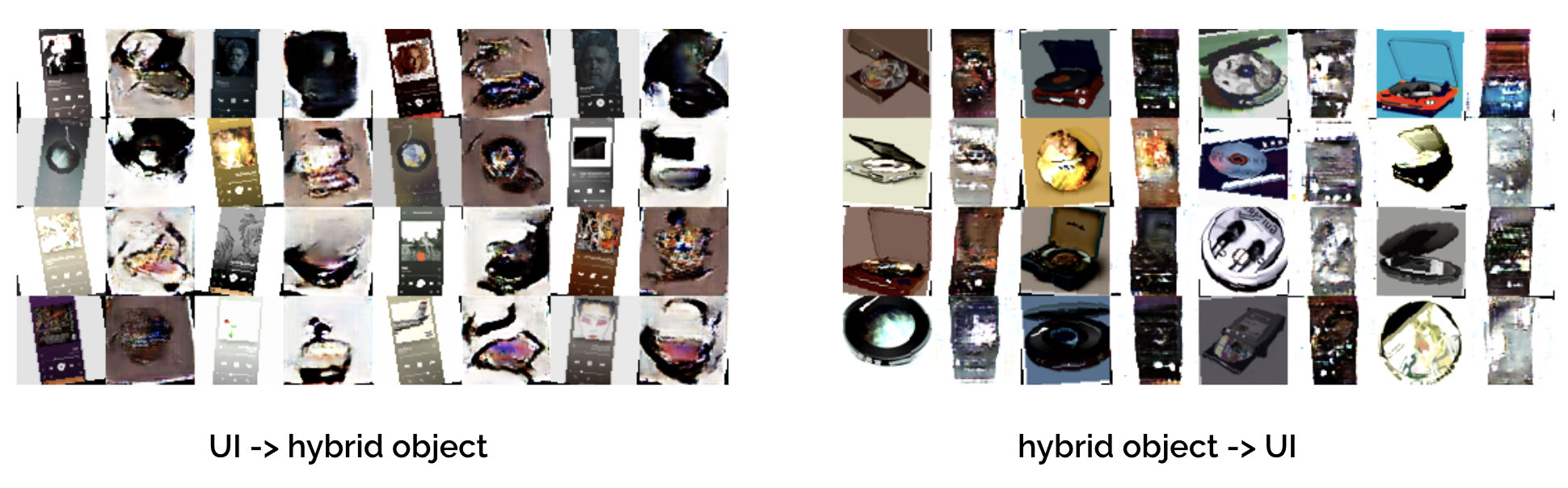

I experimented with running CycleGAN on 90 pairs of images (UIs and chameleonized UIs), yielding the result below.

Though results here aren't great, we can still see domain transfer in the general structure of the image pairs.
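For context on why the general structure carries over, the key ingredient of CycleGAN is its cycle-consistency term: translating an image to the other domain and back should reproduce the original. Below is a minimal sketch of that term alone, with plain functions standing in for the two generators; the adversarial losses and the actual networks are omitted, and the names `G`, `F`, and `cycle_consistency_loss` are illustrative assumptions rather than the code used for this experiment.

```python
import numpy as np

def cycle_consistency_loss(G, F, x_batch, y_batch):
    """L1 cycle loss: mean|F(G(x)) - x| + mean|G(F(y)) - y|.
    G maps domain X -> Y (e.g. UI -> chameleon), F maps Y -> X."""
    forward = np.mean(np.abs(F(G(x_batch)) - x_batch))
    backward = np.mean(np.abs(G(F(y_batch)) - y_batch))
    return forward + backward

# Toy stand-ins for the generators: each simply inverts pixel intensities,
# so they are exact inverses of each other and the cycle loss is zero.
G = lambda x: 1.0 - x
F = lambda y: 1.0 - y

rng = np.random.default_rng(0)
ui_batch = rng.random((2, 16, 16, 3))         # placeholder UI images
chameleon_batch = rng.random((2, 16, 16, 3))  # placeholder chameleon images
print(cycle_consistency_loss(G, F, ui_batch, chameleon_batch))
```

In full training this term is weighted and added to the adversarial losses of the two generator/discriminator pairs.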