Deep Facial Expression Synthesis

16-726 Spring 2022

In recent years, facial expression synthesis has drawn significant attention in the field of computer graphics. However, it remains challenging because expressions are high-level semantic attributes that involve large, non-linear variations in face geometry.

There are two main categories of facial expression synthesis methods. The first mainly resorts to traditional computer graphics techniques to directly warp input faces to target expressions or to re-use sample patches of existing images. The other aims to build generative models that synthesize images with predefined attributes.

Deep generative models encode expressional attributes into a latent feature space, where certain directions are aligned with semantic attributes. However, outputs of deep generative models tend to lack fine details and appear blurry or of low resolution. In this project we explore three different deep generative models and techniques to finely control the synthesized images, e.g., widen the smile or narrow the eyes.

Dataset

We use the Large Scale CelebFaces Attributes (Celeb-A) dataset to evaluate facial expression synthesis. The dataset contains over 200k images, each with 40 attribute annotations.

The list_attr_celeba.txt file contains image ids associated with their binary attributes, where 1 indicates "has attribute" and -1 indicates "no attribute". Example attributes include "male", "no beard", "smiling", and "straight hair".

Preprocessing

To preprocess our data, we define custom dataloaders in PyTorch for loading the images and their associated labels (40-dimensional binary vectors). Depending on the model, we crop and downscale images accordingly.
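As a rough illustration of the label-loading step, the sketch below parses attribute annotations into per-image binary vectors. It is not the project's dataloader. It assumes the file layout is an image count on the first line, attribute names on the second, and then one row per image. The function name `parse_celeba_attributes` and the choice to map -1 to 0 are assumptions made for this example.

```python
import io


def parse_celeba_attributes(lines):
    """Parse attribute annotations into ({image_id: [0/1, ...]}, attribute_names).

    Assumed layout: line 1 = image count, line 2 = attribute names,
    then one row per image: "<image_id> <v1> ... <vK>" with values in {1, -1}.
    """
    rows = [line.split() for line in lines if line.strip()]
    attr_names = rows[1]
    labels = {}
    for row in rows[2:]:
        image_id, values = row[0], row[1:]
        if len(values) != len(attr_names):
            raise ValueError(
                f"{image_id}: expected {len(attr_names)} values, got {len(values)}"
            )
        # Assumption: map the file's {-1, 1} encoding to {0, 1} binary labels.
        labels[image_id] = [1 if int(v) == 1 else 0 for v in values]
    return labels, attr_names


# Tiny in-memory stand-in for list_attr_celeba.txt (3 attributes instead of 40).
sample = io.StringIO(
    "2\n"
    "Male No_Beard Smiling\n"
    "000001.jpg -1  1  1\n"
    "000002.jpg  1 -1 -1\n"
)
labels, attr_names = parse_celeba_attributes(sample)
```

The returned dictionary could then back a custom PyTorch `Dataset` that pairs each image with its label vector.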

Methods

Variational Autoencoder

We consider two types of state-of-the-art VAEs, Beta-VAE and DFC-VAE, for learning disentangled representations of features.

Background: AEs & VAEs

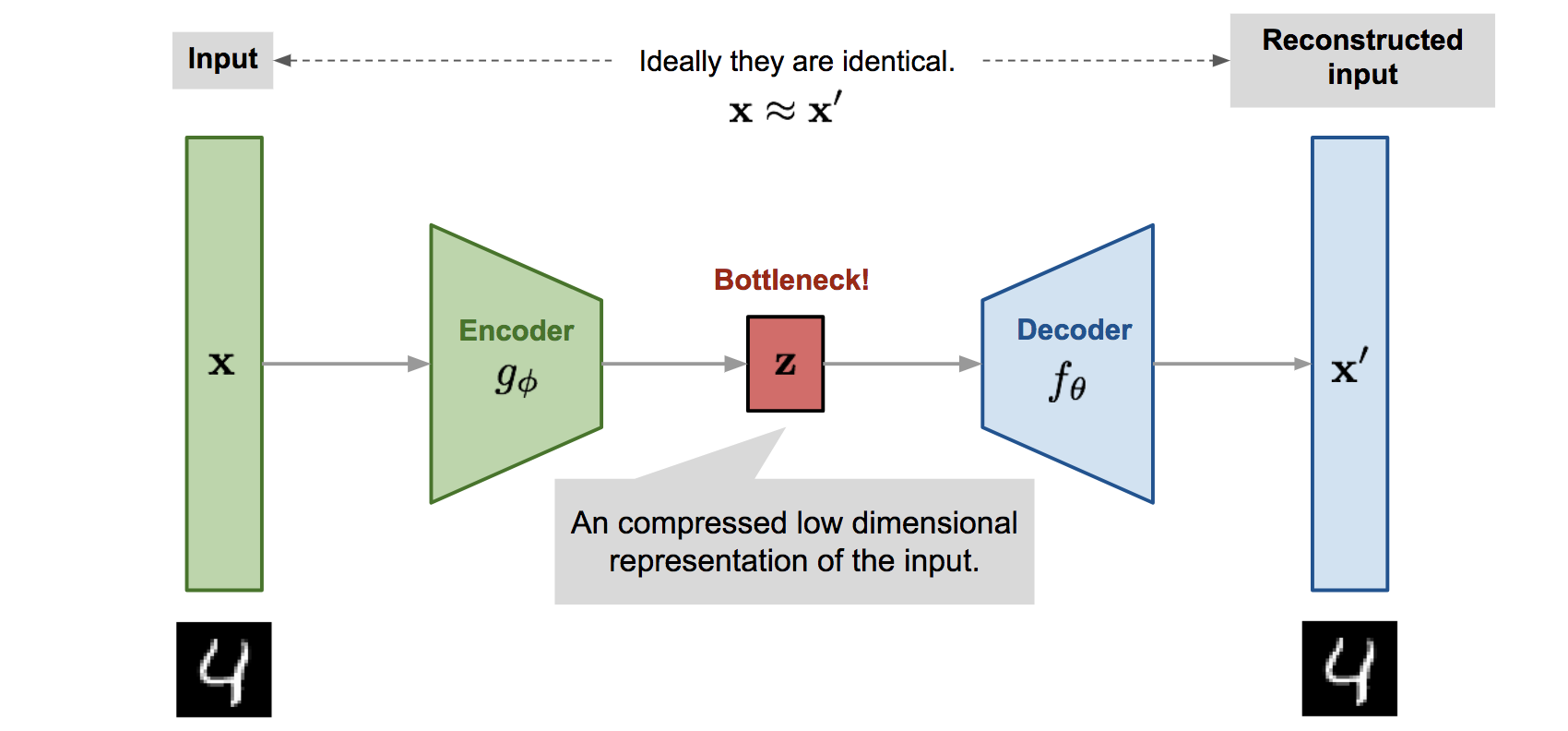

Autoencoders are a class of neural networks consisting of an encoder and a decoder. Through iterative weight optimization, autoencoders learn to encode the data into a low-dimensional space and then reconstruct (decode) the original data. The downside is that autoencoders have no way of synthesizing new data. Variational autoencoders (VAEs) address this: the decoder effectively acts like the generator of a GAN, decoding points that are randomly sampled from the latent space, i.e., z ~ p(z). VAEs are trained to minimize the sum of the reconstruction loss (binary cross-entropy loss) and the KL divergence between the probabilistic encoder q(z|x) and the prior p(z) over latent variables, KL(q(z|x) || p(z)). Minimizing this sum keeps the distance between the real and estimated posterior distributions, KL(q(z|x) || p(z|x)), small.
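For reference, the training objective described above can be written out as follows. This is a sketch under common assumptions that the text does not state explicitly: a diagonal Gaussian encoder q(z|x) = N(mu, diag(sigma^2)) and a standard normal prior p(z) = N(0, I), with d denoting the latent dimension.

```latex
% VAE loss: reconstruction term plus KL divergence to the prior
\mathcal{L}_{\mathrm{VAE}}(x)
  = \underbrace{-\,\mathbb{E}_{q(z|x)}\big[\log p(x|z)\big]}_{L_{rec}}
  + \mathrm{KL}\big(q(z|x)\,\|\,p(z)\big)

% Relation to the true posterior: log p(x) is fixed for a given model,
% so a smaller loss corresponds to a smaller KL(q(z|x) || p(z|x))
\log p(x) = -\,\mathcal{L}_{\mathrm{VAE}}(x)
  + \mathrm{KL}\big(q(z|x)\,\|\,p(z|x)\big)

% Closed form of the KL term under the Gaussian assumptions above
\mathrm{KL}\big(q(z|x)\,\|\,p(z)\big)
  = -\tfrac{1}{2}\sum_{j=1}^{d}\big(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\big)
```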

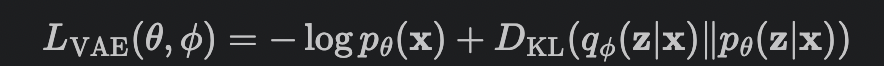

Beta-VAE

Beta-VAE (2017) is a type of latent variational autoencoder used to discover disentangled latent factors in an unsupervised manner. The addition of a hyperparameter Beta weights the KL divergence term, constraining the representational capacity of latent z and encouraging disentanglement. The loss function is as follows:

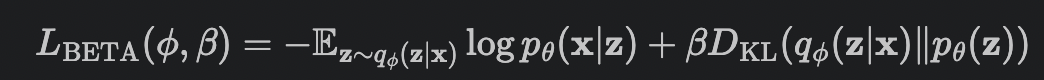

DFC-VAE

Deep Feature Consistent VAE (DFC-VAE, 2016) replaces the VAE's L_rec with a deep feature perceptual loss L_p during training, ensuring that the VAE's output preserves the spatial correlation characteristics of the input. Weights w_i regularize each layer's perceptual loss term.
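One way to write the perceptual loss is sketched below. The symbols are assumptions introduced for illustration: Phi_i denotes the activations of the i-th selected layer of a fixed, pretrained feature network, with C_i channels and spatial size H_i x W_i, and x-hat is the VAE reconstruction. The normalization constant follows a common convention and may differ from the implementation used here.

```latex
% Per-layer feature reconstruction loss (assumed normalization)
L_p^{(i)} = \frac{1}{2\,C_i H_i W_i}
  \sum_{c=1}^{C_i}\sum_{h=1}^{H_i}\sum_{w=1}^{W_i}
  \big(\Phi_i(x)_{c,h,w} - \Phi_i(\hat{x})_{c,h,w}\big)^2

% Weighted sum over the selected layers
L_p = \sum_i w_i\, L_p^{(i)}

% DFC-VAE objective: L_p takes the place of L_rec
\mathcal{L}_{\mathrm{DFC}}(x) = L_p + \mathrm{KL}\big(q(z|x)\,\|\,p(z)\big)
```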

Latent Arithmetic on DFC-VAE

Given specified attributes A and B corresponding to specified genders genderA and genderB, we fetch N images for each of with_A, without_A, with_B, and without_B, resizing each image to 64x64x3.

We encode the images in the without_A and without_B categories to get the mu/logvar, then sample from the learned distribution to get "z_without_A" and "z_without_B".

Then, we compute "z_avg_A" by subtracting the average latent vector across the without_A category from the average across the with_A category, which gives the latent representation of attribute "A".

Finally, we add a weighted "z_avg_A" to without_A and without_B to visualize the added attribute.

z_arith_A = z_avg_A + (f * z_without_A)
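The arithmetic above can be sketched with NumPy. This is a minimal illustration, not the project's code: the helper names `attribute_vector` and `add_attribute` are hypothetical, and the latents are deterministic stand-ins for encoder outputs. The sketch follows the prose and applies the weight f to the attribute vector, whereas the formula above places f on z_without_A. The two agree when f = 1.

```python
import numpy as np


def attribute_vector(z_with, z_without):
    """Mean latent of images with the attribute minus mean latent of images without it."""
    return z_with.mean(axis=0) - z_without.mean(axis=0)


def add_attribute(z, z_attr, f=1.0):
    """Shift latent code(s) z along the attribute direction with strength f."""
    return z + f * z_attr


# Stand-in latents (hypothetical): N images, latent_dim dimensions each.
N, latent_dim = 4, 3
z_without_A = np.linspace(-1.0, 1.0, N * latent_dim).reshape(N, latent_dim)
true_offset = np.array([2.0, 0.0, -1.0])   # pretend this is what "A" adds
z_with_A = z_without_A + true_offset

z_avg_A = attribute_vector(z_with_A, z_without_A)
z_arith_A = add_attribute(z_without_A, z_avg_A, f=1.0)
```

In practice, `z_arith_A` would be passed through the VAE decoder to visualize the edited faces, and sweeping f controls how strongly the attribute is applied.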

Latent Space Interpolation

We also visualize interpolations between

- identities of the same gender

- same gender with and without attribute

- identities of different gender with/without attribute

z_interp = (f * z1 + (1 - f) * z2)
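The interpolation formula above translates directly into a few lines of NumPy. In this sketch, the function name `interpolate` and the `num_steps` parameter are illustrative choices, and the two latent codes are placeholders for encoder outputs.

```python
import numpy as np


def interpolate(z1, z2, num_steps=5):
    """Return latents f * z1 + (1 - f) * z2 for f evenly spaced from 1 down to 0."""
    fs = np.linspace(1.0, 0.0, num_steps)
    return np.stack([f * z1 + (1.0 - f) * z2 for f in fs])


# Placeholder latent codes for two faces.
z1 = np.array([1.0, 0.0, -2.0])
z2 = np.array([-1.0, 4.0, 2.0])
path = interpolate(z1, z2, num_steps=5)  # shape: (5, 3)
```

Decoding each row of `path` in order gives a sequence of images that morphs from the first face to the second.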

InterFaceGAN

With GAN-based methods, we can perform vector arithmetic in the latent space to test whether latent attributes can be disentangled. For example, can we find a direction such that moving along it changes a specific attribute (e.g., smile or pose)? Given a dataset, we can perform GAN inversion to map the input images into the latent space. We can then manipulate this latent space to find attributes that can be controlled and interpolate along a given direction. This method assumes that attributes within the latent space can be disentangled linearly.

Building on this idea, InterFaceGAN applies latent space manipulation to well-known GAN generators (PGGAN, StyleGAN). We consider PGGAN specifically in order to explore a new architecture, as StyleGAN has been covered in class.

The structure of PGGAN (Progressive Growing GAN) starts with training the generator and discriminator at low spatial resolutions and progressively adding layers to increase the spatial resolution of generated images as training progresses. This method builds upon the observation that the complex mapping from latents to high-resolution images is easier to learn in steps. The process of PGGAN is shown below.

PGGAN Training Procedure

InterFaceGAN uses PGGAN as the baseline synthesis method and adds conditional manipulation in the latent space. Conditional manipulation is done via subspace projection: given two latent directions z1 and z2, a new direction is produced by subtracting from z1 its projection onto z2, so that the new direction is orthogonal to z2. The idea is that moving samples along this new direction can change "attribute 1" without affecting "attribute 2".

Conditional Manipulation via Subspace Projection
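A minimal NumPy sketch of the projection step is given below. The function name `conditional_direction` is hypothetical, and normalizing both directions to unit length before and after projection is an assumption of this example rather than a detail taken from the text.

```python
import numpy as np


def conditional_direction(z1, z2):
    """Remove from z1 its component along z2, so the result is orthogonal to z2."""
    z1 = z1 / np.linalg.norm(z1)
    z2 = z2 / np.linalg.norm(z2)
    new_direction = z1 - np.dot(z1, z2) * z2
    norm = np.linalg.norm(new_direction)
    if norm < 1e-12:
        raise ValueError("z1 and z2 are (nearly) parallel; no orthogonal part remains")
    return new_direction / norm


# Toy directions: "attribute 1" partially overlaps with "attribute 2".
attr1 = np.array([1.0, 1.0, 0.0])
attr2 = np.array([0.0, 1.0, 0.0])
direction = conditional_direction(attr1, attr2)
```

Moving a latent code along `direction` changes its component along `attr1` while leaving its component along `attr2` untouched, which is the intent of conditional manipulation.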

Latent Space Disentanglement using PCA

In this project, we attempt to decompose the latent space using latent vectors obtained by projecting images into the PGGAN latent space. We use Principal Component Analysis (PCA) to perform linear dimensionality reduction. The resulting components serve as direction vectors, such that moving along them can produce manipulable attributes. We show that this can produce attributes that can be manipulated and interpolated, although the method is limited by its assumption that the latent space can be linearly disentangled. The results for "smile" and "pose" manipulation using PGGAN are shown in the Example Outputs section below.
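The PCA step can be sketched with NumPy's SVD. This is an illustration under stated assumptions, not the notebook's code: the helper names `pca_directions` and `move_along` are hypothetical, and the small `latents` array stands in for the latent vectors recovered by GAN inversion.

```python
import numpy as np


def pca_directions(latents, num_components):
    """Return (mean, principal directions, explained variances) of a set of latents."""
    mean = latents.mean(axis=0)
    centered = latents - mean
    # Rows of vt are orthonormal principal directions, sorted by singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained_variance = singular_values ** 2 / (len(latents) - 1)
    return mean, vt[:num_components], explained_variance[:num_components]


def move_along(z, direction, step):
    """Shift a latent code along a principal direction by the given step size."""
    return z + step * direction


# Stand-in latents: most of the variation lies along the first axis.
latents = np.array([
    [-2.0,  0.1, 0.0],
    [-1.0, -0.1, 0.0],
    [ 0.0,  0.0, 0.0],
    [ 1.0, -0.1, 0.0],
    [ 2.0,  0.1, 0.0],
])
mean, directions, variances = pca_directions(latents, num_components=2)
z_edited = move_along(latents[2], directions[0], step=3.0)
```

Each row of `directions` is a candidate editing direction. Whether it corresponds to a recognizable attribute such as smile or pose has to be checked by generating images along it.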

Flow-based Methods

Flow-based models have attracted a lot of attention due to their ability to compute exact log-likelihoods and to perform exact, tractable latent-variable inference. In GANs, datapoints usually cannot be directly represented in the latent space, as GANs have no encoder and might not have full support over the data distribution. This is not the case for reversible generative models and VAEs, which allow for various applications such as interpolation between datapoints and meaningful modification of existing datapoints. We use one such interpolation to manipulate latent attributes, leading to meaningful changes to existing features.
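The exact log-likelihood mentioned above comes from the change-of-variables formula. The notation below is introduced for illustration: f is the invertible flow, composed of K invertible layers f_1, ..., f_K with intermediate activations h_0 = x and h_K = z. The last line is one common way to express attribute manipulation in a flow model, where z_pos and z_neg are assumed to be average latents of images with and without the attribute and alpha is a strength parameter.

```latex
% Exact inference: every datapoint has exactly one latent code
z = f(x), \qquad x = f^{-1}(z)

% Exact log-likelihood via the change of variables
\log p_X(x) = \log p_Z\big(f(x)\big)
  + \log\left|\det\frac{\partial f(x)}{\partial x}\right|

% For a composition of K layers the log-determinants add up
\log p_X(x) = \log p_Z(z)
  + \sum_{k=1}^{K}\log\left|\det\frac{\partial h_k}{\partial h_{k-1}}\right|

% Attribute manipulation in latent space, decoded with the inverse flow
x' = f^{-1}\big(z + \alpha\,(z_{pos} - z_{neg})\big)
```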

Experiments

Setup

Follow the steps to create a conda environment and install PyTorch with CUDA enabled. Also, make sure OpenCV is installed.

Beta/DFC-VAE

To run Beta-VAE, cd into beta/vae and run python evaluate.py.

Outputs are saved under beta-vae/[model-name]_outputs.

InterFaceGAN

Link to Colab Notebook

The linked notebook contains the necessary code and sections for running InterFaceGAN along with PCA (and additional attempted ML methods).

To load pretrained weights for PGGAN trained on CelebA-HQ, download the model here and place it under the directory models/pretrain in the InterFaceGAN repository. The model will then generate interpolations for the user.

Flow-based Methods

Link to Colab Notebook

Run the GLOW_GAN_Working.ipynb notebook in Colab or any suitable Jupyter environment.

To run the notebook on the terminal:

pip install runipy

runipy GLOW_GAN_Working.ipynb

Example Outputs

VAE

Latent Arithmetic: Balding

Latent Arithmetic: Eyeglasses

Latent Arithmetic: Smiling

Latent Interpolation: Blonde Hair/Smiling

Latent Arithmetic: Eyeglasses

Latent Arithmetic: Smiling

Latent Interpolation: Blonde Hair/Smiling

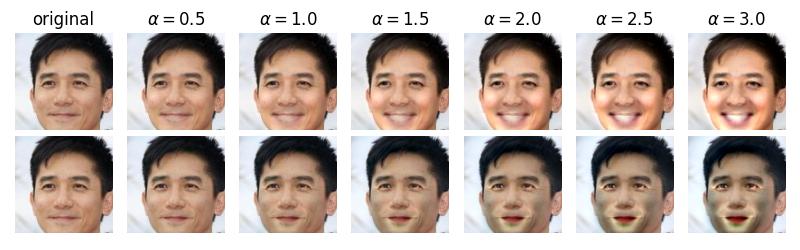

InterFaceGAN

Smile Manipulation

Pose Manipulation

Flow-Based Methods

One change we made to the existing model is the way we compute the vectors for the 40 latent attributes (bangs, age, gender, etc.). The paper treats the presence of a latent attribute as a positive vector and its absence as a negative vector, and then sums them. We instead take the average over a sample of images when calculating the latent attribute vectors.

GLOW Based Latent Interpolation: Gender

GLOW Based Latent Interpolation: Bangs

GLOW Based Latent Interpolation: Smiling

Discussion

With Beta-VAE and DFC-VAE, the results are better in terms of adding/subtracting attributes from faces using latent vector arithmetic. Further improvements include training on higher-resolution images.

From InterFaceGAN we see that latent attributes can be manipulated. However, the assumption that these attributes can be linearly disentangled may be a weak one, as other features can be altered jointly. In the smile manipulation example, we can add or subtract a smile, but other factors such as lighting are also altered.

For GLOW-based interpolation, we observe somewhat better results for some specific latent attributes when averaging the attribute vectors from several images. Despite training over 40 different attributes, we observe a disparity in how well each of them is learned. We also observed that some attributes are closely related. For example, balding is mainly observed in male images, so the latent direction learned for balding largely coincides with gender change, and the model is incapable of separating the two.