
Title: Analyzing the performance of different synthetic data generation methods on action classification for domain transfer

Authors: Riyaz Panjwani, Emily Kim

Abstract

Human activity recognition (HAR) is a broad field of study concerned with identifying the specific movement or action of a person from sensor data. The movements are often typical indoor activities such as walking, talking, standing, and sitting, but they may also be more specialized, such as activities performed in a kitchen or on a factory floor. The problem is generally framed as a univariate or multivariate time series classification task. It is challenging because there is no obvious or direct way to relate the recorded sensor data to specific human activities, and each subject may perform an activity with significant variation, which in turn produces variation in the recorded sensor data. Moreover, the paucity of videos in current action classification datasets has made it difficult to identify good video architectures, as most methods obtain similar performance on existing small-scale benchmarks.

In this project, we will compare the performance of SlowFast when trained on three datasets: real videos, videos synthesized by Liquid Warping GAN, and videos synthesized by SURREACT. We will use the action classification dataset FineGym [1] / Kinetics [2] as the benchmark dataset and select a subset of its actions for classification. SlowFast will be pretrained on the Kinetics dataset and then fine-tuned on the extended datasets. We will analyze performance by examining the confusion matrices, accuracy, class-weighted precision, and average recall.
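
To show how these metrics relate to one another, here is a minimal pure-Python sketch. It assumes that "average recall" means the unweighted (macro) mean of per-class recall, and that a class with no predictions gets precision 0; the function names are hypothetical, and in practice a library such as scikit-learn would normally be used instead.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def summarize(y_true, y_pred, labels):
    """Return (confusion matrix, accuracy, class-weighted precision, macro recall)."""
    cm = confusion_matrix(y_true, y_pred, labels)
    n = len(y_true)
    k = len(labels)
    accuracy = sum(cm[i][i] for i in range(k)) / n
    support = Counter(y_true)  # number of true samples per class
    weighted_precision = 0.0
    recalls = []
    for i, label in enumerate(labels):
        predicted = sum(cm[r][i] for r in range(k))  # column sum
        actual = sum(cm[i])  # row sum
        precision = cm[i][i] / predicted if predicted else 0.0
        recall = cm[i][i] / actual if actual else 0.0
        # Weight each class's precision by its share of the true labels.
        weighted_precision += precision * support[label] / n
        recalls.append(recall)
    average_recall = sum(recalls) / k
    return cm, accuracy, weighted_precision, average_recall
```

For example, `summarize(["walk", "walk", "sit", "sit"], ["walk", "sit", "sit", "sit"], ["walk", "sit"])` gives accuracy 0.75, class-weighted precision of about 0.833, and average recall 0.75. Weighted recall is not used here because it always equals accuracy, which is why the macro average is the more informative choice.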

Previous Work

Various action classification methods such as I3D [3] and SlowFast [4] have been developed and continue to be enhanced by researchers in the field. Like most supervised deep learning models, they are trained on manually labeled real-world data, which often lacks diversity and leads to overfitting. The most common and simplest way to improve such a dataset is data augmentation, such as spatial sampling, adding noise, applying ColorJitter, and changing the sharpness.
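
As an illustration, here is a minimal sketch of clip-level augmentation, assuming each clip is a list of grayscale frames (lists of rows) with pixel values in [0, 1]. It applies the same random crop to every frame so the spatial sampling stays consistent over time, a single random brightness factor as a simplified stand-in for ColorJitter, and additive Gaussian noise. Sharpness changes are not covered, and the function name and default parameters are hypothetical.

```python
import random


def augment_clip(frames, crop_h, crop_w, brightness=0.2, noise_std=0.02, rng=None):
    """Randomly crop, brightness-jitter, and add noise to a grayscale clip.

    The crop window and brightness factor are sampled once per clip so that
    every frame is transformed identically; noise is sampled per pixel.
    """
    rng = rng or random.Random()
    height, width = len(frames[0]), len(frames[0][0])
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    factor = rng.uniform(1.0 - brightness, 1.0 + brightness)
    out = []
    for frame in frames:
        new_frame = []
        for row in frame[top:top + crop_h]:
            new_row = []
            for value in row[left:left + crop_w]:
                value = value * factor + rng.gauss(0.0, noise_std)
                new_row.append(min(1.0, max(0.0, value)))  # clip to [0, 1]
            new_frame.append(new_row)
        out.append(new_frame)
    return out
```

Sampling the crop and brightness once per clip, rather than per frame, avoids introducing artificial flicker or jitter that the action classifier might otherwise learn as a motion cue.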

A more recent and sophisticated way to improve and diversify a dataset is to create synthetic data. In earlier work, synthetic datasets were built by artists in CGI software such as Blender and Maya, using data collected from marker-based motion capture. However, this takes a long time and requires considerable expertise.

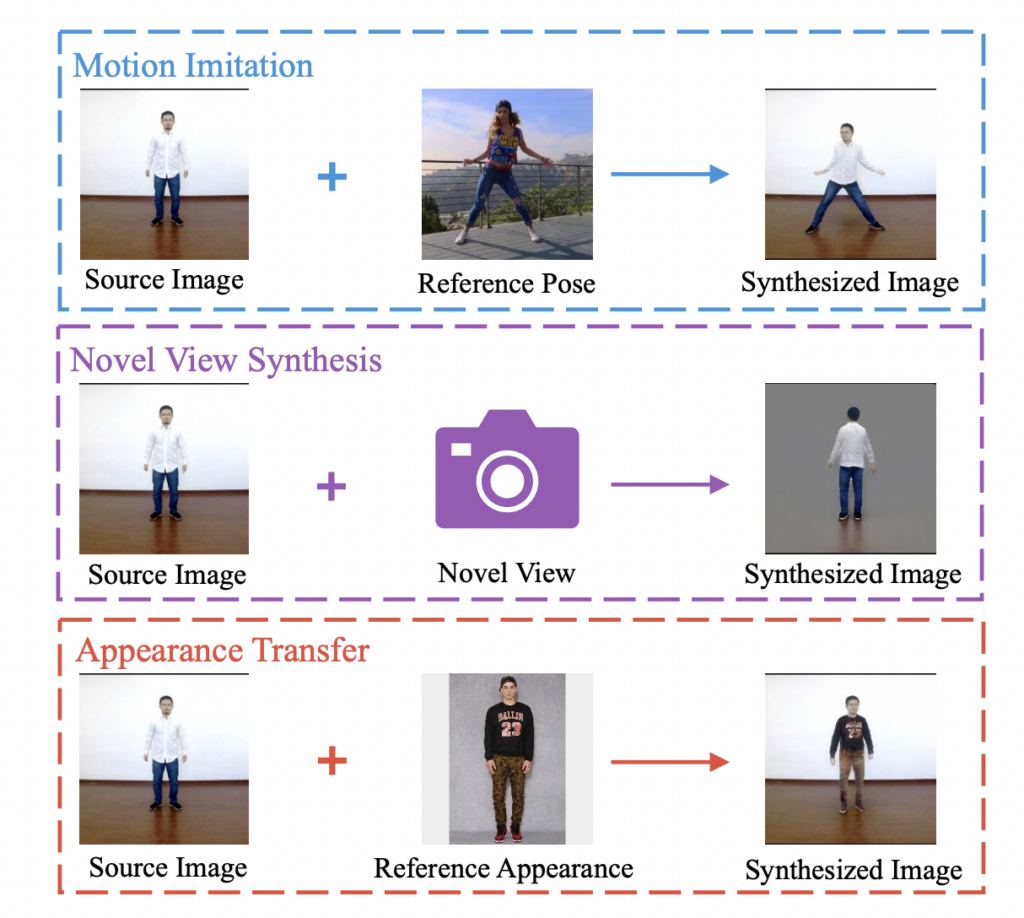

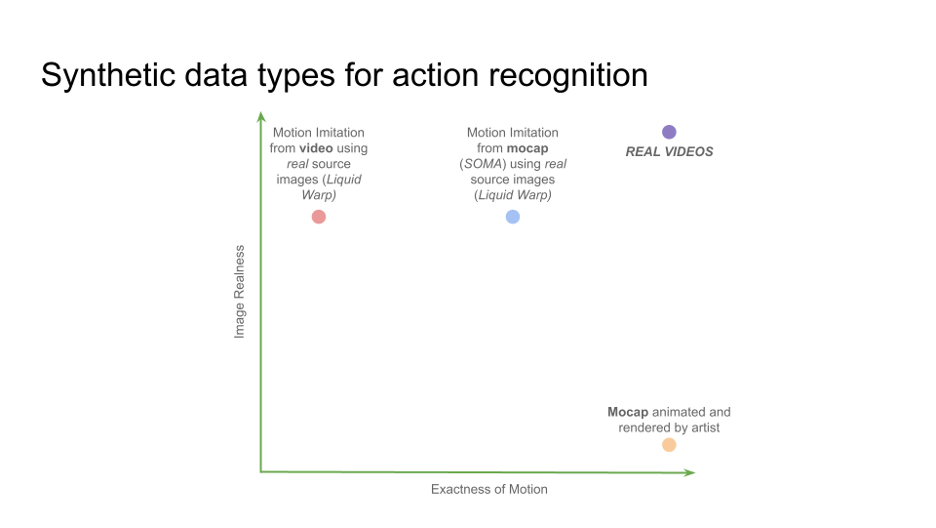

To reduce the time spent creating synthetic data, motion imitation methods such as Everybody Dance Now [5] and Liquid Warping GAN [6] have been developed. They are less accurate but more efficient, and their output looks more like real video than 3D graphics does. Methods such as SURREACT [7] have also been developed to create synthetic datasets by reconstructing human pose, changing the camera viewpoint, and placing the rendered body over various background images. The videos this method synthesizes look less realistic than those produced by the motion imitation models introduced above.
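
To illustrate the viewpoint-change idea, the sketch below rotates a reconstructed 3D skeleton about its vertical axis and projects it onto the image plane with a pinhole camera. It covers only the geometric core under simplifying assumptions (joints as `(x, y, z)` tuples in a camera-aligned frame, and a hypothetical focal length, image center, and camera distance); it is not SURREACT's implementation, which renders full textured body models.

```python
import math


def rotate_about_y(joints, angle_deg):
    """Rotate 3D joints (x, y, z) about the vertical y-axis through their centroid."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    n = len(joints)
    cx = sum(j[0] for j in joints) / n
    cz = sum(j[2] for j in joints) / n
    rotated = []
    for x, y, z in joints:
        dx, dz = x - cx, z - cz
        # Standard rotation about the y-axis; height (y) is unchanged.
        rotated.append((cx + cos_a * dx + sin_a * dz, y, cz - sin_a * dx + cos_a * dz))
    return rotated


def project(joints, focal, cx_img, cy_img, camera_distance):
    """Pinhole projection of joints placed camera_distance in front of the camera."""
    points = []
    for x, y, z in joints:
        depth = z + camera_distance
        if depth <= 0:
            raise ValueError("joint lies behind the camera")
        points.append((focal * x / depth + cx_img, focal * y / depth + cy_img))
    return points
```

Sweeping `angle_deg` over a range of values and projecting each result yields the same motion seen from many camera viewpoints, which is the kind of diversity a viewpoint-varying synthetic dataset aims for.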

Methodology

Liquid Warping GAN

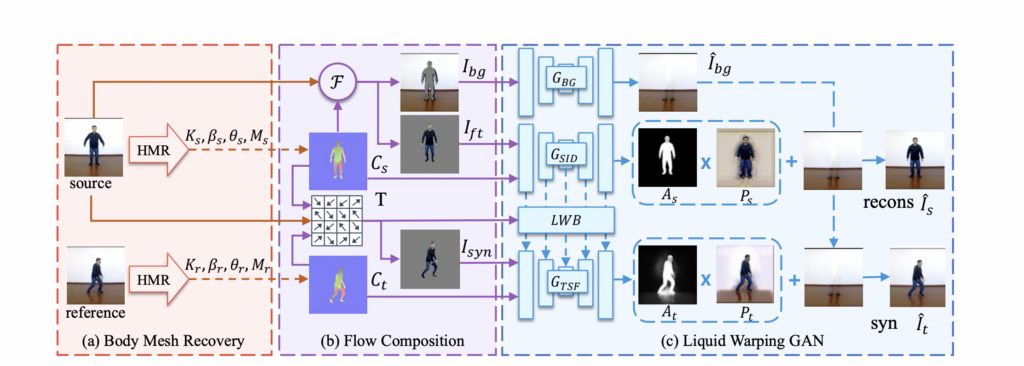

Liquid Warping GAN relies on a human mesh recovery (HMR) method to reconstruct a 3D body mesh from the input image. It consists of three stages: body mesh recovery, flow composition, and a GAN module with a Liquid Warping Block (LWB). The training pipeline is the same for different tasks, so once the model has been trained on one task, it can handle the others as well. Here, we use motion imitation as an example, as shown in Fig. 1. Denote the source image as I_s and the reference image as I_r. First, the body mesh recovery module estimates the 3D meshes of I_s and I_r and renders their correspondence maps, C_s and C_t. Next, the flow composition module calculates the transformation flow T from the two correspondence maps and their meshes projected into image space. The source image I_s is thereby decomposed into a front image I_ft and a masked background I_bg, and warped to I_syn based on the transformation flow T. Finally, the GAN module uses a generator with three streams: G_BG generates the background image, G_SID reconstructs the source image I_s, and G_TSF synthesizes the image under the reference condition. To preserve the details of the source image, the authors propose a novel Liquid Warping Block (LWB), which propagates the source features of G_SID into G_TSF at several layers. Three discriminators, D_Global, D_Body, and D_Head, evaluate the realness of the whole image, the body, and the head, respectively.
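
Flow-based warping of this kind is typically implemented with a bilinear sampler. The sketch below is a simplified, pure-Python backward warp, assuming the transformation flow T is given as a per-pixel grid of source coordinates and images are 2D grayscale lists; in a real model the same operation runs on feature tensors, for example with `torch.nn.functional.grid_sample`. The function names are hypothetical.

```python
import math


def bilinear_sample(image, r, c):
    """Sample a 2D grayscale image at fractional (row, col); outside pixels count as 0."""
    h, w = len(image), len(image[0])
    r0, c0 = math.floor(r), math.floor(c)
    dr, dc = r - r0, c - c0
    value = 0.0
    # Blend the four neighbouring pixels, weighted by proximity.
    for rr, wr in ((r0, 1.0 - dr), (r0 + 1, dr)):
        for cc, wc in ((c0, 1.0 - dc), (c0 + 1, dc)):
            if 0 <= rr < h and 0 <= cc < w:
                value += wr * wc * image[rr][cc]
    return value


def warp(source, flow):
    """Backward warping: flow[r][c] holds the source (row, col) that target pixel (r, c) copies."""
    return [[bilinear_sample(source, sr, sc) for (sr, sc) in row] for row in flow]
```

Backward warping (looking up, for each target pixel, where it comes from in the source) is used instead of forward warping because it leaves no holes in the output: every target pixel receives a value.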

The following diagram illustrates the motion imitation performed by Liquid Warping GAN.

Our Work

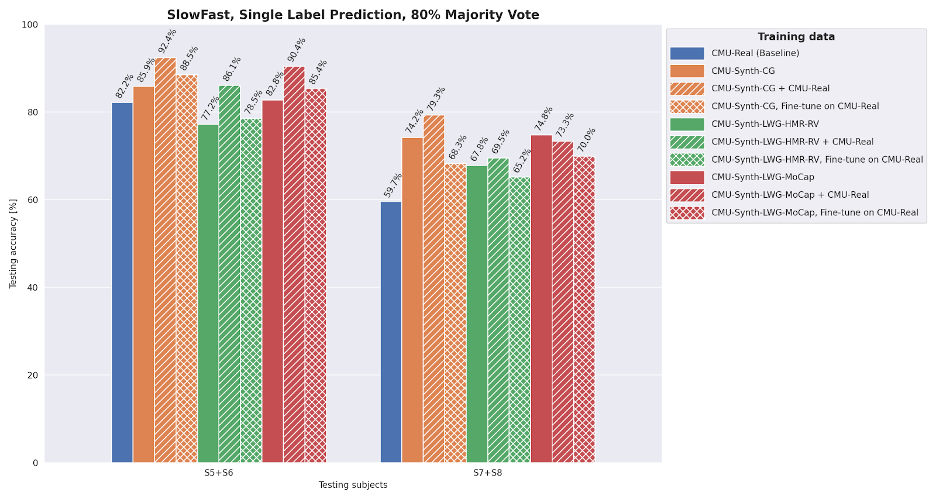

We modified the Liquid Warping GAN architecture to generate videos from motion capture (MoCap) data in order to improve motion quality. Instead of inferring the 3D mesh with HMR, we reconstructed it from raw MoCap data, following the methods of SOMA for the MoCap processing, and used the result to fine-tune Liquid Warping GAN. MoCap is recorded at a higher frame rate and captures motion directly as 3D points, whereas HMR in the vanilla Liquid Warping GAN infers 3D motion from video, which makes it difficult to recover occluded parts of the body. We benchmarked the results against various models using SlowFast, an activity recognition model, with single-label prediction and an 80% majority vote. We found that the videos generated this way were of significantly higher quality than those generated from plain RGB videos.
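
The exact voting procedure is not spelled out here. One plausible reading, sketched below purely as an assumption, is that SlowFast predicts one label per clip of a video, and the video receives the most frequent label only if that label accounts for at least 80% of the clip predictions; otherwise no label is assigned. The function name is hypothetical.

```python
from collections import Counter


def majority_vote(clip_predictions, threshold=0.8):
    """Return the most frequent label if it holds at least `threshold` of the votes, else None."""
    if not clip_predictions:
        return None
    label, count = Counter(clip_predictions).most_common(1)[0]
    return label if count / len(clip_predictions) >= threshold else None
```

For instance, four "wave" clips and one "idle" clip (an 80% share) yield "wave", while a 3-to-2 split yields `None`. Under this reading, an abstention would be counted as an incorrect prediction when computing accuracy.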

We found that motion imitation driven by MoCap (SOMA) with real source images (Liquid Warping GAN) produced more realistic images and more accurate motion, as seen below.

Results

We had 9 subjects, each evaluated on 5 gestures plus an Idle class, as shown below.

The following are the results of training on real videos. In Figure 4 (b), we used Liquid Warping GAN to generate a video from the real video in Figure 4 (a). Figure 4 (c) shows an artistically rendered video animated with MoCap, which has high motion accuracy but looks less realistic than the Liquid Warping GAN output.

Comparing the two videos side by side makes the difference clearer. Notice that the direction the palms face is corrected when the video is generated from motion capture.

Below are the results of testing with SlowFast, an activity recognition model, to benchmark our method's performance on various gestures.

Conclusion & Future Work

Synthetic video generation has a few limitations. First, inconsistency of the gestures across subjects can hamper the model's performance. Moreover, diverse idle frames do not help the model generalize better for activity classification. Occlusions from the environment may also make it harder to generate high-quality videos. Lastly, there may not be enough real videos, which can lead to mode collapse while training the model.

We noticed in our experiments that high-quality synthetic data augmentation can often help the model converge faster. Because a gesture can vary significantly from person to person, synthetic data helps generate normalized videos. Real videos may also contain many idle frames, which contribute little to model learning. Resolution and camera setup may be important attributes for real videos but not for synthetic ones. We plan to extend this methodology to other actions and subjects and to benchmark its performance against other novel methods.
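
One way to act on the idle-frame observation is to drop low-motion frames before training. The sketch below is a hypothetical heuristic, not part of the method described in this report: it assumes per-frame 3D joint positions (for example, from MoCap) and keeps a frame only if the mean joint displacement from the previous frame reaches a chosen threshold, whose value would need tuning.

```python
import math


def drop_idle_frames(poses, min_motion):
    """Return indices of frames whose mean joint displacement from the previous frame is >= min_motion.

    poses: list of frames, each a list of (x, y, z) joint positions.
    """
    if not poses:
        return []
    keep = [0]  # always keep the first frame as a reference
    for t in range(1, len(poses)):
        prev, cur = poses[t - 1], poses[t]
        displacement = sum(math.dist(a, b) for a, b in zip(prev, cur)) / len(cur)
        if displacement >= min_motion:
            keep.append(t)
    return keep
```

Measuring motion on joint positions rather than raw pixels makes the filter insensitive to lighting changes and background clutter, but it requires a pose source, so it fits synthetic or MoCap-driven data more naturally than raw real videos.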

CREDITS

[1] FineGym: https://sdolivia.github.io/FineGym/

[2] Kinetics: https://arxiv.org/pdf/1705.06950.pdf

[3] I3D: https://arxiv.org/pdf/1705.07750.pdf

[4] SlowFast: https://arxiv.org/pdf/1812.03982.pdf

[5] Everybody Dance Now: https://arxiv.org/pdf/1808.07371.pdf

[6] Liquid Warping GAN: https://arxiv.org/pdf/2011.09055.pdf

[7] SURREACT: https://www.di.ens.fr/willow/research/surreact/

[8] SOMA: https://github.com/nghorbani/soma