Author: Riyaz Panjwani

EXPLANATION

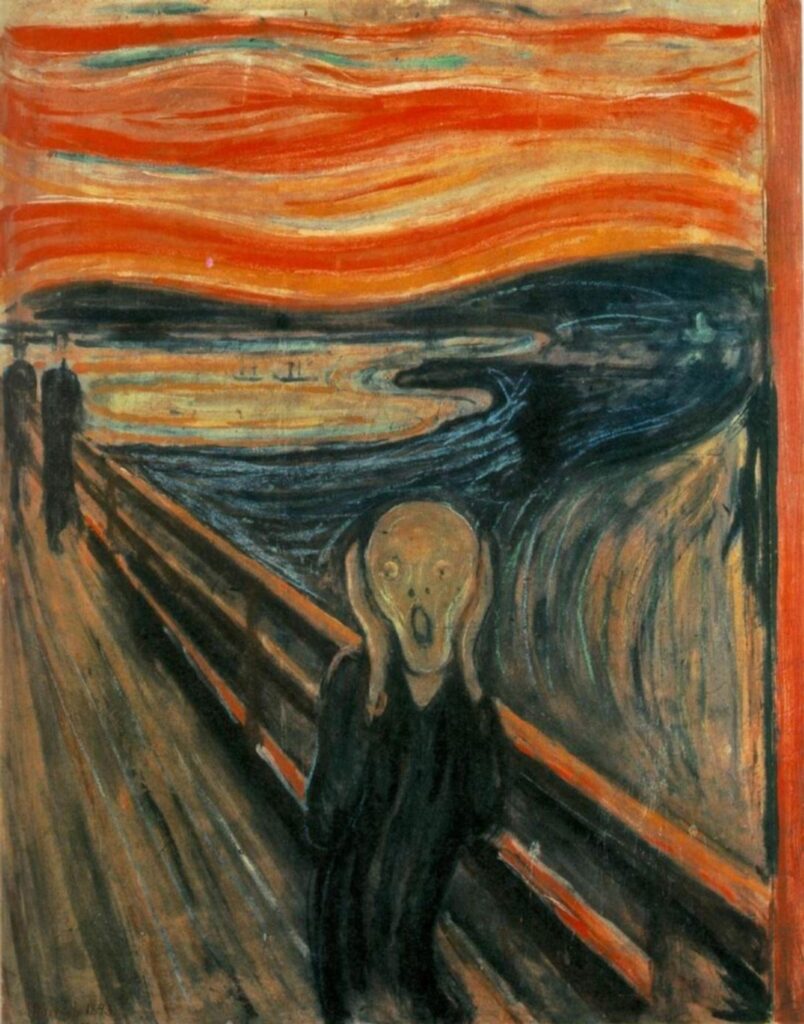

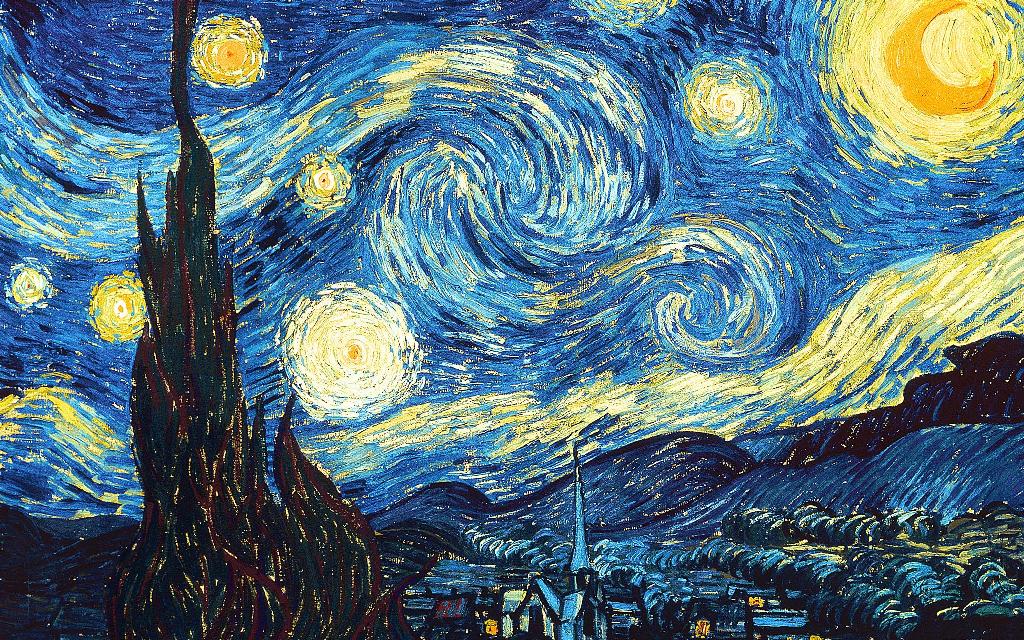

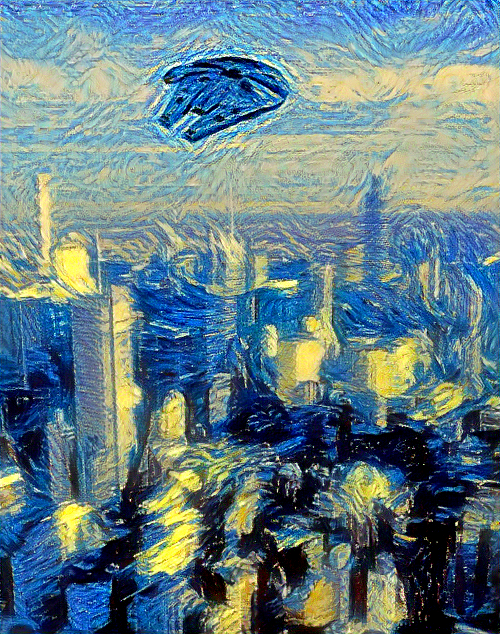

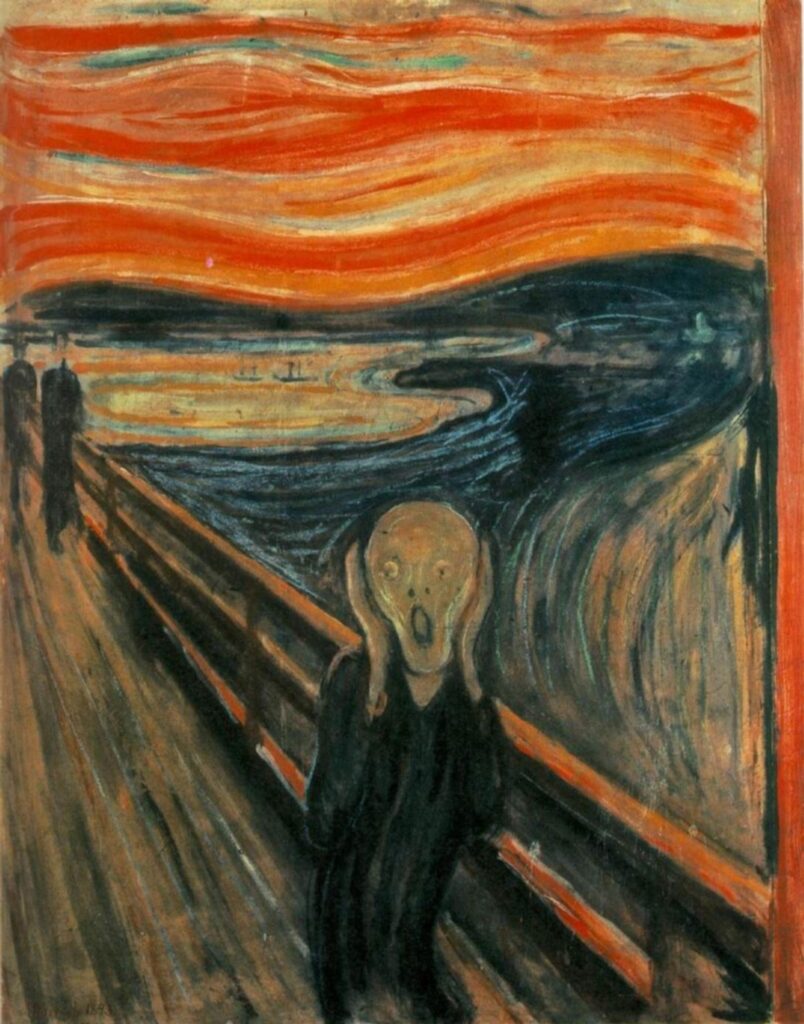

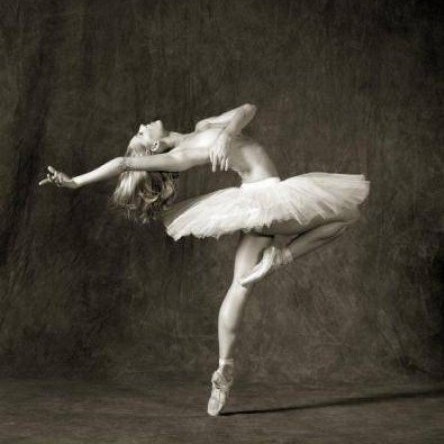

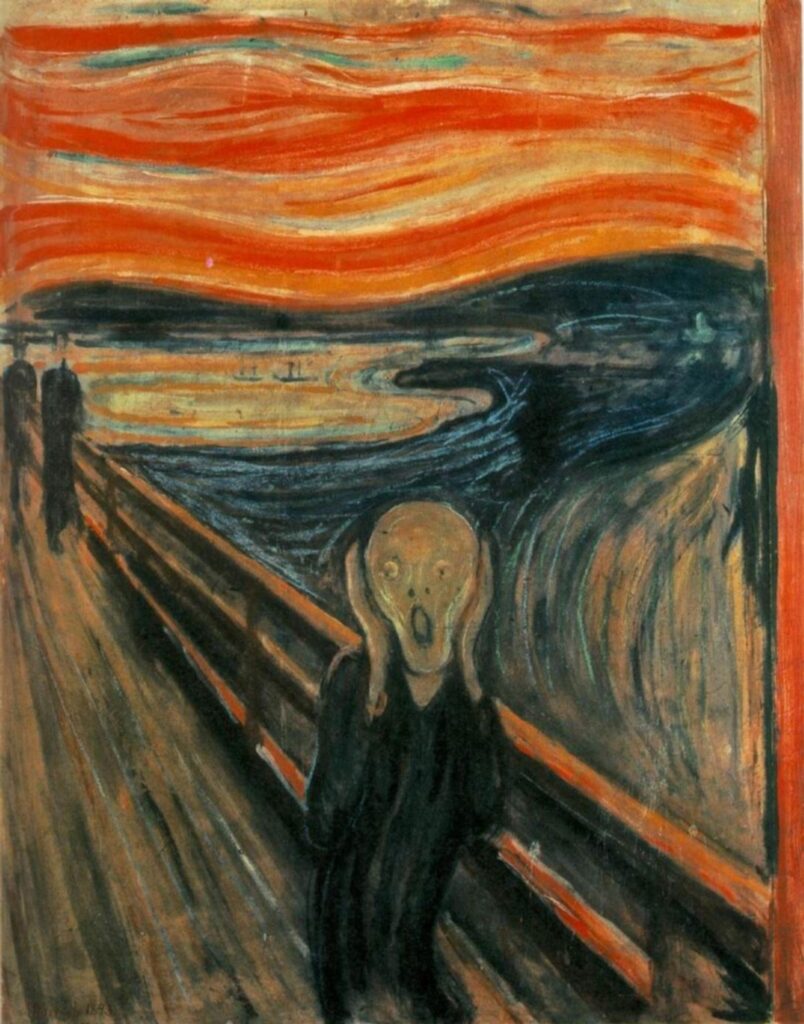

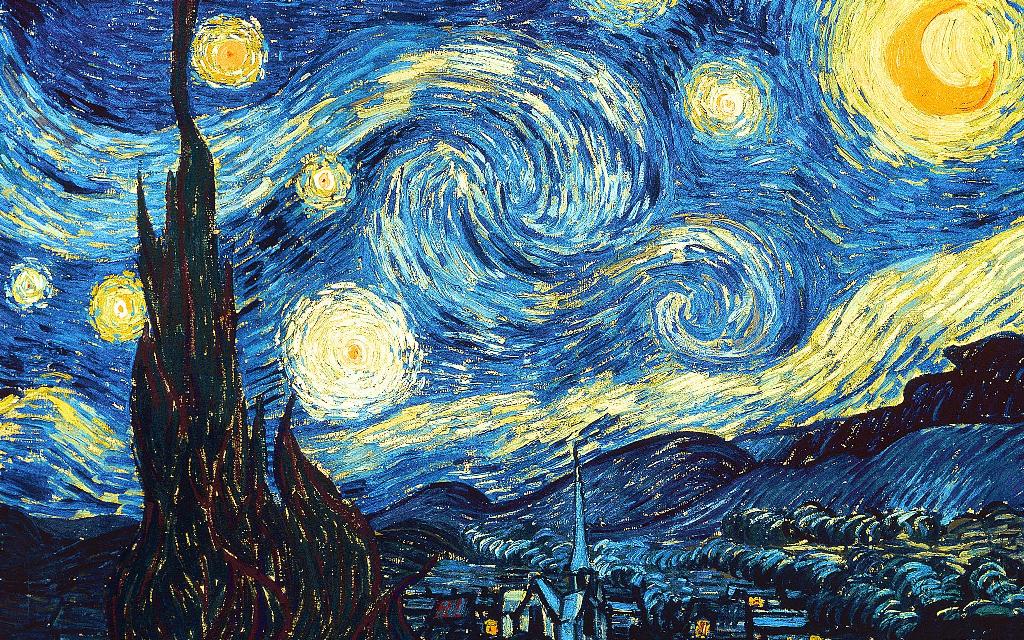

In this assignment, we implement neural style transfer, which renders specific content in a chosen artistic style, for example cat images in the Ukiyo-e style. The algorithm takes a content image, a style image, and an input image. The input image is optimized to match the content image in content space and the style image in style space.

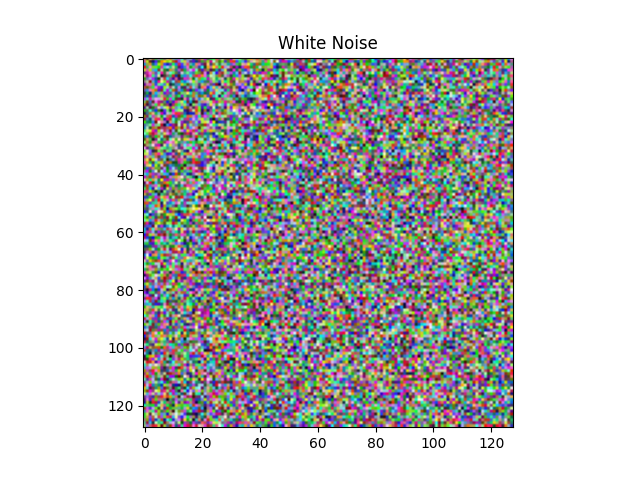

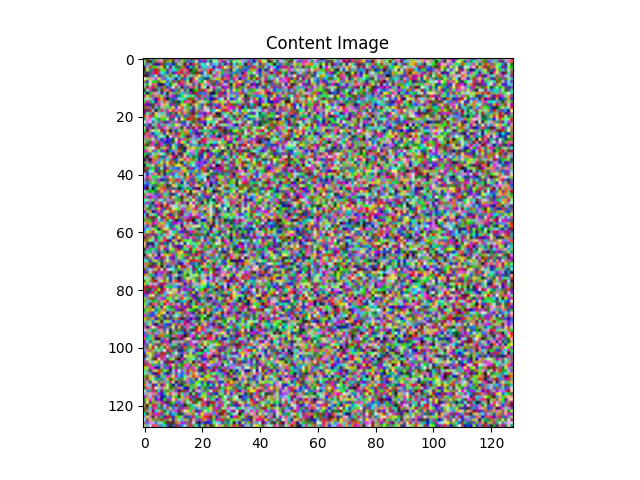

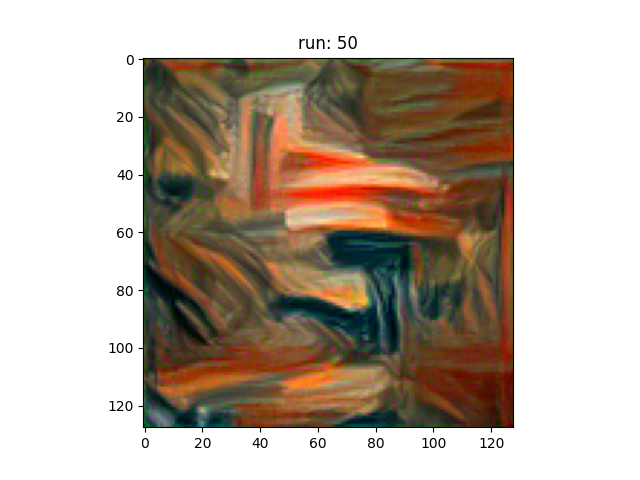

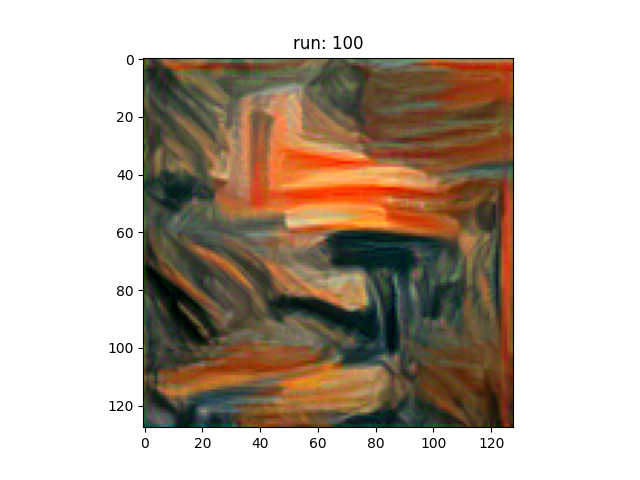

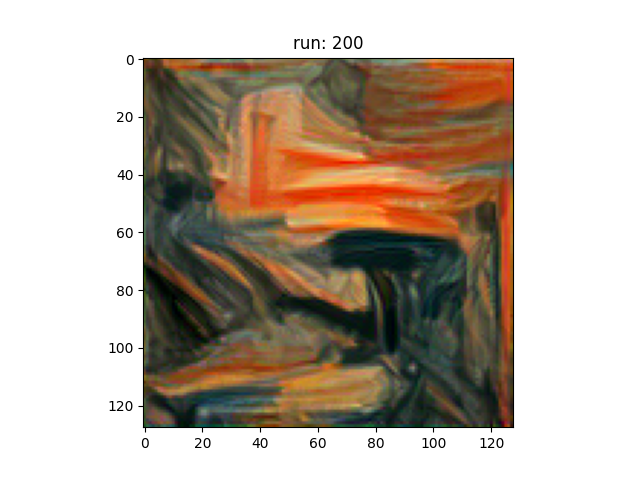

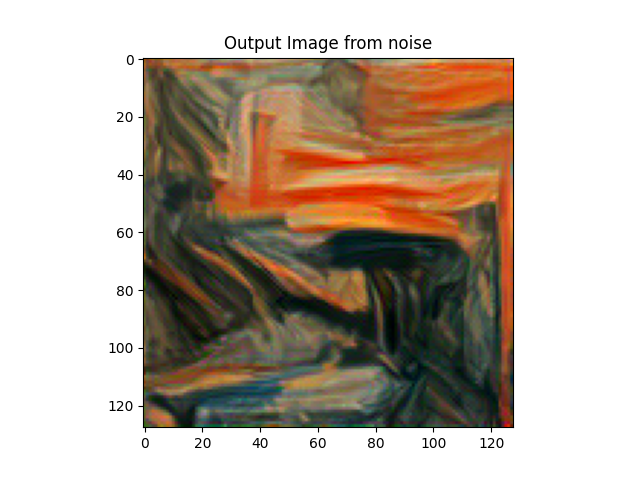

In the first part of the assignment, we start from random noise and optimize it in content space only. In the second part, we set content aside and optimize only for style in order to generate textures, which builds intuition for the connection between style-space distance and the Gram matrix. Lastly, we combine these pieces to perform neural style transfer.

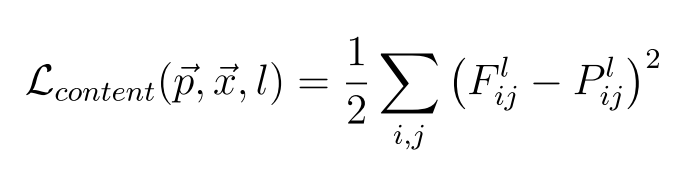

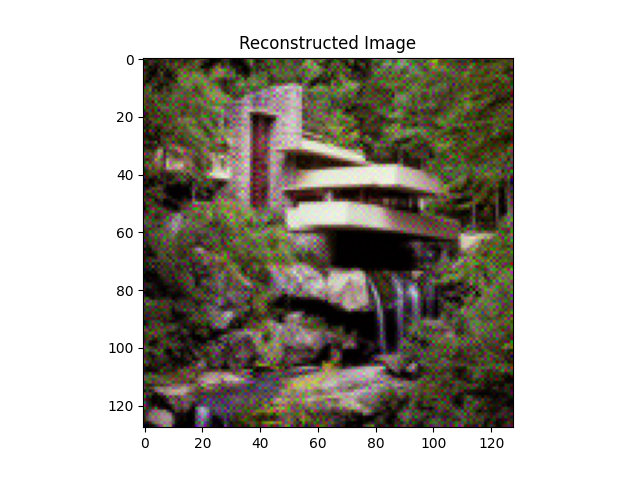

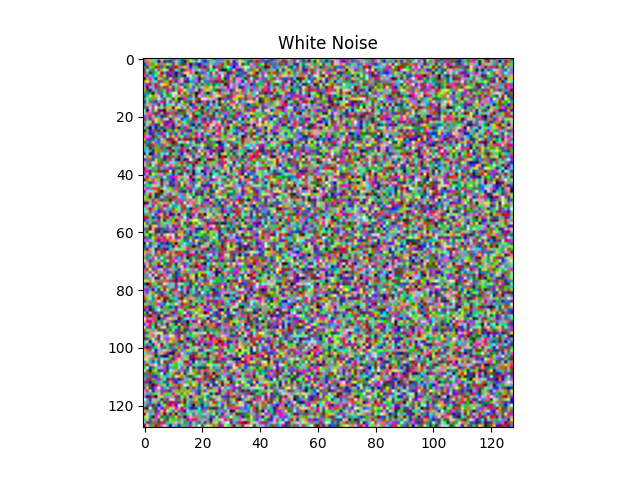

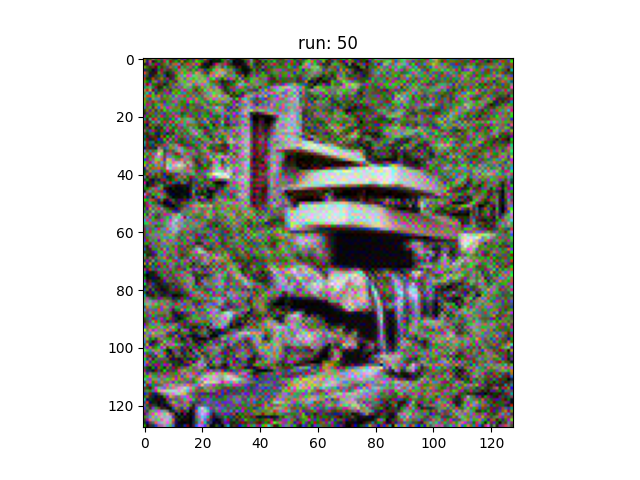

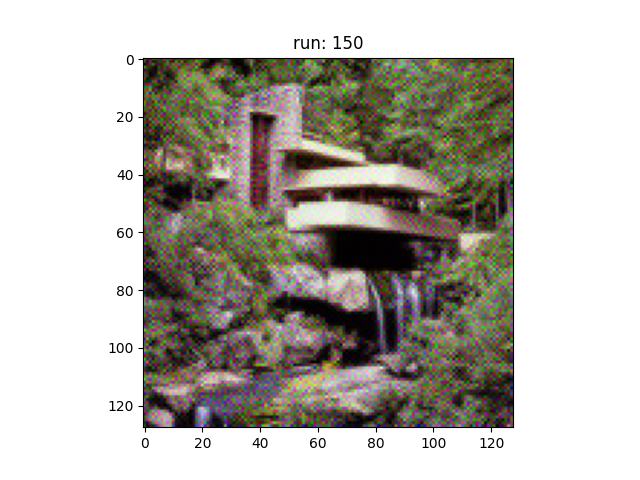

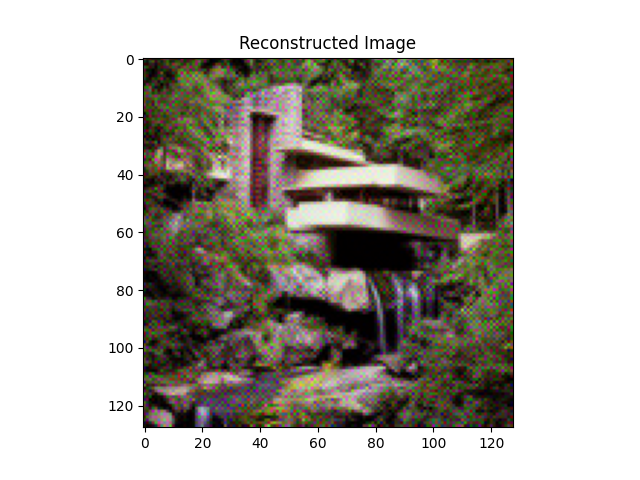

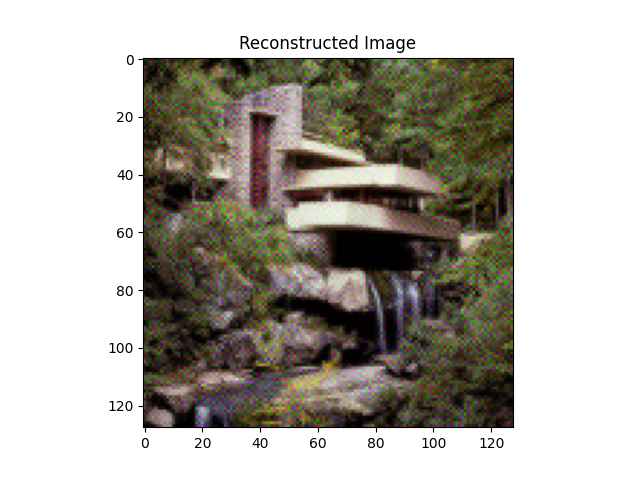

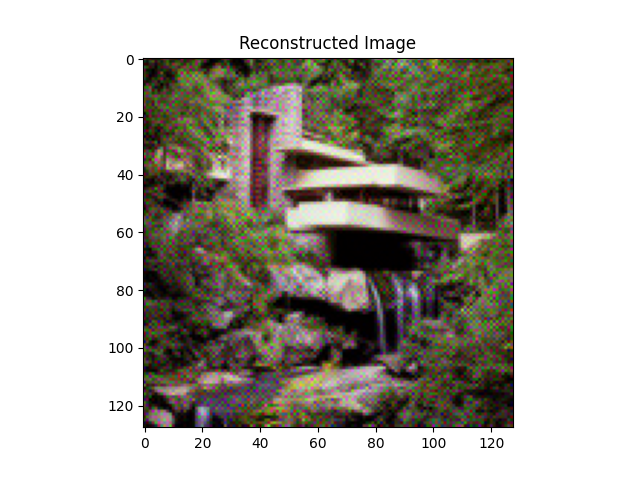

Part 1: Content Reconstruction

We implement a content-space loss and optimize a random noise image with respect to the content loss only. The content loss between two images is given below:

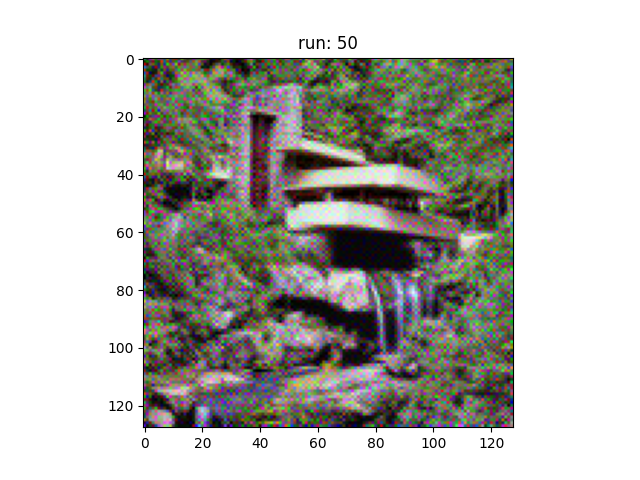

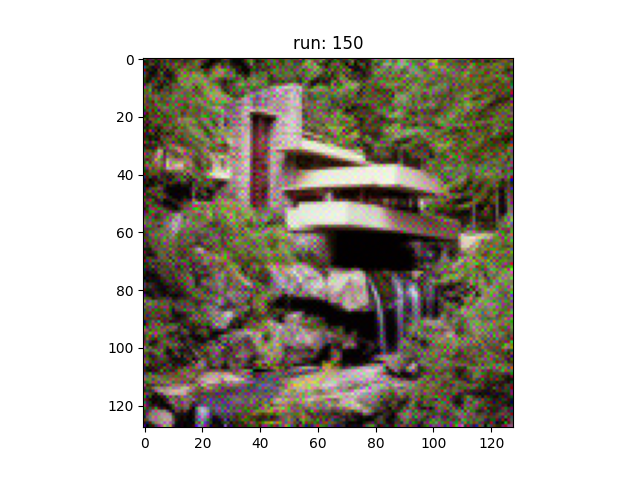
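As a rough illustration of Part 1, the sketch below assumes the content loss is the mean squared error between two feature maps, which is a common choice but may differ from the exact normalization used in this assignment. The function names (`content_loss`, `content_loss_grad`, `reconstruct`) are hypothetical, and the "features" are taken to be the image itself (an identity feature extractor) so the example stays self-contained.

```python
import numpy as np

def content_loss(input_features, target_features):
    """Mean squared error between two feature maps of identical shape."""
    a = np.asarray(input_features, dtype=np.float64)
    b = np.asarray(target_features, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("feature maps must have the same shape")
    return float(np.mean((a - b) ** 2))

def content_loss_grad(input_features, target_features):
    """Gradient of content_loss with respect to input_features."""
    diff = (np.asarray(input_features, dtype=np.float64)
            - np.asarray(target_features, dtype=np.float64))
    return 2.0 * diff / diff.size

def reconstruct(target_features, steps=50, seed=0):
    """Optimize random noise toward the target using the content loss only.

    Assumption: identity features, so plain gradient descent is enough.
    """
    target = np.asarray(target_features, dtype=np.float64)
    rng = np.random.default_rng(seed)
    x = rng.normal(size=target.shape)   # random noise start
    lr = 0.25 * target.size             # offsets the 1/N factor in the gradient
    for _ in range(steps):
        x -= lr * content_loss_grad(x, target)
    return x

target = np.random.default_rng(1).random((3, 8, 8))
recon = reconstruct(target)
print(content_loss(recon, target))  # a value very close to zero
```

In the real pipeline the features would come from a chosen layer of a pre-trained network, and the gradient would be obtained by automatic differentiation rather than by hand.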

Results

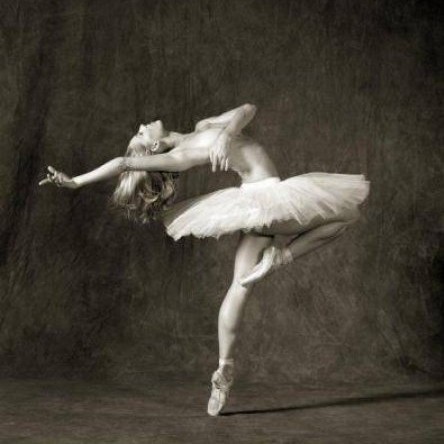

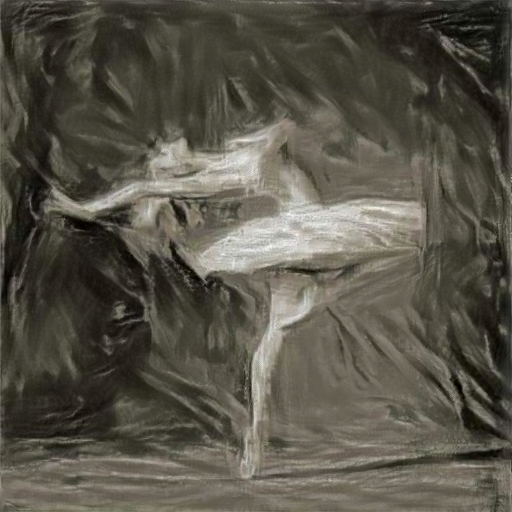

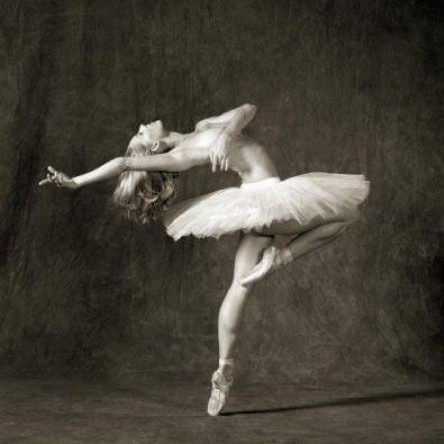

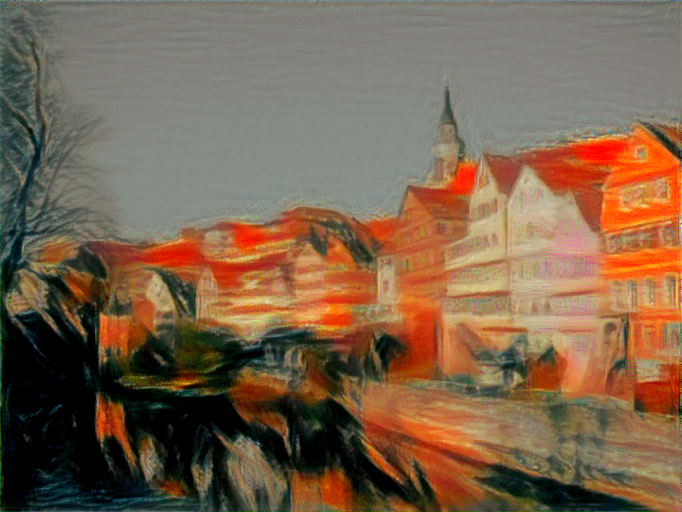

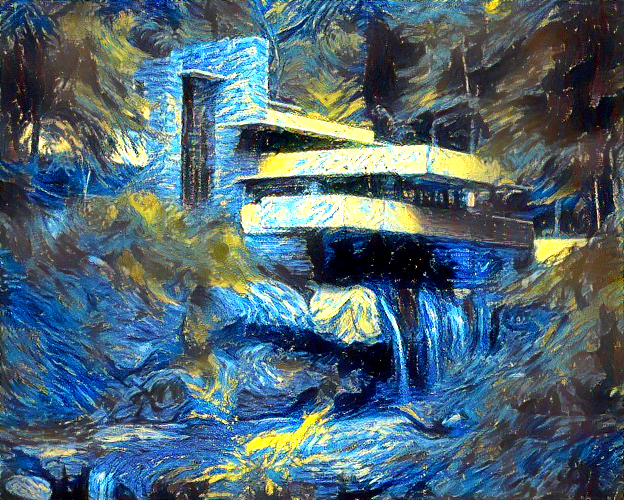

Side by Side comparison

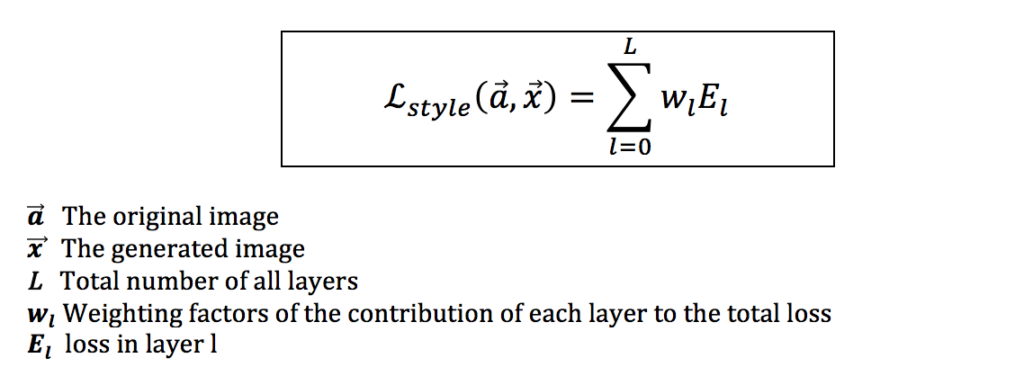

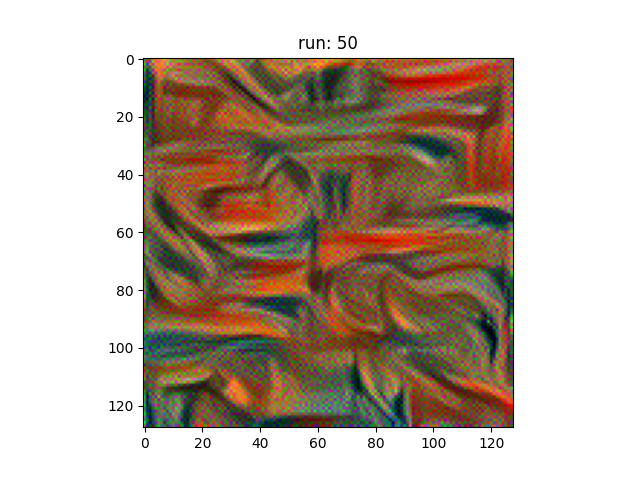

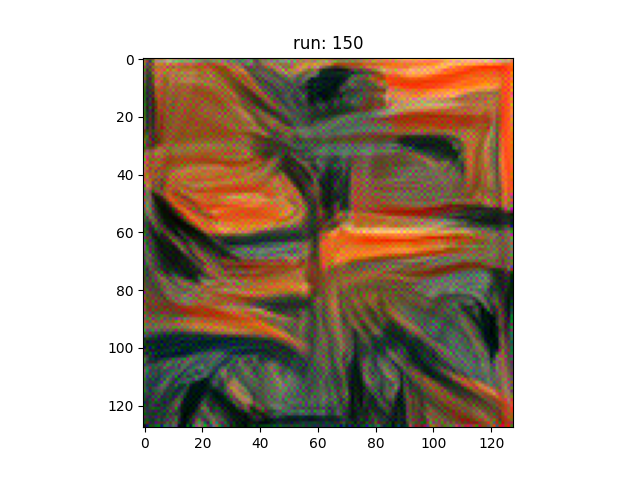

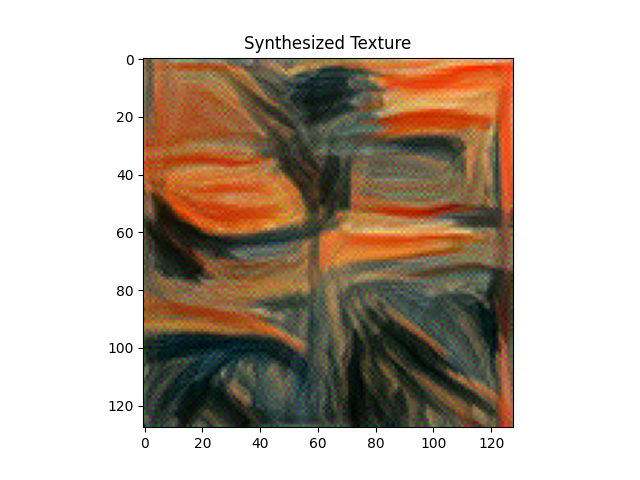

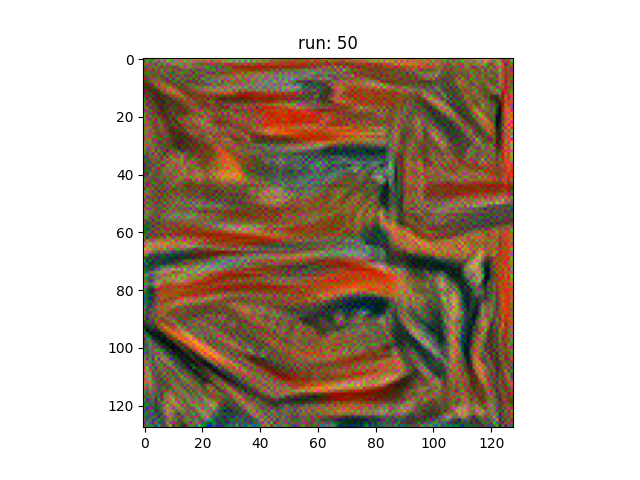

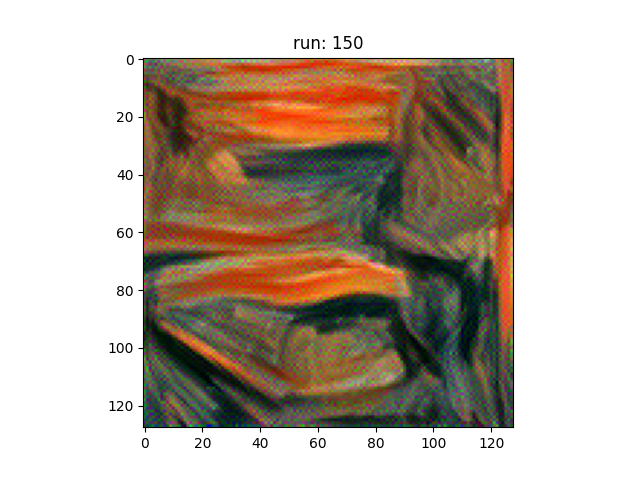

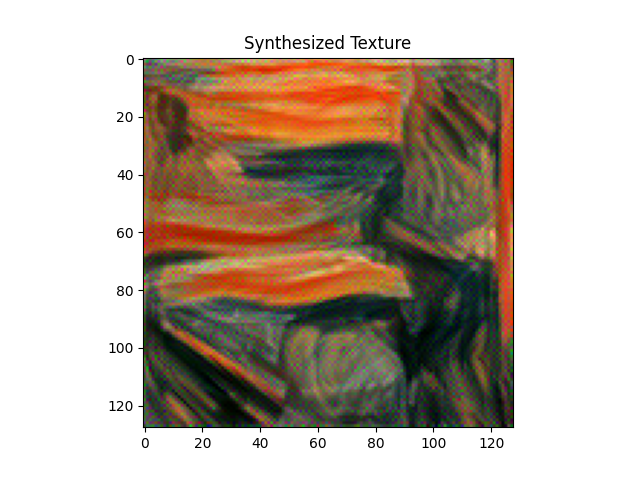

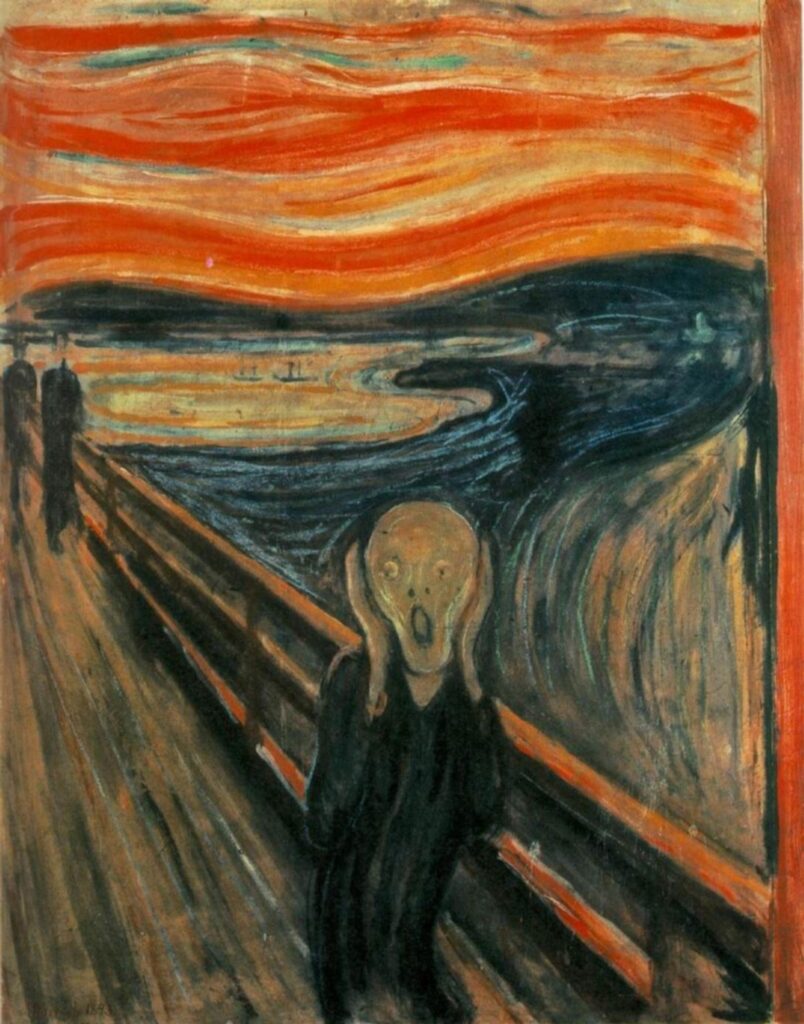

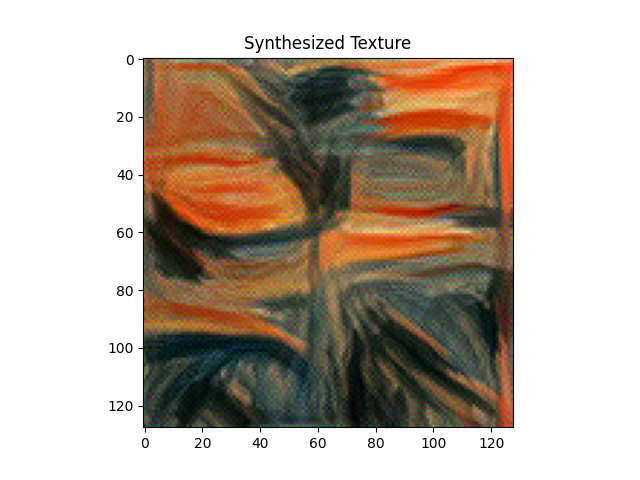

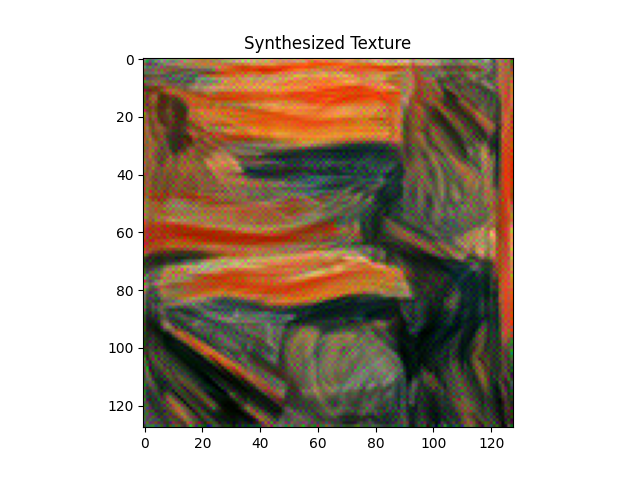

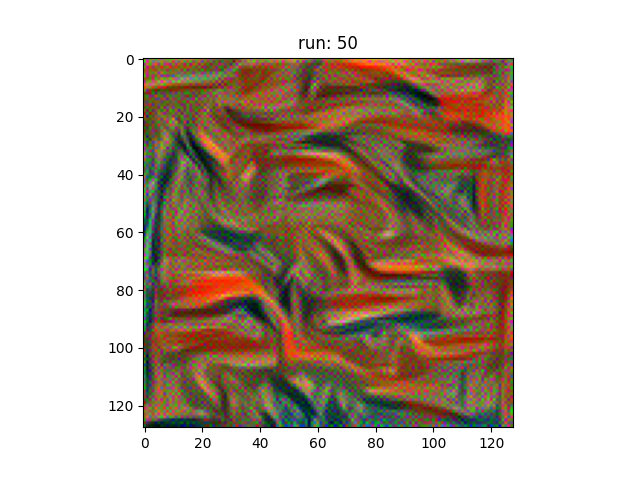

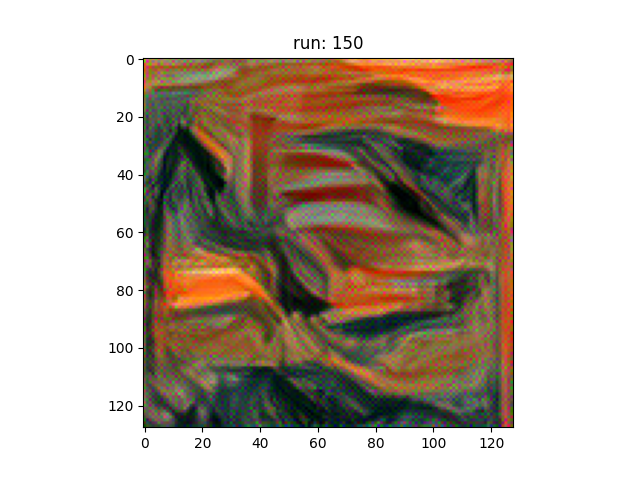

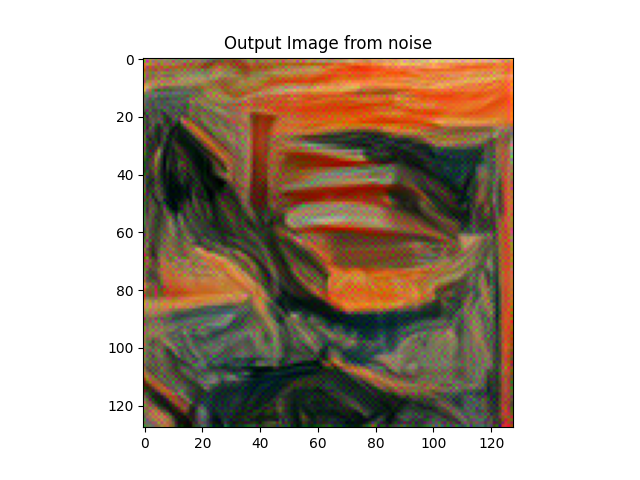

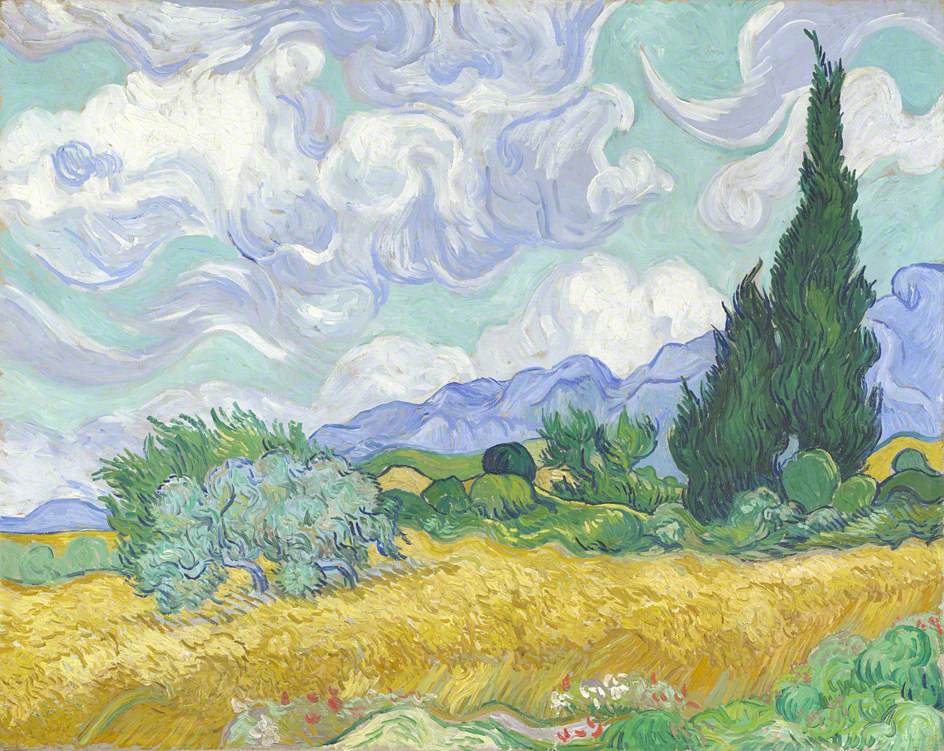

Part 2: Texture Synthesis

To perform texture synthesis, we apply the style loss at conv layers 1, 2, 3, 4, and 5, since together they capture most of the texture of the style image. As in Part 1, the input is random noise.

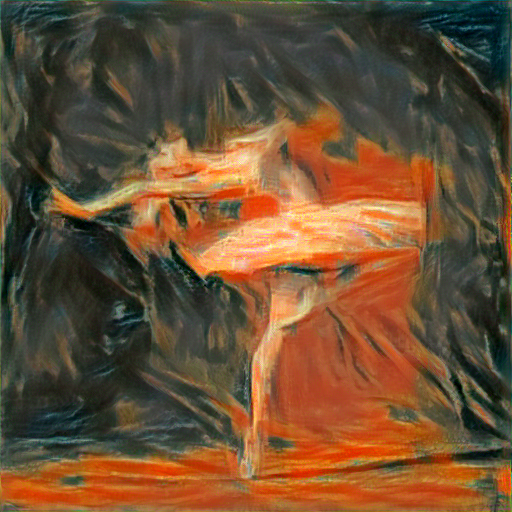

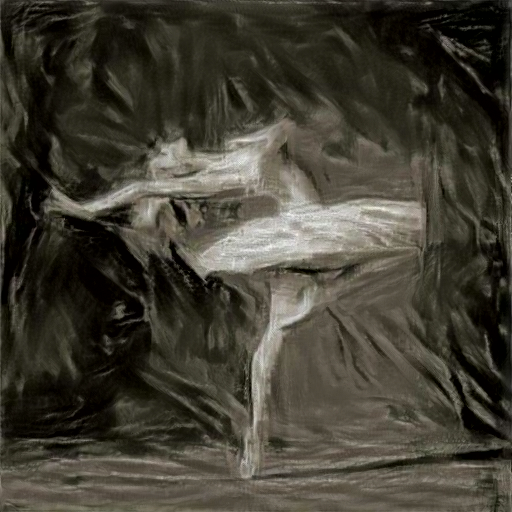
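The link between style distance and the Gram matrix can be sketched as follows. This is a minimal NumPy version under stated assumptions: features have shape `(C, H, W)`, the Gram matrix is normalized by `C * H * W`, and the style loss is the mean squared difference of Gram matrices. The names `gram_matrix` and `style_loss` are hypothetical, and the normalization used in the assignment may differ.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    f = np.asarray(features, dtype=np.float64)
    c, h, w = f.shape
    flat = f.reshape(c, h * w)          # one row per channel
    return flat @ flat.T / (c * h * w)  # (C, C), normalized by feature count

def style_loss(input_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    g_in = gram_matrix(input_features)
    g_style = gram_matrix(style_features)
    return float(np.mean((g_in - g_style) ** 2))

features = np.random.default_rng(0).random((3, 4, 4))
flipped = features[:, ::-1, :]          # same values, different spatial layout
print(style_loss(features, flipped))    # essentially zero
```

Because the Gram matrix sums over all spatial positions, rearranging the pixels of a feature map leaves it unchanged. That is why this loss captures texture statistics while ignoring where things appear in the image.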

Results

Side by Side comparison

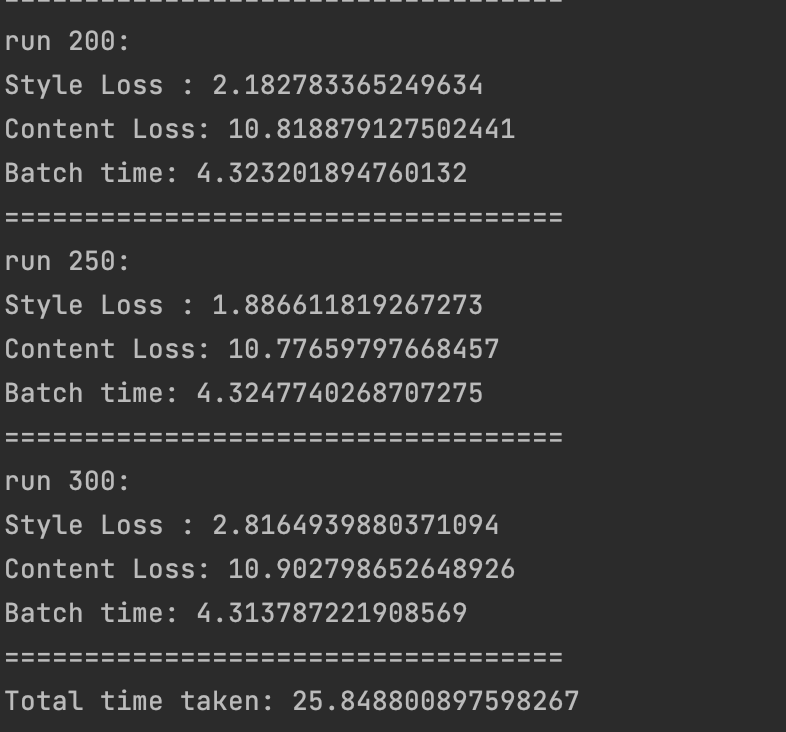

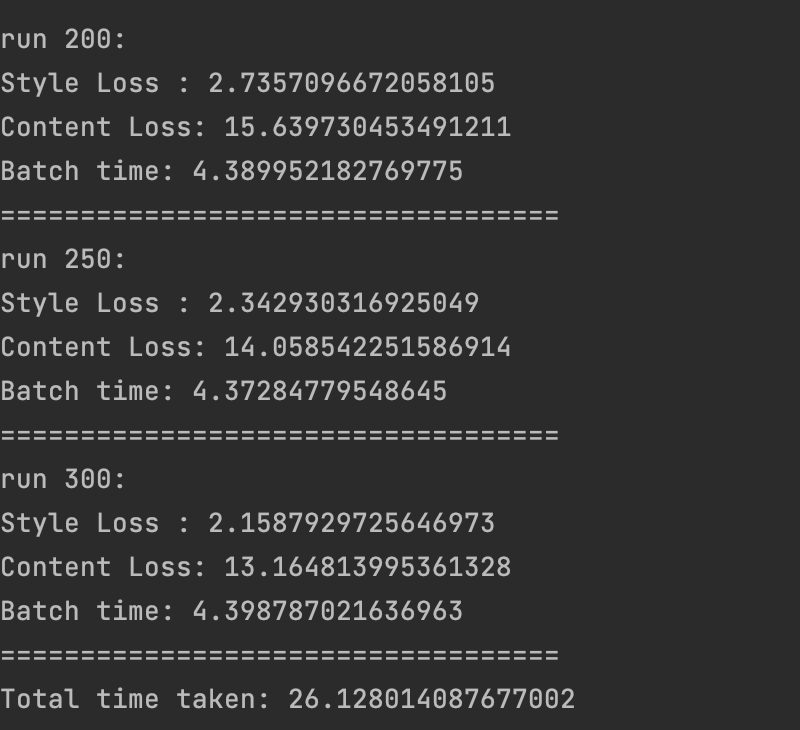

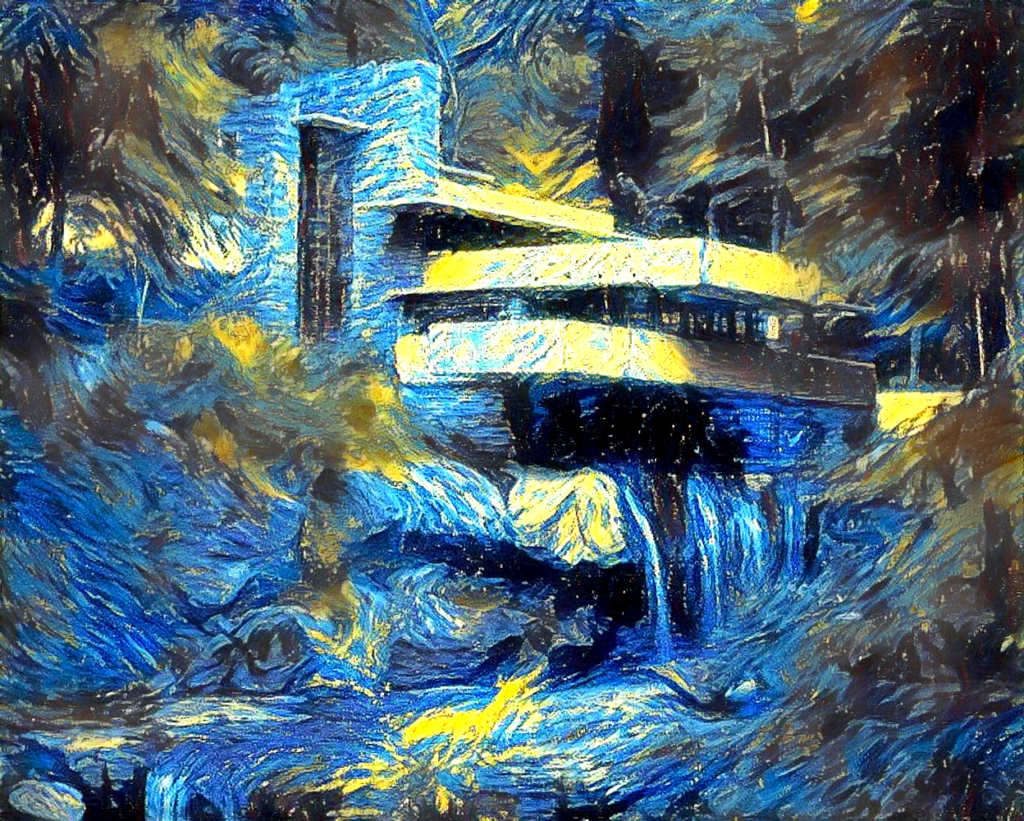

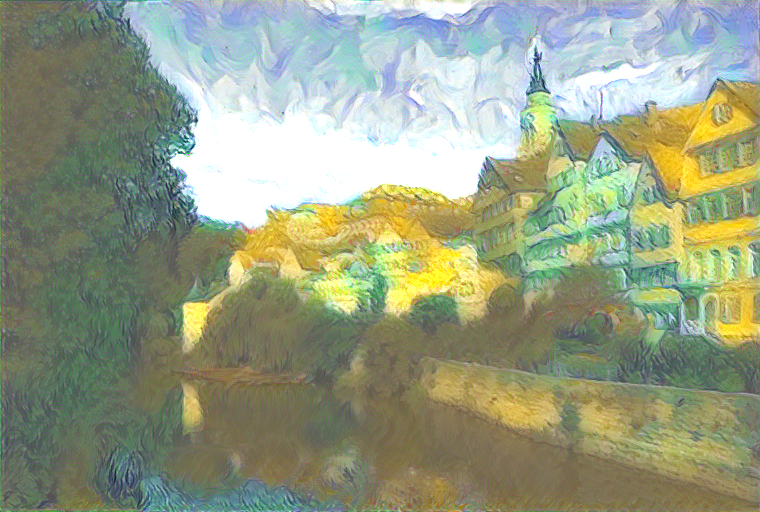

Part 3: Style Transfer

On the left is the time taken when the input is initialized with the content image; on the right, the input is initialized with a noise image. With the noise input, the content loss is very high initially and the batch size oscillates, while with the content-image input it is fairly consistent, as expected. Note that this training was carried out on CPUs; we found that training was much faster on GPUs.
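Part 3 combines the content loss from Part 1 with the style loss from Part 2. A common way to write the combined objective is the weighted sum below. The symbols are notation introduced here for illustration and are not taken from the original write-up: $x$ is the input image being optimized, $c$ the content image, $s$ the style image, $l$ ranges over the style layers, and $\alpha$, $\beta$ are assumed weighting hyper-parameters.

```latex
\mathcal{L}_{\text{total}}(x)
  = \alpha \, \mathcal{L}_{\text{content}}(x, c)
  + \beta \sum_{l} \mathcal{L}_{\text{style}}^{(l)}(x, s)
```

Under this formulation, initializing $x$ with the content image makes the content term start at zero, which is consistent with the much higher initial content loss observed for the noise input.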

Results

Hyper-parameters were tuned over the mean and standard deviation of the noise image, the number of epochs, and the convolution layers used for the style and content losses. The best results are reported below. Quality improved significantly when we added histogram loss and trained for longer, and runs were faster on GPUs than on CPUs.
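One way the noise initialization with a tunable mean and standard deviation could look is sketched below. The function name `make_noise_image`, the default values, and the clipping to `[0, 1]` are assumptions made for illustration, not settings reported in this write-up.

```python
import numpy as np

def make_noise_image(shape, mean=0.5, std=0.1, seed=None):
    """Gaussian noise image with the given mean and std, clipped to [0, 1]."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(loc=mean, scale=std, size=shape)
    return np.clip(noise, 0.0, 1.0)   # keep values in a valid image range

# Hypothetical example: a 3-channel 16x16 starting image.
start = make_noise_image((3, 16, 16), mean=0.5, std=0.1, seed=0)
print(start.shape, float(start.mean()), float(start.std()))
```

Sweeping `mean` and `std` over a small grid and comparing the resulting stylizations is one straightforward way to tune these two values.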

BELLS & WHISTLES

Poisson Blending + NST

Preserve Luminance of the Content Image

Histogram Loss
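The idea behind a histogram loss can be sketched as follows, loosely following the histogram-loss paper listed in the credits (https://arxiv.org/pdf/1701.08893.pdf). Each channel of the input activations is remapped so that its value distribution matches the corresponding style channel, and the loss penalizes the distance between the activations and their remapped version. The function names, the per-channel rank-based matching, and the mean normalization are assumptions for illustration and are not necessarily what was implemented here.

```python
import numpy as np

def match_histogram(source, reference):
    """Remap source values so their distribution follows that of reference."""
    src = np.asarray(source, dtype=np.float64)
    ref_sorted = np.sort(np.asarray(reference, dtype=np.float64).ravel())
    src_flat = src.ravel()
    order = np.argsort(src_flat, kind="stable")   # rank of each source value
    # Quantile position of each rank, looked up in the reference distribution.
    src_q = (np.arange(src_flat.size) + 0.5) / src_flat.size
    ref_q = (np.arange(ref_sorted.size) + 0.5) / ref_sorted.size
    matched_sorted = np.interp(src_q, ref_q, ref_sorted)
    matched = np.empty_like(src_flat)
    matched[order] = matched_sorted               # put values back in place
    return matched.reshape(src.shape)

def histogram_loss(input_features, style_features):
    """Mean squared distance between (C, H, W) features and their remap."""
    inp = np.asarray(input_features, dtype=np.float64)
    sty = np.asarray(style_features, dtype=np.float64)
    remapped = np.stack([match_histogram(i, s) for i, s in zip(inp, sty)])
    return float(np.mean((inp - remapped) ** 2))

feats = np.random.default_rng(0).random((2, 4, 4))
print(histogram_loss(feats, feats))         # identical distributions
print(histogram_loss(feats, feats + 1.0))   # distribution shifted by one
```

In an optimization loop the remapped activations would be treated as a fixed target at each step, so the gradient only flows through the input activations.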

Mixed Transfer

Super Resolution

Controlling Perceptual Factors in Neural Style Transfer

NST on Videos

We applied NST frame by frame to videos and then applied temporal smoothing as described in this paper. Note that we used a pre-trained model for faster computation.
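As a simple illustration of temporal smoothing over independently stylized frames, the sketch below blends each frame with the smoothed previous frame using an exponential moving average. This is a deliberately simple stand-in chosen for illustration: it is an assumption, not necessarily the method of the cited paper or the one used for these results. The name `temporal_smooth` and the parameter `alpha` are hypothetical.

```python
import numpy as np

def temporal_smooth(frames, alpha=0.7):
    """Exponential moving average over a sequence of stylized frames.

    frames: array of shape (T, ...) with one stylized frame per time step.
    alpha:  weight of the current frame; lower values smooth more strongly.
    """
    frames = np.asarray(frames, dtype=np.float64)
    smoothed = np.empty_like(frames)
    smoothed[0] = frames[0]                       # first frame is kept as-is
    for t in range(1, len(frames)):
        smoothed[t] = alpha * frames[t] + (1.0 - alpha) * smoothed[t - 1]
    return smoothed

# Toy example: a single pixel that jumps from 0 to 1.
flicker = np.array([[0.0], [1.0], [1.0]])
print(temporal_smooth(flicker, alpha=0.5).ravel())
```

Plain averaging of this kind reduces flicker but can cause ghosting on moving objects, which is why more careful approaches account for motion between frames.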

CREDITS

https://arxiv.org/abs/1604.08610

https://arxiv.org/pdf/1701.08893.pdf