
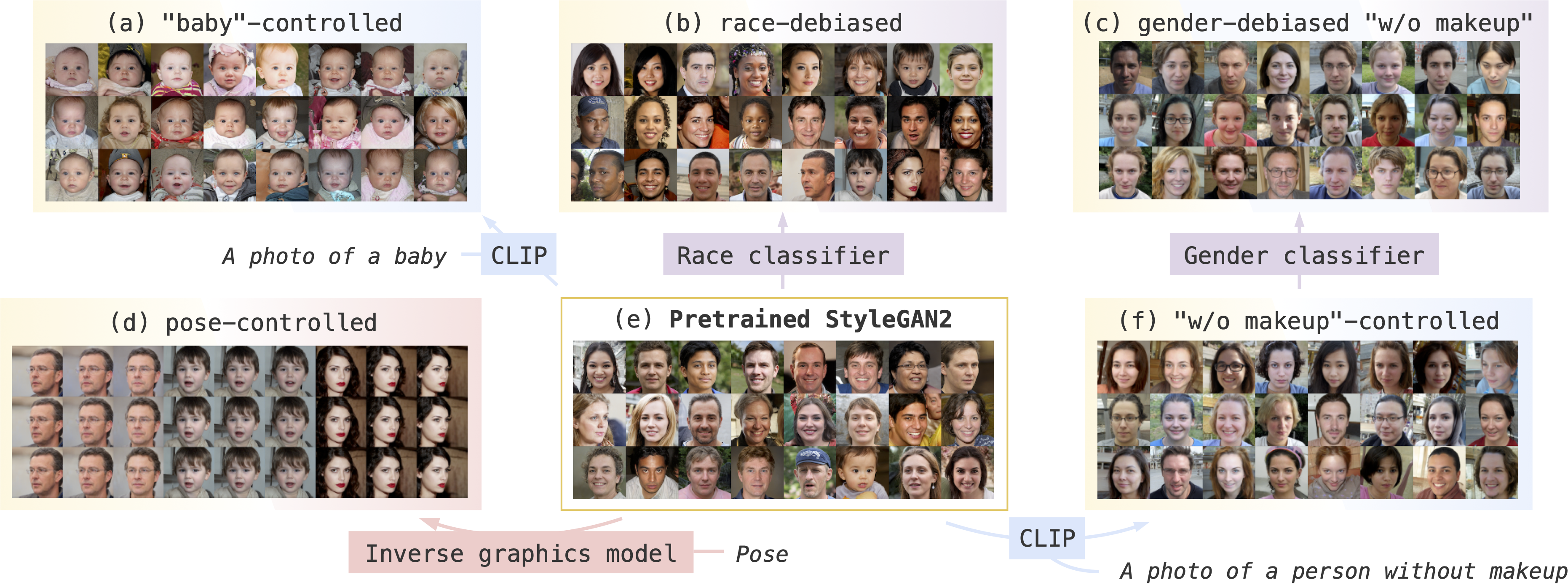

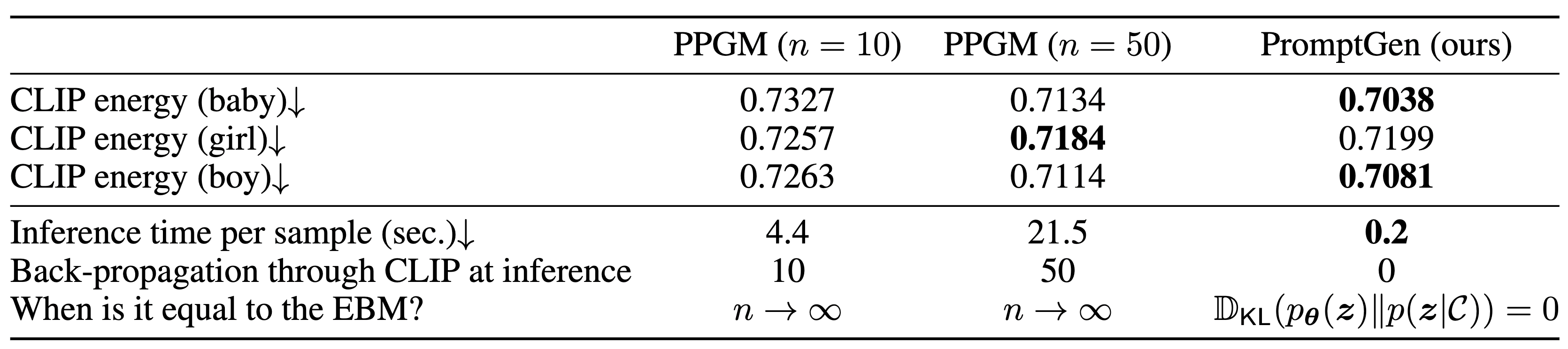

(1) With the CLIP model, PromptGen can sample images guided by text; (2) with image classifiers, PromptGen can de-bias generative models across a set of attributes; (3) with inverse graphics models, PromptGen can sample images of the same identity in different poses; (4) PromptGen reveals that the CLIP model exhibits "reporting bias" when used as a control, and PromptGen can further de-bias this controlled distribution iteratively.


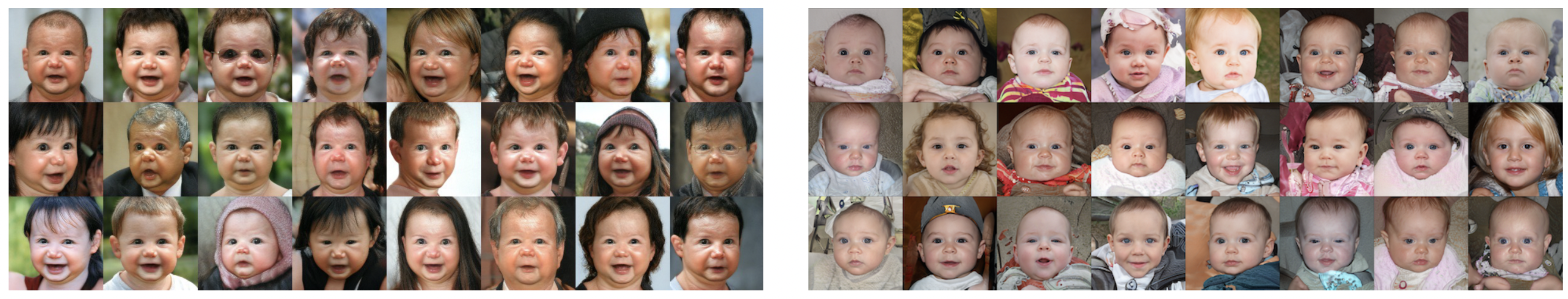

This project has nothing to do with domain adaptation of pre-trained generative models.

Our method cannot generate cartoonish or Donald Trump-like faces for different identities.

On the other hand, if you are interested in 1) what a generative model learns about the underlying data distribution, especially its subpopulations, and how to efficiently sample from it, or 2) how to mitigate the bias learned by a generative model, you may find this project interesting. There are also things that domain adaptation cannot do. For example, the left set of images shows babies generated by StyleGAN2-NADA, a domain adaptation method; the right set shows babies generated by our PromptGen. The two methods use exactly the same resources (StyleGAN2, the CLIP model, and no additional training data).

Left: StyleGAN2-NADA; Right: PromptGen (ours) with StyleGAN2

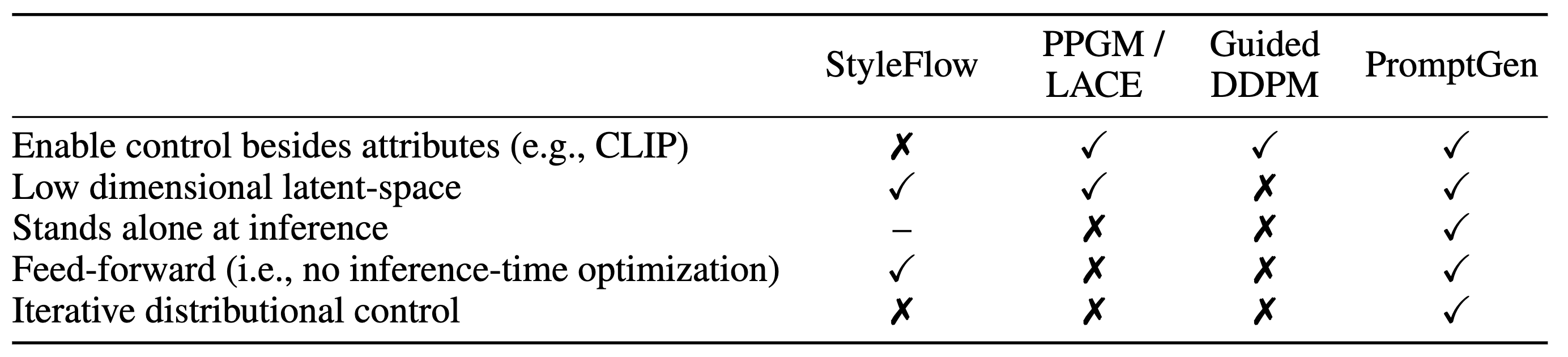

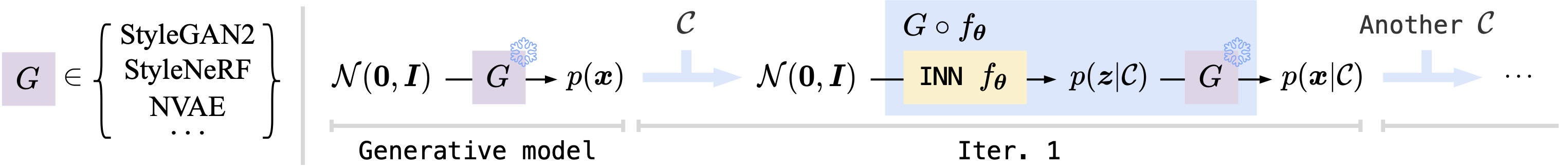

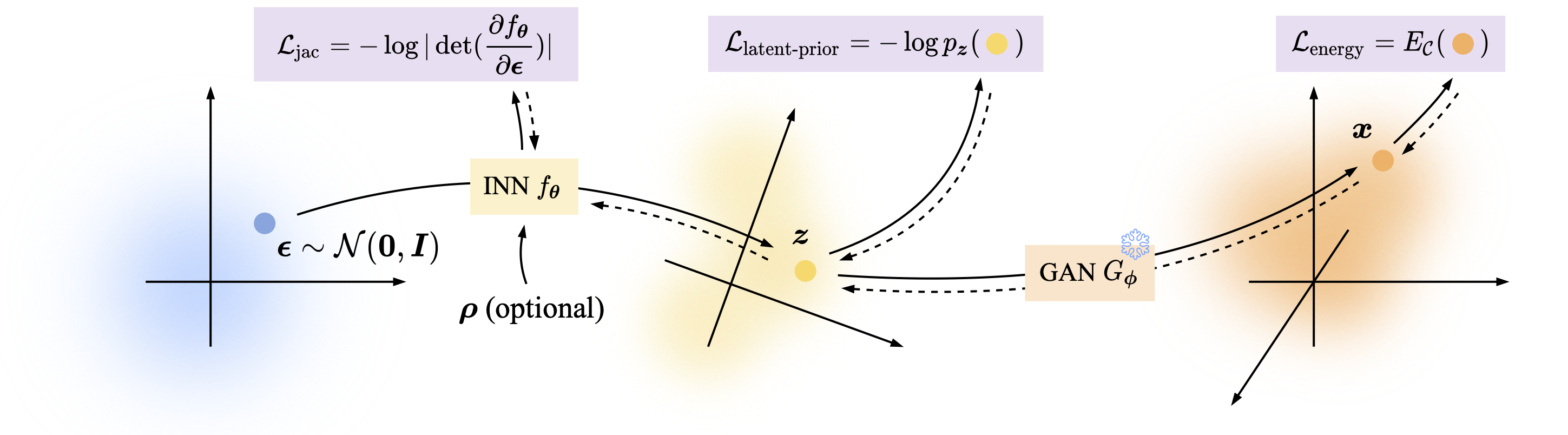

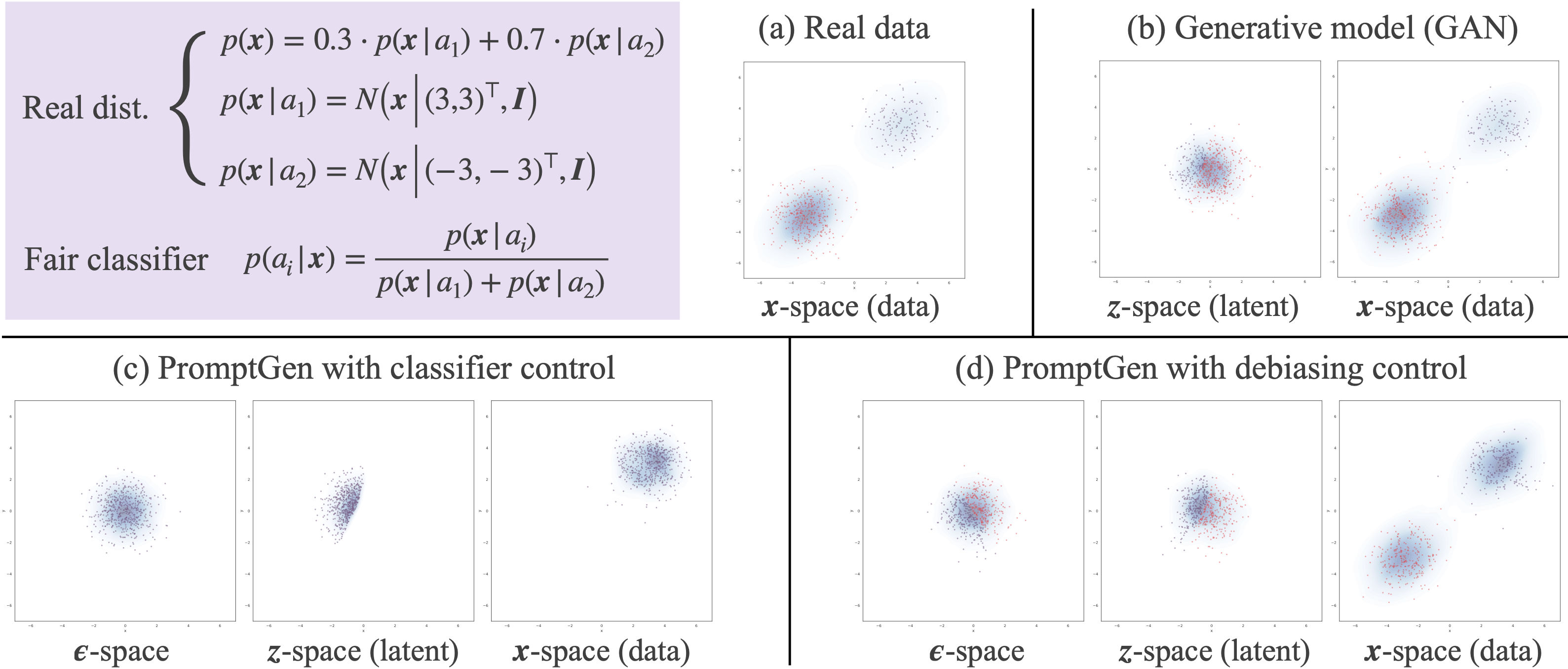

To begin, the user selects a pre-trained generative model. Next, the user specifies a control by means of an energy-based model (EBM). We then train an invertible neural network (INN) to approximate the EBM. When some controls depend on others, PromptGen treats the functional composition of the INN and the generative model as a new "generative model" and can perform iterative control. In the following parts, we introduce how we formulate controls as an EBM and how we approximate this EBM with an INN, which enables efficient sampling.
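Iterative control amounts to function composition. The minimal sketch below assumes that the generator and each trained INN are plain callables on NumPy arrays; the names `compose`, `G`, `f1`, and `f2` are hypothetical illustrations, not taken from the PromptGen code. It wraps a trained INN around the current generator, so the next round of control can treat the result as an ordinary generative model.

```python
from typing import Callable

import numpy as np

# Hypothetical type: any map from an input array (noise or latent) to an output array.
Generator = Callable[[np.ndarray], np.ndarray]


def compose(generator: Generator, inn: Generator) -> Generator:
    """Return eps -> generator(inn(eps)); the INN's base noise becomes the new latent space."""
    return lambda eps: generator(inn(eps))


# Toy stand-ins (hypothetical) for a pre-trained generator and two trained INNs.
def G(z: np.ndarray) -> np.ndarray:
    return 2.0 * z


def f1(eps: np.ndarray) -> np.ndarray:  # INN learned in iteration 1, on top of G
    return eps + 1.0


def f2(eps: np.ndarray) -> np.ndarray:  # INN learned in iteration 2, on top of G1
    return 3.0 * eps


G1 = compose(G, f1)   # iteration 1: the controlled generator
G2 = compose(G1, f2)  # iteration 2: control the already-controlled generator
```

In this sketch, the second INN sees `G1` exactly as the first INN saw `G`: a fixed map from Gaussian noise to images.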

We leverage different off-the-shelf models to control a pre-trained generative model,

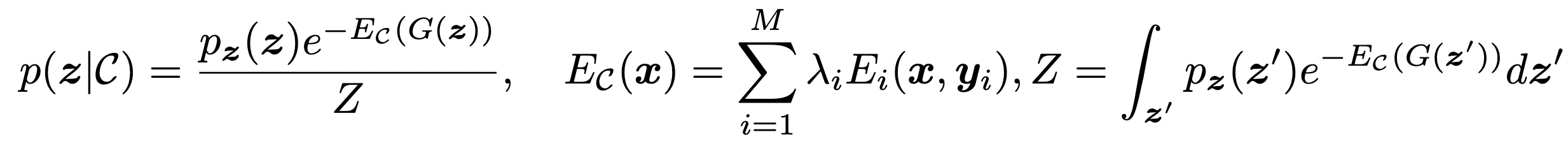

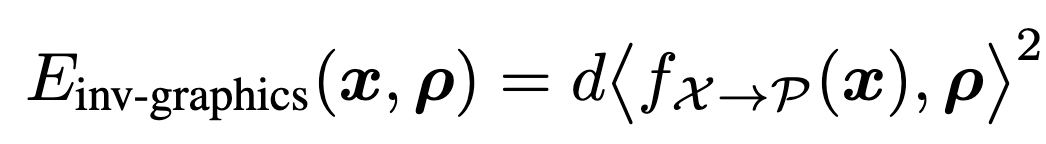

by means of energy-based model (EBM). The target latent-space distribution has the general form of a latent-space EBM:
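For reference, a common way to write such a latent-space EBM is shown below. The notation is our own and is assumed here rather than copied from the figure: $G$ is the pre-trained generator, $p_{\mathbf{z}}$ its latent prior, $\mathcal{C}$ the set of controls, $\mathcal{E}_i$ the energy functions listed below, and $\lambda_i > 0$ their weights.

$$
p(\mathbf{z} \mid \mathcal{C}) = \frac{1}{Z}\, p_{\mathbf{z}}(\mathbf{z}) \exp\Big(-\sum_{i} \lambda_i\, \mathcal{E}_i\big(G(\mathbf{z})\big)\Big),
\qquad
Z = \int p_{\mathbf{z}}(\mathbf{z}') \exp\Big(-\sum_{i} \lambda_i\, \mathcal{E}_i\big(G(\mathbf{z}')\big)\Big)\, d\mathbf{z}'
$$

The normalizer $Z$ is intractable, which is why drawing samples from this distribution requires either MCMC or a learned sampler.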

In this project, we experiment with the following energy functions defined by various off-the-shelf models:

1. Classifier energy (the negative log-probability of a pre-trained image classifier):

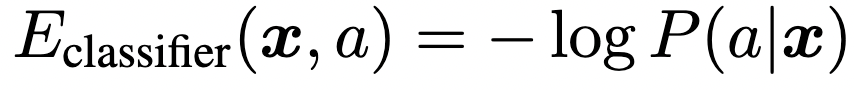

2. CLIP energy (the cosine distance between the embeddings of the image and the text, averaged over multiple differentiable augmentations):

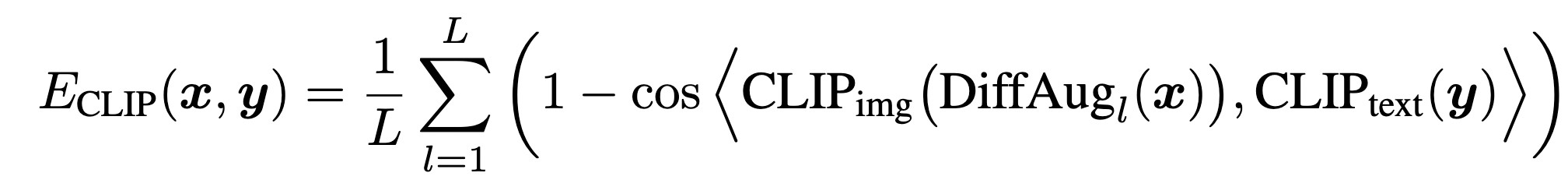

3. Inverse graphics energy (the squared geodesic distance between the inferred graphics parameters and the target graphics parameters):

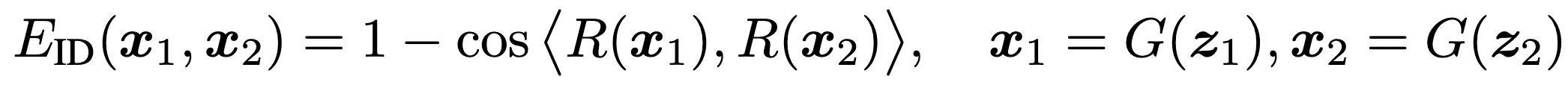

4. Identity preservation energy (the cosine distance between two faces in the embedding space of the ArcFace model):

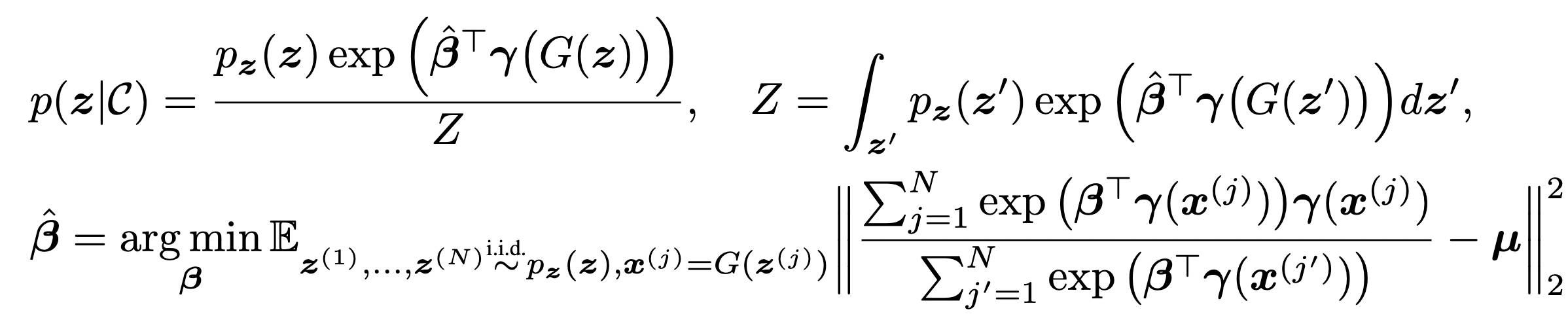
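The four energies above can be sketched as plain functions of model outputs. This is a minimal NumPy illustration, not PromptGen's implementation. It assumes that the classifier logits, the CLIP embeddings of the augmented image views and of the text, and the ArcFace embeddings have already been computed by the respective models. For the inverse graphics energy, it also assumes that the graphics parameter in question is a 3D rotation stored as a rotation matrix. All function names are hypothetical.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    shifted = logits - logits.max()  # subtract the max for numerical stability
    return shifted - np.log(np.exp(shifted).sum())


def classifier_energy(logits: np.ndarray, y: int) -> float:
    """1. -log p(y | x), from the classifier's logits for image x."""
    return float(-log_softmax(logits)[y])


def clip_energy(aug_image_embs: np.ndarray, text_emb: np.ndarray) -> float:
    """2. Cosine distance between image and text embeddings, averaged over K augmented views.

    aug_image_embs has shape (K, d), one CLIP image embedding per augmentation (assumed precomputed).
    """
    cos = aug_image_embs @ text_emb / (
        np.linalg.norm(aug_image_embs, axis=1) * np.linalg.norm(text_emb)
    )
    return float(np.mean(1.0 - cos))


def inverse_graphics_energy(R_inferred: np.ndarray, R_target: np.ndarray) -> float:
    """3. Squared geodesic distance on SO(3): the squared angle of the relative rotation (assumed parameterization)."""
    cos_angle = (np.trace(R_inferred.T @ R_target) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos_angle, -1.0, 1.0)) ** 2)


def identity_energy(face_emb_a: np.ndarray, face_emb_b: np.ndarray) -> float:
    """4. Cosine distance between two faces in the (ArcFace) embedding space."""
    cos = face_emb_a @ face_emb_b / (np.linalg.norm(face_emb_a) * np.linalg.norm(face_emb_b))
    return float(1.0 - cos)
```

Lower energy means the generated image better satisfies the control, which matches the sign convention in $\exp(-\mathcal{E})$.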

If we want to de-bias the generative model across a set of attributes, we cannot directly use an off-the-shelf model. Instead, we can use moment constraints, originally proposed for de-biasing language models.

5. Moment constraints:

Essentially, moment constraints leverage importance sampling to encourage the expectation of a certain metric (e.g., the output of a race classifier) to be close to a given target (e.g., a uniform distribution).
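One standard way to realize moment constraints is the exponential-family (maximum-entropy) formulation used in distributional control of language models; we have not confirmed that PromptGen uses this exact procedure. Given statistics $\phi(x_i)$ (e.g., one-hot race predictions) for samples $x_i$ from the base generator, the sketch fits weights $\lambda$ so that the tilted distribution $p(x) \propto p_{\text{base}}(x)\exp(\lambda^\top \phi(x))$, i.e., an energy of $-\lambda^\top \phi(x)$, matches a target expectation. The expectation is estimated by self-normalized importance sampling over the base samples. Function names and the toy 70/20/10 data are hypothetical.

```python
import numpy as np


def tilted_moment(features: np.ndarray, lam: np.ndarray) -> np.ndarray:
    """Self-normalized importance-sampling estimate of E[phi] under p ∝ p_base * exp(lam . phi).

    features: (n, k) array of phi(x_i) for samples x_i drawn from the base generator.
    """
    log_w = features @ lam                # log importance weights, up to a constant
    w = np.exp(log_w - log_w.max())       # stabilized exponentiation
    w /= w.sum()                          # self-normalization
    return w @ features


def fit_moment_constraints(features: np.ndarray, target: np.ndarray,
                           lr: float = 1.0, steps: int = 500) -> np.ndarray:
    """Gradient descent on the convex dual log Z(lam) - lam . target, whose gradient is E_lam[phi] - target."""
    lam = np.zeros(features.shape[1])
    for _ in range(steps):
        lam -= lr * (tilted_moment(features, lam) - target)
    return lam


# Toy example: a biased base model whose samples fall into 3 groups with frequencies 70/20/10.
labels = np.repeat([0, 1, 2], [700, 200, 100])
features = np.eye(3)[labels]              # one-hot "classifier outputs"
target = np.full(3, 1.0 / 3.0)            # desired uniform group distribution
lam = fit_moment_constraints(features, target)
debiased = tilted_moment(features, lam)   # approximately uniform after fitting
```

The resulting energy $-\lambda^\top \phi(G(\mathbf{z}))$ can then be plugged into the latent-space EBM like any other energy.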

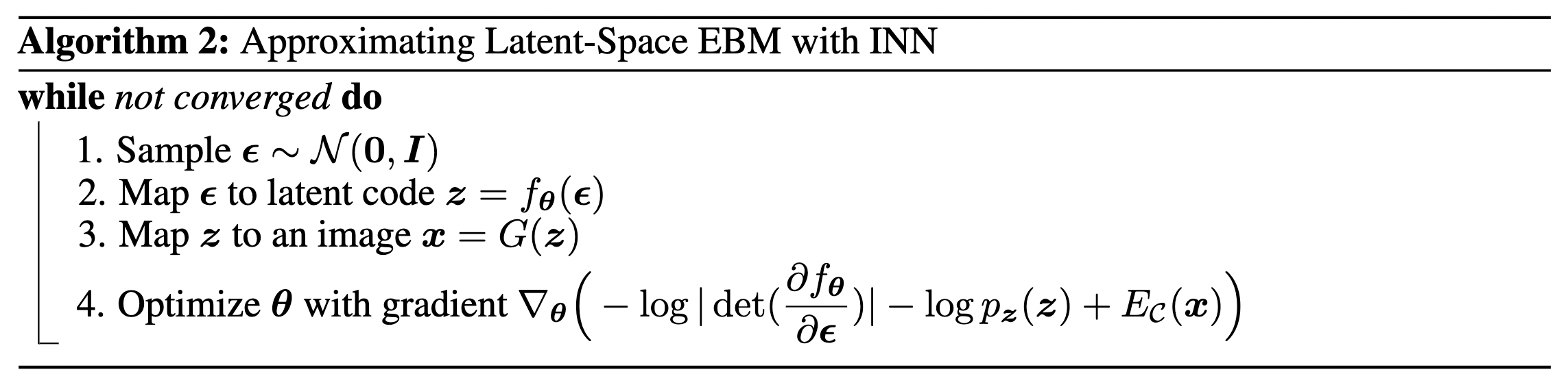

A remaining question is how to efficiently sample from the EBM.

Most existing methods use MCMC sampling, which requires iterative, inference-time optimization.
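To make the inference-time cost of MCMC concrete, here is a minimal sketch of unadjusted Langevin dynamics, one common MCMC sampler for EBMs. It runs on a hypothetical toy latent EBM with a standard normal prior, an identity "generator", and a quadratic energy $\|\mathbf{z}-\mathbf{c}\|^2/2$ with weight $\beta$. Every sample requires many gradient evaluations. In the real setting, each of those would be a forward and backward pass through the generator and the control model.

```python
import numpy as np


def langevin_sample(grad_U, z0: np.ndarray, step: float = 0.01,
                    n_steps: int = 1000, seed: int = 0) -> np.ndarray:
    """Unadjusted Langevin dynamics: z <- z - step * grad_U(z) + sqrt(2 * step) * noise."""
    rng = np.random.default_rng(seed)
    z = z0.copy()
    for _ in range(n_steps):
        z = z - step * grad_U(z) + np.sqrt(2.0 * step) * rng.standard_normal(z.shape)
    return z


# Hypothetical toy EBM: U(z) = ||z||^2 / 2 (prior) + beta * ||z - c||^2 / 2 (energy).
beta, c = 3.0, np.array([2.0, -1.0])


def grad_U(z: np.ndarray) -> np.ndarray:
    return z + beta * (z - c)


samples = langevin_sample(grad_U, np.zeros((2000, 2)))  # 2000 parallel chains, 1000 steps each
```

For this Gaussian toy target, the exact mean is $\beta\mathbf{c}/(1+\beta)$ and the per-coordinate standard deviation is $1/\sqrt{1+\beta}$. The finite step size introduces a small bias in the spread.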

In this project, we propose to approximate the latent-space EBM with an invertible neural network (INN).

The derivation is omitted here, and the algorithm is illustrated as follows.

In the forward pass (solid curves), we sample base noises from a Gaussian distribution, map the base noises to latent codes with an INN, and map the latent codes to images with a fixed generative model. Dashed curves show the gradients during back-propagation. PromptGen learns a distribution in the latent space, which is used to "prompt" the generative model. This procedure is conceptually similar to "prompting" pre-trained language models.
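The training loop above can be illustrated with a deliberately tiny stand-in. Because the derivation is omitted here, treat the objective as our assumption: the sketch fits the INN by minimizing the reverse KL divergence $\mathrm{KL}(q_\theta \,\|\, p(\mathbf{z}\mid\mathcal{C}))$ with reparameterized samples, which needs only samples from the INN and gradients of the energy. To keep it self-contained, the "INN" is an elementwise affine map $\mathbf{z} = \mathbf{m} + e^{\mathbf{s}} \odot \boldsymbol{\epsilon}$ (a real INN would be a deep invertible network). The generator is the identity, the energy is a hypothetical quadratic $\beta\|\mathbf{z}-\mathbf{c}\|^2/2$, and gradients are derived by hand.

```python
import numpy as np


def train_affine_flow(c: np.ndarray, beta: float, steps: int = 2000,
                      batch: int = 512, lr: float = 0.05, seed: int = 0):
    """Fit z = m + exp(s) * eps, eps ~ N(0, I), to p(z) ∝ N(z; 0, I) * exp(-beta * ||z - c||^2 / 2).

    Minimizes the reverse KL E_eps[-sum(s) + ||z||^2/2 + beta * ||z - c||^2/2] + const by SGD.
    """
    rng = np.random.default_rng(seed)
    m = np.zeros_like(c)  # shift
    s = np.zeros_like(c)  # log-scale, so log|det J| = sum(s)
    for _ in range(steps):
        eps = rng.standard_normal((batch, c.shape[0]))  # base noise
        z = m + np.exp(s) * eps                         # forward pass of the toy "INN"
        # Gradient of the negative unnormalized log-target ||z||^2/2 + beta * ||z - c||^2/2.
        dU_dz = (1.0 + beta) * z - beta * c
        grad_m = dU_dz.mean(axis=0)
        # The -1 comes from the -log|det J| = -sum(s) term of log q(z).
        grad_s = -1.0 + (dU_dz * np.exp(s) * eps).mean(axis=0)
        m -= lr * grad_m
        s -= lr * grad_s
    return m, np.exp(s)


m, scale = train_affine_flow(np.array([2.0, -1.0]), beta=3.0)
# After training, each new sample costs a single forward pass instead of an MCMC chain.
new_samples = m + scale * np.random.default_rng(1).standard_normal((5, 2))
```

For this Gaussian toy target, the optimum is known in closed form ($\mathbf{m} = \beta\mathbf{c}/(1+\beta)$, scale $1/\sqrt{1+\beta}$), which makes the sketch easy to check.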


Text description: "a photo of a baby"; generative model: StyleGAN2 trained on FFHQ 1024

Text description: "a photo of a baby"; generative model: StyleNeRF trained on FFHQ 1024

Text description: "a photo of a british shorthair"; generative model: NVAE trained on FFHQ 256

Text description: "a photo of a british shorthair"; generative model: StyleGAN2 trained on AFHQ-Cats 512

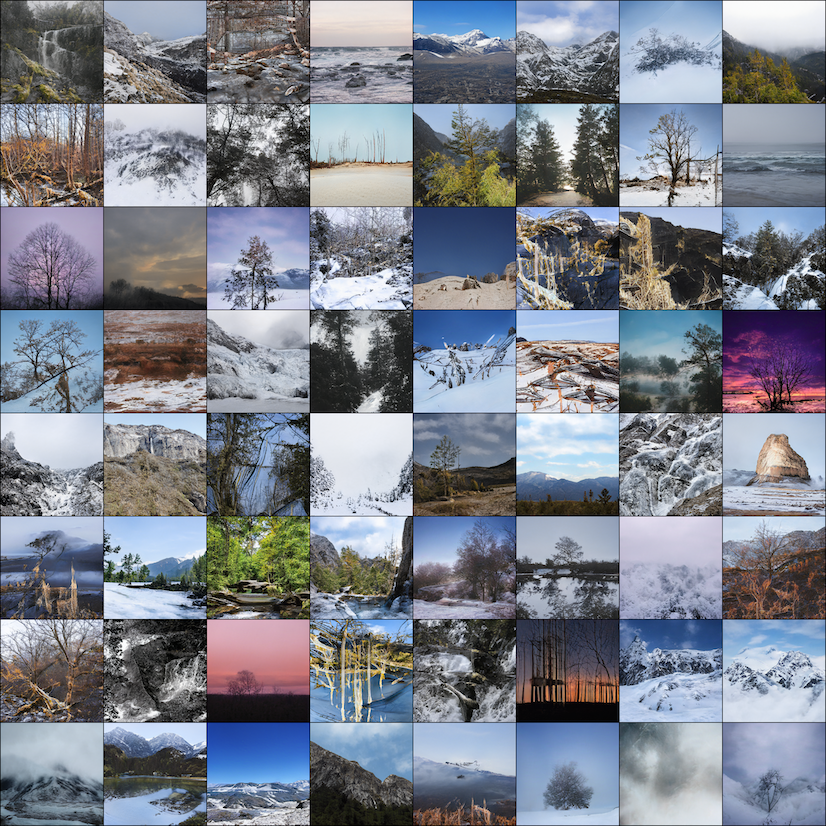

Text description: "autumn scene"; generative model: StyleGAN2 trained on Landscape HQ 256

Text description: "winter scene"; generative model: StyleGAN2 trained on Landscape HQ 256

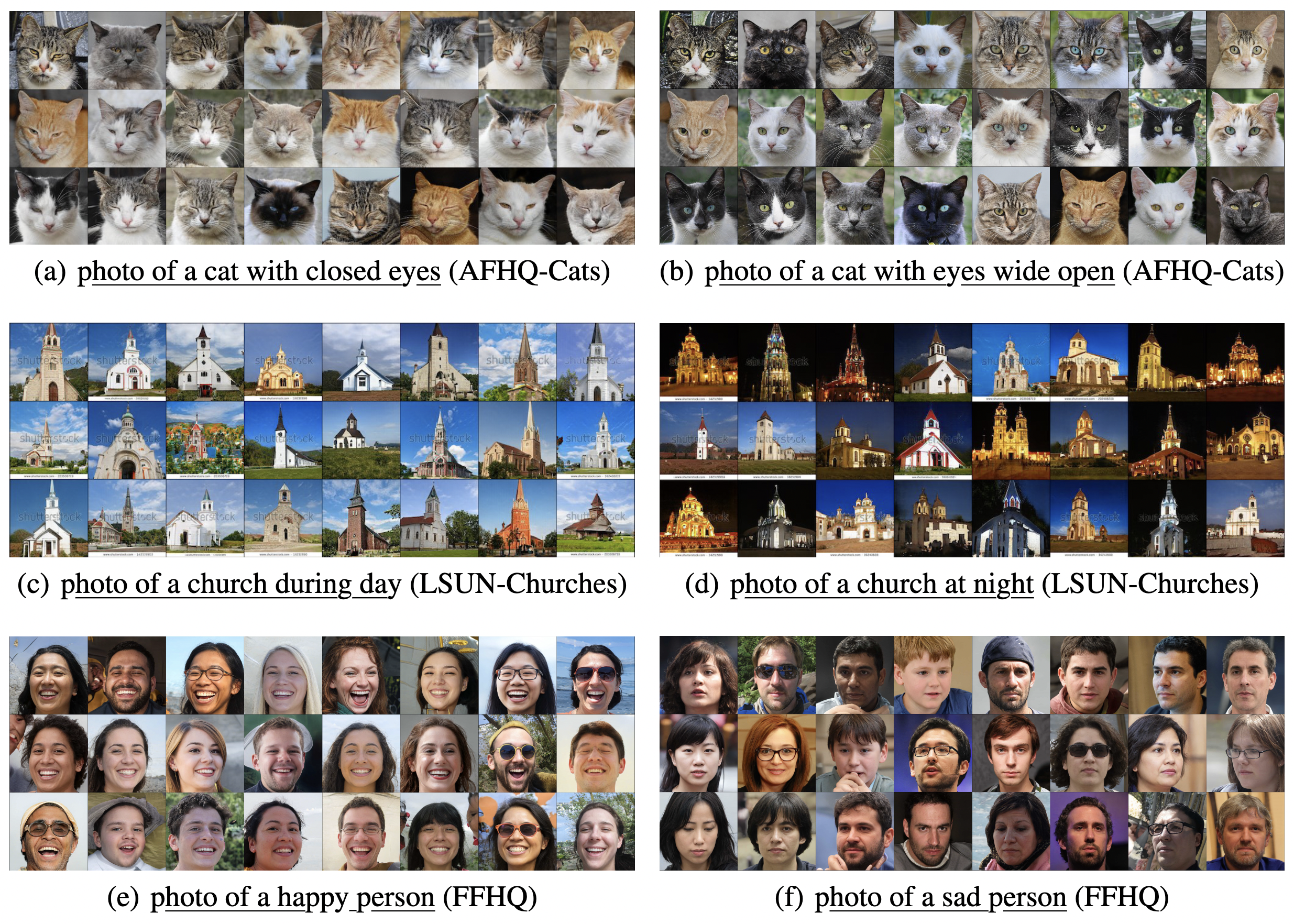

Contrastive samples generated by PromptGen with StyleGAN2 trained on multiple datasets

PromptGen with BigGAN trained on ImageNet, which has a class embedding space

StyleGAN2 trained on FFHQ 1024

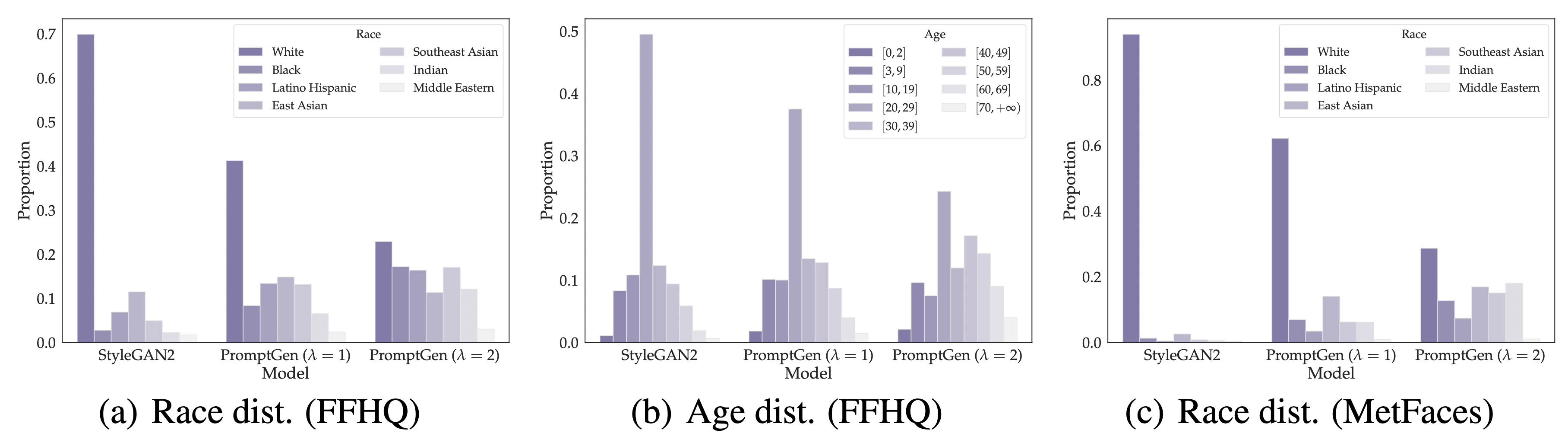

PromptGen for age de-biasing of StyleGAN2 trained on FFHQ 1024

PromptGen for race de-biasing of StyleGAN2 trained on FFHQ 1024

Age and race distributions before and after de-biasing

PromptGen for pose-guided image synthesis of StyleGAN2 trained on FFHQ 1024

3D baselines

PromptGen (iteration 1). Text description: "a person without makeup". Female: 81.6% vs male: 18.4%.

PromptGen (iteration 2). Gender de-biasing for the distribution learned in iteration 1. Female: 49.3% vs male: 50.7%.

Acknowledgements