16-726 SP22 Assignment #4 - Neural Style Transfer

Run

python run.py images\style\<style_pic_filename> images\content\<content_pic_filename>

Bells and whistles using my previous pictures.

Introduction

This assignment covers neural style transfer. We start by defining two losses, content loss and style loss, each of which measures how far the feature maps of the image being optimized are from those of a target image at a given layer. We insert these loss layers after selected layers of a pretrained VGG19 network.
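For reference, the two losses can be written out as below. This is a sketch that assumes the normalization used in the PyTorch neural style tutorial (mean squared error, and a Gram matrix divided by the number of feature elements); the assignment code may scale things differently. The symbols are notation introduced here: $x$ is the image being optimized, $c$ the content image, $s$ the style image, and $F^{l}(\cdot)$ the feature map at layer $l$ with $C_l$ channels and spatial size $H_l \times W_l$.

$$
\mathcal{L}_{\text{content}}^{l}(x, c) = \frac{1}{C_l H_l W_l} \sum_{k,i,j} \left( F^{l}_{kij}(x) - F^{l}_{kij}(c) \right)^2
$$

$$
G^{l}_{km}(x) = \frac{1}{C_l H_l W_l} \sum_{i,j} F^{l}_{kij}(x)\, F^{l}_{mij}(x),
\qquad
\mathcal{L}_{\text{style}}^{l}(x, s) = \frac{1}{C_l^{2}} \sum_{k,m} \left( G^{l}_{km}(x) - G^{l}_{km}(s) \right)^2
$$

The content loss compares activations position by position, while the style loss compares only channel correlations, so it carries no information about where in the image a feature occurs.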

Experiments

Part 1: Content Loss

I insert the content loss layer after conv_1, conv_3, conv_5, and conv_7 in turn, one layer per experiment, and reconstruct the image each time. The results suggest that the deeper the layer at which the content loss is inserted, the worse the reconstruction becomes.

| Image | Conv_1 | Conv_3 | Conv_5 | Conv_7 |
|---|---|---|---|---|
| Wally |  |  |  |  |

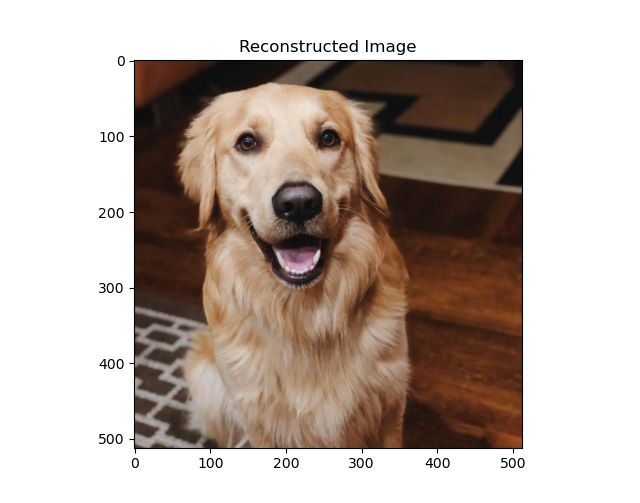

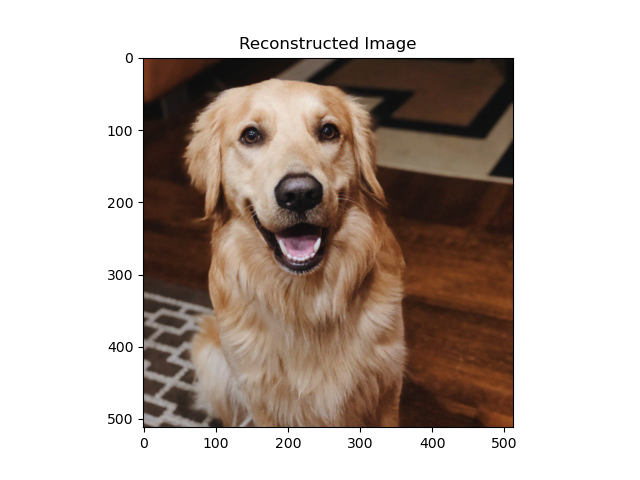

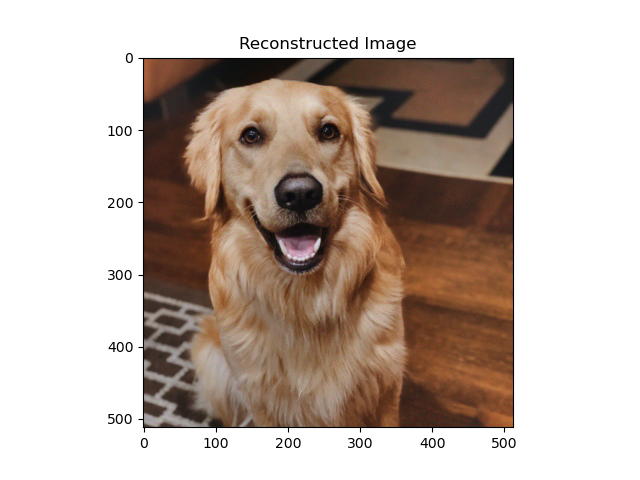

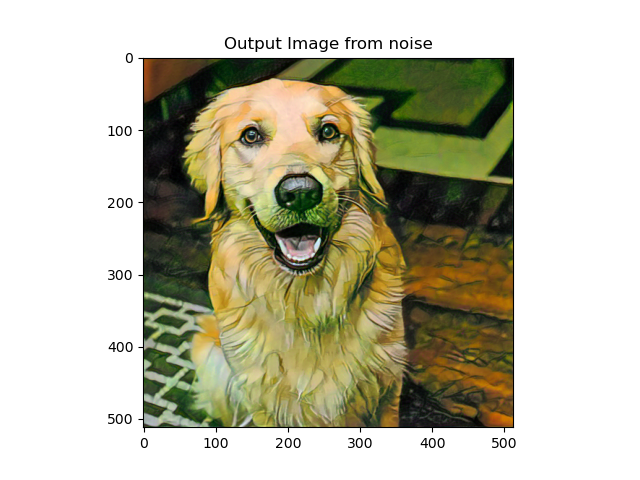

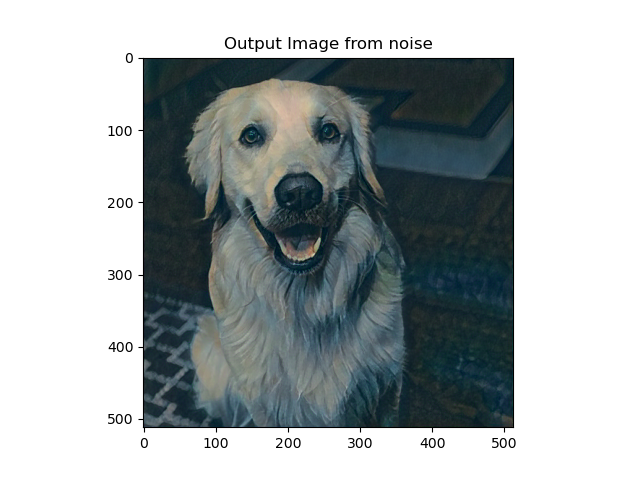

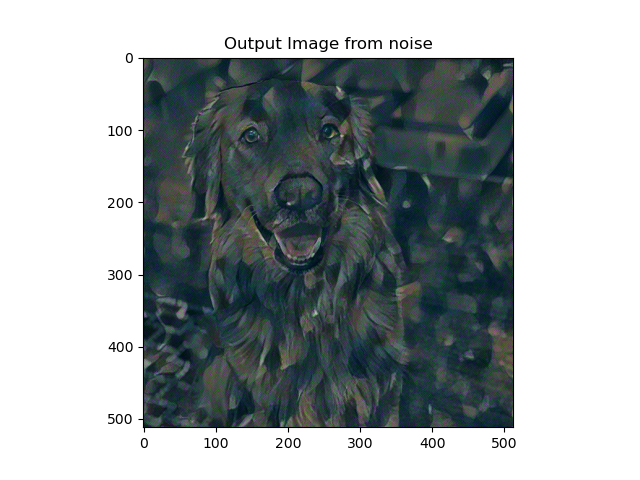
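The layer choice in this experiment (a content loss placed after conv_1, conv_3, conv_5, or conv_7) can be sketched without any deep learning dependency. The snippet below is an illustration, not the assignment code: `build_layer_plan` is a hypothetical helper, plain strings stand in for real network modules, and the `conv_i` / `relu_i` / `pool_i` naming is assumed to follow the PyTorch tutorial, where `i` counts convolution layers seen so far.

```python
# VGG19 feature-extractor layout: numbers are conv output channels, "M" is max-pool.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def build_layer_plan(content_layers=(), style_layers=()):
    """Return layer names in order, with loss layers inserted after their targets.

    Hypothetical helper: strings stand in for real network modules.
    """
    plan = []
    conv_index = 0
    last_loss_position = 0
    for item in VGG19_CFG:
        if item == "M":
            kinds = ["pool"]
        else:
            conv_index += 1
            kinds = ["conv", "relu"]  # every VGG conv is followed by a ReLU
        for kind in kinds:
            name = f"{kind}_{conv_index}"
            plan.append(name)
            if name in content_layers:
                plan.append(f"content_loss_{conv_index}")
                last_loss_position = len(plan)
            if name in style_layers:
                plan.append(f"style_loss_{conv_index}")
                last_loss_position = len(plan)
    # Layers after the last loss never affect the objective, so drop them.
    return plan[:last_loss_position]


for target in ("conv_1", "conv_3", "conv_5", "conv_7"):
    kept = build_layer_plan(content_layers=[target])
    print(target, "->", len(kept), "layers kept")
```

Truncating after the last loss layer keeps each experiment as cheap as possible: a content loss at conv_1 only needs the first convolution, while one at conv_7 has to run through two pooling stages first.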

Reconstructions from two random noise images with content loss only (inserted at conv_4 by default):

| Noise | Result |

|---|---|

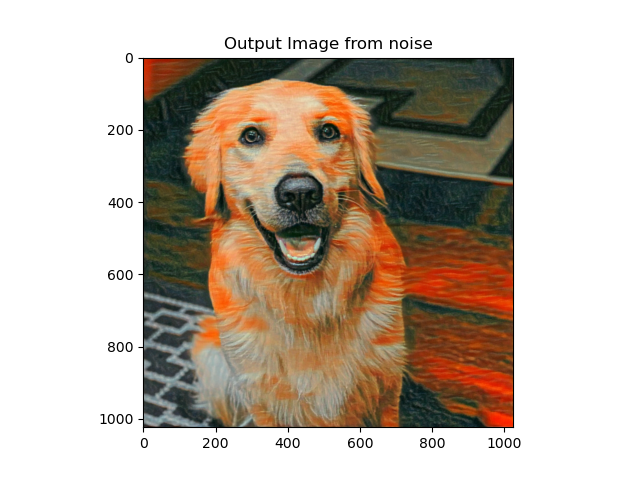

|  |

|  |

The two results differ: some details on the dog's face retain noise from the input image, probably because the two inputs have different noise distributions.

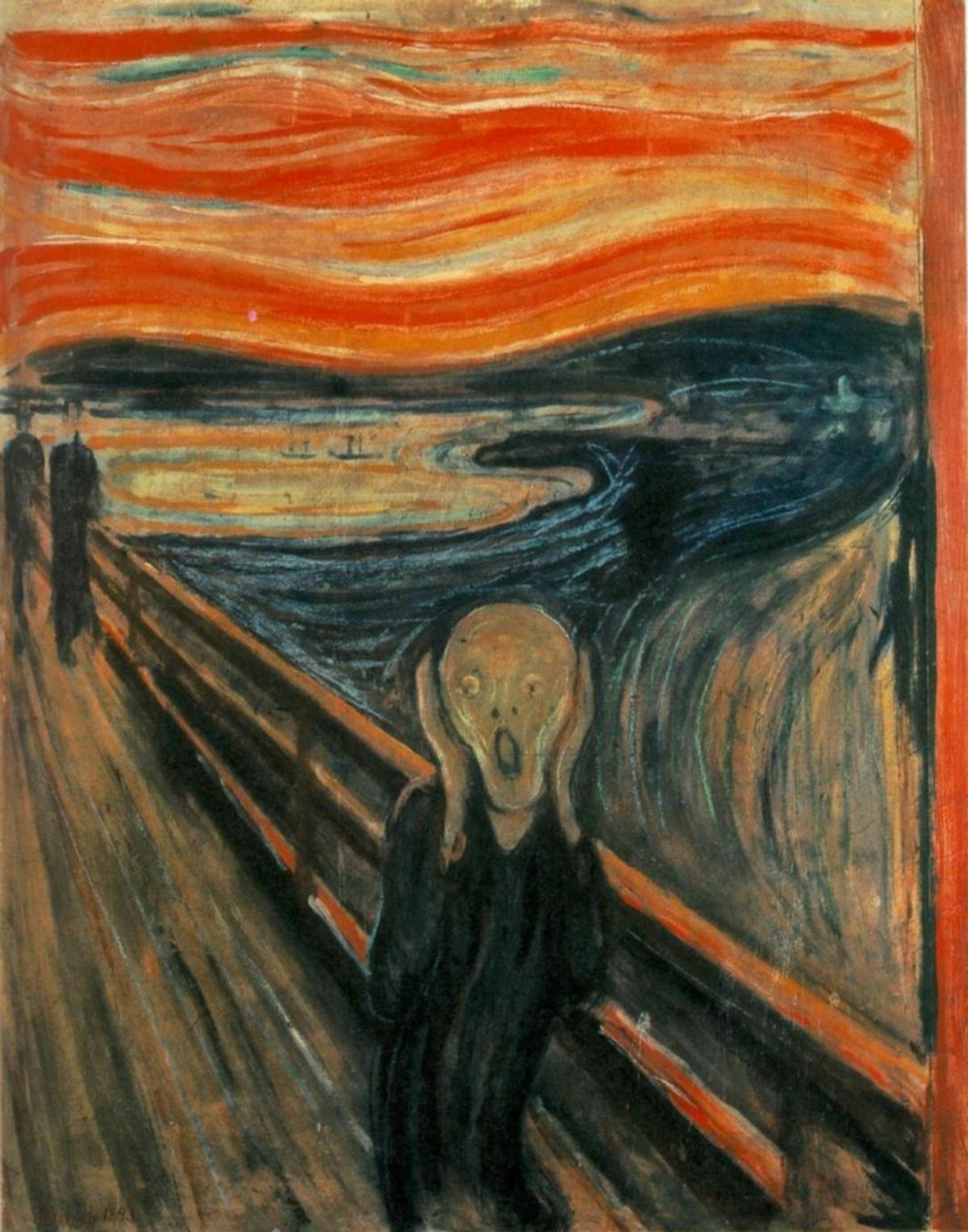

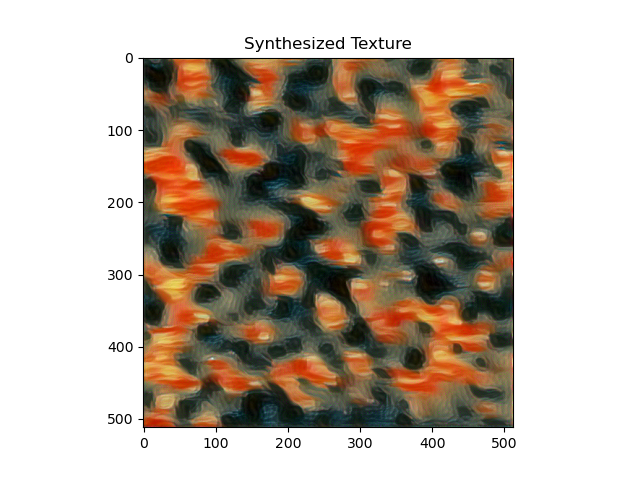

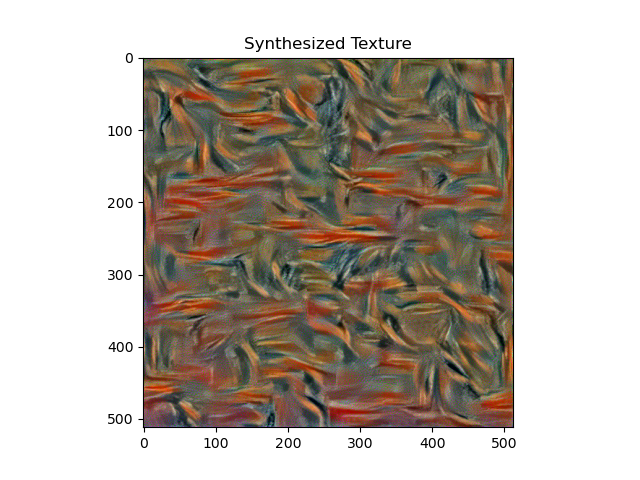

Part 2: Style Loss

I insert the style loss layers after conv_1 to conv_3, conv_4 to conv_6, and conv_7 to conv_8 in turn, one group per experiment, and generate a texture each time. The results suggest that shallow layers preserve color and large blocks of color, middle layers preserve the blended look of the style, and the deeper layers introduce unexpected noise.

| Style | Conv_1-3 | Conv_4-6 | Conv_7-8 |
|---|---|---|---|
| The_scream |  |  |  |

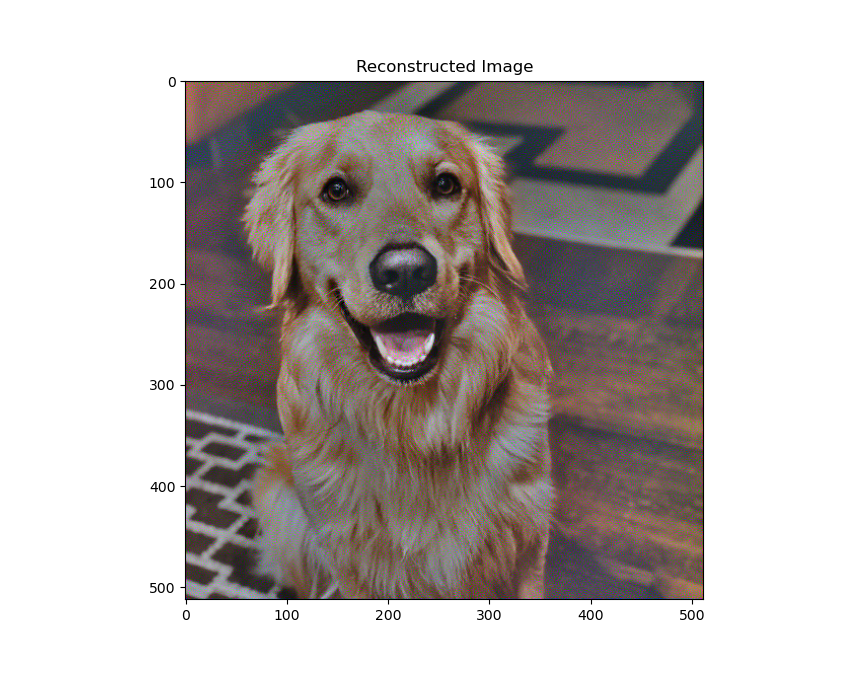

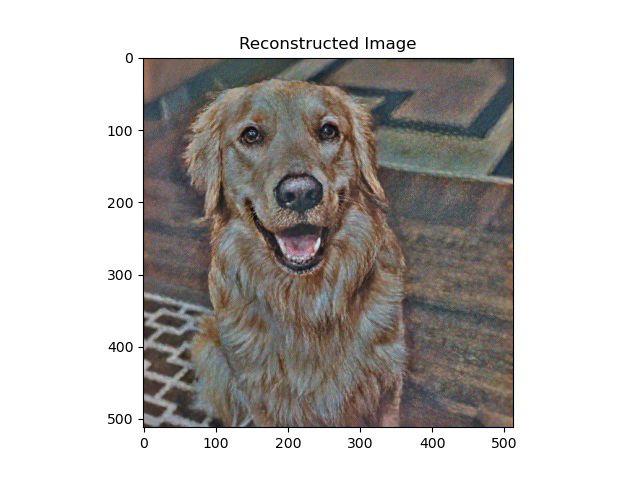

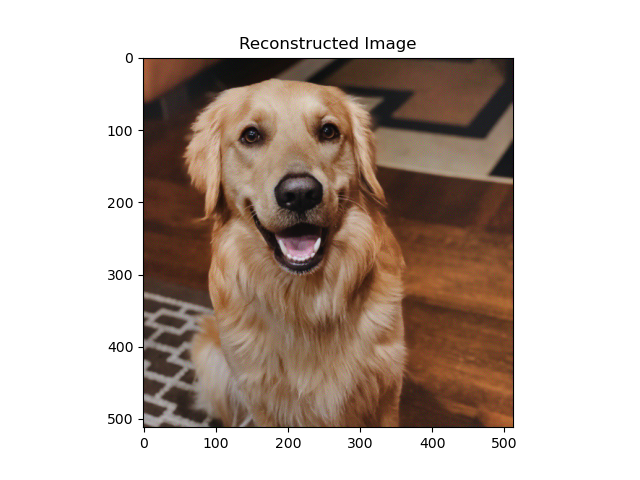

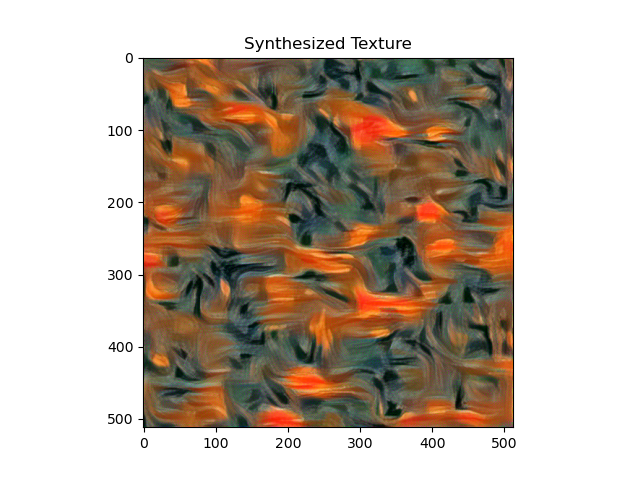

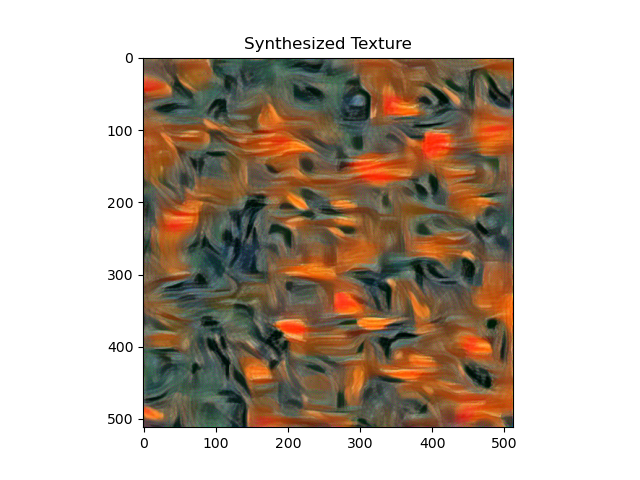

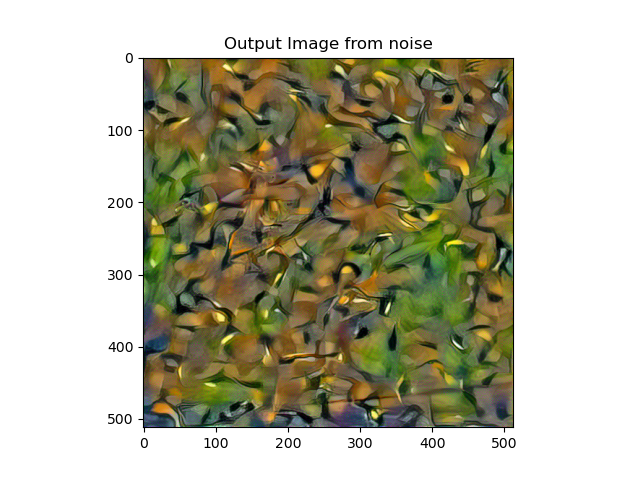

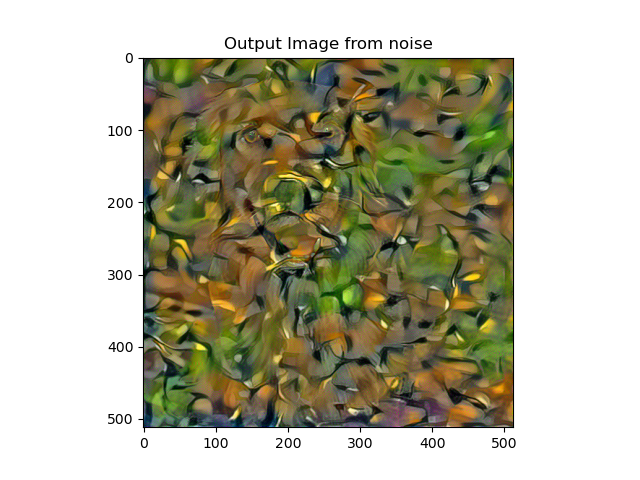

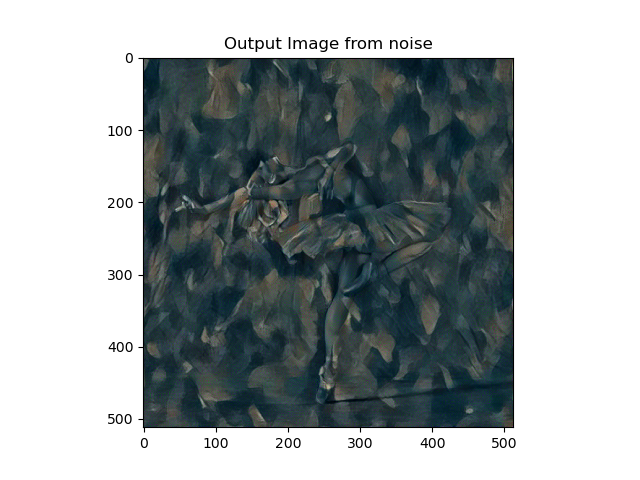
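To make the style loss over a group of layers concrete, here is a dependency-free sketch. It assumes the Gram-matrix formulation from the PyTorch tutorial (Gram matrix divided by the number of feature elements, then mean squared error); the function names and the toy feature values are made up for illustration and are not the assignment code.

```python
def gram_matrix(features):
    """Gram matrix of one layer's features.

    `features` holds C channels, each a flat list of H*W activations.
    """
    normalizer = len(features) * len(features[0])  # C * H * W
    return [[sum(a * b for a, b in zip(row_i, row_j)) / normalizer
             for row_j in features]
            for row_i in features]


def mean_squared_error(a, b):
    """Mean of squared element-wise differences between two matrices."""
    diffs = [(x - y) ** 2
             for row_a, row_b in zip(a, b)
             for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def style_loss(generated, style, layers):
    """Sum the Gram-matrix MSE over the chosen layers (e.g. conv_1 to conv_3)."""
    return sum(
        mean_squared_error(gram_matrix(generated[name]), gram_matrix(style[name]))
        for name in layers
    )


# Toy 2-channel, 2-position feature maps (made-up numbers).
style_features = {"conv_1": [[1.0, 2.0], [3.0, 4.0]]}
shuffled = {"conv_1": [[2.0, 1.0], [4.0, 3.0]]}  # same activations, positions swapped
print(gram_matrix(style_features["conv_1"]))
print(style_loss(shuffled, style_features, ["conv_1"]))
```

The second print shows a loss of zero even though the spatial positions were swapped. The Gram matrix only records how channels correlate, which is why a style loss alone produces texture rather than a recognizable layout.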

Textures from two random noise images with style loss only (inserted at the default layers conv_1 to conv_5):

| Noise | Result |

|---|---|

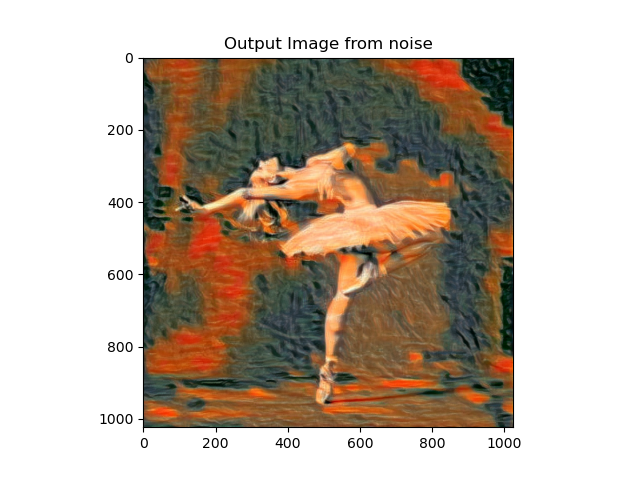

|  |

|  |

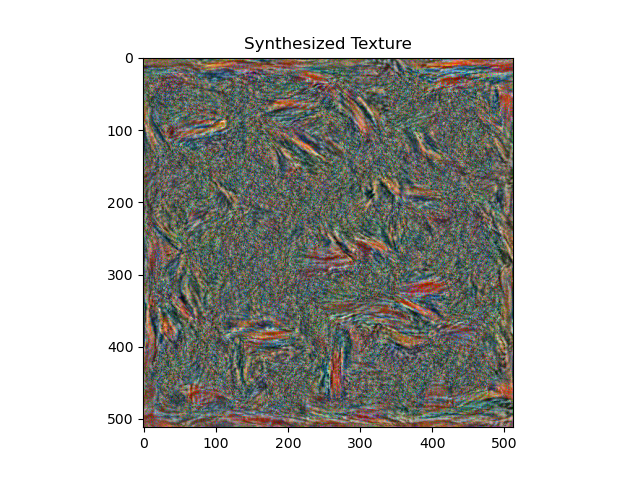

As you can see, different noise inputs affect the brushstrokes in the generated texture. Below is the top-left region, from (0, 0) to (100, 100) px, of the generated texture.

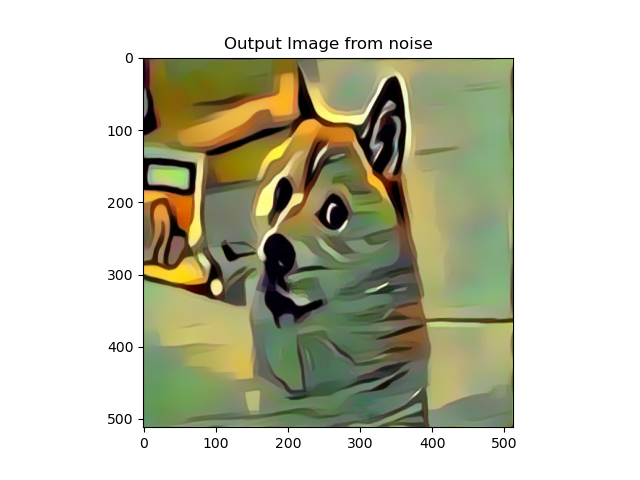

Part 3: Style Transfer

Hyper-parameters

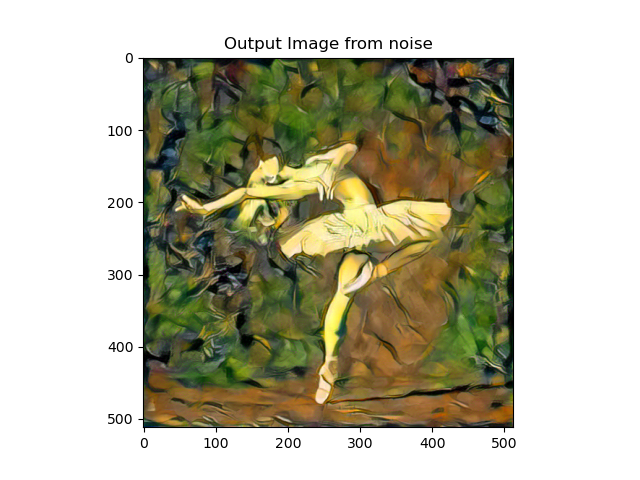

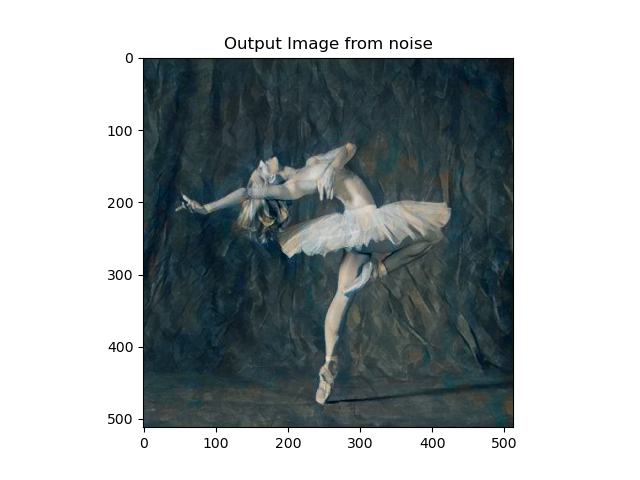

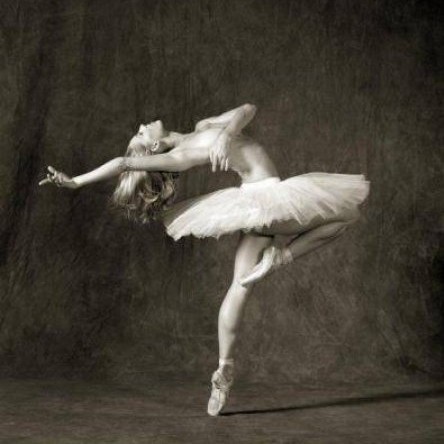

One intuitive hyper-parameter to adjust is the input image size. I tried 1024 instead of the default 512, and the result is more interesting: the strokes are more delicate and there are no large blocks of color. Because the dog picture is realistic, its stylized result can look odd, so I use the ballet dancer image instead to show the difference. One interesting finding is that the higher the resolution of the input image, the more organized the style becomes. At lower resolution, the strokes in the final result look wild and fantastic.

A second approach is to adjust the ratio of style weight to content weight. I changed it from the default 1000000:1 to 800000:1, and the result is striking and artistic.

For the following parts, I use an adjusted weight ratio of 900000:1 to show my results.
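The weight ratio enters the optimization as a weighted sum of the two loss terms. A minimal sketch, assuming the objective has the usual form `style_weight * style + content_weight * content`; the function name and the per-layer loss values are made up and only show how the ratio shifts the balance.

```python
def total_loss(style_losses, content_losses, style_weight=1_000_000, content_weight=1):
    """Weighted objective: style_weight * sum(style) + content_weight * sum(content)."""
    return style_weight * sum(style_losses) + content_weight * sum(content_losses)


# Made-up per-layer loss values, only to show how the ratio shifts the balance.
style_losses = [2 ** -20, 2 ** -21]
content_losses = [0.5]
for ratio in (1_000_000, 900_000, 800_000):
    print(f"{ratio}:1 ->", total_loss(style_losses, content_losses, style_weight=ratio))
```

Style losses are typically very small numbers because of the Gram-matrix normalization, which is why the style weight has to be several orders of magnitude larger than the content weight for both terms to matter.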

Results generated from content

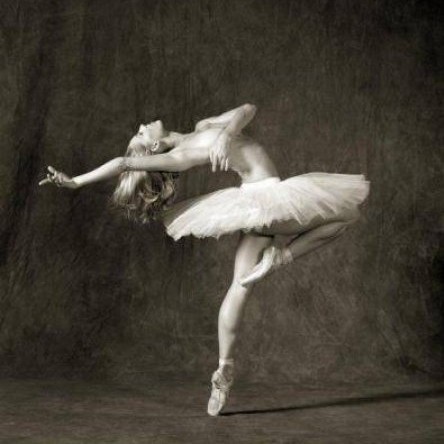

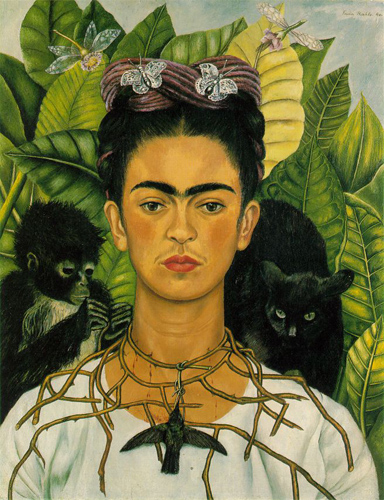

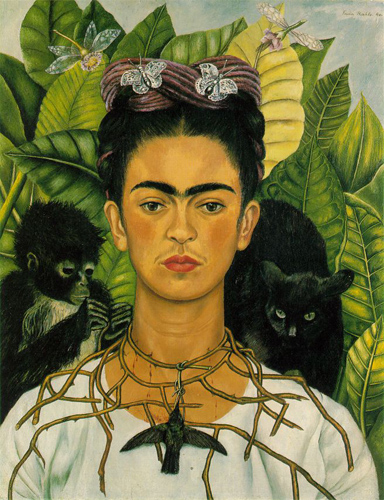

| Style image \ Content image | Dancing | Wally |
|---|---|---|
| Frida_kahlo |  |  |
| Picasso |  |  |

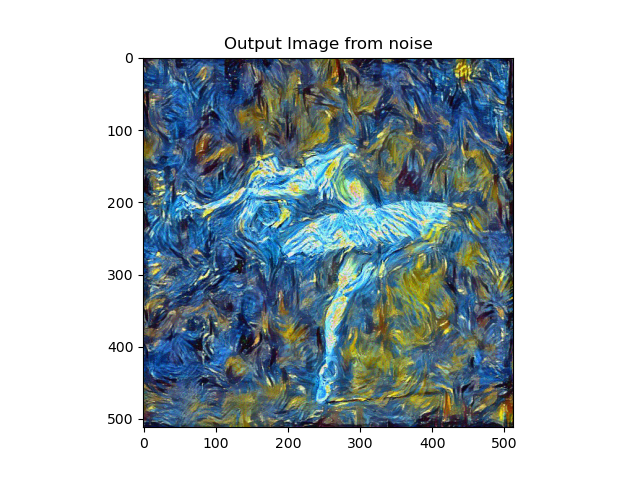

Results generated from noise

| Style image \ Content image | Dancing | Wally |
|---|---|---|
| Frida_kahlo |  |  |
| Picasso |  |  |

Generally speaking, starting from the content image gives better image quality than starting from noise, all else being equal.

I added timing code to the project. On Picasso + Dancing with 300 runs, generation from noise took 18.077855 s and generation from the content image took 17.449960 s. With 500 runs, generation from noise took 29.000032 s and generation from the content image took 28.763395 s. The difference in running time between the two initializations is negligible.
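Timing of this kind can be done with a small wrapper around the call being measured. The sketch below uses only the standard library; `timed` and `fake_style_transfer` are hypothetical names, and the placeholder workload stands in for the real optimization loop.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn once and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def fake_style_transfer(num_steps):
    # Placeholder workload standing in for the real optimization loop.
    return sum(step * step for step in range(num_steps))


_, seconds = timed(fake_style_transfer, 300)
print(f"300 steps took {seconds:.6f}s")
```

If the optimization runs on a GPU, calling `torch.cuda.synchronize()` before reading the clock is usually needed, since CUDA kernels are launched asynchronously and the wall-clock time would otherwise be underestimated.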

My favourite pictures

| Style | Content | Result |
|---|---|---|
|  |  |  |
|  |  |  |

Bells and Whistles

I tried my previous work (image blending) with style transfer too.

| Style | Content | Result |
|---|---|---|
| Impression Sunrise |  |  |

Reference

- For clarification, parts of my code are inspired by the official PyTorch tutorial: https://pytorch.org/tutorials/advanced/neural_style_tutorial.html