16-726 Final Project

Alex Strasser (astrasse)

An Exploration of GANs through LEGO Synthesis

Introduction

In this project, I tried several GAN techniques to generate images of LEGO bricks.

Methods

For this project, I wanted to use some of the common GAN models to see how they compare in the generation of LEGO bricks.

This is a very interesting generation problem due to the hard edges (blobs will not be sufficient), distinct features (studs need to be very visible), and different angles (underside looks very different).

I approached this problem using the B200C LEGO classification dataset, which contains 4,000 images of each of the 200 most popular parts (800,000 images in total). Datasets like this are traditionally used to train classification networks, but I wanted to train generative networks instead.

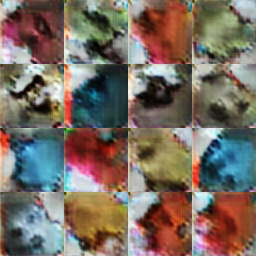

First, I tried the vanilla DC GAN model, which uses convolutional layers instead of fully connected layers in the generator and discriminator networks. I ran it with the deluxe preprocessing and differentiable augmentation enabled. Unfortunately, all the backgrounds came out relatively gray, and although some LEGO patterns appear, the results are mostly blobs, right up until collapse and divergence.
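As a rough illustration of how a convolutional generator grows a single latent vector into an image, the sketch below computes the spatial size after each transposed convolution. The layer settings in `ASSUMED_LAYERS` are a common DCGAN-style choice and are an assumption, not values taken from the project code.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Side length after a square transposed convolution
    (no output padding, no dilation)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(layers, start=1):
    """Track the feature-map side length through a stack of layers."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes


# Assumed (kernel, stride, padding) per layer; hypothetical, for illustration.
ASSUMED_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

print(generator_sizes(ASSUMED_LAYERS))  # [1, 4, 8, 16, 32, 64]
```

With these settings, each stride-2 layer doubles the resolution, so five layers take a 1x1 latent input to a 64x64 image.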

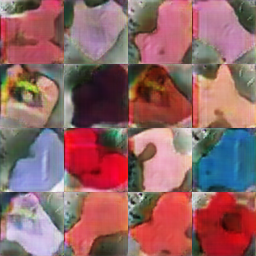

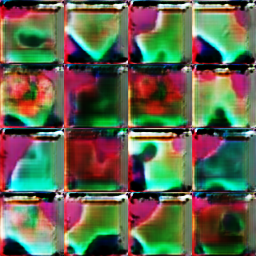

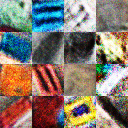

Next, I used a conditional GAN, which allowed me to generate specific piece types on demand. To get usable results, I restricted training to the first 10 LEGO brick categories, and I started with grayscale images before scaling up to RGB. Because this conditional GAN is built from fully connected linear layers, moving to RGB triples the number of image values, so the layers connected to the image required about three times as many weights to train.
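The cost of moving a fully connected model from grayscale to RGB can be estimated with a little arithmetic. The sketch below counts the parameters of a first discriminator layer that takes a flattened image plus a label vector. The image side, label size, and hidden width (`SIDE`, `LABEL_DIM`, `HIDDEN`) are assumed values chosen for illustration, not the ones used in the project.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


def first_layer_params(channels, side, label_dim, hidden):
    """First layer: flattened image concatenated with a label vector."""
    return linear_params(channels * side * side + label_dim, hidden)


# Assumed sizes (hypothetical): 64x64 images, 10 classes, 1024 hidden units.
SIDE, LABEL_DIM, HIDDEN = 64, 10, 1024

gray = first_layer_params(1, SIDE, LABEL_DIM, HIDDEN)
rgb = first_layer_params(3, SIDE, LABEL_DIM, HIDDEN)
print(f"grayscale: {gray:,}  RGB: {rgb:,}  ratio: {rgb / gray:.2f}")
```

Under these assumptions the ratio comes out just under 3, because the label inputs and biases do not scale with the number of color channels.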

I also attempted to train a conditional DC GAN, a conditional network that uses convolutional layers instead of fully connected layers. In theory, this would make RGB training easier and would allow the model to perform better at higher resolutions. Unfortunately, I found very little literature on conditional DC GANs, and I was not able to get the model to train properly.
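One way to see why convolutional layers should scale better to RGB and to higher resolutions is to compare first-layer parameter counts. In the sketch below, the convolution's size depends only on channels and kernel size, while the fully connected layer grows with the number of pixels. The width of 64 and kernel of 4 are assumptions for illustration, not settings from the project.

```python
def conv_params(in_channels, out_channels, kernel):
    """Weights plus biases of a square 2D convolution."""
    return in_channels * out_channels * kernel * kernel + out_channels


def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


def first_layer_counts(side, channels, width=64, kernel=4):
    """Return (conv, dense) parameter counts for a first layer.

    width and kernel are assumed values, chosen only for illustration.
    """
    conv = conv_params(channels, width, kernel)
    dense = linear_params(channels * side * side, width)
    return conv, dense


for side in (32, 64, 128):
    for channels in (1, 3):
        conv, dense = first_layer_counts(side, channels)
        print(f"{side}x{side}, {channels} ch: conv={conv:,} dense={dense:,}")
```

The convolutional count stays fixed as the image side grows, whereas the dense count roughly quadruples each time the side doubles.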

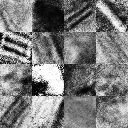

Finally, I trained StyleGAN on an unlabeled version of the dataset that uses all 800,000 images. Unfortunately, due to time and resource constraints, training did not finish, but I have shown the most recent results.

All results are shown below.

Results

Vanilla DC GAN

| 5,000 | 33,000 | 34,000 |
|---|---|---|
| | | |

Conditional GAN (grayscale)

| 5,000 | 50,000 | 450,000 |
|---|---|---|
| | | |

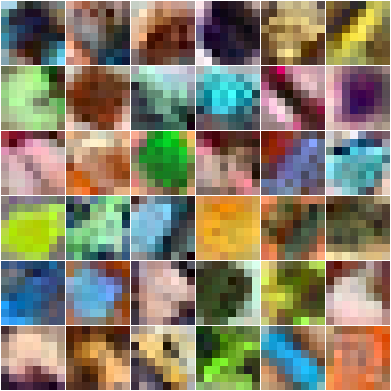

Conditional GAN (RGB)

| 5,000 | 50,000 | 1,000,000 | 1,250,000 |
|---|---|---|---|
| | | | |

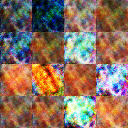

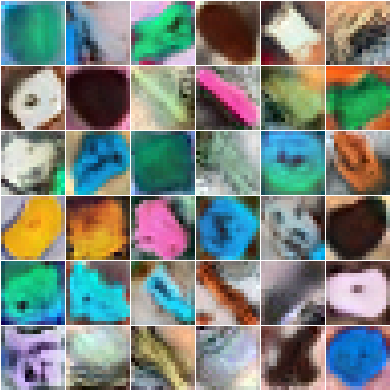

Conditional GAN (RGB) Conditionality

All results shown using the model frozen at 1,000,000 epochs.

It is clear from these results that some pieces worked better than others. However, many of the samples show distinctly LEGO-like pieces, which demonstrates that the conditionality is working as intended.
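A conditionality check like this one can be set up by feeding the generator the same noise vector with a different class label each time, so that any change in the output comes from the label alone. The sketch below builds such inputs using a one-hot label appended to the noise. The one-hot encoding, the noise size of 100, and the helper names are assumptions; the project code may encode labels differently (for example, with a learned embedding).

```python
import random


def one_hot(index, num_classes):
    """Label vector with a single 1.0 at position index."""
    if not 0 <= index < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


def inputs_for_all_classes(num_classes=10, noise_dim=100, seed=0):
    """One generator input per class, all sharing the same noise.

    num_classes=10 matches the 10 categories used above; noise_dim and
    seed are assumed values for illustration.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return [noise + one_hot(c, num_classes) for c in range(num_classes)]


batch = inputs_for_all_classes()
print(len(batch), len(batch[0]))  # 10 110
```

Each row of `batch` would then be passed through the frozen generator to produce one sample per piece type.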

| Original Piece | Sample |
|---|---|
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |
| | |

Conditional DC GAN

These results are omitted as the model was not able to train past the first couple of iterations.

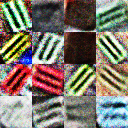

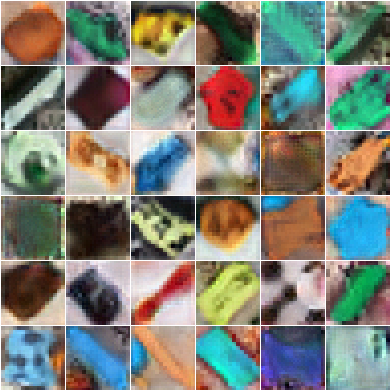

StyleGAN

StyleGAN trains progressively, starting at a low resolution and building up to higher resolutions.
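The progressive schedule can be sketched in a few lines: the resolution doubles at each stage, and during a transition the new, higher-resolution output is typically blended with the upsampled output of the previous stage. The starting resolution of 8 mirrors the table below, while the `fade_in` blend and its `alpha` parameter are a simplified assumption about how the transition works, not a copy of the training code.

```python
def resolution_schedule(start, final):
    """Resolutions visited when doubling from start up to final."""
    if start <= 0 or final < start:
        raise ValueError("need 0 < start <= final")
    resolutions = [start]
    while resolutions[-1] < final:
        resolutions.append(resolutions[-1] * 2)
    if resolutions[-1] != final:
        raise ValueError("final must be start times a power of two")
    return resolutions


def fade_in(old_upsampled, new, alpha):
    """Blend two equally sized outputs; alpha goes from 0 to 1
    as the new resolution is phased in."""
    return [(1 - alpha) * o + alpha * n for o, n in zip(old_upsampled, new)]


print(resolution_schedule(8, 32))               # [8, 16, 32]
print(fade_in([0.0, 2.0], [1.0, 4.0], 0.5))     # [0.5, 3.0]
```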

Although training was not complete, it is pretty clear that LEGO pieces are emerging from the generated images.

| Resolution | Sample |
|---|---|
| 8x8 |  |
| 16x16 |  |
| 32x32 (unfinished training) |  |

In the future, it would be interesting to see the StyleGAN results once training is complete, and to explore the StyleGAN latent space as done in GANSpace. In theory, it would be possible to build on this work and create controls for generating custom LEGO bricks using the interpretable GAN controls.

I thoroughly enjoyed putting everything I have learned this semester to use in order to analyze the performance of each of these models. It appears that StyleGAN performs the best, but it also takes much longer to train than any of the other models. It would be interesting to add the conditionality component to StyleGAN as well, though conditioning on fixed piece types would not lend itself as well to generating custom pieces.

References

Vanilla DC GAN Code: modified from 16-726 Assignment 3 from CMU

Conditional GAN Code: heavily modified from https://github.com/arturml/mnist-cgan/blob/master/mnist-cgan.ipynb

Conditional DC GAN Code: heavily modified from https://github.com/togheppi/cDCGAN

StyleGAN Code: modified from https://github.com/huangzh13/StyleGAN.pytorch

Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., … Bengio, Y. (2014). Generative Adversarial Networks. https://arxiv.org/abs/1406.2661

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. https://arxiv.org/abs/1511.06434

Mirza, M., & Osindero, S. (2014). Conditional Generative Adversarial Nets. http://arxiv.org/abs/1411.1784

Karras, T., Laine, S., & Aila, T. (2018). A Style-Based Generator Architecture for Generative Adversarial Networks. https://arxiv.org/abs/1812.04948

Härkönen, E., Hertzmann, A., Lehtinen, J., & Paris, S. (2020). GANSpace: Discovering Interpretable GAN Controls. https://arxiv.org/abs/2004.02546