Antioch Sanders

Proposal

I would like to shrink a model so that I can perform robust arbitrary style transfer on the OAK-1, a hardware platform for lightweight, deep-learning-based computer vision. To do so in a manner that is not only fast but also produces high-quality results, I would like to combine two ideas from recent literature (2021). The two papers are:

Rethinking and improving the robustness of image style transfer

Drafting and revision: Laplacian pyramid network for fast high-quality artistic style transfer

However, because the OAK-1 platform is so new, some of the base code that previously worked no longer works or was not well documented. Thus, in this work I show how I reduced the model's parameter count, among other changes, but I was not able to run the models on the device.

Initial Big-Model Results

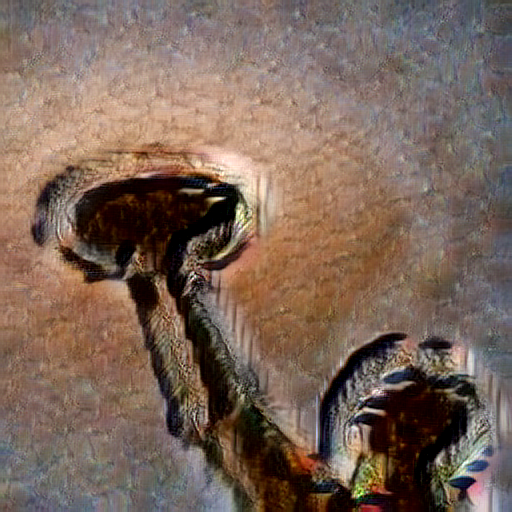

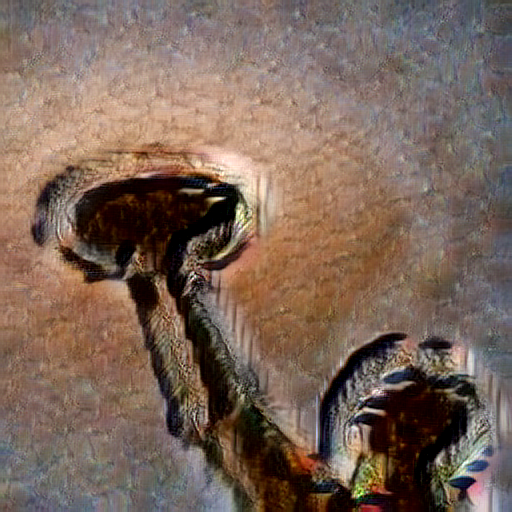

With the original AdaIN model, as it was before this project, these are the results I am able to get:

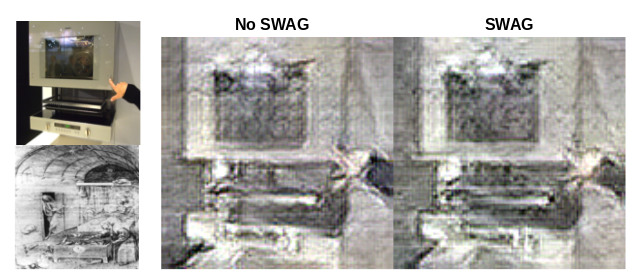

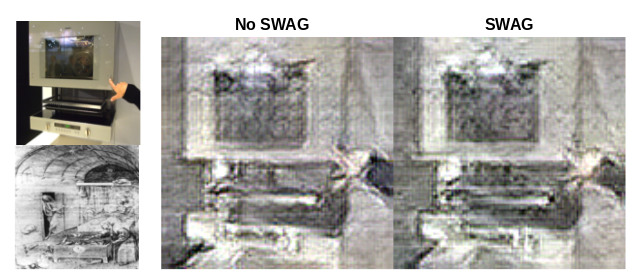

Low-Res Results: Residual Network with SWAG

However for experimentation, I had to drastically lower the resolution. I also moved to using a

residual network, since I can shrink a residual network to be smaller. The first papers also

says that for the residual network, it is good to use SWAG. Swag is where in the loss functions,

the activations are either multiplies by a value near 0, or taken a softmax over some or all

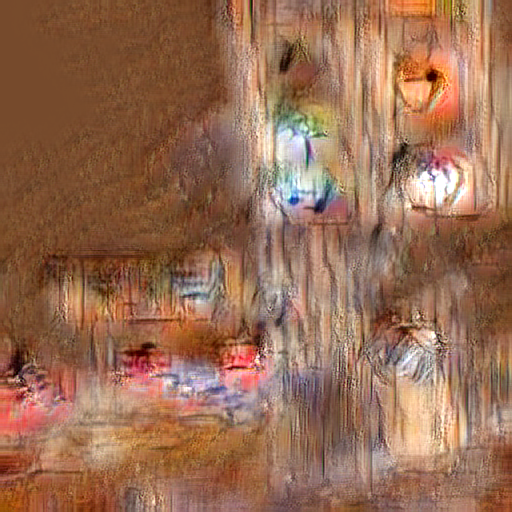

dimensions. Originally, this led to lots of images like so:

I had to do extensive hyperparameter experimentation to fix this; the lambdas had to be changed

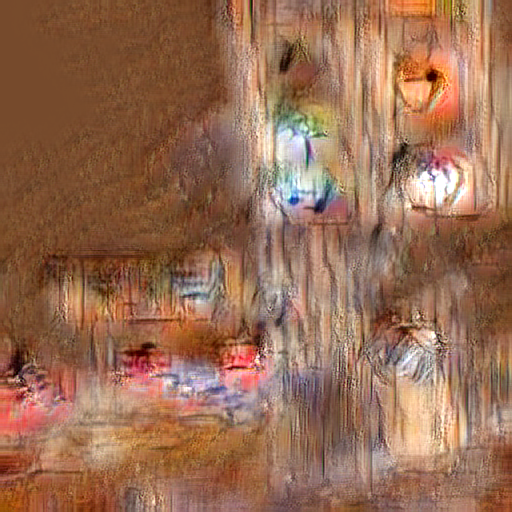

by several orders of magnitude in order to fix the problem. After this, my results looked like so:

You can see that switching to the residual network and using SWAG does not make a lot of difference to the output. However, I also did not notice a big difference from just switching to the residual network.
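To make the two SWAG variants described above more concrete, here is a minimal sketch in plain Python. It is not the code from the submission: the function names, the toy activation values, the `factor` and `temperature` parameters, and the use of a simple mean-squared error are all assumptions chosen for illustration. The only idea taken from the text is that activations are smoothed (scaled by a value near 0, or passed through a softmax) before the loss compares them.

```python
import math

def scale_activations(acts, factor=0.01):
    # Variant 1: multiply every activation by a value near 0 (factor is assumed).
    return [a * factor for a in acts]

def softmax_activations(acts, temperature=1.0):
    # Variant 2: softmax over one dimension, here a single flat list.
    # `temperature` is a hypothetical knob; the report does not mention one.
    shifted = [a / temperature for a in acts]
    top = max(shifted)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]

def mse(a, b):
    # Mean-squared error between two equally long activation lists.
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def smoothed_loss(output_acts, target_acts, smooth):
    # Compare smoothed activations instead of raw ones inside the loss.
    return mse(smooth(output_acts), smooth(target_acts))

# Toy activations with one dominant peak (made-up values).
output_acts = [0.0, 0.5, 12.0, 1.0]
target_acts = [0.2, 0.4, 3.0, 1.5]

raw_loss = mse(output_acts, target_acts)
scaled_loss = smoothed_loss(output_acts, target_acts, scale_activations)
softmax_loss = smoothed_loss(output_acts, target_acts, softmax_activations)

print(raw_loss, scaled_loss, softmax_loss)
```

In this toy setting both smoothed losses are much smaller in magnitude than the raw loss. That may be one reason the loss weights need re-tuning once smoothing is switched on, although the sketch does not prove this for the real model.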

By switching to the residual network for the encoder, I was able to greatly decrease model size, with very little change in how the output looks (once SWAG is used). In fact, I go from 3509568 parameters to 683072 parameters; I decrease model size by about 80%, and still get the results from above. In theory, this should be small enough to fit on the OAK-1.
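The size reduction can be checked with a few lines of arithmetic. The sketch below uses only the two parameter counts quoted in the text. The helper names are hypothetical, and the storage estimate assumes 2 bytes per parameter (FP16), which is an assumption for illustration and not a measured figure for the device.

```python
def parameter_reduction(before, after):
    # Fraction of parameters removed when going from `before` to `after`.
    return 1.0 - after / before

def weight_megabytes(num_params, bytes_per_param=2):
    # Rough weight storage; 2 bytes per parameter assumes FP16 weights.
    return num_params * bytes_per_param / 1e6

params_before = 3509568  # larger parameter count quoted in the text
params_after = 683072    # smaller parameter count quoted in the text

reduction = parameter_reduction(params_before, params_after)
print(f"reduction: {reduction:.1%}")
print(f"approx. weights: {weight_megabytes(params_after):.2f} MB (assuming FP16)")
```

The ratio of the two counts comes out at roughly 0.19, which matches the stated reduction of about 80%.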

For the drafting and revision network, I have built some components of the model, but there is still work to be done; the parts I do have are included in the code submission.

Here are some more images: