January 25, 2011 (Lecture 5)

Storage Devices, Failure, and the Prospects for Recovery

Today, we're going to discuss some of the many types of storage devices often encountered in a forensic practice: their durability, resilience, and longevity, and the prospects for recovery in the event of damage or failure.

A Quick Word of Caution

A "destructive examination" is any examination that changes the subject of the examination in any way. In general, destructive examinations are conducted only when there are no viable alternatives for getting the sought information, and only with specific permission and guidance from the authority convening the investigation, or a duly authorized representative.

In most legal cases, this means that the attorney who has hired you needs to be consulted before such an examination is conducted. The attorney may well need to initiate specific legal processes to enable the examination. In corporate settings, consultation with peers or permission of a manager might be required. In any case, it is usually essential to thoroughly document the original condition of the device and the reasons the destructive examination was necessary. And, in many cases, any other interested party, e.g. the "other side", might want to thoroughly examine the device first, be present during the destructive exam, or conduct the destructive exam themselves.

It is important to note that even "essential repairs" of failed devices cannot be made without special permission. Although fixing something broken might seem kind, and might be essential, it is changing evidence. This should set off alarms.

USB "Thumb" Flash Drives

USB "Thumb" or "Flash" drives are familiar to all of us. In very approximate terms, they come in sizes from near-0 to a terabyte or so. They are very convenient because of their relatively high storage capacity for their size. Their read throughput is about 30 MB/s, which is about 1/3 of the fastest hard drives, but on par with the slowest. Their write performance is about half of that, which really hurts. On the good side, unlike hard drives, there are no heads to move into position, so read and write latency are negligible.

Like anything else, the "flash" memory for which the devices are named does have a finite lifetime. But, what doesn't? In particular, a sector will wear out after roughly 100,000 writes. And, you can imagine that certain sectors are necessarily the hottest, because of the metadata they store.

But, back to the real world. USB drives are really durable. There are no moving parts. They can usually take a beating and keep on ticking. If a thumb drive doesn't work -- it is immediately a pretty bad sign. We probably want to try again using a different port or eliminating any hubs, etc. But, after that, the prognosis is pretty poor.

What can go wrong? Well, if the drive doesn't work, our best hope is that it is a mechanical issue. The connectors, traces, and small wires within the drive can break. If this is the case, they might be repairable. Or, you might be able to work around them. To achieve this, the plastic case can be opened, which might cause it to crack. Then the bad traces can be repaired, such as with a trace pen or soldering iron. Or, they can be bypassed by soldering wires around the break. These traces and wires are very small. It takes the right equipment and the right skills to fix them. If either is missing, the attempt can make a mess and cause further damage, which might make a future repair, even by someone competent and equipped, impossible. A failed repair may also cause embarrassment and call one's overall competency and credibility into question.

If the problem isn't mechanical, it is unlikely to be resolvable. Take

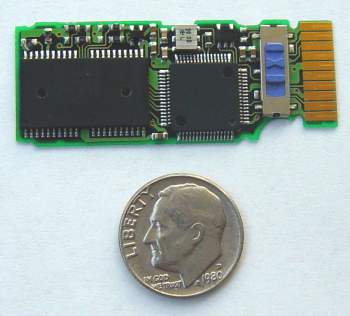

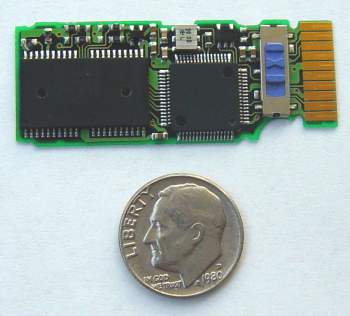

a look at the picture below. It is the inside of a fairly typical drive.

What do you notice?

As an aside, do be careful about viruses and other malware from thumb drives.

I'm not talking about evidence here. I'm talking about your lab equipment

and life equipment. People pick up thumb drives from anywhere and use them.

Where have they been? What do they have? They might be fine. They might

have caught something along the way -- or someone might have planted something

nearby, just for you to pick up.

Source: http://usbmemorysticks.net"

|

Other than the obvious parts, like the connector, case, and LED, these drives contain very few significant parts, anywhere from one to three. Most drives have two chips: a big one and a small one. The big one is usually the flash memory. The small one is usually the controller, which manages communication over the USB bus. Sometimes you can also distinguish the oscillator, a crystal or chip that provides the timing pulses that drive the electronics. Often, there is a scattering of capacitors, diodes, resistors, and other small parts. But, they aren't like the ones in ECE class -- they are the size of a broken piece of pencil lead. For the most part, if the electronics are bad, the drive is dead. One bad sign, for example, is a message on the computer indicating that the USB device is drawing too much power. This signals an electronic problem beyond a broken connection.

A determined and highly skilled technician can often replace some of the small capacitors, diodes, etc. In rare cases, an extremely skilled technician can replace the USB controller, but this is very dependent on the availability of a replacement part, the skill of the technician, the equipment available, and the manufacturing techniques used on the drive. A lot needs to align correctly for this type of repair to be possible. If the flash memory chip has failed -- it is game over.

I guess the upshot is this. If you get a USB device and it doesn't work, it is time for good communication. It is important that you explain the likely possibilities (repairable mechanical failure, repairable electronic failure, unrepairable failure), your capabilities, and the likely cost-effectiveness of having a specialist attempt the repair prior to any attempt at analysis. For non-technical audiences, it is often worth noting that any repair involves a non-reversible alteration of the evidence. If this goes unsaid, it is not clear to everyone.

CD-ROMs (and Audio CDs)

In understanding the various compact disc formats, it is essential to remember that the original CD was designed for listening to music, and only listening to it -- not recording it or editing it. And, with that application in mind, it was designed to achieve higher density, greater durability, lighter weight, and greater portability than other contemporary options, including the magnetic disks and tapes available at the time.

Think about that -- it is very different from today. CDs were magical. They had a dramatically greater capacity than floppy diskettes, tapes, hard drives, etc, etc, etc. And, yet, they were dramatically lighter and more durable. I mean it. Magic. Shiny magic.

To get a higher bit density than other media, they used lasers and reflection, rather than magnetism and coiled wire. The original CDs were not burnable at home, and were certainly not rewritable. They were manufactured, or "pressed". The basic idea was this. A thin, reflective foil disk was "pressed" to form a pattern of pits. These pits could be distinguished from the unpitted areas, called lands, by the way the light projected by the laser bounced back.

The pattern of pits and lands was organized in a single spiral "track", in much the same way your grandparents' vinyl records encoded sounds through bumps in a single spiral groove. It is important to note that, if the disk were to spin at a constant angular velocity (CAV), e.g. 45 RPM or 78 RPM, as records did for your grandparents, the pits and lands would be smaller on the inside than on the outside. This is because the outside of the disk is bigger around, so, at a fixed RPM, more inches of track pass under the laser (or needle) each second there than near the center. To deliver the same number of bits per second everywhere, each pit or land must be stretched longer on the outside. This is fine. And, this is simple. As your grandparents knew, there is no reason the encoding can't be denser on the inside than on the outside. And, it is very simple and easy to get right and keep right. This is why your grandparents, without electronic control systems, did it this way.

But, the problem with it is that we're wasting space on the outside by being less dense than otherwise necessary. For this reason, your parents, blessed with excellent electronic controls, had the luxury of constant linear velocity (CLV) CDs. These disks don't rotate at a constant RPM. They spin faster when the laser is reading the inside than when it is reading the outside, so that the track always passes under the laser at the same speed. This maintains the maximum information density across the whole CD.
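To put rough numbers on CLV, the sketch below computes how fast the disk must spin for the track to pass the laser at a constant speed. The figures are assumptions, not taken from these notes: a scanning speed of about 1.3 m/s and a program area running from roughly 25 mm to 58 mm from the center. `rpm_for_clv` is a hypothetical helper name.

```python
import math

def rpm_for_clv(linear_speed_m_s: float, radius_m: float) -> float:
    """Revolutions per minute needed so the track passes the laser at
    linear_speed_m_s while the laser sits radius_m from the center."""
    circumference = 2 * math.pi * radius_m  # metres of track per revolution
    return linear_speed_m_s / circumference * 60

# Assumed figures: ~1.3 m/s scanning speed, program area ~25 mm to ~58 mm.
SCAN_SPEED = 1.3
for label, radius in [("inner", 0.025), ("outer", 0.058)]:
    print(f"{label} edge: {rpm_for_clv(SCAN_SPEED, radius):.0f} RPM")
# inner edge: 497 RPM
# outer edge: 214 RPM
```

Under these assumptions the drive must more than halve its rotation rate as it works outward, which is exactly the speeding up and slowing down discussed next.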

As it turns out, slowing down and speeding up a CD takes time. And, so does calibrating one's understanding of the current position of the laser and detector, as well as the current speed of the disk's rotation. The magic word here is "tolerance". It is, in practice, impossible to get things "exactly" right. Instead, we have to design systems that can "tolerate" variances. Ask a friendly engineering student. Since we don't know "exactly" where we are on the CD, and don't know "exactly" what speed it is rotating, and we can't read the disk if the speed isn't right for the location, we've got some guessing to do. Drives use heuristics to tune their initial speed and location, and then feedback to maintain it. Seeks around a CD are expensive precisely because of this. It takes time to slow down or speed up the rotation of the disk to match the right speed for the location, and time to fine-tune that once we are at approximately the right speed and location.

This is fine for audio CDs, because seeks are rare. We might need to seek to a particular song, but once we are there, we just plod along linearly. The CD drive is capable of slowly adjusting its speed, tuning its location and speed as it goes. But, this is inconvenient for data files, because we often want to skip around. This is why CDs are often viewed as an archival or distribution medium, not a medium for active use akin to a hard drive.

So, at this point, we know that the data on a CD-ROM is encoded through pits and lands within a spiral track on a shiny disk (packaged in polycarbonate on the bottom and lacquer on the top). But, how is this track organized? The basic unit is a sector. Any time we read or write, it is at least a sector. By design, we can't read or write half a sector. Technically, a sector is decomposed into frames, each of which represents a few stereo audio samples. But, for data, we don't care about that. A sector is 2352 bytes. Of these 2352 bytes, 2048 contain actual data (in the common mode 1 format). The rest contain metadata needed to get to the 2k we care about. The sector is organized as follows:

- sync (12 bytes): 00 FF FF FF FF FF FF FF FF FF FF 00 (hex)
- header (4 bytes): timestamp (3 bytes), then mode (1 byte)
- user data (2048 bytes)
- EDC (4 bytes): Error Detection Codes, extra bits used to detect some errors beyond those that can be corrected by ECCs (below)
- reserved (8 bytes): all 0s
- ECC (276 bytes): Error Correction Codes, extra bits used to correct some errors in reading
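As a sketch of how a tool might carve a raw sector into these fields, assuming we already have all 2352 bytes (many drives hand back only the 2048 user-data bytes, so that is an assumption), the hypothetical `split_mode1_sector` below slices by the sizes listed above. It checks the sync pattern but leaves the timestamp bytes undecoded.

```python
SYNC = bytes([0x00] + [0xFF] * 10 + [0x00])  # 12-byte sync pattern

# (field name, size in bytes) for a Mode 1 sector, in on-disc order.
MODE1_LAYOUT = [
    ("sync", 12),
    ("header", 4),
    ("user_data", 2048),
    ("edc", 4),
    ("reserved", 8),
    ("ecc", 276),
]

def split_mode1_sector(raw: bytes) -> dict:
    """Slice a raw 2352-byte Mode 1 sector into its named fields."""
    if len(raw) != sum(size for _, size in MODE1_LAYOUT):
        raise ValueError("expected a 2352-byte raw sector")
    fields, offset = {}, 0
    for name, size in MODE1_LAYOUT:
        fields[name] = raw[offset:offset + size]
        offset += size
    if fields["sync"] != SYNC:
        raise ValueError("sync pattern not found; not aligned on a sector")
    minute, second, frame, mode = fields["header"]  # 3 timestamp bytes + mode
    fields["address"] = (minute, second, frame)
    fields["mode"] = mode
    return fields

# Usage with a made-up sector: timestamp 00 02 00, mode 1, all-zero payload.
fake = SYNC + bytes([0x00, 0x02, 0x00, 0x01]) + bytes(2048) + bytes(4 + 8 + 276)
parsed = split_mode1_sector(fake)
print(parsed["address"], parsed["mode"], len(parsed["user_data"]))
```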

An Aside About Error Detection and Correction

Stored data, or data in transmission, is rarely perfectly safe. Transmitting data subjects it to interference. Stored data is subject to interference during reading and writing, aging, and damage. Corrupted data is very hard for application software to manage -- it can't reasonably be expected to check every single piece of data for errors before using it. So, we try to take care of this within the storage system itself.

The basic idea is that we break the data into pieces, for example sectors or frames. To each of these we add some additional information: Error Detection Codes (EDCs) or Error Correction Codes (ECCs). These extra bits enable us to detect and/or correct most failures. In either case, these extra bits cost some storage space or bandwidth, so we like to keep them to a minimum, thereby spending most of our space and time on the actual data. And, ECCs are larger than EDCs, so we use them sparingly. In fact, we generally only use ECCs in storage systems, e.g. disk drives, or in particularly error-prone but high-bandwidth media, e.g. satellite links. For most other communication, we use only EDCs, because we can usually resend the data on the rare occasions that it becomes corrupted in transmission.

In summary, by adding ECCs to data, we gain the ability to correct some errors. By adding EDCs, we gain the ability to detect some errors -- but we are unable to correct them, so our recourse is to discard the frame.

Visualizing Error Correction and Error Detection

Imagine that we want to send a message over the network. Or, as in a storage system, from ourselves now to ourselves or someone else later, using the storage system as a long-term buffer. Let's imagine a really simple case. Our message is either a) RUN or b) HIDE. We could very efficiently encode these messages as follows:

- RUN: 0
- HIDE: 1

But, the problem with this encoding is that if a single bit gets flipped, our message is undetectably corrupted. By flipping just one bit, one valid codeword is morphed into another valid codeword.

Now, let's consider another encoding, as follows:

- RUN: 00
- HIDE: 11

The 2-bit encoding requires twice as many bits to send the messages -- but offers more protection from corruption. If we, again, assume a single-bit error, we still garble our transmission. Either "00" or "11" can become "01" or "10". But, neither can, in the case of a single-bit error, be transformed into the other. So, in this case, we can detect, but not correct, the error. It would take a two-bit error for the error to become undetectable.

Now, let's consider a 3-bit encoding, which takes three times as much storage as our original encoding and 50% more storage than our encoding which allowed for the detection of single-bit errors:

- RUN: 000
- HIDE: 111

Given the encoding above, if we encounter a single-bit error, "000" can become "100", "010", or "001", each of which is 2 bits from our other valid codeword, "111". Similarly, should a transmission of "111" encounter a single-bit error, it will become "011", "101", or "110" -- still closer to "111" than "000".

The upshot is this. Since single-bit errors are much more common than multiple-bit errors, we correct a defective codeword by assuming that it is intended to be whatever valid codeword happens to be closest to it, as measured in these bit flips. If what we have doesn't match any codeword, but is equally close to more than one codeword, it is a detectable, but uncorrectable, error.
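Here is a minimal sketch of the "closest valid codeword" rule applied to the RUN/HIDE encodings above. `bit_flips` and `decode` are illustrative names, not from any library, and the sketch assumes every received word has the same length as the codewords.

```python
from __future__ import annotations

def bit_flips(a: str, b: str) -> int:
    """Number of positions at which two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))

def decode(received: str, code: dict[str, str]) -> str | None:
    """Map a received word to the message whose codeword is closest to it.
    Returns None on a tie: the error is detectable but not correctable."""
    ranked = sorted((bit_flips(received, cw), msg) for msg, cw in code.items())
    if len(ranked) > 1 and ranked[0][0] == ranked[1][0]:
        return None
    return ranked[0][1]

ONE_BIT = {"RUN": "0", "HIDE": "1"}
TWO_BIT = {"RUN": "00", "HIDE": "11"}
THREE_BIT = {"RUN": "000", "HIDE": "111"}

print(decode("1", ONE_BIT))      # HIDE: a flipped RUN is silently misread
print(decode("01", TWO_BIT))     # None: detected, but can't tell which
print(decode("010", THREE_BIT))  # RUN: corrected
```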

Hamming Distance

In the examples above, I measured the "distance" between two codewords in terms of the number of bits that one would need to flip to convert one codeword into the other. This distance is known as the Hamming Distance.

The Hamming Distance of a code (as opposed to that between two individual codewords) is the minimum Hamming Distance between any pair of distinct codewords within the code. This is important, because this pair is the code's weak link with respect to error detection and correction.

This is a useful measure, because as long as the number of bits in error is less than the Hamming Distance, the error will be detected -- the codeword will be invalid. Similarly, if the Hamming Distance of the code is more than double the number of bits in the error, the defective codeword will be closer to the correct one than to any other.
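Put as formulas, a code with Hamming Distance $d$ detects any error of up to $d - 1$ bits and corrects any error of up to $\lfloor (d - 1)/2 \rfloor$ bits. The small sketch below computes $d$ for a list of codewords and applies those two rules; the function names are hypothetical.

```python
from __future__ import annotations
from itertools import combinations

def code_distance(codewords: list[str]) -> int:
    """Hamming Distance of a code: the minimum over all pairs of codewords."""
    return min(
        sum(x != y for x, y in zip(a, b))
        for a, b in combinations(codewords, 2)
    )

def capabilities(codewords: list[str]) -> dict[str, int]:
    d = code_distance(codewords)
    return {
        "distance": d,
        "detects": d - 1,          # any error of up to d - 1 bits is detected
        "corrects": (d - 1) // 2,  # any error of e bits with 2e < d is corrected
    }

for code in (["0", "1"], ["00", "11"], ["000", "111"]):
    print(code, capabilities(code))
```

Run on the three RUN/HIDE encodings, this reproduces the story above: the 1-bit code does neither, the 2-bit code detects single-bit errors, and the 3-bit code corrects them.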

How Many Check Bits?

Let's consider some code in which the codewords are dense, that is, every possible bit pattern is a valid codeword. This would, for example, be the case if we just took raw data and chopped it into pieces for transmission, or if we enumerated each of several messages. Given this situation, we can add extra bits to increase the Hamming Distance between our codewords. But, how many bits do we need to add? And, how do we encode them? Hamming answered both of these questions.

Let's take the first question first. If we have a dense code with m message bits, we will need to add some r check bits to the message to put distance between the codewords. So, the total number of bits in the codeword is n, such that $n = m + r$.

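If we do this, each of the $2^m$ legal codewords has n illegal bit patterns within 1 bit of it (flip each bit in turn). To be able to correct a single-bit error, each legal codeword needs all of these to itself, plus the codeword itself: $n + 1$ bit patterns per codeword, so that 1-bit errors fall closer to one codeword than to any other. And, there are only $2^n$ bit patterns of length n to go around.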

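We can express this relationship as below (the second line follows from the first by dividing both sides by $2^m$ and substituting $n = m + r$):

- $2^n \ge (n + 1) \cdot 2^m$
- $2^r \ge m + r + 1$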
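Solving the second inequality for r is just a matter of trying increasing values until it holds. A minimal sketch (`check_bits_needed` is a hypothetical name):

```python
def check_bits_needed(m: int) -> int:
    """Smallest r with 2**r >= m + r + 1, i.e. the fewest check bits that
    can let m message bits survive any single-bit error."""
    r = 0
    while 2 ** r < m + r + 1:
        r += 1
    return r

for m in (1, 4, 8, 16, 32, 64):
    r = check_bits_needed(m)
    print(f"m={m:2d} message bits -> r={r} check bits, n={m + r}")
```

Note that m = 1 gives r = 2, so n = 3: exactly the 3-bit RUN/HIDE encoding from earlier. For larger messages the overhead shrinks quickly; m = 4 needs 3 check bits (7 bits total), and m = 64 needs only 7.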

Cyclic Redundancy Check (CRC)

Perhaps the most popular form of error detection in networks is the Cyclic Redundancy Check (CRC). The mathematics behind CRCs is well beyond the scope of the course. We'll leave it for 21-something. But, let's take a quick look at the mechanics.

Smart people develop a generator polynomial. These are well-known and standardized. For example:

- CRC-12: $x^{12} + x^{11} + x^3 + x^2 + x + 1$
- CRC-16: $x^{16} + x^{15} + x^2 + 1$
- CRC-CCITT: $x^{16} + x^{12} + x^5 + 1$

These generators are then represented in binary, representing the presence or absence of each power-of-x term as a 1 or 0, respectively, as follows:

- CRC-12: 1100000001111
- CRC-16: 11000000000000101
- CRC-CCITT: 10001000000100001

In order to obtain the extra bits to add to our codeword, we first append to our message as many 0 bits as the degree of the generator (its highest power of x), and then divide the result by the generator. The remainder is the checksum, which we append to our message in place of those 0s. This means that our checksum will have one bit fewer than our generator -- note that, for example, the 16-bit generator has 17 terms (16 down to 0). Upon receiving the message, we repeat the computation. If the checksum matches, we assume the transmission to be correct; otherwise, we assume it to be incorrect. Common CRCs can detect a mix of single- and multi-bit errors.

The only detail worth noting is that the division isn't traditional long division. It is modulo-2 division. This means that both addition and subtraction degenerate to XOR -- the carry bit goes away.

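Let's take a quick look at an example. Our message is 1101011011, and our generator is 10011 (that is, $x^4 + x + 1$). Since the generator has degree 4, we first append four 0s, giving the dividend 11010110110000. Working from left to right, whenever the leading bit of the current 5-bit window is 1, we XOR the generator into it (and write a 1 in the quotient); otherwise, we just bring down the next bit (and write a 0):

- 11010 XOR 10011 = 01001; bringing down the next bit gives 10011
- 10011 XOR 10011 = 00000; bringing down the next five bits gives 10110
- 10110 XOR 10011 = 00101; bringing down the next two bits gives 10100
- 10100 XOR 10011 = 00111; bringing down the last bit gives 01110, which has a leading 0

The quotient is 1100001010, and what is left over, 1110, is the remainder. This is the checksum, so we transmit 11010110111110.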
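The same modulo-2 long division can be sketched in a few lines of Python. This version works on bit strings for readability rather than speed (practical CRC code typically uses shifts and lookup tables instead), and the function names are hypothetical:

```python
def mod2_remainder(dividend: str, generator: str) -> str:
    """Remainder of modulo-2 (XOR) long division of two bit strings."""
    bits = list(dividend)
    g = len(generator)
    for i in range(len(bits) - g + 1):
        if bits[i] == "1":  # "subtract" (XOR) only when the leading bit is 1
            for j in range(g):
                bits[i + j] = "0" if bits[i + j] == generator[j] else "1"
    return "".join(bits[-(g - 1):])  # the last degree-many bits are left over

def crc_checksum(message: str, generator: str) -> str:
    """Append degree-many 0s to the message, divide, and keep the remainder."""
    return mod2_remainder(message + "0" * (len(generator) - 1), generator)

checksum = crc_checksum("1101011011", "10011")
frame = "1101011011" + checksum
print(checksum)                        # 1110
print(mod2_remainder(frame, "10011"))  # 0000 -> no error detected
```

On the receiving side, dividing the whole frame (message plus checksum) by the generator leaves a zero remainder when nothing was corrupted, which is an equivalent way of "repeating the computation" and comparing checksums.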

Returning to the CD sector layout: the sync pattern and timestamp were required by old CD drives to help figure out where they were. The sync pattern was used to find the start of a sector. And, if it could be read, the timestamp would tell the drive which sector it had found, in MM:SS:FF format, where each frame (FF) is 1/75th of a second.
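Converting an MM:SS:FF timestamp into a running sector count is simple arithmetic, since each second holds 75 sectors. This sketch assumes, beyond what these notes say, that the three timestamp bytes are stored in binary-coded decimal (BCD) and that the count starts at 00:00:00, so the first data sector, conventionally at 00:02:00, comes out as 150. The helper names are hypothetical.

```python
FRAMES_PER_SECOND = 75
SECONDS_PER_MINUTE = 60

def from_bcd(byte: int) -> int:
    """Decode one packed binary-coded-decimal byte, e.g. 0x59 -> 59."""
    return (byte >> 4) * 10 + (byte & 0x0F)

def msf_to_sector(minute: int, second: int, frame: int) -> int:
    """Number of sectors from 00:00:00 to the sector at MM:SS:FF."""
    return (minute * SECONDS_PER_MINUTE + second) * FRAMES_PER_SECOND + frame

# Hypothetical header bytes 0x12 0x34 0x56, i.e. 12:34:56.
address = [from_bcd(b) for b in (0x12, 0x34, 0x56)]
print(address, msf_to_sector(*address))  # [12, 34, 56] 56606
```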

The mode field, used on data CDs, is sort of interesting. Most data CDs are mode 1. They do all of the cool stuff I just described. Mode 2 CDs are meant to hold audio and video files which, because they can be interpolated, can tolerate some error. In these cases, we ditch the EDC, reserved, and ECC fields and use this space for data, giving 2336 bytes of user data per sector.

As another bit of trivia, the bytes on a CD aren't encoded in a normal 8-bit form. Instead, a 14-bit pattern is used to encode each 8-bit byte. This is known as eight-to-fourteen modulation (EFM). The reason for this is the complexity of dealing with long streams of 0s or 1s. A CD counts 0s or 1s by measuring how long it sees a particular pattern of light. Because it "knows" its speed and "knows" the length of time it has been seeing a particular light reflection, it can compute how many pits or lands in a row would be required to pass by in that time. But, back to "tolerances". The drive doesn't know exactly how fast it is going, so this is an estimation process. Over time, error can creep up. And, adding an extra 1 here or 0 there can really mess up data.

In order to prevent this, each 8-bit byte is encoded as a 14-bit pattern. There are still only 256 bit patterns in use, out of the 16,384 possible 14-bit values. The extra bits are used, in effect, to introduce 1s or 0s, interrupting long runs of 0s or 1s. Just like mile-markers help you to estimate mileage correctly on the highway, state changes help the CD player to resync and time the 0s and 1s so that none are gained or lost within the timing tolerances.
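The rule EFM actually enforces is a run-length limit: treating each 1 as a transition between pit and land, there must be at least 2 and at most 10 zeros between consecutive 1s. The sketch below, under that assumption and ignoring the 3 "merging" bits real EFM places between 14-bit symbols, counts how many 14-bit patterns qualify. It finds 267, enough to give each of the 256 byte values its own pattern. `is_efm_candidate` is a hypothetical name.

```python
def is_efm_candidate(word: int, width: int = 14, d: int = 2, k: int = 10) -> bool:
    """True if `word`, as a width-bit pattern, has no run of 0s longer than k
    and at least d 0s between any two consecutive 1s."""
    bits = format(word, f"0{width}b")
    zero_runs = bits.split("1")
    # Every run of 0s, including leading and trailing runs, must be <= k.
    if any(len(run) > k for run in zero_runs):
        return False
    # Runs strictly between two 1s (all but the first and last) must be >= d.
    return all(len(run) >= d for run in zero_runs[1:-1])

candidates = [w for w in range(2 ** 14) if is_efm_candidate(w)]
print(len(candidates))  # 267
```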

Forensic investigations of CD-ROMs are rare. They are manufactured, not written by the user. Maybe we need to get some old documentation or find out about some feature of old software. But, we aren't usually looking for hidden data. So, what's there to know?

CDs are not invincible. The metal foil can oxidize, making them harder to read over time. The most commonly encountered damage is chipping and scratching. Cracks or cuts are also possible. The best we can probably do about the oxidation and graying of the internal foil is to try another drive that might be less sensitive, or send the CD to a true data recovery lab, which can very likely read it with very sensitive equipment. So, let's think about dealing with scratches, chips, and cracks.

The bottom of the CD is a thick layer of polycarbonate. The top is a thin layer of lacquer. If the bottom is scratched beyond the drive's ability to read it, we might well be able to sand/polish it out -- as long as we don't sand into the foil layer or scratch the top of the CD in the process. If the top is scratched, we are probably hosed. Many times, if you take a CD with scratches or chips on the top and hold it up to the light, you will be able to see light through the chips, because the foil holding the data will have been nicked.

Although intelligence services and some true data recovery labs are able to recover the remaining bits on a CD with damaged foil, this is often beyond the rest of us. If it doesn't work in one player, we can try another, but that is about the end of it. The same is true of a cracked or cut CD. The best we can often do, outside of a very advanced lab, is to stabilize the crack or cut, polish around it to ensure that the laser isn't more distracted than it need be, and hope for the best.

In terms of stabilizing damage, there are some important things to consider. The first is that we need to use the slowest speed we can on the CD drive. Broken CDs can shatter, destroying any hope of recovery, damaging the CD player (likely beyond repair), and possibly injuring a person. We should not attempt to recover a CD if we believe there is anything beyond a truly negligible risk of it breaking apart during the attempt.

Cracks and cuts can cause the CD to take on a convex shape. If we can cause it to lie flat as part of our repair, without making any holes in it, this can be helpful. Balance is also important, because it affects the stability of the spin. For this reason, many attempts at repair involve stabilizing the disk by placing clear labels over the entire disk surface, e.g. one big label cut to size, rather than taping just at the crack. Glues rarely have enough surface area to work. Taping or gluing on the top of the disk can pull off the lacquer and the metal foil, making the disk virtually unrecoverable. It is probably obvious that the edges of the crack or cut need to be brought together to read the bits, but it may be less obvious that this needs to be smooth and flat, without an edge, to allow the CD to spin freely.

Sometimes, beyond sensitivity, different CD players have different characteristics that might make them better or worse for recovering a particular CD. For example, players that clamp a CD from the center and suspend it on its side, or that can suspend it upside down, might work better with a slightly bent CD than those that rely on a better fit and gravity.

Damage on the inner part of a CD is generally worse than on the outer part of the disk for two reasons. The first is that, as we previously mentioned, the CD is used from the inside out. So, if the CD is not full, damage on the outside might not matter. The second is that cuts, bends, etc, near the center tend to cause more bowing than the same damage toward the outside, because the deviation often grows over the distance to the edge.

CD-Rs and CD-RWs

CD-Rs and CD-RWs are, in most important ways, like their close ancestor, the CD. Functionally, the biggest difference is that the CD-R can be written by the end user. And, a CD-RW can be written, and over-written, by the end user. The way this is accomplished is by changing the encoding layer between the polycarbonate at the bottom and the lacquer at the top.

In a CD-R or CD-RW, there is still a shiny foil -- but it contains no data. Instead, it serves as a mirror, reflecting the laser. Beneath it, toward the laser, is a layer that holds the bits. In the case of a CD-R, this layer is an organic dye. A CD burner uses a laser more powerful than the one used for reading to "burn" darkened areas in the CD-R. These "darkened" areas don't allow as much light back to the sensor as do the unburned areas. In this way, the unburned areas serve as lands and the burned areas serve as pits.

There are several different dyes used in CD-Rs. They have different characteristics. But, all degrade with exposure to sunlight. The older dyes (green cyanine) can degrade significantly with as little as a week of direct sunlight exposure. Newer dyes (phthalocyanine, which is silver, gold, or green, or Azo/Super Azo, which are blue) resist UV better, and can withstand a couple of weeks to a month of direct sunlight. Of course, these CDs typically last much longer -- because they are not exposed to direct sunlight for weeks to months. It is also worth noting that some of the dyes are -- dyed. So, it isn't always possible to know the quality of the dye based on its color. Different players have different sensitivities to degraded dyes. If an old CD-R won't read in one player, try another. True data recovery labs have very sensitive equipment.

CD-RWs do essentially the same thing, but using a different material. It is really cool, actually. They use a funky glass-like material, a chalcogenide phase-change layer, to encode the bits. On a blank disc, this layer is crystalline. To write a bit, an intense laser pulse melts a tiny spot, which cools so quickly that it freezes into a disordered, amorphous (glassy) state. The amorphous spot reflects light differently than the surrounding crystal. To erase the bits, a lower-power beam heats the spot just enough for it to recrystallize. For the curious, crystals form when the material is heated to about 200C, whereas melting it takes 500-700C. The rate of cooling is also important: cooling quickly from the melt is what leaves the spot amorphous. This is possible because the high-energy pulses are very small and very short. The disk acts as a heat sink, and the heat doesn't last long. CD-RWs do wear out over time -- the material doesn't switch between crystalline and amorphous as well after many writes. They are said to have a lifetime of about 1,000 writes. Heat and sunlight, such as a car's dashboard receives, can also degrade burned CD-RWs over time. Shocked, huh?

To get calibrated, the burner needs a reference point. This is called the pregroove. It is, in effect, a spiral guide track molded into the blank disc for the burner's laser to follow, and a slight wobble in it carries pre-recorded timing information and information about the CD-R/W manufacturer, etc. It can also be used for copy protection, to tell a copy from an original.

For forensic purposes, CD-Rs and CD-RWs are very much like CD-ROMs. They are often made less expensively, so the tops tend to be more fragile. They have shorter lifetimes, because they suffer from foil degradation, just as do CD-ROMs, plus a faster degradation of their marking layer. But, the same basic principles and guidance apply. Set reasonable expectations. Educate your client. Recommend specialists where appropriate and cost-effective.

Digital Video Disks, Digital Versatile Disks, or whatever: DVDs

DVDs are much like CDs on steroids. They can store up to 4.7GB on the outer layer. And, on some, there is an inner layer that can also hold data. These dual-layer disks use a semi-transparent gold outer layer in front of the aluminum layer. Each holds data. The laser can focus on either one.

The tracks on a DVD are just 0.74 µm apart, as compared to 1.6 µm on a CD. The pits and lands, called bumps on DVDs, can be a tiny 0.4 µm long. A single layer of a DVD has 1351 turns/mm for 49,324 turns -- producing 11.8 km of track. On a 20x DVD player, the outer track spins at 156 mph. They are actually quite impressive, I think.
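The track-length and speed figures above are easy to sanity-check. This back-of-the-envelope sketch assumes, beyond what these notes state, a data area from 24 mm to 58 mm in radius and a 1x DVD scanning speed of 3.49 m/s. It treats the tight spiral as a stack of concentric rings, so the track length is simply the data area divided by the track pitch.

```python
import math

TRACK_PITCH_M = 0.74e-6   # from the notes: 0.74 µm between turns
INNER_RADIUS_M = 0.024    # assumed start of the data area
OUTER_RADIUS_M = 0.058    # assumed end of a full data area
DVD_1X_M_PER_S = 3.49     # assumed 1x scanning speed (single layer)
METRES_PER_MILE = 1609.344

def spiral_length(r_inner: float, r_outer: float, pitch: float) -> float:
    """Approximate spiral track length: annulus area divided by track pitch."""
    return math.pi * (r_outer ** 2 - r_inner ** 2) / pitch

length_km = spiral_length(INNER_RADIUS_M, OUTER_RADIUS_M, TRACK_PITCH_M) / 1000
speed_mph = 20 * DVD_1X_M_PER_S * 3600 / METRES_PER_MILE
print(f"track ~{length_km:.1f} km, 20x ~{speed_mph:.0f} mph")
# track ~11.8 km, 20x ~156 mph
```

Both results line up with the figures quoted above, which suggests those figures assume roughly this geometry and 1x speed.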

But, they are much the same. Still pits. Still lands (bumps). Still CLV, still a spiral track. Still lasers and sensors. DVD-Rs use dyes. DVD-RWs use phase-change crystalline/amorphous materials. Whereas CDs use EFM, DVDs use EFMPlus, which is actually an 8-to-16 modulation.

From a forensics perspective, they are much the same as CDs. They age the same way. Scratches to the bottom can be managed the same way. But, because of the even tighter tolerances, recovery from any significant damage or defect is dramatically less likely, especially outside of a true data recovery lab.

Incidentally, DVD+RWs and DVD-RWs aren't the same thing. They are competitors of sorts. Ditto for DVD-R and DVD+R. The "plus formats" are essentially the same technology, but are licensed differently, sending the royalties for the patents to a different consortium of manufacturers. From a forensics perspective, we don't really care. But, if you get a DVD+R or DVD+RW, in the event of problems, make sure your player is compatible.

Blu-Ray Discs (BDs)

Let me be honest. I've not yet had reason to ponder these professionally. And, I have no first-hand knowledge of their lifetime, failure modes, etc. Most of what I think I know I read somewhere else or just assumed based on CD-xx and DVD technology.

Blu-ray discs have a bit density about 5x that of a DVD. BD-Rs, write-once disks similar to CD-Rs, use a similar organic dye to hold the bits. BD-REs, akin to CD-RWs, use a similar crystalline metal/glass alloy. The recording material is closer to the surface in Blu-ray discs, so they use a scratch-resistant coating to prevent damage. This strikes me as a potential vulnerability, since, by definition, it isn't thick enough to tolerate deep damage. It seems that it might also make polishing out any damage difficult or impossible, though perhaps scratches can be filled?

I've read what seem to be exaggerated claims about the lifetime of BDs. What to say? Dunno. My intuition about BD-R and BD-RE technology, at the least, is that they seem likely to suffer the same instabilities and ensuing aging as the similar dyes and materials used in CD-R and CD-RW technology.

Warning to all Readers

These are unrefined notes. They are not published documents. They are not citable. They should not be relied upon for forensics practice. They do not define any legal process or strategy, standard of care, evidentiary standard, or process for conducting investigations or analysis. Instead, they are designed for, and serve, a single purpose: to help students to jog their memory of classroom discussions and assist them in thinking critically about the issues presented. The author is certainly not an attorney and is absolutely not giving any legal advice.