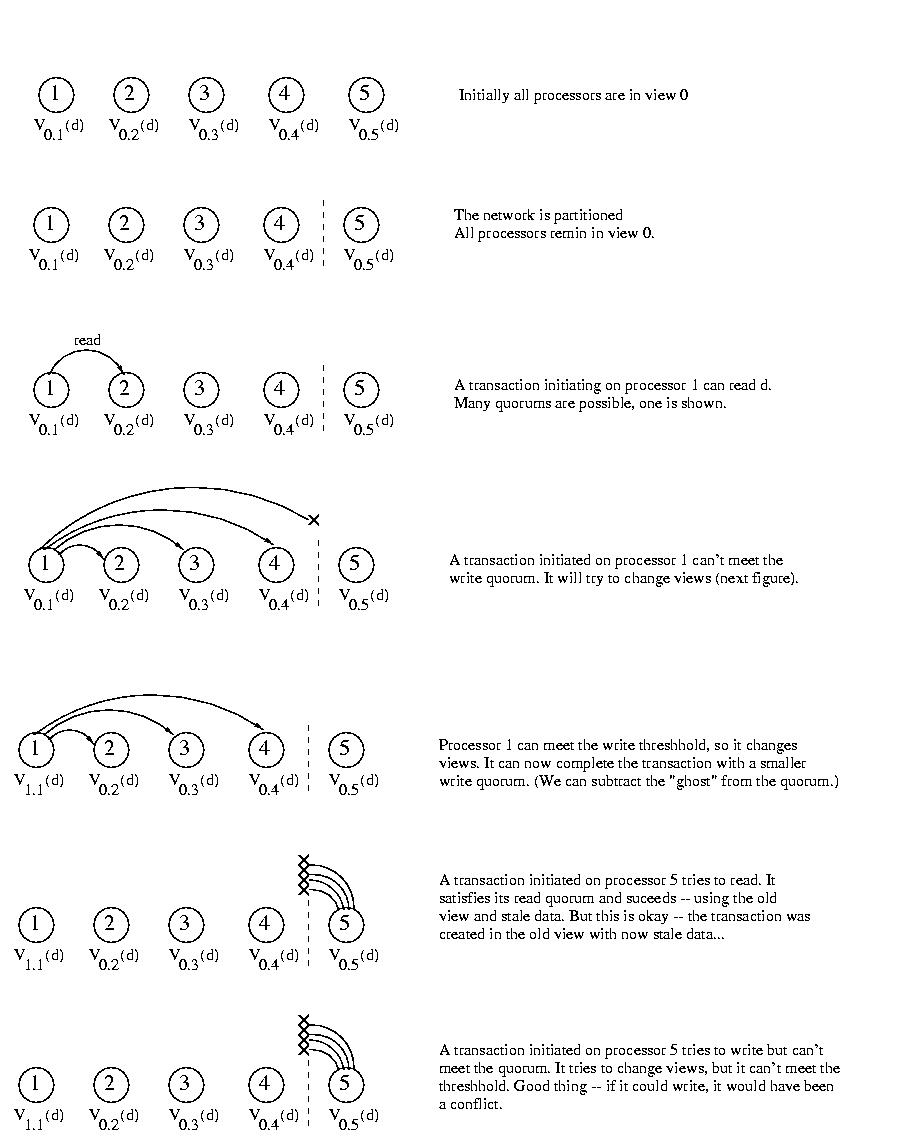

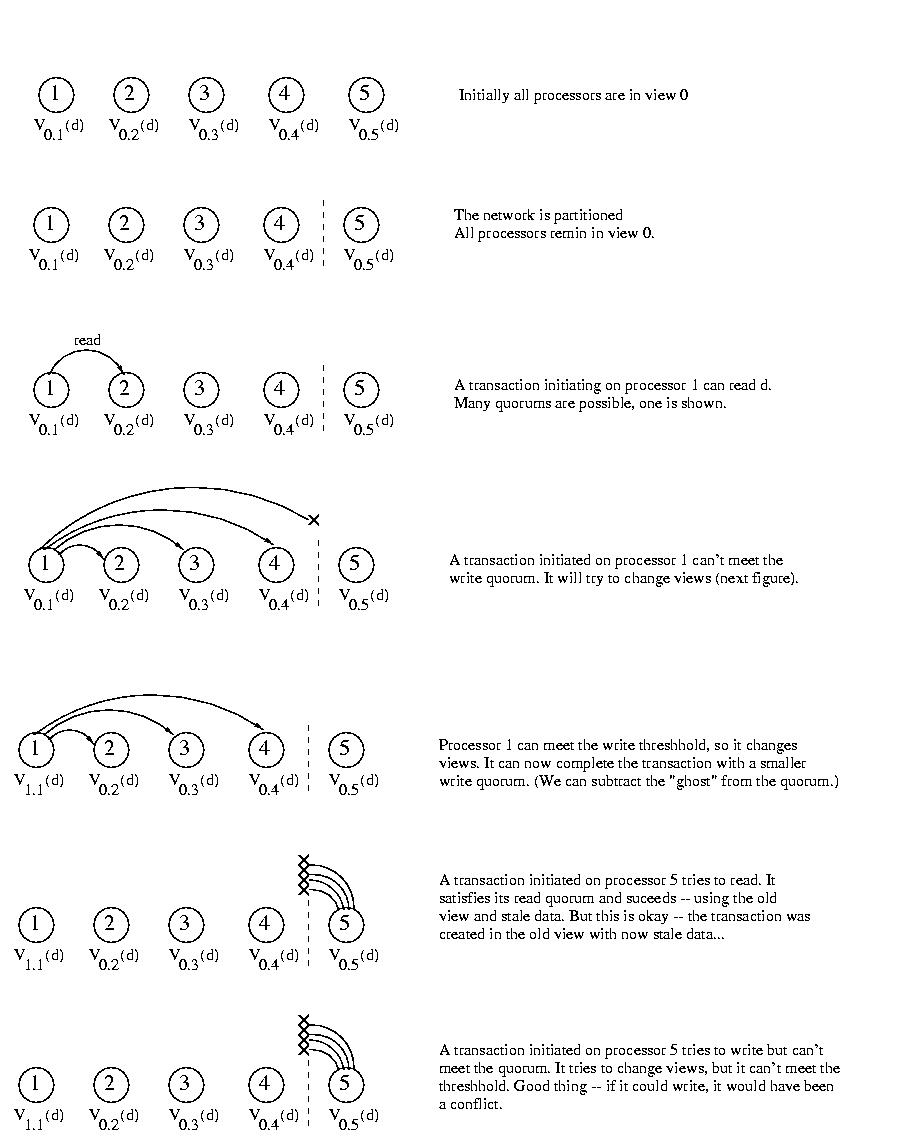

N = 5

w = 5

r = 1

Aw = 4

Ar = 2

Reading:

Introduction to View-based Quorums

Do you remember Voting with Ghosts (Van Renesse and Tannenbaum)? We said that this approach wasn't practical, because we couldn't tell dead processors from a partitioned network. It was possible that processors in two different partitions might achieve a quorum by using a ghost to account for each other. The result, when the partitioning was repaired, would be a write-write conflict.What would happen if there was some type of agreement among the processors such that processors partioned into a small group would "play dead?" Could processors in a sufficiently large partition safely account for them using ghosts?

Well, let's say that processors in a majority partition could account for unreachable processors using ghosts, but that processors in a minority partition would be well-behaved and do nothing that would complicate things later. If this were the case, only the processors in the majority partition could write, and the others would know which processors to contact upon repair for up-to-date information.

This approach would certainly work. But taking a straw poll before doing anything sounds expensive. And is a "majority wins" rule the only way? or can we do better? Let's take a look at the details.

Threshholds

Let's define two threshholds, one for read and another for write:

- Let Ar be the read threshhold

- Let Aw be the write threshhold

A partition will need to be bigger than the threshhold size in order to count ghosts toward a quorum. Processors in partitions smaller than the appropriate threshhold won't be allowed to do any harm. We'll discuss the details shortly. But first, let's try to put some bounds on Ar and Aw.

Bounding The Read Threshhold

Let's consider write-write conflicts. To avoid these in the event of a partitioning, we will need to ensure that at most one partition can write. to achieve this, we follow a rule similar to the one used for static write quorums:Aw > (N/2), where N is the total number of processorsCan we do better than this? Nope. If the threshhold size is less than a majority, we might have processors in each of two different threshholds accounting for each other with ghosts. We might get conflicting updates.

Bounding The Write Threshhold

This time we'll consider read-write conflicts. (There's no reason to consider read-read conflicts -- there really is no such thing.) it is certainly the case that if we can write to a partition, we can read from it. So Ar >= Aw is certainly safe. But can we do better than this? Perhaps.Much as was the case when selecting read and write quorums, we know that the threshholds must intersect to avoid read-write conflicts. If they didn't a read in one partition could account for processors in another partition using ghosts, while a ghost in the other partition accounted for the processors in the read's partition using ghosts. the read could get a stale value.

Ar + Aw > NThis read threshhold will protect against read-write conflicts, while the write threshhold defined earlier will protect against write-write conflicts.

Views

Well, there's one more little detail. This algorithm is called View-Based Quorums, so what is a view? A view is a processor's understanding of the processors in its partition. It is important to realize that this understanding is not necessarily up-to-date. A processor may have an outdated view of its partition.Why should a processor have an outdated view? Well, earlier I hinted that "taking a straw poll" before performing every transaction would be very expensive. So instead, we are only going to do that when necessary -- when we can't get a quorum for an operation.

In other words, if we try to read and can't get a quorum, or if we try to write and can't get a quorum, we try to change our view. Changing views involves checking the appropriate threshhold value and, if satisfied, counting ghosts toward the operation's quorum.

Views are per-processor -- different processors within the same partition may have different views. Views are also per data object. As strange as it may sound, given several objects repicated on the same set of servers, a processor may have a different view for each of the objects. This is allowed, because the views are only updated if necessary to achieve a quorum.

Views also have names. The names takes the form Vx.y(d), where x is the view sequence number, y is the processor number, and d is the data object.

Each time a processor changes the view of an object, it increases the sequence number. The sequence number can only advance.

How Does It Actually Work?

It works exactly as did static quorums. Except that failing to meet a quorum may not be terminal. If a processor cannot meet the quorum for an operation, it looks to see if it can meet the threshhold for that operation. If it can, it advances its view and accounts for the unreachable processors using ghosts. (Actually it just decreases the quorum by the number of unreachable processors).Transactions are initiated by processors that have a particular view of the data that they are operating on. These transactions must execute in the same view. If the view of the data at the time the transaction is executed is different than the view at the time it was created, the transaction fails. This is necessary to ensure that the transaction is operating with consistent data. If the view has changed, the data that was the basis for the transaction may have changed.

When a partitioning is repaired, the first processor to notice initiates an update. The view sequence number is updated to one past the highest view number in use. The transactions are serialized by sequence number. Ties are broken using the processor id. The race condition isn't important, because the transactions are serializable.

Why are the transaction necessarily serializable in this way? Because each transaction was created and executed with the same view of the data. This ensures the consistency of each individual transaction. The only trick after the partition is repaired is to ensured that the views are appropriately serialized. To do this, the transactions that are handling the updates must obtain a quorum to read or write during the transition.

In order to speed things along, a processor should initiate an update after servicing an update request. This makes sense, because the update request informs the processor that the network partion has been repaired and that global consistency can be restored. The system has the greatest flexibility to satisfy a quorum when each processor has the greatest number of other processors within its view.

Example

One very common configuaration is read-one/write-all. Let's take a look at this example using 5 processors.N = 5

w = 5

r = 1

Aw = 4

Ar = 2

Optimizations

We can actually relax the read-rule to allow a transaction to use any view of the data lower (older) than its own to form a read quorum. But if we do this, subsequent accesses to the data must be from transactions with a higher view. This is because a subsequent write from a transaction with a lower view could invalidate the data already read and delivered to the client. Subsequent reads must also be from a higher view, bevcause intermediate writes may have been invalidated.In a similar fashion, the write-rule can be relaxed to allow transactions to use objects in older views to form a write quorum, but subsequent reads and writes at lower levels must be rejected. This is because raising the view of the data may have prevented lower-level writes and as a consequence prevented lower-level reads, occuring at levels above the rejected writes, but below the relaxed-write to return a stale copy.

Let's Be Optimistic

The replica management techniques that we have discussed so far are said to be pessimistic techniques. This is because they sacrafice a lot of efficiency in order to prevent conflicts from occuring. Just like the pessimists that you and I know, they are very concerned about the bad things that can happen.Optimistic techniques assume that problems are far less likely and try to optimize for the common case. In some cases, they may allow for stale data or other inconsistencies.

Today we are going to talk about two such techniques. The first offers no guarantees at all, and the second allows us to detect conflicts, but doesn't always prevent them.

Best-Effort Replication

The replication methods that we have discussed so far ensure that stale data is never received by a client. In most applications, this is a useful requirment. But sometimes it is okay to deliver stale data, at least for a while. Consider a password database. A user is likely to remember the old password for a while after changing passwords. So if the password change doesn't immediately reach some host, a properly educated user will simply use the old password.It is important to note that even best-effort replciation strategies should provide protection against conflicts in the event of partitioning. It is the case that reading stale data is okay, but if a new value arrives, we must be able to determine whether it is an update, or a value more stale than our own.

Allowing best-effort consistency releases the requirement that writes occur to more than one site. For example, it is possible to update only one "master" site and allow that site to asynchronously update "slave" sites. It is also unnecessary to use a read quorum, since a stale value is acceptable.

Epidemic algorithms are one approach to best-effort replication control. This approach models the spread of disease. There are three types of systems:

- Infectious: Systems that know of an update and are trying to spread it

- Susceptible: A system that has not seen an update before and as a consequence can contract it.

- Immune: A system that has contracted an update and is consequently immune from getting the same update again, but that is no longer infections.

When a system learns of an update, it becomes infectious. An infectious system contacts other systems and lets them know about the update. Some of the systems that are contacted will be immune. Immune systems have already seen the update; they take no action. Others will be susceptible. When a susceptible systems is contacted by an infectius system, it updates its own copy fo the data, becomes infectious, and starts to contact other systems to spread the update. Eventually an infectious system stops trying to spread the update. Depending on the implementation, the system may become immune after some n number of attempts to spread the disease, after some m number of failures to spread the disease, or after its failure rate reaches some threshhold.

One characteristic of epidemic algorithms is that there is no guarantee that all systems will eventually receive the update. This is because the spread of the update is uncoordinated. Some processors simple may not be contacted. The longer a system remains infectious, the more systems will get the update and the more likely it is that all systems will get the update. But there are no guarantees.