January 14, 2010 (Lecture 2)

The Area We Call "Systems" -- And the Funny Creatures We Call "Systems People"

When I'm hanging out in the "Real world", people often ask me about my job.

I usually explain that I am a teacher. Everyone understands what a teacher

does. We talk for a living. Beyond that, I'm safe. Everyone knows, "Those

who can, do. Those who can't, teach."

When people ask me what I teach, I tell them, "Computer Science". Oddly

enough, they only hear the first word, "Computer". Sorry, y'all, I don't do

windows. You'll need IT for that. This brings me to two questions,

"What is the area we call Computer Systems?" and, "How does Distributed

Systems fit in?"

When I explain my area of interest to everyday folks, I like to tell them

that in "Systems" we view the computing landscape as commerce from the

perspective of the air traffic system or the system of highways and roadways.

There is a bunch of work that needs to get done, a bunch of resources that

need to be used to get it done, and a whole lot of management to make it

work.

And, like our view of commerce, it only gets interesting when it

scales to reach scarcity and when bad things happen. The world can't be

accurately described in terms of driveways, lemonade stands, and sunny days.

Instead, we care about how our roadways and airways perform during rush hour,

in the rain, when there is a big game, and, by the way, bad things happen to

otherwise good drivers along the way. In other words, our problem space

is characterized by scarcity, failure, interaction, and scale.

Distributed Systems, In Particular

"Systems people" come in all shapes and sizes. They are interested in such

problems as operating systems, networks, databases, and distributed systems.

This semester, we are focusing mostly on "Distributed systems", though

we'll touch on some areas of networks, and monolithic databases and

operating systems.

Distributed systems occur when the execution of user work involves

managing state which is connected somewhat weakly. In other words,

distributed systems generally involve organizing resources connected

via a network that has more latency, less bandwidth, and/or a higher

error rate than can be safely ignored.

This is a different class of problems, for example, than when the limiting

factors might include processing, storage, memory, or other units of work.

There is tremendous complexity in scheduling processes to make efficient use

of scarce processors, managing virtual memory, or processing information

from large attached data stores, as might occur in monolithic operating

systems or databases. It is also a different class of problems than managing

the fabric, itself, as is the case with networks.

Exploring the Model

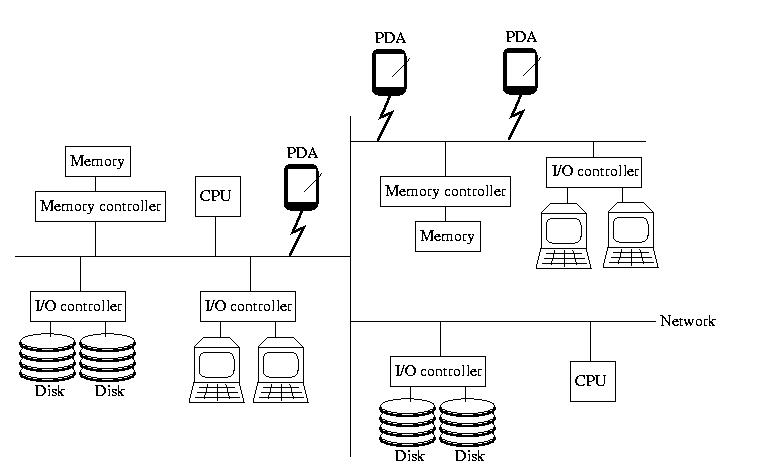

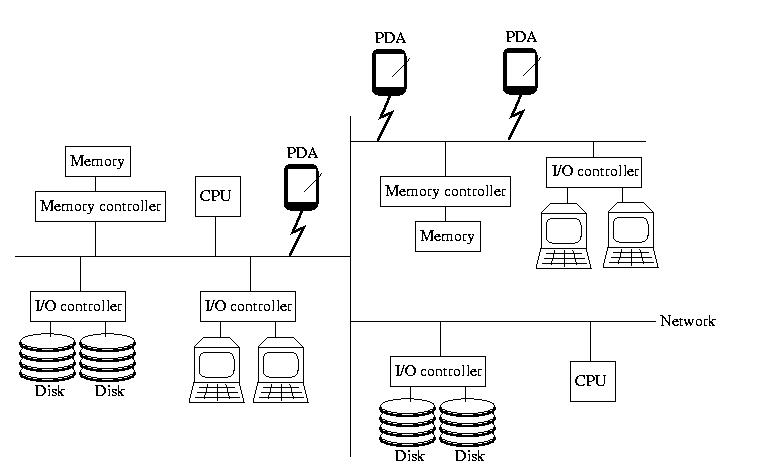

When I've taught Operating Systems, I've begun with a picture

that looks like the one shown here. If you didn't take OS, please don't

worry -- everything on the picture, almost, should be familiar to

you. It contains the insides of a computer: memory and memory

controllers, storage devices and their controllers, processors, and

the bus that ties them all together.

This time however, the bus isn't magical. It isn't a fast, reliable,

predictable communication channel that always works and maintains

a low latency and high bandwidth. Instead, it is a simple, cheap,

far-reaching commodity network that may become slow and bogged down

and/or lose things outright. It might become partitioned. And, it might

not deliver messages in the same order that they were sent.

To reinforce the idea that this is a commodity network, like the

Internet, I added a few PDAs to the picture this time. Remember,

the network isn't necessarily wired -- and all of the components

aren't necessarily of the same type.

Furthermore, there is no global clock or hardware support for

synchronization. And, to make things worse, the processors aren't

necessarily reliable, and neither is the RAM or anything else. For

those that are familiar with them, snoopy caches aren't practical,

either.

In other words, all of the components are independent, unreliable

devices connected by an unreliable, slow, narrow, and disorganized

network.
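
To make that model concrete, here is a minimal sketch of such a network as a toy simulation. The function name `unreliable_send`, the loss rate, and the use of sequence numbers are my own assumptions for illustration, not anything prescribed by the model itself. It only shows that messages can be dropped or arrive out of order, and that tagging them with sequence numbers lets a receiver notice.

```python
import random

def unreliable_send(messages, loss_rate=0.2, seed=42):
    """Simulate a lossy, reordering channel (hypothetical toy model)."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    # Drop each message independently with probability loss_rate.
    delivered = [m for m in messages if rng.random() >= loss_rate]
    # Arrival order need not match send order.
    rng.shuffle(delivered)
    return delivered

# Tag each message with a sequence number before sending.
sent = [(seq, f"msg-{seq}") for seq in range(10)]
received = unreliable_send(sent)

# The receiver uses the sequence numbers to restore order and detect loss.
in_order = sorted(received)
missing = sorted(set(range(10)) - {seq for seq, _ in received})

print("arrival order:", [seq for seq, _ in received])
print("missing:", missing)
```

Real protocols do much more than this (acknowledgements, retransmission, timeouts), but the sketch captures why we can't treat the network like a bus.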

What's the Good News?

The bottom line is that, despite the failure, uncertainty, and

lack of specialized hardware support, we can build and effectively

use systems that are an order of magnitude more powerful. In fact, we can

do this while providing a more available, more robust, more convenient

solution. This semester, we'll learn how.

Distributed Systems vs. Parallel Systems

Often we hear the terms "Distributed System" and "Parallel System."

What is the difference?

Not a whole lot and a tremendous amount -- all at the same time.

"Distributed System" often refers to a system that is to be used by

multiple (distributed) users. "Parallel System" often has the connotation

of a system that is designed to have only a single user or user process.

Along the same lines, we often hear about "Parallel Systems" for

scientific applications, but "Distributed Systems" in e-commerce or

business applications.

"Distributed Systems" generally refer to a cooperative work environment,

whereas "Parallel Systems" typically refer to an environment designed to

provide the maximum parallelization and speed-up for a single task.

But from a technology perspective, there is very little distinction.

Does that suggest that they are the same? Well, not exactly. There are

some differences. Security, for example, is much more of a concern in

"Distributed Systems" than in "Parallel Systems". If the only goal of

a supercomputer is to rapidly solve a complex task, it can be locked

in a secure facility, physically and logically inaccessible -- security

problem solved. This is not an option, for example, in the design of

a distributed database for e-commerce. By its very nature, this system

must be accessible to the real world -- and as a consequence must

be designed with security in mind.

Abstraction

We'll hear about many abstractions this semester -- we'll spend a great

deal of time discussing various abstractions and how to model them in

software. So what is an abstraction?

An abstraction is a representation of something that incorporates the

essential or relevant properties, while neglecting the irrelevant details.

Throughout this semester, we'll often consider something that exists in the

real world and then distill it to those properties that are of concern to us.

We'll often then take those properties and represent them as data structures

and algorithms that represent the "real world" items within our software

systems.

The Task

The first abstraction that we'll consider is arguably the most important

-- a representation of the work that the system will do on behalf of a

user (or, perhaps, itself). I've used a lot of different words to describe

this so far: task, job, process, &c. But I've never been very specific about

what I've meant -- to be honest, I've been a bit sloppy.

This abstraction is typically called a task. In a slightly different

form, it is known as a process. We'll discuss the subtle difference when

we discuss threads. The short version of the difference is that a task is

an abstraction that represents the instance of a program in execution, whereas

a process is a particular type of task with only one thread of control.

But, for now, let's not worry about the difference.

If we say that a task is an instance of a program in execution, what

do we mean? What is an instance? What is a program? What do we mean by

execution?

A program is a specification. It contains definitions of what type of data is

stored, how it can be accessed, and a set of instructions that tells the computer

how to accomplish something useful. If we think of the program as a

specification, much like a C++ class, we can think of the task as an instance

of that class -- much like an object built from the specification provided by

the program.

So, what do we mean by "in execution?" We mean that the task is a real

"object" not a "class." Most importantly, the task has state

associated with it -- it is in the process of doing something or changing

somehow. Hundreds of tasks may be instances of the same program, yet they

might behave very differently. This happens because the tasks were

exposed to different stimuli and their state changed accordingly.
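
The class/object analogy can be sketched in a few lines. The class name `Program` and its `handle` method are hypothetical, chosen only to mirror the wording above; this is an analogy, not how an OS actually stores programs.

```python
class Program:
    """The specification: what data is stored and what to do with it."""

    def __init__(self):
        self.total = 0  # state that belongs to one instance, i.e., one task

    def handle(self, value):
        # The instance's state changes in response to a stimulus.
        self.total += value

# Two "tasks" are instances of the same "program" ...
task_a, task_b = Program(), Program()

# ... but they are exposed to different stimuli.
for value in (1, 2, 3):
    task_a.handle(value)
task_b.handle(10)

print(task_a.total, task_b.total)  # prints: 6 10
```

Same specification, different state: that is all we mean by "instance of a program in execution."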

Representing a Task in Software

How do we represent a task within the context of an operating system?

We build a data structure, sometimes known as a task_struct

or (for processes) a Process Control Block (PCB) that contains

all of the information our OS needs about the state of the task. This

includes, among many other things:

- content of registers (like the PC)

- content of the stack

- memory pages/segments

- open files

When a context switch occurs, it is this information that needs to be

saved and restored to change the executing process.
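
As a rough sketch of the idea, assuming a greatly simplified machine: the field names below mirror the list above, while the `CPU` class and the `context_switch` function are hypothetical stand-ins for illustration. A real kernel structure holds far more and is manipulated in privileged code.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    """Simplified Process Control Block (illustrative fields only)."""
    pid: int
    registers: dict = field(default_factory=lambda: {"pc": 0, "sp": 0})
    stack: list = field(default_factory=list)
    pages: list = field(default_factory=list)
    open_files: list = field(default_factory=list)

class CPU:
    """Hypothetical processor: just a register file."""
    def __init__(self):
        self.registers = {"pc": 0, "sp": 0}

def context_switch(cpu, old, new):
    """Save the running process's registers, then restore the next one's."""
    old.registers = dict(cpu.registers)  # save state of the outgoing process
    cpu.registers = dict(new.registers)  # restore state of the incoming one

cpu = CPU()
a = PCB(pid=1)
b = PCB(pid=2, registers={"pc": 100, "sp": 8})

cpu.registers["pc"] = 42      # process a has been running for a while
context_switch(cpu, a, b)     # the OS switches from a to b
print(a.registers["pc"], cpu.registers["pc"])  # prints: 42 100
```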

The Life Cycle of a Process (For our present purposes, a Task)

Okay. So. Now that we've got a better understanding of the role of the

OS and the nature of a system call, let's move in the direction of this

week's lab. It involves the management of processes. So, let's begin

that discussion by considering the lifecycle of a process:

A newly created process is said to be ready or runnable.

It has everything it needs to run, but until the operating system's

scheduler dispatches it onto a processor, it is just waiting.

So, it is put onto a list of runnable processes. Eventually, the OS

selects it, places it onto the processor, and it is actually

running.

If the timer interrupts its execution and the OS decides that it

is time for another process to run, the other process is said to

preempt it. The preempted process returns to the ready/runnable

list until it gets the opportunity to run again.

Sometimes, a running process asks the operating system to do

something that can take a long time, such as read from the disk

or the network. When that happens, the operating system doesn't

want to force the processor to idle while the process is waiting

for the slow action. Instead, it blocks the process.

It moves the process to a wait list associated with the slow resource.

It then chooses another process from the ready/runnable list to run.

Eventually, the resource, via an interrupt, will let the OS know that

the process can again be made ready to run. The OS will do what it

needs to do, and ready, a.k.a., make runnable, the previously blocked

process by moving it to the ready/runnable list.
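
The ready/running/blocked part of the life cycle can be written down as a small state machine. This is a minimal sketch: the event names (`dispatch`, `preempt`, `block`, `wakeup`) are labels I've assumed for the transitions described above, not the names any particular OS uses.

```python
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

# Legal transitions: (current state, event) -> next state.
TRANSITIONS = {
    (State.READY, "dispatch"): State.RUNNING,   # scheduler picks the process
    (State.RUNNING, "preempt"): State.READY,    # timer fires, another runs
    (State.RUNNING, "block"): State.BLOCKED,    # waits on a slow resource
    (State.BLOCKED, "wakeup"): State.READY,     # interrupt says it can go on
}

def step(state, event):
    """Return the next state, or raise if the transition is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {event} from {state.name}") from None

state = State.READY
for event in ["dispatch", "preempt", "dispatch", "block", "wakeup"]:
    state = step(state, event)
print(state.name)  # prints: READY
```

Notice that a blocked process never goes straight back to running; it has to pass through the ready list first.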

Eventually, a process may die. It might call exit under the programmer's

control, in which case it is said to exit, or it might end

via some exception, in which case the more general term, terminated

might be more descriptive. When this happens, the process doesn't

immediately go away. Instead, it is said to be a zombie. The

process remains a zombie until its parent uses wait() or waitpid() to

collect its status -- and set it free.

If the parent died before the child, or if it died before waiting for

the child, the child becomes an orphan. Should this happen, the

OS will reparent the orphan process to a special process called

init. Init, by convention, has pid 1, and is used at boot time

to start up other processes. But, it also has the special role of

calling wait() for all of the orphans that are reparented to it. In this

way, all processes can eventually be cleaned up. When a process is

set free by a wait()/waitpid(), it is said to be reaped.
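
Here is a small sketch of a parent reaping its child, assuming a POSIX system (Python's `os.fork` is not available on Windows). The helper name `spawn_and_reap` and the exit code are made up for illustration; the calls themselves are thin wrappers over the fork() and waitpid() system calls.

```python
import os

def spawn_and_reap(exit_code=7):
    """Fork a child that exits at once, then collect its status."""
    pid = os.fork()
    if pid == 0:
        # Child: terminate immediately without running any cleanup handlers.
        os._exit(exit_code)

    # Parent: once the child has exited, it remains a zombie until we wait.
    reaped_pid, status = os.waitpid(pid, 0)  # blocks until the child is reaped
    assert reaped_pid == pid

    if os.WIFEXITED(status):           # did the child end by calling exit?
        return os.WEXITSTATUS(status)  # recover the code it passed to exit
    return None                        # otherwise it was terminated some other way

print(spawn_and_reap())
```

If the parent never made the `waitpid` call, the child would linger as a zombie until the parent itself went away and init picked up the job.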

The Conceptual Thread

In our discussion of tasks we said that a task

is an operating system abstraction that represents the state of a

program in execution. We learned that this state included such things as

the registers, the stack, the memory, and the program counter, as well

as software state such as "running," "blocked", &c. We also said that

the processes on a system compete for the system's resources, especially

the CPU(s).

Another operating system abstraction is called the thread.

A thread, like a task, represents a discrete piece of work-in-progress.

But unlike tasks, threads cooperate in their use of resources and in fact

share many of them.

We can think of a thread as a task within a task. Among other things,

threads introduce concurrency into our programs -- many threads

of control may exist. Older operating systems didn't support

threads. Instead of tasks, they represented work with an abstraction

known as a process. The name process, e.g. first do ___,

then do ____, if x then do ____, finally do ____, suggests only

one thread of control. The name task suggests a more general

abstraction. For historical reasons, colloquially we often say

process when we really mean task. From this point

forward I'll often say process when I mean task --

I'll draw our attention to the difference, if it is important.
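
To see threads sharing the resources of a single task, consider this minimal sketch. The counter, the lock, and the thread and iteration counts are arbitrary choices of mine; the point is only that every thread reads and writes the same memory, so they must cooperate.

```python
import threading

counter = 0               # shared by every thread in this task
lock = threading.Lock()   # the threads cooperate through this lock

def worker(n):
    """Increment the shared counter n times."""
    global counter
    for _ in range(n):
        with lock:        # only one thread updates the counter at a time
            counter += 1

threads = [threading.Thread(target=worker, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()              # wait for all four threads of control to finish

print(counter)  # prints: 4000
```

Four separate processes would each have had their own private `counter`; four threads share one.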

Tasks in Distributed Systems

In distributed systems, we find that the various resources needed to

perform a task are scattered across a network. This blurs the distinction

between a process and a task and, for that matter, a task and a thread.

In the context of distributed systems, a process and a thread

are interchangeable terms -- they represent something that the user wants

done.

But, task has an interesting and slightly nuanced meaning. A

task is the collection of resources configured to solve a

particular problem. A task contains not only the open files and

communication channels -- but also the threads (a.k.a. processes).

Distributed Systems people see a task as the environment in which

work is done -- and the thread (a.k.a. process) as the instance of

that work, in progress.

I like to explain that a task is a factory -- all of the means of production

scattered across many assembly lines. The task contains the machinery and

the supplies -- as well as the processes that are ongoing and making use of them.