Return to the lecture notes index

September 31, 2014(Lecture 11)

The CAP/Brewer Conjecture

It is commonly desirable for distributed systems to exhbit

Consistency, Availability, and Partition tolerance.

- By consistency we mean that all participating systems share

the same view of the data. For example, if one system observes the

value five, all systems would, if they looked, observe the value

five at that time. None, for example, would be more stale or more

fresh than others.

- By Availability we mean that the system is able to respond

quickly enough for the user's needs. For example, if a Web page

times out, or users abort before seeing the results, it is not

available.

- By Partition tolerance we mean that, in the event of the

failure or isolation of some participants, the other participants

can continue to do whatever they can. For example, the loss of

certain nodes might necessitate disconnecting certain clients or

the inability to return certain results -- but should not unduely

interfere with the ability of the functioning nodes to service

clients and/or return results.

The CAP Conjecture, attributable to Eric Brewer of UC-B, is that

we can build systems that guarantee up to two of these properties -- but

not necessarily all three. Let's consider why.

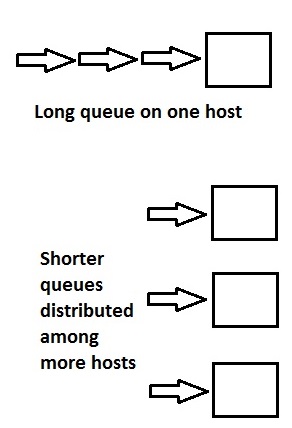

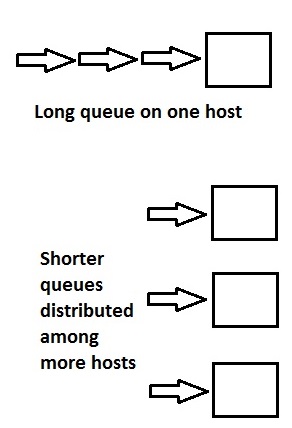

Imagine a system with too much of a queue. We can fix that by adding

more systems and dividing the work as seen below:

The picture above is nice, because we have availability. And, in

the event of a partitioning, the reachable nodes can still respond, so we

have partition tolerance. The problem, though, is that we've lost

consistency. Each host is operating independently, so the values can

diverge if updates differ. This is the "AP"/"PA" case.

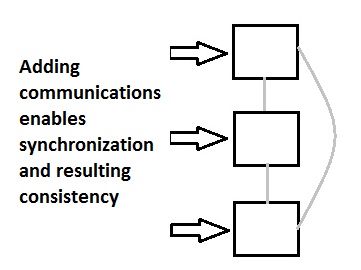

To add back consistency, we'll need to have communications among the hosts

such that they can sync values:

But, notice what has happened. We gained consistency through communication.

If we break that communication, we're back where we started. So, we now

have consistency and availability, but not also partition tolerance. This

is the "CA"/"AC" case.

What about the "PC"/"CP" case? How can we have consistency and

partition tolerance without availability, at least in any

meaningful way? One ansewer, which is I think a good example, is that we

enable reads, but not updates. Now we have sacraficed the avaialability

of writes in order to ensure maintaining consistency in light of a

partitioning.

Note: You'll often see what I've called the "CAP Conjecture"

described as the "CAP Theorem". I do not believe this to be correct.

The conjecture, is, I think, important because it allows us to

frame the trade-offs we're likely to see in real world systems. Attempts

to "prove" the conjecture into a theorem have introduced constraints and

formalisms that, I think, separate it from the generally applicable

cases. As it turns out, there is a natural tension at work here: One

can only prove something that is defined, but fuzzy edges makes things

more applicable and gives them a broader reach.

ACID Transactions

Traditional database systems have relied upon bundling work into

transactions that have the ACID properties. In so doing,

they guarantee consistency at the expense of availability and/or

partition tolerance.

ACID is an acronym for:

- Atomicity

- Consistency (serializability)

- Isolation

- Durability

- Acid - "All or nothing"

- Consistency -- This implies two types of consistency. It implies

that a single system is consistent and that there

is consistency across systems. In other words,

if $100 is moved from one bank account to another,

not only is it subtracted from one and added to

another on one host -- it appears this way everywhere.

It is this property that allows one transaction to

safely follow another.

- Isolation - Regardless of the level of concurrency, transactions

must yields the same results as if they were executed

one at a time (but any one of perhaps several orderings).

- Durability - permanance. Changes persist over crashes, &c.

Transactions, Detail and Example

Transactions are sequences of actions such that all

of the operations within the transaction succeed (on all recipients)

and their effects are permanantly visible, or none of none

of the operations suceed anywhere and they have no visible effects;

this might be because of failure (unintentional) or an abort (intentional).

Characterisitically, transactions have a commit point. This is the

point of no return. Before this point, we can undo a transaction. After this

point, all changes are permanant. If problems occur after the commit point,

we can take compensating or corrective action, but we can't wave a magic

wand and undo it.

Banking Example:

Plan:

A) Transfer $100 from savings to checking

B)Transfer $300 from money market to checking

C) Dispense $350

Transaction:

1. savings -= 100

2. checking += 100

3. moneymkt -= 300

4. checking += 300

5. verify: checking > 350

6. checking -= 350

7.

|

8. dispense 350

Notice that if a failure occurs after points 1 & 3 the

customer loses money.

If a failure occurs after points 2, 4, or 5, no money is lost,

but the collectiopn of operations cannot be repeated and the result

in not correct.

An explicit abort might be useful at point 6, if the test fails

(a negative balance before the operations?)

Point 7 is the commit point. Noticve that if a failure occurs after

point 7 (the ATM machine jams), only corrective action can be

taken. The problems can't be fixed by undoing the transaction.

Distributed Transactions and Atomic Commit Protocols

Often times a transaction will be distributed across several systems.

This might be the case if several replicas of a database must remain

uniform. To achieve this we need some way of ensuring that the

distributed transaction will be valid on all of the systems or none

of them. To achieve this, we will need an atomic commit protocol.

Or a set of rules, that if followed, will esnure that the transaction

commits everywhere or aborts everwhere.

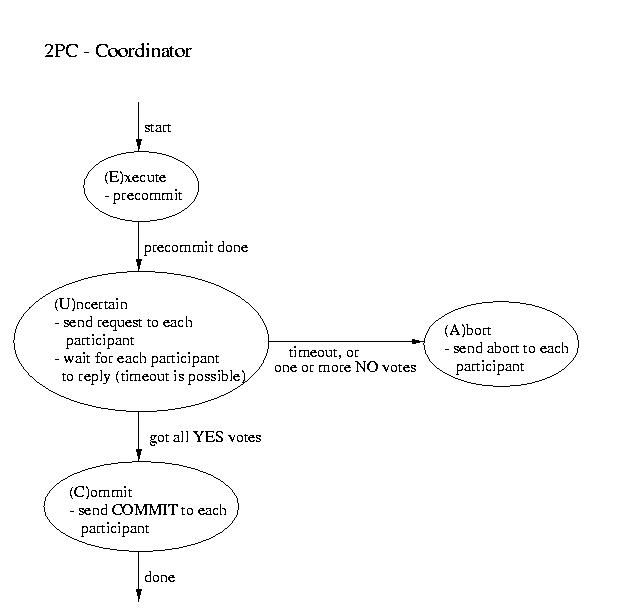

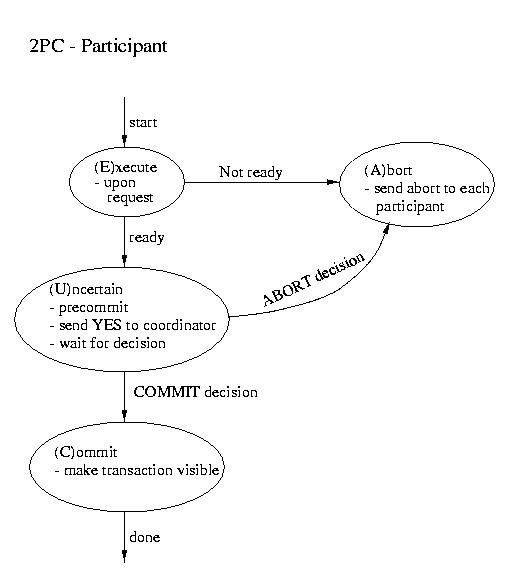

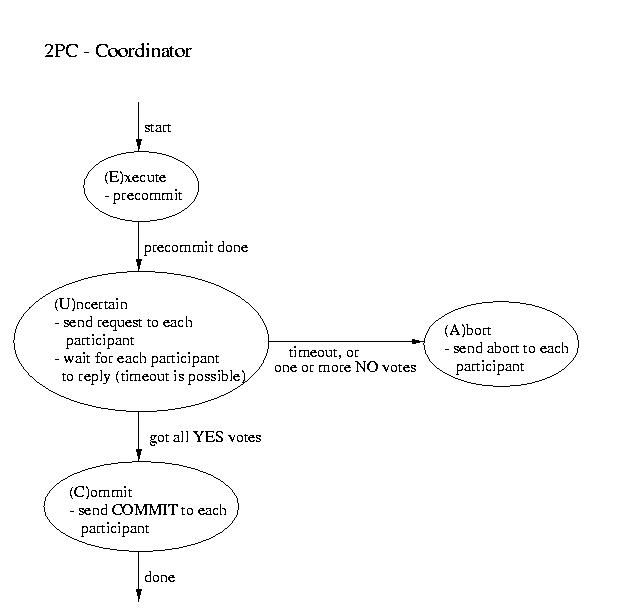

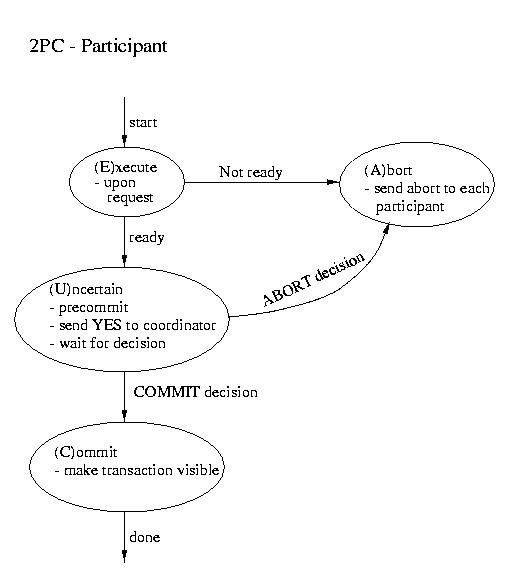

Two Phase Commit (2PC)

The most commonly used atomic commit protocol is two-phase commit.

You may notice that is is very similar to the protocol that we used

for total order multicast. Whereas the multicast protocol used a

two-phase approach to allow the coordinator to select a commit time

based on information from the participants, two-phase commit lets the

coordinator select whether or not a transaction will be committed

or aborted based on information from the participants.

| Coordinator | Participant |

|---|

| ----------------------- Phase 1 ----------------------- |

|---|

- Precommit (write to log and.or atomic storage)

- Send request to all participants

|

- Wait for request

- Upon request, if ready:

- Precommit

- Send coordinator YES

- Upon request, if not ready:

|

Coordinator blocks waiting for ALL replies

(A time out is possible -- that would mandate an ABORT) |

|---|

| ----------------------- Phase 2 ----------------------- |

|---|

|

This is the point of no return!

- If all participants voted YES then send commit to

each participant

- Otherwise send ABORT to each participant

|

Wait for "the word" from the coordinator

- If COMMIT, then COMMIT (transaction becomes visible)

- If ABORT, then ABORT (gone for good)

|

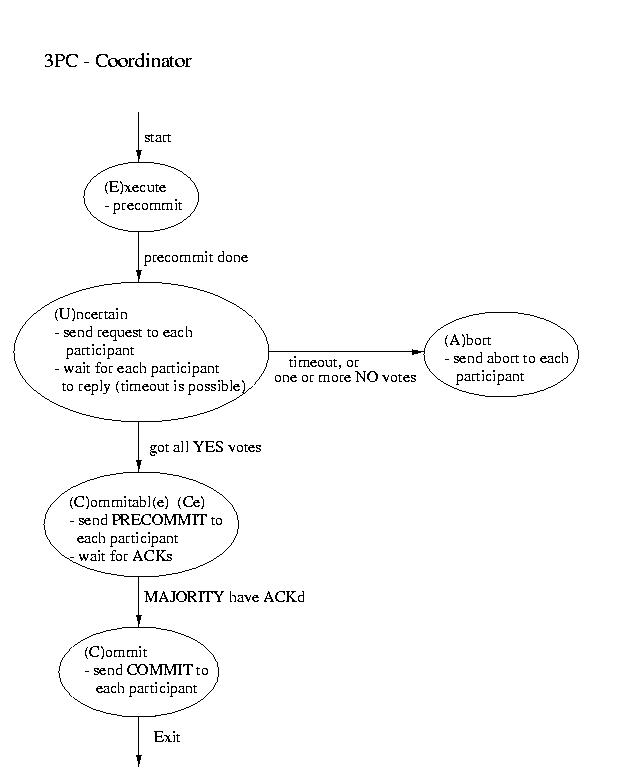

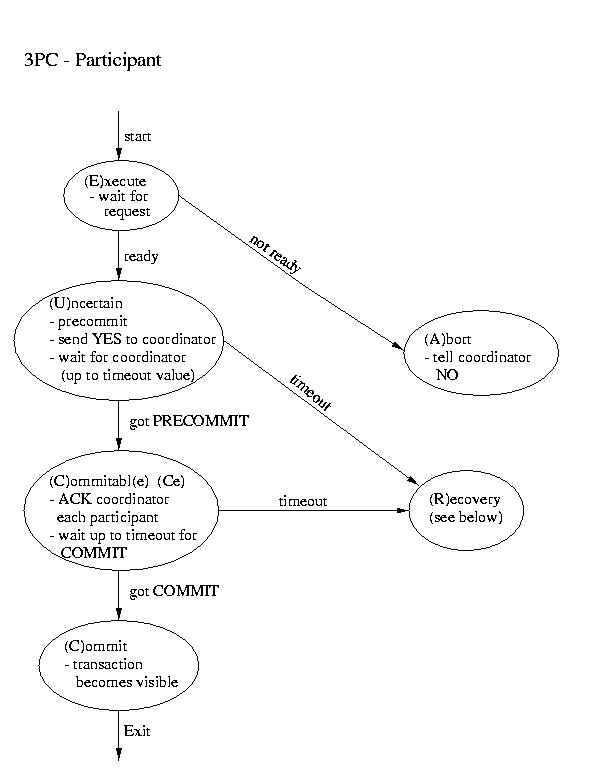

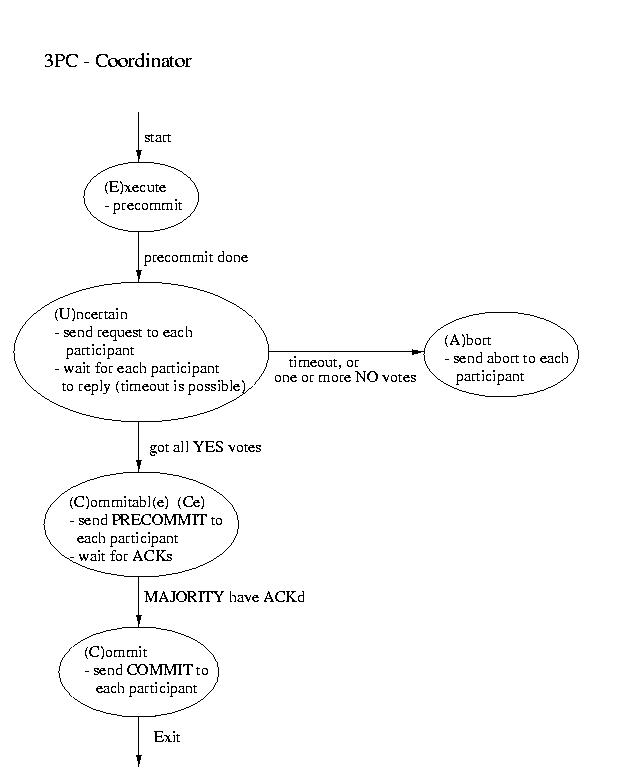

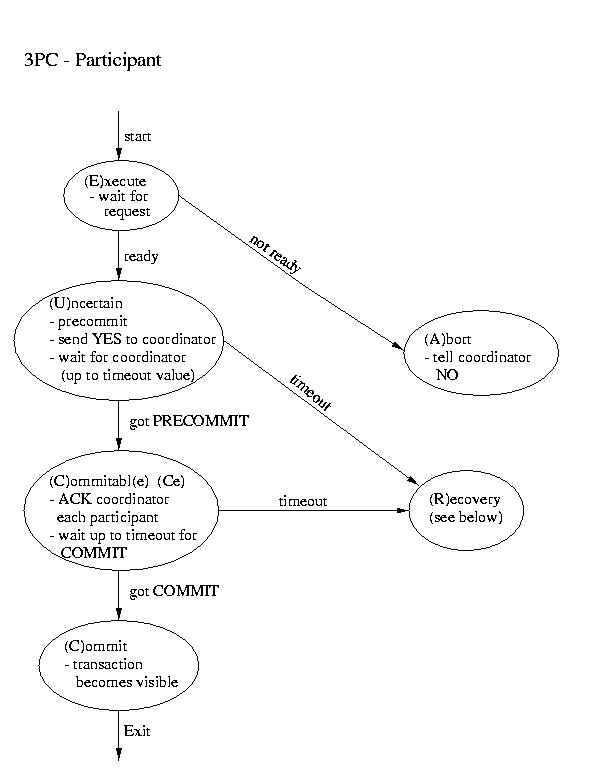

Three-phase Commit

Another real-world atomic commit protocol is three-pahse commit (3PC).

This protocol can reduce the amount of blocking and provide for more

flexible recovery in the event of failure. Although it is a better choice

in unusually failure-prone enviornments, its complexity makes 2PC the

more popular choice.

Recovery in 3PC

If the participant finds itself in the (R)ecovery state, it

assumes that the coordinator did not respond, because it failed. Although

this isn't a good thing, it may not prove to be fatal. If a majority

of the participants are in the uncertain and/or commitable states, it

may be possible to elect a new coordinator and continue.

We'll discuss how to elect a new coordinator in a few classes. So, for now,

let's just assume that this happens auto-magically. Once we have a new

coordinator, it polls the participants and acts accordingly:

- If any participant has aborted, it sends ABORTs to all

(This action is mandatory -- remember "all or none").

- If any participant has committed, it sends COMMIT to all.

(This action is mandatory -- remember "all or none").

- If at least one participant is in the commitable state

and a majority of the participants are commitable or

uncertain, send PRECOMMIT to each participant and proceed

with "the standard plan" to commit.

- If there are no committable participants, but more than half

are uncertain, send a PREABORT to all participants. Then

follow this up with a full-fledged ABORT when more than

half of the processes are in the abortable state. PRECOMMIT and

abortable are not shown above, but they are complimentary to

COMMIT and commitable. This action is necessary, because

an abort is the only safe action -- some process may have

aborted.

- If none of the above are true, block until more responses are

available.

Two-Phase Locking

Transaction, by their nature, play with many different, independent

resources. It is easy to imagine that, in our quest for a high

throughput, we process two transactions in parallel that share

resources.

This can generate an obvious problem for "Isolation". We need to ensure

that the results are consistent with the two (or more) concurrent transactions

being commited in some order. So we can't allow them to make uncoordinated

use of the resources.

So, let's asusme that, while any transaction is writing to a resource, it

is locked, preventing any other transaction from writing to it. Now,

a transaction can acquire all of the locks it needs, complete the mutation,

and release the locks. This scheme prevents the corruption of the resources

-- but opens us up to another problem: deadlock.

Let's imagine that one transaction wants to access resource A, then C, then

B. And, other concurrent transaction wants to access resource B, then A, then

C. We've got a problem if the one of the transactions grabs resources A and

C, and the other grabs resources B. Neither transaction can complete. One is

hold A and C, waiting for the other to release B. And, the other is holding

B, while waiting for C and A. What we have here is known as circular

wait, which is one of the four "necessary and sufficient" conditions

for deadlock, usually captured as:

- Non-sharable resource, e.g. mutual exclusion

- Non-preemptable (no police, or even big sticks, to settle disputes)

- Hold and wait usage (holding a resource while needing another)

- Circular wait (hold and wait + a circular dependency chain)

Fortunately, circular-wait is often very easy to attack. If the resources

are enumerated in a consistent way across users, e.g. A, B, C, D, ...Z,

and each user requests resources only in increasing order (without

wrap-around), circular wait is not possible. One can't wait on a lower

resource than one already holds, so the depednency chain can't be

circular.

This observation is the basis of a two-phase lock. In the first phase,

a transaction acquires -all- of the locks it needs in strictly -increasing-

order. It then uses the resources. In the last phase, it shrinks,

or releases locks. This universal ordering in which all users acquire

resources prevents deadlock.

In practice, transactions often are said to begin phase-1 (growing) when

they start and end phase-1 when they are done processing and are ready to

commit. Phase-2 begins (and ends) upon the commit. So, the essential

part of 2PL is, as you might guess, the serial order in which the locks

are acquired. The two phases are, in practice, mostly imaginary.

Safe Schedules

Transactions must execute as if in isolation. That doesn't

necessarily prohibit concurrency among transactions -- it just

implies that this concurrency shouldn't have any effect on the

results or the state of the system. In fact, as long as the results

and other state are the same as for some serial execution of the

transactions, the transactions can be interleaved, executed concurrently,

or btoh.

Transaction processing systems (TPSs) contain a

transaction scheduler that dispatches the transactions and

allows them to execute. This scheduler isn't necessarily FIFO,

and it doesn't necessarily dispatch only one at a time. Instead,

it tries to maximize the amount of work that gets done. One

popular measure of the performance of a TPS is the number of

transactions per second (TPS). Yes, unfortuantely, TPS

is also its abbreviation.

In discussing the scheduler, it is helpful to ask the question,

"What is a schedule?" It is an ordering of events. The transaction

scheduler's job is to execute the indivdual operations that compose

the transactions in an order that is efficient and preserves the property

of isolation. As a result, it is the schedule of individual operations

that is our concern.

Safe Concurrency: Serial Schedules and Serializability

A serial schedule is a schedule that executes all of the operations

from one transaction, before moving on to the operations of another

transaction. In other words the transactions are executed in series.

An interleaved schedule is a schedule in which the operations

of an individual transaction are executed in order with respect to the

same transaction, but without the restriction that the transactions

be scheduled as a whole. In other words, interleaving allows the scheduling

of any operation, as long as the operations of the same transaction are

not reversed.

Some interleaved schedules are safe, whereas other way result in violations

of the isolation property. Safe interleaved schedules are known as

serializable schedules. This is because an interleaved schedule is

only safe if it is equivalent to a serial schedule -- that's why

they call it serial-izable.

What did I mean when I wrote, is equivalent? An interleaved

schedule is equivalent to a serial schedule, if transactions which

containing conflicting operations are not interleaved. Operations are

said to be conflicting if the results differ depending on their

order.

This means that an interleaved schedule is serializable if, and only if,

each pair of operations occurs in the same order as they would in

some serial schedule.

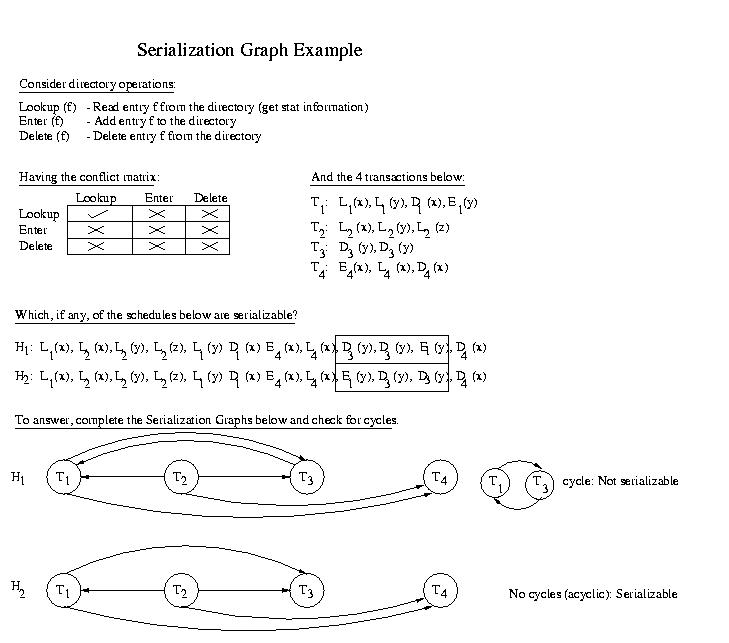

Serializability Graphs

We can see if a schedule is serializable by building a serialzability

graph. The Fundamental Theorem of Serializability states

that a schedule H is serializable, if and only if, SG(H) is acyclic.

So how do we build a serializability graph?

- Create a node for each transaction

- Draw an edge from Ti to Tj if and only if

some operation in Ti conflicts with an operation in

Tj and the operation in Ti occurs before

the operation in Tj in the given schedule. The example

belwo will make this a bit clearer.

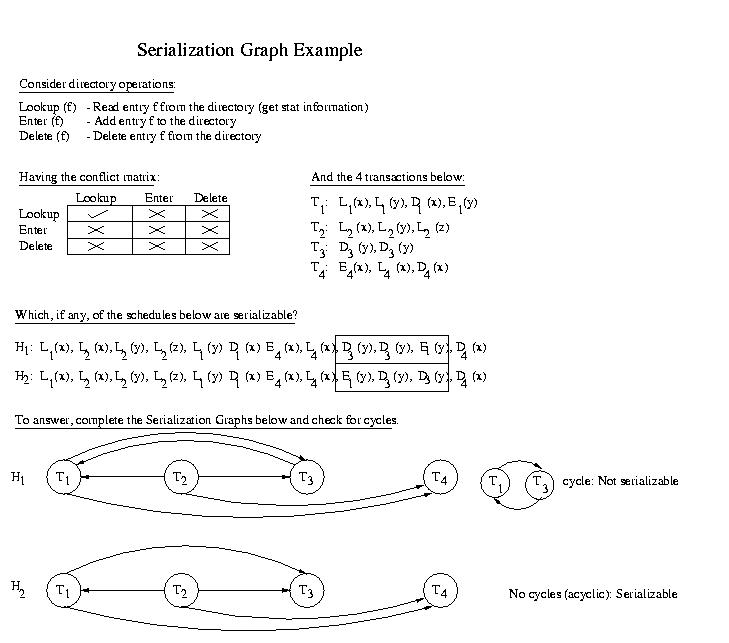

Example: Directory Operations

Let's take a careful look and make sure that we understand the source of

the problem in schedule H1. The fragment below shows only

the relevant portion of the schedule:

. . . L1(x) . . . L1(y)

. . . D3(y) . . . D3(y)

. . . E1(y) . . .

If we look at the fragment, we see that L1(x) conflicts

with D3(y). Since L1(x) occurs before

D3(y) in H1, an equivalent serial schedule

must execute T1 before T3. But if we look further

ahead in the trace we see that D3(y) occurs before

E1(y). Similarly, since these operations conflict, it

implies that T3 must occur before T1 in an

equivalent serial schedule. Both of these statments cannot be true.

If T1 executes before T3, T3 cannot

execute before T1. This schedule cannot be converted to an

equivalent serial schedule. It is not serializable -- it is not safe.

ACID semantics require a lot of effort and communication. This is worth it

when the answer needs to be correct. But, in many cases, this just isn't

the case. In many real-world situations we can tolerate some staleness

and inconsistency.

In class, I gave the example of the "quantity available" an online store

displays for an item via its online catalog and shopping cart. If you are

buying one or two items, does it matter if they tell you 450 or 452 are

available? In this case, a slightly slate value doesn't matter, right?

When does it matter -- when you check out. If they take your money, you'd

better have your goods. Have you ever went to check out and gotten an

error, "Ooops. Your items sold!". Did it really just sell, that very

moment? Or, had it been sold the whole time? You don't know. What you do

know is that it doesn't matter -- you can't have it. This is a case where

most lookups can be a little stale -- and, only at the very end, do we

need the "correct" answer.

The idea that we can trade off correctness for time, effort, and

availability is a good one, as is the observation that favoring complete

correctness and consistency at any cost, may be an unnecssary extreme --

depending upon the application.

You'll occasionally hear or read of the acronym BASE. This

acronym captures one way of thinking about "good enough":

- Basically available means that small failures don't

generate large disabilities. It is the same idea as what we call

"soft failure" vs "hard failure", but with the added emphasis that

a few failures in a large scale system shouldn't really be noticable.

- Soft state is usually intended to convey state that can be

generated or refreshed upon demand, rather than necessarily being

stored as "hard state". But, in this case, it is being used to

convey that values, even after written, will continue to change

without any explicit user request. Specifically, they'll propagate

out slowly.

- Eventual consistency conveys the idea that, although

the system might be inconsistent for some time after an update,

it will eventually converge to consistency. Without this property,

or an approximation thereof, what good would the system be?

BASE is often contrasted with ACID. The idea being that

traditional ACID semantics are very pessimistic and do a lot

of work, assuming that any inconsistency would be noticed and result in

disaster. BASE, by contrast, is vry optimistic and assumes

that the inconsistencies are unlikely to result in disaster before they

are eventually fixed.

The idea that we can trade off correctness for time, effort, and

availability is a good one, as is the observation that favoring complete

correctness and consistency at any cost, may be an unnecssary extreme --

depending upon the application....even if the acronym is, well, a little

bit of a stretch.