Lecture 10 (Monday, February 7, 2000)


Interprocess Communication (IPC)

Motivation:

- Data transfer
- Sharing data
- Event notification
- Resource sharing
- Process control

Why not threads?

- Tasks may be on different machines
- Robustness/availability may require different address spaces
- Watchdog jobs must be independent of the processes they watch
- Source code may be unavailable, so tasks can't be converted to threads
- Constrained growth of stack space (every thread's stack must fit in one shared address space)

Why not just shared memory?

- Very little protection among threads implies vulnerability
- Source code might be required to convert tasks to threads within a task
- Generally unavailable, except to processes running on the same host

Universal IPC Facilities

(Review: Signals and pipes were required for Project #1)

Signals (a.k.a. software interrupts)

- No data, just the occurrence of the signal, which can represent an event
- Limited breadth in describing events: typically only 31 signals (4-byte mask)
- Asynchronous
- Handler operates much like the unexpected invocation of a function
- Signals are only delivered on return to user mode (after a system call or a context switch); fortunately, such returns are frequent
- Originally designed for exceptions
- Early UNIXes used signals (SIGPAUSE, SIGCONT) for process synchronization

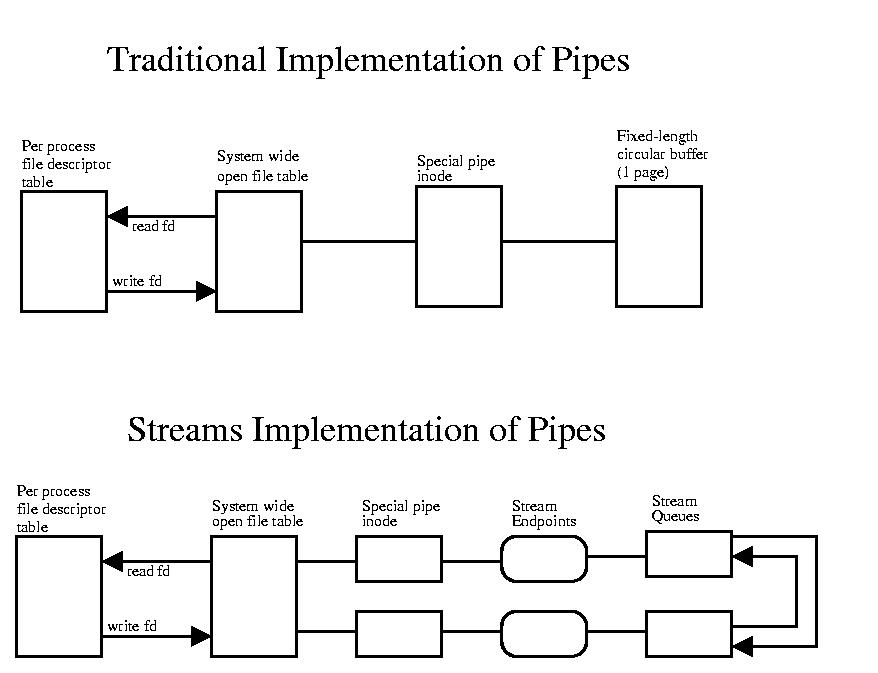

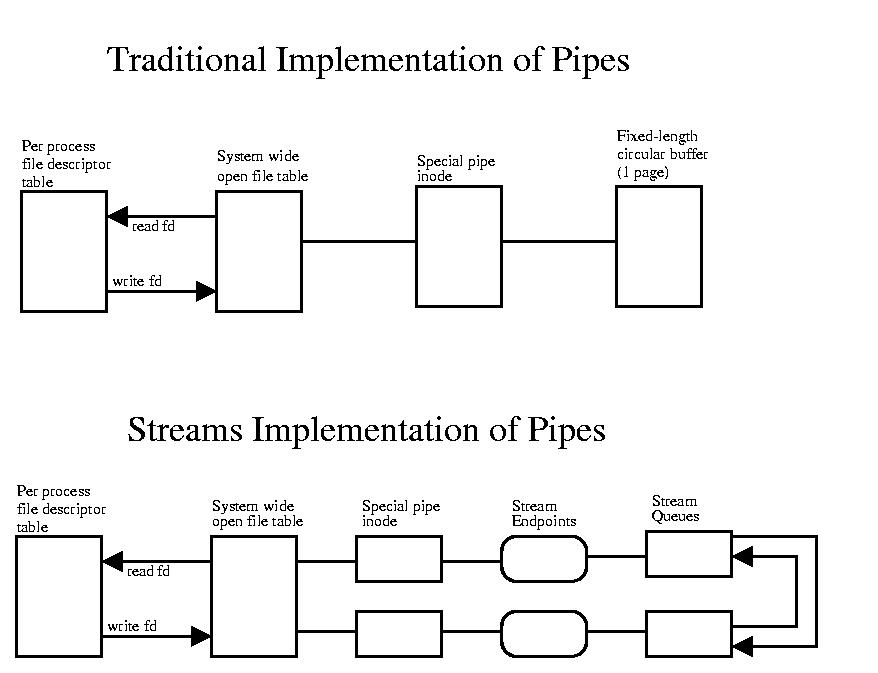

Pipes

- Unstructured messages (writes are concatenated), so it is hard to separate messages
- Traditional/BSD: unidirectional FIFO based on a file-system i-node and a circular buffer (typically 4K)
- SYSVR4 pipes: bidirectional, two FIFOs based on Streams (a layered device-driver interface)
- Reader typically blocks on empty
- Writer typically blocks on full
- Can't broadcast to multiple receivers (a read always removes the data)
- If reading from multiple writers, there is no way of knowing the sender
- Processes must have the pipe's entry in the system open-file table (anonymous pipe), or use a named pipe (an actual file-system directory entry used for naming)
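The "unstructured messages" point can be seen in a small POSIX sketch (`pipe_concat_demo` is a hypothetical name): two separate writes into an anonymous pipe come back from a single read() as one undivided run of bytes, so the reader cannot tell where one message ended.

```c
#include <unistd.h>

/* Writes two "messages" into a pipe, then issues one read().
   Returns the number of bytes read (NUL-terminated in out), or -1. */
ssize_t pipe_concat_demo(char *out, size_t outlen)
{
    int fds[2];                      /* fds[0]: read end, fds[1]: write end */
    if (outlen == 0 || pipe(fds) == -1)
        return -1;

    /* Two separate write() calls: two messages from the writer's view. */
    if (write(fds[1], "ab", 2) != 2 || write(fds[1], "cd", 2) != 2) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    close(fds[1]);                   /* no writers left: reader would see EOF */

    /* One read() drains both, because the pipe kept no boundary between them. */
    ssize_t n = read(fds[0], out, outlen - 1);
    close(fds[0]);
    if (n >= 0)
        out[n] = '\0';
    return n;
}
```

With a 16-byte buffer the call returns 4 and the buffer holds "abcd".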

Ptrace

- Basic level of support for debuggers to trace children
- ptrace(cmd, pid, addr, data)
- Cmd examples: read/write the address space or registers, intercept signals, set watchpoints, terminate, pause, &c.
- Set-uid: either set-uid is disabled for a traced process or the trace doesn't survive the exec, to prevent evil things (consider tracing a program and replacing the parameters to an exec with tcsh, yielding a root shell)
- exec() calls generate a SIGTRAP so the parent can regain control
- ptrace() can trace only children, not grandchildren
- Massive context-switch overhead: data moves from child to parent via kernel space

Sockets

- One machine, two machines, or many machines (broadcast)
- SOCK_STREAM: unformatted, reliable (connection-oriented, typically TCP)
- SOCK_DGRAM: formatted, unreliable (connectionless, typically UDP)
- More during the networking discussion
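For contrast with the pipe, here is a sketch assuming a POSIX system with UNIX-domain sockets (`dgram_boundary_demo` is a hypothetical name): a connected socketpair() of type SOCK_DGRAM returns exactly one message per recv(). UNIX-domain datagrams stay on one host, so this shows the "formatted" (boundary-preserving) property rather than unreliability. Changing SOCK_DGRAM to SOCK_STREAM would typically let a single recv() return both messages, as with a pipe.

```c
#include <sys/socket.h>
#include <unistd.h>

/* Sends two datagrams over a connected socket pair and receives one.
   Returns its length (NUL-terminated in out), or -1 on error. */
ssize_t dgram_boundary_demo(char *out, size_t outlen)
{
    int sv[2];
    if (outlen == 0 || socketpair(AF_UNIX, SOCK_DGRAM, 0, sv) == -1)
        return -1;

    ssize_t n = -1;
    if (send(sv[0], "ab", 2, 0) == 2 && send(sv[0], "cd", 2, 0) == 2) {
        /* Room for both, but a datagram socket hands back only "ab". */
        n = recv(sv[1], out, outlen - 1, 0);
        if (n >= 0)
            out[n] = '\0';
    }
    close(sv[0]);
    close(sv[1]);
    return n;
}
```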

System V IPC

Semaphores, message queues, and shared memory

Common elements

- Key: user-supplied ID for an instance of a resource (e.g., which semaphore or which queue)
- Creator: who created the resource
- Owner: current owner of the resource (initially the creator, but may be changed by the creator, owner, or super-user)
- Permissions: file-system-like permissions, r/w/x for user/group, &c.

Implementation

- Fixed-size resource table for each resource type (danger: can run out)
- Each entry contains an ipc_perm (key, creator, owner, perms), resource-specific data, and a sequence number
- The sequence number is like a generation number: incremented with each reuse of the entry
- ID returned on create = seq * table_size + index
- Kernel discovers index = id % table_size
- Created with semget(), shmget(), or msgget()
- Flags on get: IPC_CREAT (create), IPC_EXCL (exclusive: fail if the key already exists)
- Commands for semctl(), shmctl(), and msgctl(): IPC_RMID (deallocate), IPC_STAT (get status information), IPC_SET (set status information)
- Danger: unless removed with IPC_RMID, a resource stays allocated, even after all its users are gone
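The ID arithmetic above can be checked with a few lines of C. These helpers are hypothetical illustrations of the scheme in the notes, not kernel code. They show why a stale ID is detectable: with a 64-entry table, IDs 133 (seq 2) and 197 (seq 3) both map to slot 5, but only the current sequence number matches.

```c
/* Hypothetical helpers mirroring the scheme in the notes. */

/* ID handed to the user when slot `index` is (re)used for the
   seq-th time. */
int ipc_make_id(int seq, int table_size, int index)
{
    return seq * table_size + index;
}

/* Kernel side: recover the table slot from a user-supplied ID. */
int ipc_index_of(int id, int table_size)
{
    return id % table_size;
}

/* Recover the generation (sequence number) encoded in the ID. */
int ipc_seq_of(int id, int table_size)
{
    return id / table_size;
}

/* A stale ID names the right slot but an old generation, so the
   kernel can reject it instead of touching the slot's new occupant. */
int ipc_id_is_current(int id, int table_size, int current_seq)
{
    return ipc_seq_of(id, table_size) == current_seq;
}
```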

Mechanisms

- Semaphores: the usual operations
- Message queues
- Shared memory
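A minimal sketch of the System V semaphore calls, assuming Linux, where the caller must declare `union semun` itself (`sysv_sem_demo` is a hypothetical name). It creates a private one-semaphore set with semget(), performs a P and a V with semop(), and removes the set with semctl(IPC_RMID), since otherwise the set would outlive the process.

```c
#include <sys/ipc.h>
#include <sys/sem.h>
#include <sys/types.h>

/* On Linux the caller declares union semun for semctl()'s 4th argument. */
union semun {
    int val;
    struct semid_ds *buf;
    unsigned short *array;
};

/* Returns the semaphore's final value (expected 1), or -1 on error. */
int sysv_sem_demo(void)
{
    int semid = semget(IPC_PRIVATE, 1, IPC_CREAT | 0600);
    if (semid == -1)
        return -1;

    int result = -1;
    union semun arg;
    arg.val = 1;                                   /* binary semaphore, initially free */
    if (semctl(semid, 0, SETVAL, arg) != -1) {
        struct sembuf p = { .sem_num = 0, .sem_op = -1, .sem_flg = 0 }; /* P: wait */
        struct sembuf v = { .sem_num = 0, .sem_op = +1, .sem_flg = 0 }; /* V: signal */
        if (semop(semid, &p, 1) != -1 && semop(semid, &v, 1) != -1)
            result = semctl(semid, 0, GETVAL);
    }

    /* Without IPC_RMID the set stays allocated after this process exits. */
    semctl(semid, 0, IPC_RMID);
    return result;
}
```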

Message Queues

- Like a pipe, but more flexible: discrete messages, with boundaries preserved (like the difference between TCP and UDP)
- FIFO
- Big messages can be expensive: two copies, into and out of the kernel
- No broadcast mechanism
- Other than permissions on the queue, there is no way to limit the recipient of a particular message; any legal reader can take it
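A sketch of a System V message queue, assuming a POSIX/Linux system (`sysv_msgq_demo` and `struct demo_msg` are hypothetical names). Two messages are sent with msgsnd(); a single msgrcv() returns only the first, showing that, unlike a pipe, message boundaries are preserved. Note that both payloads pass through the kernel, which is the two-copy cost mentioned above.

```c
#include <string.h>
#include <sys/ipc.h>
#include <sys/msg.h>
#include <sys/types.h>

/* System V messages start with a long type field; the rest is payload. */
struct demo_msg {
    long mtype;       /* must be > 0 */
    char mtext[32];
};

/* Sends "first" then "second", receives one message into out.
   Returns the received payload length (bytes incl. NUL), or -1. */
ssize_t sysv_msgq_demo(char *out, size_t outlen)
{
    if (outlen == 0)
        return -1;
    int qid = msgget(IPC_PRIVATE, IPC_CREAT | 0600);
    if (qid == -1)
        return -1;

    ssize_t n = -1;
    struct demo_msg m1 = { 1, "first" };
    struct demo_msg m2 = { 1, "second" };
    struct demo_msg in;

    /* msgsz counts only the payload, not the mtype field. */
    if (msgsnd(qid, &m1, strlen(m1.mtext) + 1, 0) == 0 &&
        msgsnd(qid, &m2, strlen(m2.mtext) + 1, 0) == 0) {
        /* msgtyp 0 takes the oldest message: FIFO order. */
        n = msgrcv(qid, &in, sizeof in.mtext, 0, 0);
        if (n >= 0) {
            strncpy(out, in.mtext, outlen - 1);
            out[outlen - 1] = '\0';
        }
    }

    msgctl(qid, IPC_RMID, NULL);   /* queues persist until explicitly removed */
    return n;
}
```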

Shared Memory

- Maps the same storage into two different processes' address spaces
- More about the implementation during the discussion of VM later in the semester
- Fastest: no copy and no context switch (once initialized)
- No synchronization provided
- No protocol for use provided
- Most UNIX variants (including SYSVR4) provide mmap(), which is similar but maps a file through the VMM
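A sketch of System V shared memory between a parent and a forked child, assuming a POSIX/Linux system (`sysv_shm_demo` is a hypothetical name). Once the segment is attached, the child's store is an ordinary memory write that the parent sees without any copy or system call; the only synchronization is the parent's waitpid(), since shared memory provides none of its own.

```c
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child stores `value` in a shared segment; parent reads it after the
   child exits. Returns the value read, or -1 on error. */
int sysv_shm_demo(int value)
{
    int shmid = shmget(IPC_PRIVATE, sizeof(int), IPC_CREAT | 0600);
    if (shmid == -1)
        return -1;

    int *shared = shmat(shmid, NULL, 0);   /* attachment is inherited by fork() */
    if (shared == (void *)-1) {
        shmctl(shmid, IPC_RMID, NULL);
        return -1;
    }
    *shared = 0;

    int result = -1;
    pid_t pid = fork();
    if (pid == 0) {
        *shared = value;    /* plain store: no copy, no system call */
        _exit(0);
    }
    if (pid > 0 && waitpid(pid, NULL, 0) == pid)
        result = *shared;   /* waitpid() is the only synchronization here */

    shmdt(shared);
    shmctl(shmid, IPC_RMID, NULL);
    return result;
}
```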

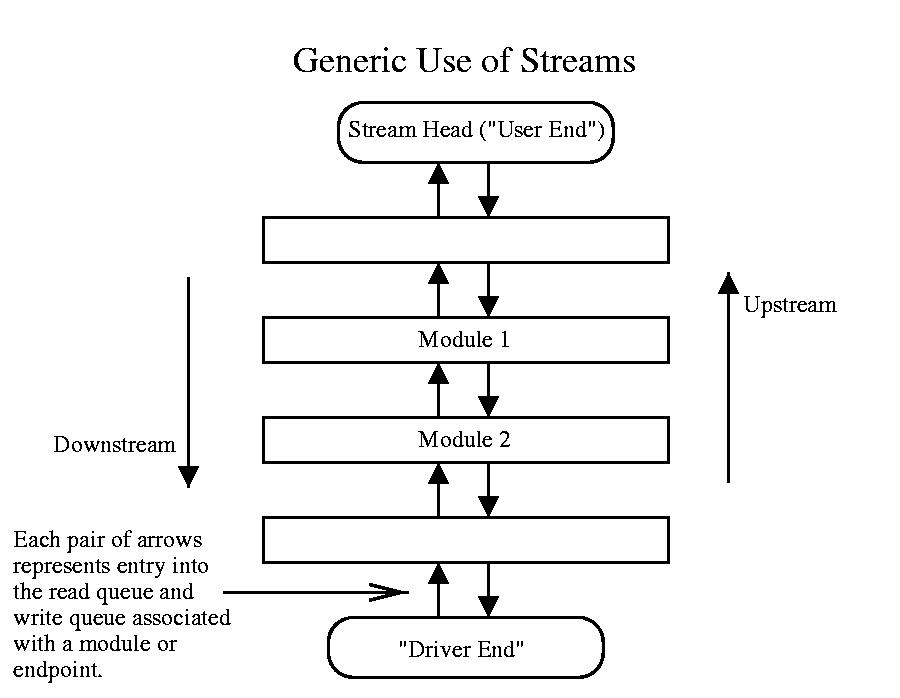

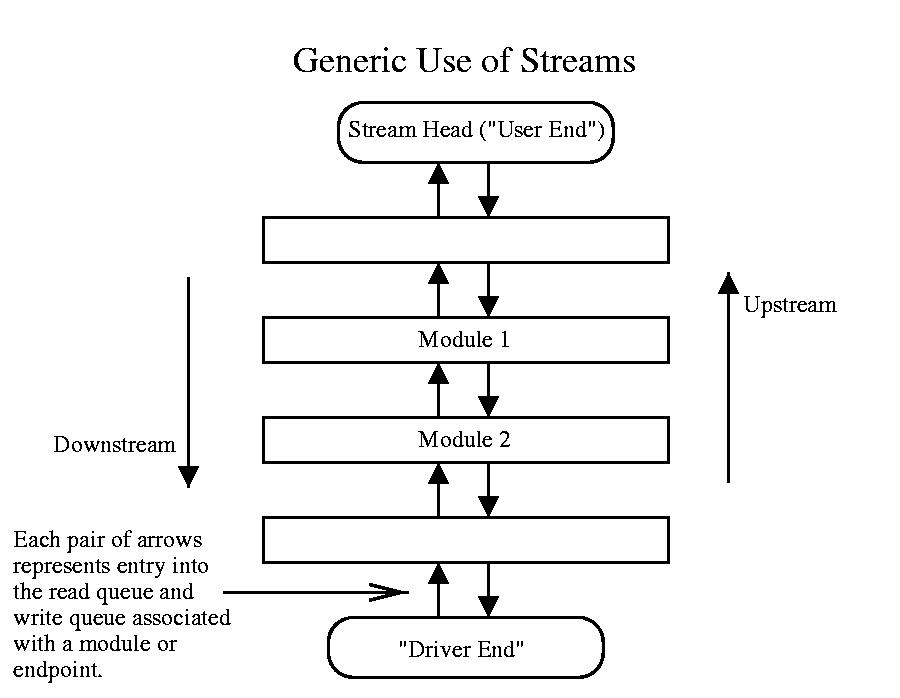

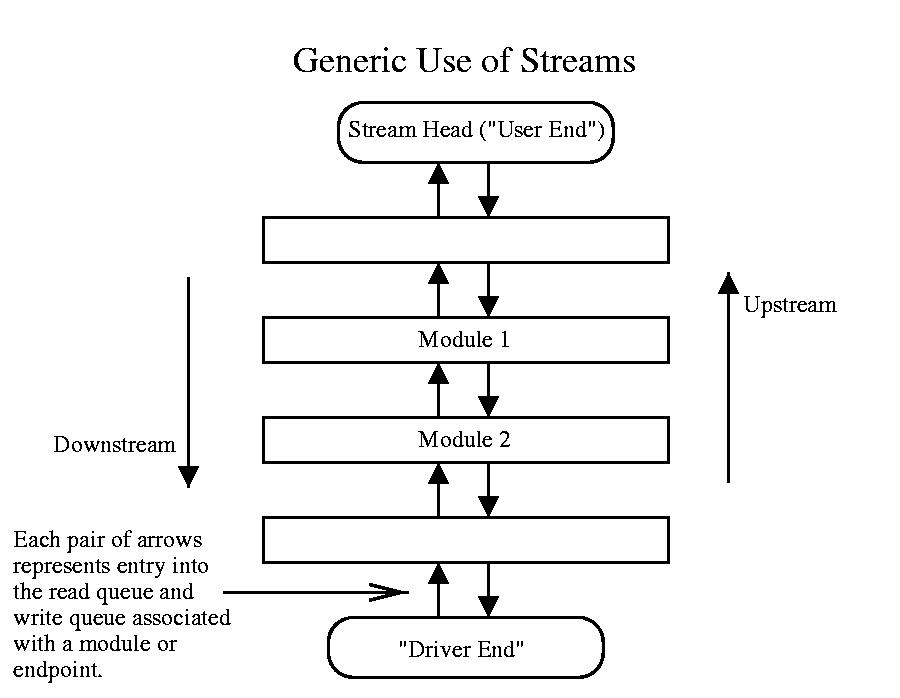

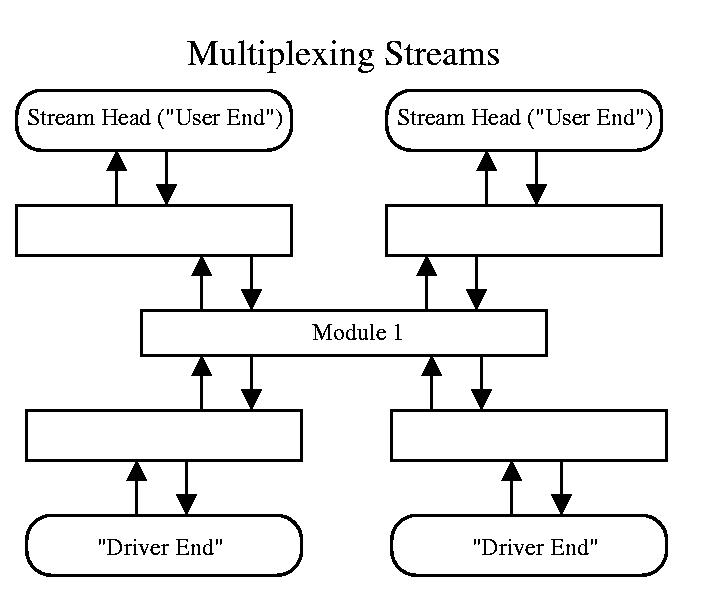

SYSVR4 Streams

More flexible than IPC, but can function as IPC and is used to implement some IPC facilities

- This is a very brief overview; streams are reasonably intricate
- Originally developed by Ritchie to provide a structured way to implement device drivers in layers and allow for reuse
- Now used to implement device drivers, terminal drivers, and IPC constructs like pipes
- (SYSVR4 is based on streams)
- Also used for TCP/IP and other networking stacks (very natural, as we'll see)
- Each layer contains read and write queues for messages, which can be prioritized
- Layers are stacked
- Head/top is usually the user end
- Bottom is usually a device driver, but can be another stream
- Upstream is flow toward the head
- Downstream is flow away from the head, toward the driver
- Each module in between can be viewed as a smart filter
- Modules can be mixed, matched, and reused

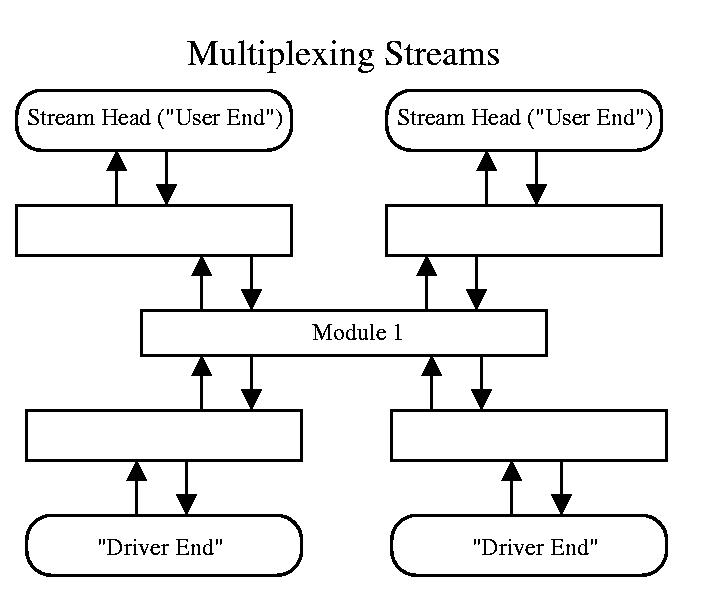

- Can be multiplexed (consider use for broadcast, multiple receivers, multiple senders)
- Supports “virtual copying” (shared data) among modules
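To make the layering idea concrete, here is a toy model in C. It is not the real STREAMS interface (which uses queue structures with put and service procedures); the names `module_put`, `upcase_module`, `frame_module`, and `send_downstream` are all hypothetical. Each module is a filter applied as a message travels downstream from the head toward the driver, and modules can be reordered or reused without knowing about each other.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* A module's "put" step: transform the message in place (cap = buffer size). */
typedef void (*module_put)(char *msg, size_t cap);

/* Filter 1: convert the message to upper case. */
static void upcase_module(char *msg, size_t cap)
{
    (void)cap;
    for (; *msg; msg++)
        *msg = (char)toupper((unsigned char)*msg);
}

/* Filter 2: wrap the message in [ ] framing, if there is room. */
static void frame_module(char *msg, size_t cap)
{
    size_t len = strlen(msg);
    if (len + 3 > cap)          /* need room for '[', ']' and NUL */
        return;
    memmove(msg + 1, msg, len);
    msg[0] = '[';
    msg[len + 1] = ']';
    msg[len + 2] = '\0';
}

/* Passes msg through each module in stack order, head first, and
   returns what the bottom of the stream (the "driver") would see. */
char *send_downstream(module_put *stack, size_t depth, char *msg, size_t cap)
{
    for (size_t i = 0; i < depth; i++)
        stack[i](msg, cap);
    return msg;
}
```

With the stack `{ upcase_module, frame_module }`, the message "hi" reaches the bottom as "[HI]".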

/proc File System

- Originally intended to replace ptrace() and support debugging
- Now, in most implementations, ptrace() is implemented via /proc
- One directory under /proc for each process; its name is the PID
- Not a real file system, just an interface
- Each PID directory contains the following entries; status and psinfo also describe a representative LWP
- status: r/o status info such as PID, PGID, SID, size and location of stack and heap, &c. (struct pstatus)
- psinfo: r/o, anything viewable by the ps command; duplicates some info in status (struct psinfo)
- ctl: w/o, performs control operations (wait, run, kill, wait until stopped, stop on system call exit)
- map: r/o description of the virtual address space (where in core or backing store)
- as: r/w image of the virtual address space; change it with lseek and write
- sigact: r/o information about signals, such as mask and handlers (struct sigaction)
- pcred: effective, real, and saved UIDs and GIDs (struct pcred)
- object: directory with one entry for each mapped object (e.g., memory-mapped files)
- lwp: subdirectory with info about each LWP in the process; each per-LWP subdirectory contains lwpstatus, lwpsinfo, and lwpctl (same as above, but for individual LWPs)
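The `as` entry's "lseek and write" interface has a close Linux analog, /proc/<pid>/mem, in which the file offset is the virtual address. The sketch below assumes 64-bit Linux; it uses that Linux file, not the SVR4 `as` file itself, and `proc_mem_poke_demo` is a hypothetical name. It changes one of the process's own variables through /proc/self/mem.

```c
#include <fcntl.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/* volatile: force a real memory read after the write through /proc. */
static volatile int target = 7;

/* Overwrites `target` via /proc/self/mem (lseek to its virtual address,
   then write), and returns target's new value, or -1 on error. */
int proc_mem_poke_demo(int new_value)
{
    int fd = open("/proc/self/mem", O_RDWR);
    if (fd == -1)
        return -1;

    off_t addr = (off_t)(uintptr_t)&target;   /* file offset == virtual address */
    int ok = lseek(fd, addr, SEEK_SET) == addr &&
             write(fd, &new_value, sizeof new_value) == (ssize_t)sizeof new_value;
    close(fd);
    return ok ? target : -1;
}
```

A debugger applies the same lseek-then-write pattern to another process's file, subject to the kernel's permission checks.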

Mach IPC

Mach is an operating system based on a microkernel architecture. This means that many of the jobs that are typically part of the operating system's kernel are actually user processes, which makes Mach an interesting application of IPC: operating system components implemented as user processes need IPC to interact with each other. In many ways, IPC is part of the foundation of a microkernel-based operating system, not the other way around. The file system, pager, memory management, &c. are all implemented as user-level tasks outside of the kernel; they interact with the kernel via Mach IPC.

Some key goals of the designers of Mach IPC included:

- Efficient support for messages varying in size from a few bytes to many gigabytes
- Protection should be fine-grained and strongly enforced
- Support for user-kernel and user-user communication
- Communication among processes on different hosts should function as communication among processes on a single host does

In the Mach model, data is formed into messages, which are passed among processes. A process receives messages at a port, which is a queue of messages.

Ports

Each port's queue has a finite capacity. When the queue is full, senders block; when it is empty, receivers block. A process must hold a capability to access a port. These capabilities come in two flavors: read and write. Many processes may have write capabilities to a port, but only one may have the read capability.
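The blocking behaviour of a port's finite queue is the classic bounded-buffer pattern. The sketch below models it with POSIX threads; it is not the Mach API, and every name in it is hypothetical. The capacity is deliberately tiny so the sender thread really does block while the single receiver catches up.

```c
#include <pthread.h>
#include <stddef.h>

#define PORT_CAPACITY 2   /* tiny on purpose, so the sender really blocks */

/* Toy model of a Mach port: a bounded FIFO of int "messages". */
struct port {
    int slots[PORT_CAPACITY];
    size_t head, count;
    pthread_mutex_t lock;
    pthread_cond_t not_full, not_empty;
};

void port_init(struct port *p)
{
    p->head = p->count = 0;
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->not_full, NULL);
    pthread_cond_init(&p->not_empty, NULL);
}

void port_destroy(struct port *p)
{
    pthread_mutex_destroy(&p->lock);
    pthread_cond_destroy(&p->not_full);
    pthread_cond_destroy(&p->not_empty);
}

/* Any number of senders may call this; each blocks while the queue is full. */
void port_send(struct port *p, int msg)
{
    pthread_mutex_lock(&p->lock);
    while (p->count == PORT_CAPACITY)
        pthread_cond_wait(&p->not_full, &p->lock);
    p->slots[(p->head + p->count) % PORT_CAPACITY] = msg;
    p->count++;
    pthread_cond_signal(&p->not_empty);
    pthread_mutex_unlock(&p->lock);
}

/* The single receiver blocks while the queue is empty. */
int port_receive(struct port *p)
{
    pthread_mutex_lock(&p->lock);
    while (p->count == 0)
        pthread_cond_wait(&p->not_empty, &p->lock);
    int msg = p->slots[p->head];
    p->head = (p->head + 1) % PORT_CAPACITY;
    p->count--;
    pthread_cond_signal(&p->not_full);
    pthread_mutex_unlock(&p->lock);
    return msg;
}

struct sender_arg { struct port *port; int n; };

static void *sender(void *arg)
{
    struct sender_arg *a = arg;
    for (int i = 1; i <= a->n; i++)
        port_send(a->port, i);
    return NULL;
}

/* Sends 1..n from a second thread and receives them here; returns
   their sum if they arrived in order, or -1 otherwise. */
long port_demo(int n)
{
    struct port p;
    port_init(&p);
    struct sender_arg a = { &p, n };
    pthread_t t;
    if (pthread_create(&t, NULL, sender, &a) != 0) {
        port_destroy(&p);
        return -1;
    }
    long sum = 0;
    int in_order = 1;
    for (int i = 1; i <= n; i++) {
        int m = port_receive(&p);
        if (m != i)
            in_order = 0;
        sum += m;
    }
    pthread_join(t, NULL);
    port_destroy(&p);
    return in_order ? sum : -1;
}
```

On older toolchains, compile with -pthread. Separate not_full and not_empty condition variables keep senders and the receiver from waking each other needlessly.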

In the context of Mach, a capability is a name for a port that is unique within a process's space. Two different processes may have two different capabilities representing the same port. Capabilities are reference counted.

There are some special ports:

- task_self: a port used to send messages to the kernel on behalf of the task
- thread_self: similar to task_self, but for individual threads
- task_notify: a port used to receive messages from the kernel
- reply: receives results from system calls and RPC calls
- exception: receives notification of exceptions

Backup Ports

Ports can have backup ports. If a port is deallocated (freed) and messages are sent to it, the backup port will receive them.

Port Sets

Port sets provide functionality similar to the UNIX select() function. One important difference is that port sets do not suffer a performance degradation when the set contains many ports: access time is constant. One can view a port set as a common queue for several ports. Since each message records its original destination, the intended recipient can be identified.
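A toy model of the port-set idea, again not the Mach API (all names are hypothetical): every member port feeds one common queue, and each message records its destination, so a single receive serves the whole set in constant time, with no per-port scan as select() would need, and the receiver can still tell which port a message was meant for.

```c
#include <stddef.h>

#define SET_CAPACITY 8

/* Each message remembers which member port it was sent to. */
struct message {
    int dest_port;
    int body;
};

/* All member ports share this one FIFO. */
struct port_set {
    struct message q[SET_CAPACITY];
    size_t head, count;
};

/* Sending to any member port appends to the common queue in O(1).
   A real port would block when full; this sketch returns -1. */
int set_send(struct port_set *s, int dest_port, int body)
{
    if (s->count == SET_CAPACITY)
        return -1;
    s->q[(s->head + s->count) % SET_CAPACITY] = (struct message){ dest_port, body };
    s->count++;
    return 0;
}

/* One O(1) receive for the whole set, however many ports it has.
   Returns -1 if the set is empty. */
int set_receive(struct port_set *s, struct message *out)
{
    if (s->count == 0)
        return -1;
    *out = s->q[s->head];
    s->head = (s->head + 1) % SET_CAPACITY;
    s->count--;
    return 0;
}
```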

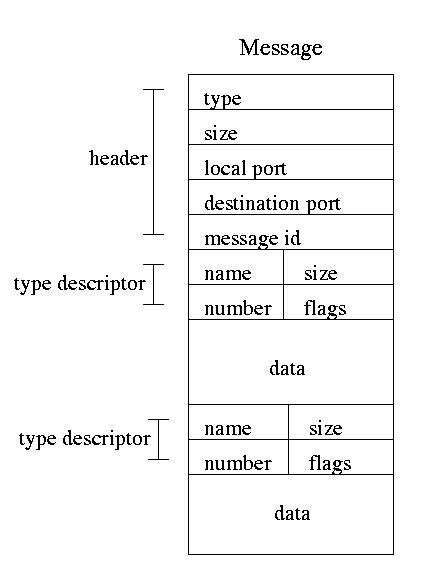

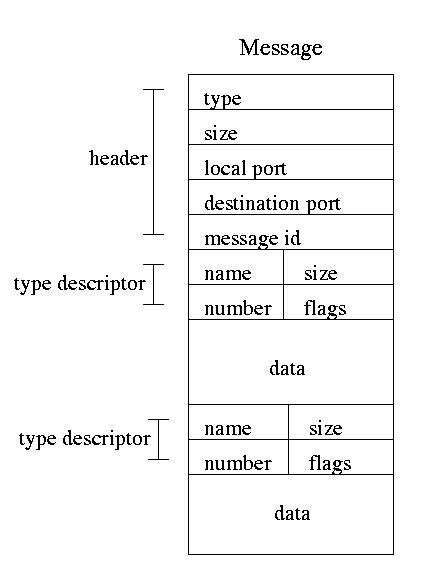

Messages

Messages contain the data being sent from process to process and the metadata needed to transport and interpret it. The actual user data may be contained within the message, or it may be referenced in shared memory. Small amounts of data contained within the message itself are known as in-line memory. Larger amounts of data that are only referenced within the message are known as out-of-line memory. Out-of-line memory is shared using a copy-on-write approach: the memory is shared by both tasks until either task writes to it, at which time a private copy of the page is made.

Messages may be sent (msg_send), received (msg_recv), or sent when a reply is expected (msg_rpc). msg_rpc() is typically used to implement remote procedure calls.

Each message begins with a header:

- type: ordinary or complex. Ordinary is simple data; complex may require some type of translation or other special treatment, like out-of-line memory.
- size: size of the entire message, including the header
- destination port: name of the port that will receive the message
- reply port: if there are results, send them here
- message id: optional; a name assigned by the user program

Type descriptor

- name: more properly a type. Ex: internal memory, rights, byte, 16-bit integer, string, real, &c.
- size: size of a data item
- number: how many data items (each of the given size)
- flags: in-line, out-of-line, &c.
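The header fields and type descriptor above can be pictured as C structs. This layout is purely illustrative: the field names follow these notes rather than Mach's actual headers, and item sizes are assumed to be in bytes. The helper `message_size` (hypothetical) shows why out-of-line data keeps messages small: only a pointer travels in the message body, while the pages themselves are shared copy-on-write.

```c
#include <stddef.h>
#include <stdint.h>

/* Illustrative header layout following the field list in the notes. */
enum msg_kind { MSG_ORDINARY, MSG_COMPLEX };

struct msg_header {
    enum msg_kind type;    /* ordinary (plain data) or complex */
    uint32_t size;         /* whole message, header included */
    uint32_t dest_port;    /* port that will receive the message */
    uint32_t reply_port;   /* where results go, if any */
    uint32_t msg_id;       /* optional, chosen by the user program */
};

/* Illustrative type descriptor preceding a data section. */
struct type_descriptor {
    uint8_t name;          /* type tag, e.g. byte, 16-bit integer, string */
    uint8_t size;          /* size of one data item, assumed in bytes */
    uint16_t number;       /* how many items */
    uint8_t inline_data;   /* 1 = in-line, 0 = out-of-line */
};

/* Size of a message carrying one typed data section: in-line items are
   copied into the body; out-of-line data contributes only a pointer. */
size_t message_size(const struct type_descriptor *td)
{
    size_t payload = td->inline_data
        ? (size_t)td->size * td->number
        : sizeof(void *);
    return sizeof(struct msg_header) + sizeof *td + payload;
}
```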