95-865: Unstructured Data Analytics (Fall 2018 Mini 2)

Class time and location:

- (Pittsburgh) Lectures: Tuesdays and Thursdays 4:30pm-5:50pm HBH 1002, recitations: Fridays 9am-10:20am HBH A301

- (Adelaide) Lectures: Tuesdays 9am-11:50am Classroom 1 GF, recitations: Wednesdays 4:30pm-5:50pm Classroom 5

Instructor: George Chen (georgechen [at symbol] cmu.edu)

Teaching assistants:

- (Pittsburgh) Abhinav Maurya (ahmaurya [at symbol] cmu.edu), Mi Zhou (mzhou1 [at symbol] andrew.cmu.edu)

- (Adelaide) Erick German Rodriguez Alvarez (erickger [at symbol] andrew.cmu.edu)

Office hours:

- (Pittsburgh) Abhinav: Mondays 3pm-4pm HBH 2003, Mi: Mondays 10:55am-11:55am HBH 2009, George: Wednesdays 1pm-2pm HBH 2216

- (Adelaide) Erick: Wednesdays 6pm-8pm Project Room 5, George: by appointment (please email to schedule)

Contact: Please use Piazza (follow the link to it within Canvas) and, whenever possible, post so that everyone can see (if you have a question, chances are other people can benefit from the answer as well!).

Course Description

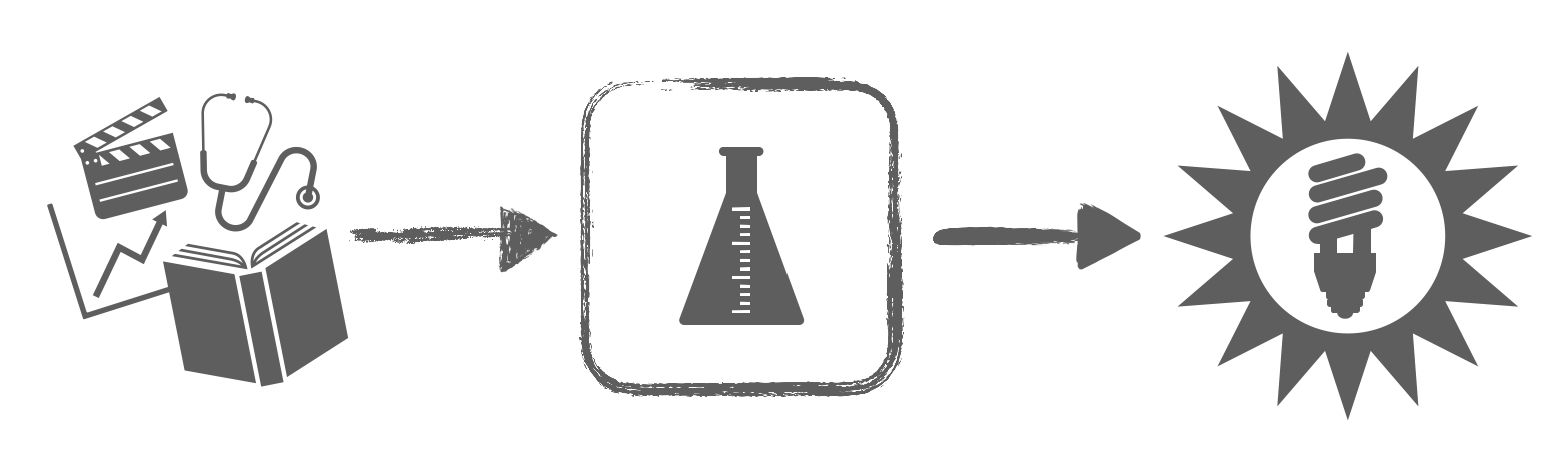

Companies, governments, and other organizations now collect massive amounts of data such as text, images, audio, and video. How do we turn this heterogeneous mess of data into actionable insights? A common problem is that we often do not know ahead of time what structure underlies the data, which is why such data are often referred to as "unstructured". This course takes a practical, two-step approach to unstructured data analysis:

- We first examine how to identify possible structure present in the data via visualization and other exploratory methods.

- Once we have clues for what structure is present in the data, we turn toward exploiting this structure to make predictions.

We will be coding lots of Python and working with Amazon Web Services (AWS) for cloud computing (including using GPUs).

Prerequisite: If you are a Heinz student, then you must have either (1) passed the Heinz Python exemption exam, or (2) taken 95-888 "Data-Focused Python" or 16-791 "Applied Data Science". If you are not a Heinz student and would like to take the course, please contact the instructor and clearly state what Python courses you have taken/what Python experience you have.

Helpful but not required: Math at the level of calculus and linear algebra may help you appreciate some of the material more.

Grading: Homework 20%, mid-mini quiz 35%, final exam 45%. If you do better on the final exam than the mid-mini quiz, then your final exam score clobbers your mid-mini quiz score (thus, the quiz does not count for you, and instead your final exam counts for 80% of your grade).
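As a rough illustration of the grading rule above, the arithmetic can be sketched in Python. This is only a sketch under stated assumptions: the function name `course_grade` is hypothetical, all three inputs are assumed to be overall scores on a 0-100 scale, and homework is assumed to be already aggregated into a single score. The official computation is whatever the syllabus specifies.

```python
def course_grade(homework, quiz, final):
    """Weighted course grade on a 0-100 scale (hypothetical helper).

    Assumes each argument is an overall score between 0 and 100.
    """
    # If the final exam score is higher than the mid-mini quiz score,
    # the final exam score replaces the quiz score, so the final exam
    # effectively carries 35% + 45% = 80% of the grade.
    effective_quiz = max(quiz, final)
    return 0.20 * homework + 0.35 * effective_quiz + 0.45 * final


# Example with made-up scores: the final (80) beats the quiz (60),
# so the quiz score is replaced by the final exam score.
print(round(course_grade(homework=90, quiz=60, final=80), 2))
```

When the quiz score is the higher of the two, `max` leaves it unchanged and the standard 20/35/45 weighting applies.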

Syllabus: [Pittsburgh] [Adelaide]

Calendar (tentative)

Warning: As this course is still relatively new, the lecture slides are a bit rough and may contain bugs. To provide feedback/bug reports, please directly contact the instructor, George (georgechen [at symbol] cmu.edu). The Spring 2018 mini 4 course website is available here.

Pittsburgh

| Date | Topic | Supplemental Material |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Tue Oct 23 | Lecture 1: Course overview, basic text processing and frequency analysis<br>HW1 released (check Canvas)! | |
| Thur Oct 25 | Lecture 2: Basic text analysis demo, co-occurrence analysis | |
| Fri Oct 26 | Recitation 1: Basic Python review | |
| Tue Oct 30 | Lecture 3: Finding possibly related entities, PCA, Isomap | Causality additional reading:<br>PCA additional reading (technical): |
| Thur Nov 1 | Lecture 4: t-SNE | Python examples for dimensionality reduction:<br>Additional dimensionality reduction reading (technical): |
| Fri Nov 2 | Recitation 2: t-SNE | |
| Tue Nov 6 | Lecture 5: Introduction to clustering, k-means, Gaussian mixture models<br>HW1 due 4:30pm (start of class), HW2 released! | Additional clustering reading (technical):<br>Python cluster evaluation: |
| Thur Nov 8 | Lecture 6: Clustering and clustering interpretation demo, automatic selection of k with CH index | |
| Fri Nov 9 | Recitation 3: Quiz review session | |
| Tue Nov 13 | Mid-mini quiz | |
| Wed Nov 14 | George's regular office hours are cancelled for this week | |
| Thur Nov 15 | Lecture 7: Hierarchical clustering, topic modeling | Additional reading (technical): |
| Part II. Predictive data analysis | | |
| Fri Nov 16 | Lecture 8 (this is not a typo): Introduction to predictive analytics, nearest neighbors, evaluating prediction methods, decision trees | |
| Tue Nov 20 | Lecture 9: Intro to neural nets and deep learning<br>Mike Jordan's Medium article on where AI is at (April 2018):<br>The Atlantic's article about Judea Pearl's concerns with AI not focusing on causal reasoning (May 2018):<br>HW2 due 4:30pm (start of class); HW3 released | Video introduction on neural nets:<br>Additional reading: |
| Wed Nov 21 | Adelaide Recitation 5: Support vector machines (SVMs), cross validation, decision boundaries, ROC curves | |
| Thur Nov 22 | Thanksgiving: no class | |
| Tue Nov 27 | Lecture 10: Image analysis with CNNs (also called convnets) | CNN reading: |
| Thur Nov 29 | Lecture 11: Time series analysis with RNNs, roughly how learning a deep net works (gradient descent and variants) | LSTM reading:<br>Videos on learning neural nets (warning: the loss function used is not the same as what we are using in 95-865):<br>Recent heuristics/theory on gradient descent variants for deep nets (technical): |
| Fri Nov 30 | Recitation 4: Word embeddings as an example of self-supervised learning | |
| Tue Dec 4 | Lecture 12: Interpreting what a deep net is learning, other deep learning topics, wrap-up<br>Gary Marcus's Medium article on limitations of deep learning and his heated debate with Yann LeCun (December 2018):<br>HW3 due 4:30pm (start of class) | Some interesting reads (technical): |
| Thur Dec 6 | No class | |
| Fri Dec 7 | Recitation 5: Final exam review session | |
| Fri Dec 14 | Final exam 1pm-4pm HBH 1002 | |

Adelaide

| Date | Topic | Supplemental Material |
|---|---|---|
| Part I. Exploratory data analysis | | |
| Tue Oct 23 | Lecture 1: Course overview, basic text processing, co-occurrence analysis (finding possibly related entities with discrete outcomes)<br>HW1 released (check Canvas)!<br>Pittsburgh Recitation 1: Basic Python review | |
| Tue Oct 30 | Lecture 2: Finding possibly related entities, PCA, manifold learning (Isomap, t-SNE)<br>Pittsburgh Recitation 2: t-SNE | Causality additional reading:<br>Python examples for dimensionality reduction:<br>PCA additional reading (technical):<br>Additional dimensionality reduction reading (technical): |
| Tue Nov 6 | Lecture 3: Introduction to clustering, k-means, Gaussian mixture models, automatic selection of k with CH index | Additional clustering reading (technical):<br>Python cluster evaluation: |
| Wed Nov 7 | HW1 due at 8am (start of Pittsburgh class); HW2 released! | |
| Tue Nov 13 | Lecture 4: Hierarchical clustering, topic modeling | Additional reading (technical): |
| Part II. Predictive data analysis | | |
| Tue Nov 20 | Lecture 5: Introduction to predictive data analytics, neural nets, and deep learning<br>Mike Jordan's Medium article on where AI is at (April 2018):<br>The Atlantic's article about Judea Pearl's concerns with AI not focusing on causal reasoning (May 2018): | Video introduction on neural nets:<br>Additional reading: |
| Wed Nov 21 | Recitation 5: Support vector machines (SVMs), cross validation, decision boundaries, ROC curves<br>HW2 due at 8am (start of Pittsburgh class); HW3 released! | |
| Tue Nov 27 | Lecture 6: Image analysis with CNNs (also called convnets), time series analysis with RNNs, roughly how learning a deep net works (gradient descent and variants)<br>Pittsburgh Recitation 4: Word embeddings as an example of self-supervised learning | CNN reading:<br>LSTM reading:<br>Videos on learning neural nets (warning: the loss function used is not the same as what we are using in 95-865):<br>Recent heuristics/theory on gradient descent variants for deep nets (technical):<br>Some interesting reads (technical): |
| Wed Dec 5 | HW3 due 8am (start of Pittsburgh class) | |
| Thur Dec 6 | Final exam 9am-12pm | |