16-726 Learning-Based Image Synthesis

Assignment 3: When Cats Meet GANs

Jun Luo

Mar. 2021

Overview

In this assignment, we focus on GAN training. In the main part, we train a Deep Convolutional GAN (DCGAN) to generate grumpy cat samples from noise, and a CycleGAN to translate between two different types of cats. In the Bells & Whistles part, we train the GANs on a Pokemon dataset and also explore the Variational Autoencoder.

Deep Convolutional GAN

Data augmentation: To introduce variation into the input images and keep the discriminator from overfitting too easily, we need data augmentation, especially for a small dataset like the grumpy cat dataset used in this assignment. Below are the types of data augmentation that I used.

- Resize: Resize the image to 1.1 times its original size.

- Random Horizontal Flip: Randomly decide whether to flip the image horizontally.

- Random Rotation: Randomly rotate the image by an angle between -5 and +5 degrees.

- Random Crop: Randomly crop the resized image back to its original size.
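The four steps above can be sketched in plain Python on an image stored as a list of pixel rows. This is a minimal illustration, not the code used for this report: the helper names (`resize_nearest`, `hflip`, `rotate_nearest`, `random_crop`, `deluxe_augment`) are hypothetical, nearest-neighbour sampling is an assumed interpolation choice, and pixels uncovered by the rotation are assumed to be filled with 0.

```python
import math
import random

def resize_nearest(img, new_h, new_w):
    """Nearest-neighbour resize of an image stored as a list of pixel rows."""
    h, w = len(img), len(img[0])
    return [[img[r * h // new_h][c * w // new_w] for c in range(new_w)]
            for r in range(new_h)]

def hflip(img):
    """Mirror every row (horizontal flip)."""
    return [row[::-1] for row in img]

def rotate_nearest(img, degrees, fill=0):
    """Rotate about the image centre using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            # Inverse-map each output pixel to its source location
            y, x = r - cy, c - cx
            sy = round(cos_t * y + sin_t * x + cy)
            sx = round(-sin_t * y + cos_t * x + cx)
            # Assumed: pixels that map outside the source get the fill value
            row.append(img[sy][sx] if 0 <= sy < h and 0 <= sx < w else fill)
        out.append(row)
    return out

def random_crop(img, out_h, out_w, rng):
    """Crop a randomly placed out_h x out_w window."""
    h, w = len(img), len(img[0])
    top, left = rng.randint(0, h - out_h), rng.randint(0, w - out_w)
    return [row[left:left + out_w] for row in img[top:top + out_h]]

def deluxe_augment(img, rng=random):
    """Resize x1.1, random flip, random rotation in [-5, 5] degrees, crop back."""
    h, w = len(img), len(img[0])
    out = resize_nearest(img, int(1.1 * h), int(1.1 * w))
    if rng.random() < 0.5:
        out = hflip(out)
    out = rotate_nearest(out, rng.uniform(-5.0, 5.0))
    return random_crop(out, h, w, rng)
```

The final crop is what restores the original resolution, so the discriminator always sees images of the same size while their framing, orientation and position vary slightly.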

DCGAN discriminator padding equation: Let \( P \) be the padding size, \( I \) the input size, \( O \) the output size, \( K \) the kernel size, and \( S \) the stride. When the sizes divide evenly, a convolution maps the input to an output of size \( O = (I + 2P - K)/S + 1 \); solving for \( P \) gives $$ P = (S (O-1) + K - I) / 2 . $$ For instance, if the input size is 64, the output size is 32, the kernel size is 4 and the stride is 2, then the padding is \( P= (2 \times (32 - 1) + 4 - 64) / 2 = 1 \).
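The padding formula and the worked example can be checked numerically. The helper names below are hypothetical; `conv_output_size` uses the standard floor-division output-size formula, and `conv_padding` rearranges it under the assumption that the division is exact.

```python
def conv_output_size(in_size, kernel, stride, padding):
    """Standard convolution output size: floor((I + 2P - K) / S) + 1."""
    return (in_size + 2 * padding - kernel) // stride + 1

def conv_padding(in_size, out_size, kernel, stride):
    """Padding P = (S(O - 1) + K - I) / 2, assuming the division is exact."""
    twice_p = stride * (out_size - 1) + kernel - in_size
    if twice_p < 0 or twice_p % 2:
        raise ValueError("formula needs S(O-1) + K - I to be a non-negative even number")
    return twice_p // 2

# Worked example from the text: I=64, O=32, K=4, S=2
p = conv_padding(64, 32, 4, 2)
print(p, conv_output_size(64, 4, 2, p))  # 1 32

# Arithmetic only: 4x4, stride-2 layers that halve 64 -> 32 -> 16 -> 8 -> 4
paddings = [conv_padding(i, i // 2, 4, 2) for i in (64, 32, 16, 8)]
```

Each halving step with a 4x4 kernel and stride 2 needs a padding of 1; this is just arithmetic on the formula, not a description of the exact discriminator used here.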

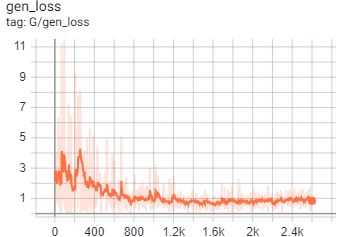

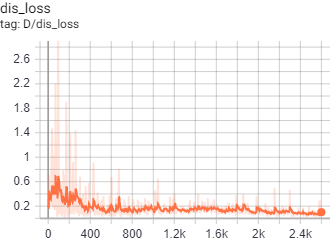

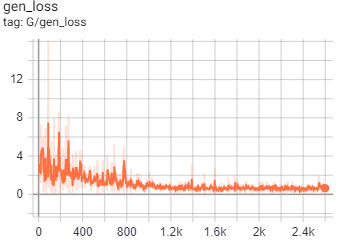

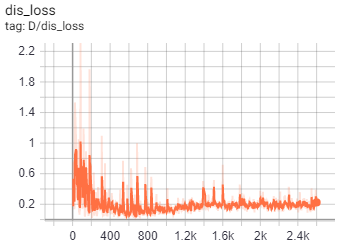

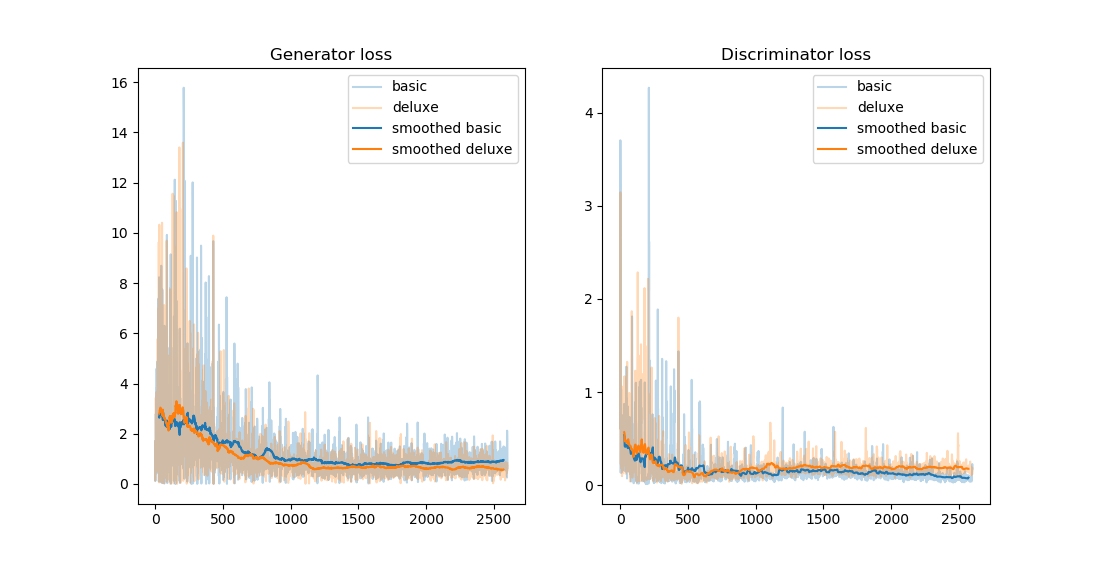

Loss curves:

To put it together, we have:

With the "deluxe" data augmentation, the generator reaches a smaller loss and the discriminator a larger one. This indicates that the generator manages to produce images that fool the discriminator more often, likely because the augmentation keeps the discriminator from overfitting the small training set.
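The report does not write out the DCGAN objective. Assuming it is the least-squares GAN loss, the same form as the GAN terms in the CycleGAN equations later in this report, the relation between the two loss curves can be sketched as below. The function names and the 0.5 weights (the usual LSGAN convention) are assumptions for illustration.

```python
def mean(values):
    return sum(values) / len(values)

def lsgan_d_loss(d_real, d_fake):
    """Least-squares discriminator loss: push D(real) toward 1 and D(fake) toward 0.

    The 0.5 weights follow the LSGAN convention (an assumption here).
    """
    return 0.5 * mean([(d - 1.0) ** 2 for d in d_real]) + 0.5 * mean([d ** 2 for d in d_fake])

def lsgan_g_loss(d_fake):
    """Least-squares generator loss: push D(fake) toward 1."""
    return mean([(d - 1.0) ** 2 for d in d_fake])

# Discriminator outputs for a batch of 2 real and 2 fake images
confident = (lsgan_d_loss([0.9, 0.9], [0.1, 0.1]), lsgan_g_loss([0.1, 0.1]))  # about (0.01, 0.81)
fooled = (lsgan_d_loss([0.5, 0.5], [0.5, 0.5]), lsgan_g_loss([0.5, 0.5]))      # (0.25, 0.25)
```

When the discriminator can no longer separate real from fake images (outputs near 0.5 for both), its loss rises from about 0.01 to 0.25 while the generator loss falls from 0.81 to 0.25, which is the direction of change observed with the augmented training.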

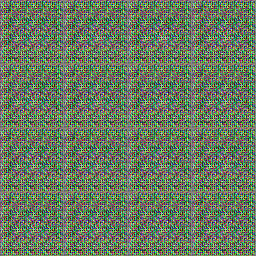

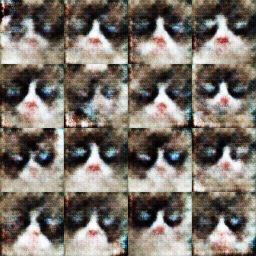

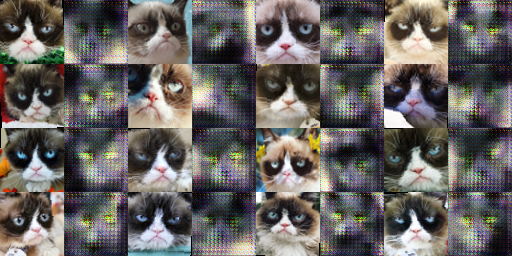

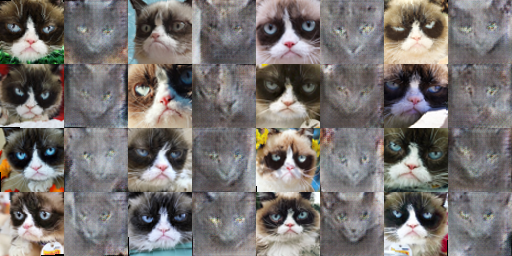

Generated images:

From these generated images, we can see that after 200 iterations the generator only produces noise-like images, and its samples improve steadily with more iterations. After 2600 iterations, the generated images look quite similar to the real images.

Cycle GAN

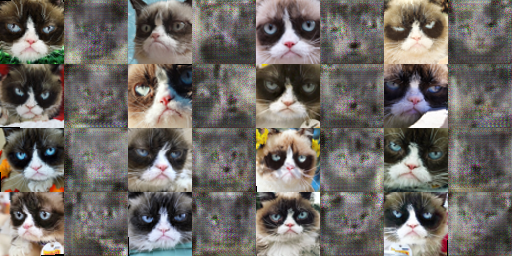

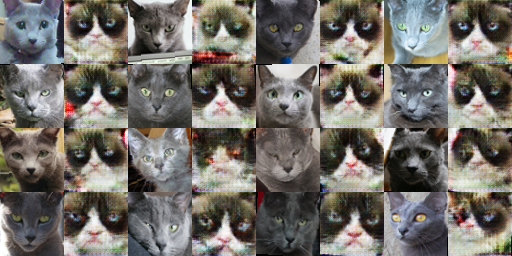

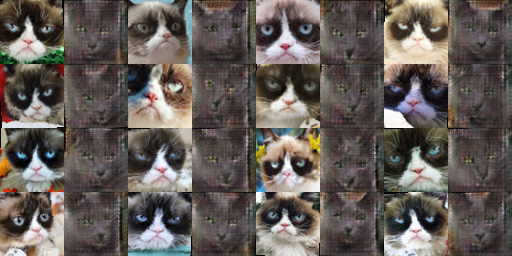

Generated images:

Above are the results using the network architecture described in the assignment (3 resblocks). With the cycle consistency loss, the generator produces target-domain images that are more similar to the source-domain input image, especially in the angle of the cat's face. For Y to X (Russian Blue to grumpy cat), the quality of the generated images is also slightly better with the cycle consistency loss (the images are less grainy than without it). I think this is because the reconstruction loss forces the fake image in the target domain to look realistic, so that the image reconstructed from it in the source domain stays close to the real source-domain image under the L1 or L2 loss.

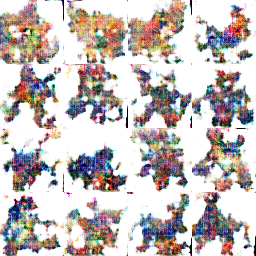

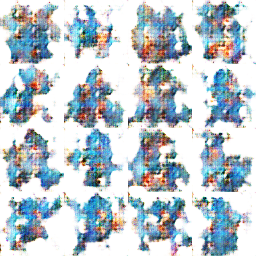

Bells & Whistles: DC/Cycle GAN for Pokemon

We train one DCGAN on Fire Pokemon and another on Water Pokemon, each for 10000 iterations, as well as a CycleGAN to convert between Fire and Water Pokemon. To make the generated images look more realistic, we train the CycleGAN for 400k iterations. Below are the results.

We can see from the results above that the CycleGAN effectively learns a mapping from an input image in the source domain to an image in the target domain with a similar contour. I think this is because the discriminator is extremely good at recognizing the generator's fake images, so the generated images are pulled toward real images in the target domain, which causes the generator to produce images that resemble existing real images.

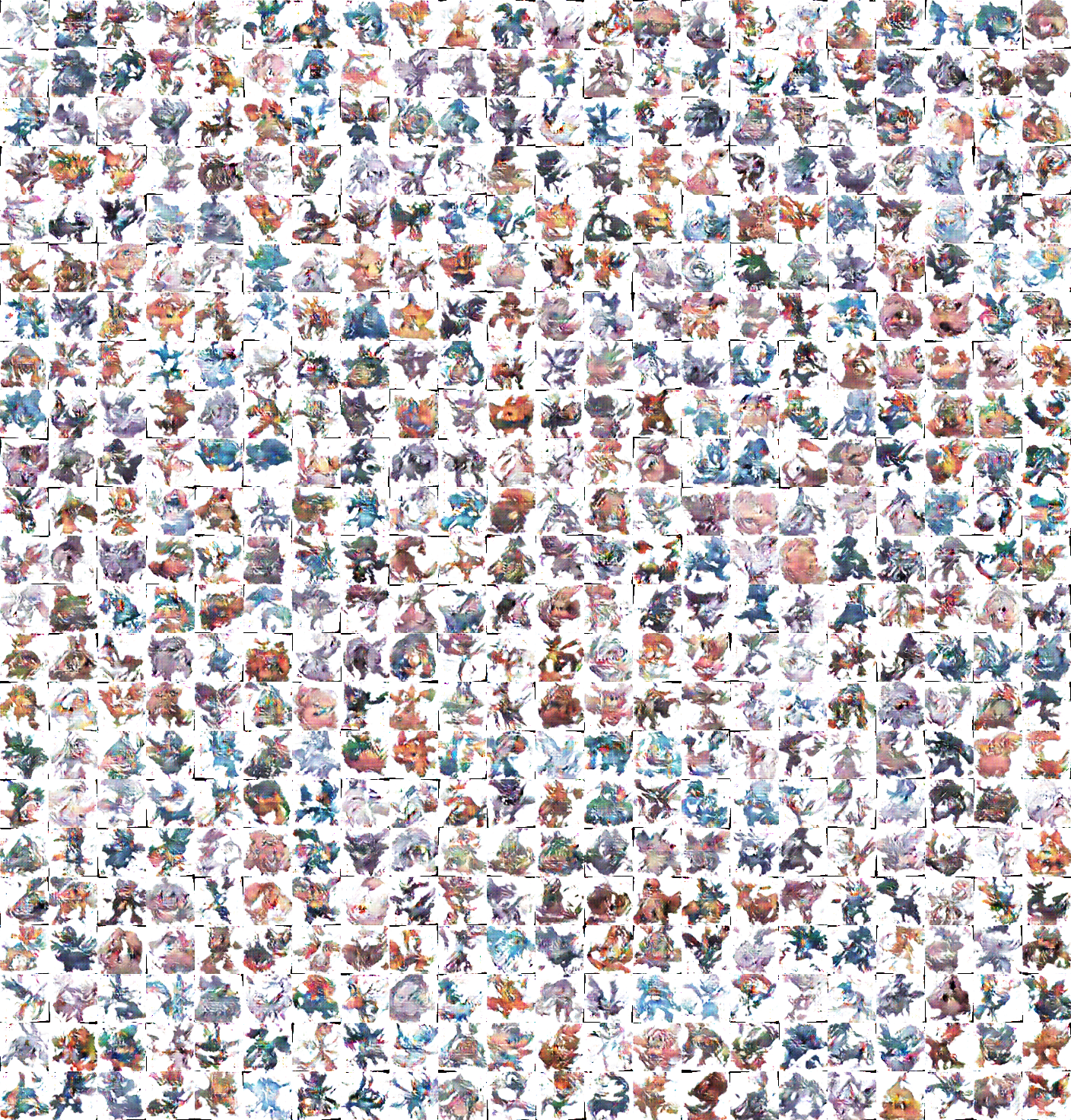

Bells & Whistles: Best Quality New Pokemon from DCGAN

To generate more realistic Pokemon images with the DCGAN, we train it for 40k iterations with a batch size of 512 images on the entire Pokemon dataset. Below is a gif of the generated Pokemon after different numbers of iterations.

We can see that the generated Pokemon are of reasonable quality: after 20k iterations, some of them have clearly recognizable feet or wings. However, the generator collapses after 38k iterations and produces poor images. Below is a static image of the generated Pokemon after 36600 iterations.

Bells & Whistles: "Cycle of Triplet"

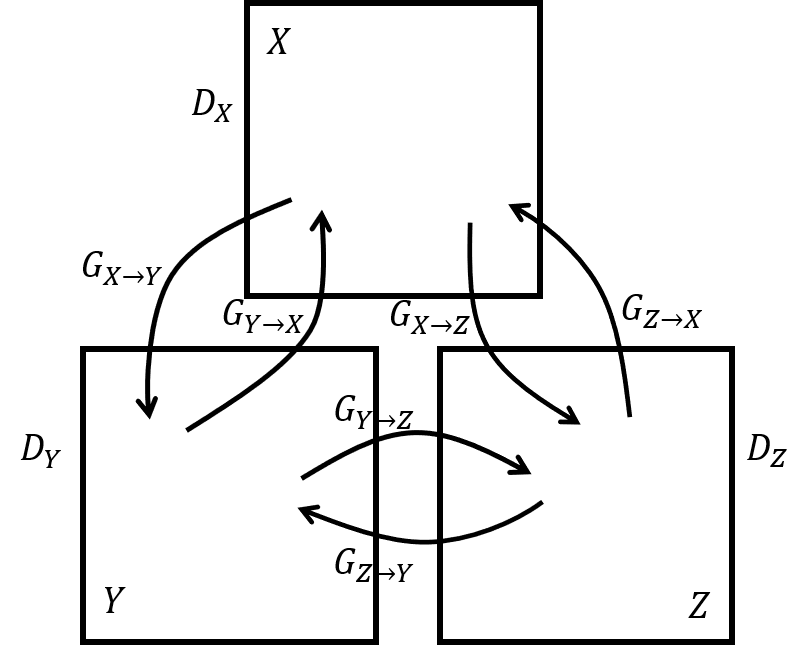

In this section, we explore another CycleGAN setting in which we simultaneously learn the conversions among a triplet of domains, as described in the figure above. In this setting, we jointly train 9 deep networks: 3 discriminators, one per domain, and 6 generators, one for each direction of the three pairwise conversions. Below, we focus on the generator loss and explore how the "GAN loss" and the cycle consistency loss extend to this setting.
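The count of 9 networks follows from the domain triplet: one generator per ordered pair of distinct domains and one discriminator per domain. The short sketch below enumerates them; the domain labels and network names are illustrative only.

```python
from itertools import permutations

DOMAINS = ("X", "Y", "Z")

# One generator per ordered (source, target) pair of distinct domains
generator_names = [f"G_{src}->{dst}" for src, dst in permutations(DOMAINS, 2)]
# One discriminator per domain
discriminator_names = [f"D_{d}" for d in DOMAINS]

print(len(generator_names), len(discriminator_names))  # 6 3
```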

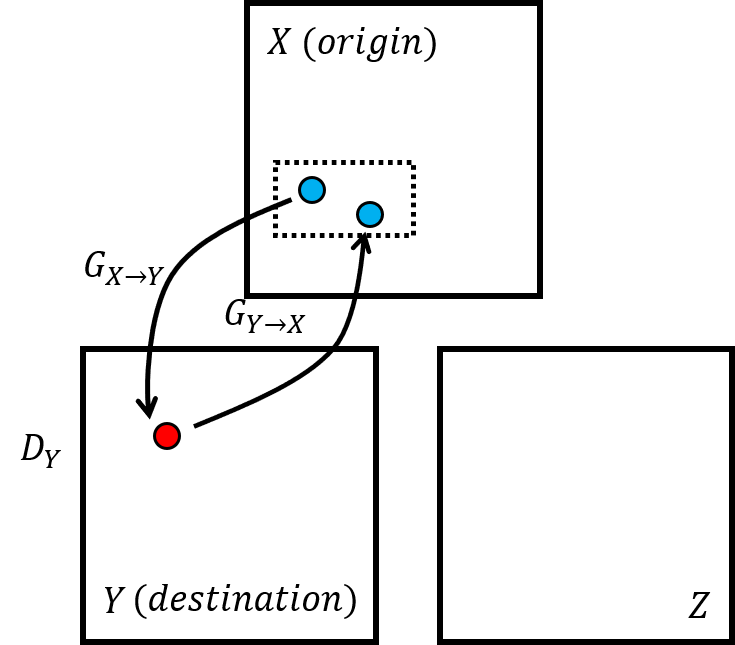

One-hop loss

We first consider the situation of the original CycleGAN, which involves only a pair of domains. Starting from a source (origin) domain, the target (destination) domain can be reached with a single conversion (one hop). We refer to this conversion as a hop from the origin domain to the destination domain, an example of which is depicted in the figure below with domain \(X\) as the origin and domain \(Y\) as the destination.

In this situation, the generator loss is identical to that of the original CycleGAN setting, i.e. $$ \mathcal{J}_{cycle_1}(ori, des) = \frac{1}{n} \sum_{i=1}^{n} |y^{(i)} - G_{des \rightarrow ori}(G_{ori \rightarrow des}(y^{(i)}))|_p $$ $$ \mathcal{J}_{GAN_1}(ori, des) = \frac{1}{n} \sum_{j=1}^{n}\left(D_{des}\left(G_{ori \rightarrow des}\left(y^{(j)}\right)\right)-1\right)^{2} $$ $$ \mathcal{J}_1(ori, des)=\mathcal{J}_{GAN_1}(ori, des) + \mathcal{J}_{cycle_1}(ori, des) $$ where \( ori \) and \( des \) stand for the origin and destination domains respectively, \( y^{(i)} \) is the \( i \)-th of \( n \) samples from the origin domain, and \( \mathcal{J}_{cycle_1}, \mathcal{J}_{GAN_1}, \mathcal{J}_1 \) denote the one-hop cycle consistency loss (measured with the \( p \)-norm), the one-hop GAN loss and the one-hop loss, respectively. Here, the origin can be any of the three domains and the destination any of the remaining two, giving a total of 6 one-hop losses.
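The one-hop loss can be sketched directly from the equations above, with samples as flat lists of floats, generators as callables stored under `(source, target)` keys, and discriminators as callables stored under domain keys. This is an illustrative toy under those assumptions (the names `p_norm` and `one_hop_loss` are hypothetical), not the training code.

```python
def p_norm(v, p=1):
    """p-norm of a flat vector (list of floats)."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def one_hop_loss(batch, ori, des, G, D, p=1):
    """J_1(ori, des) = J_GAN_1 + J_cycle_1 for a batch of samples from domain `ori`."""
    n = len(batch)
    fake = [G[(ori, des)](y) for y in batch]    # G_{ori->des}(y)
    recon = [G[(des, ori)](f) for f in fake]    # G_{des->ori}(G_{ori->des}(y))
    j_cycle = sum(p_norm([a - b for a, b in zip(y, r)], p)
                  for y, r in zip(batch, recon)) / n
    j_gan = sum((D[des](f) - 1.0) ** 2 for f in fake) / n  # least-squares GAN term
    return j_gan + j_cycle

# Toy check: X->Y adds 1, Y->X subtracts 1 (perfect cycle), D_Y always outputs 0.5
G = {("X", "Y"): lambda v: [x + 1 for x in v], ("Y", "X"): lambda v: [x - 1 for x in v]}
D = {"Y": lambda v: 0.5}
loss = one_hop_loss([[0.0, 1.0], [2.0, 3.0]], "X", "Y", G, D)  # cycle term 0, GAN term 0.25
```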

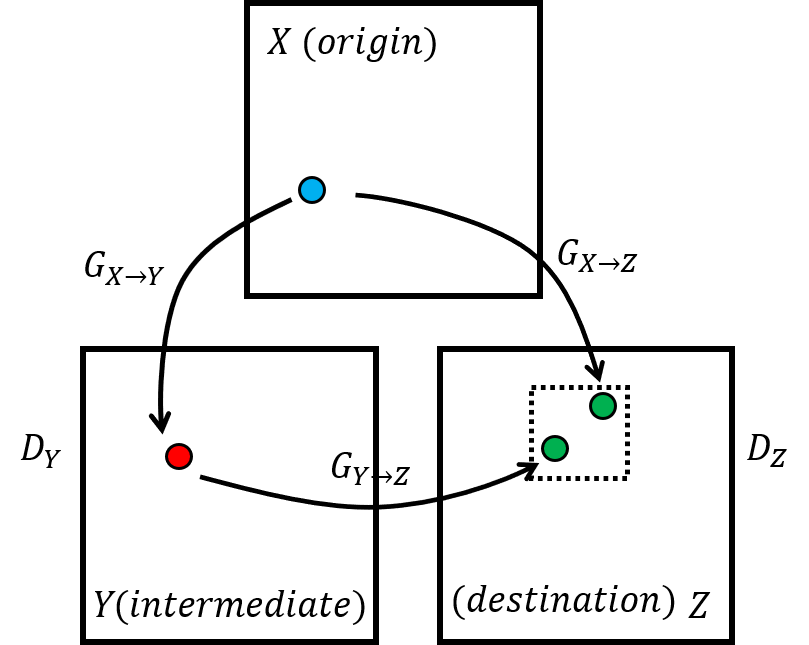

Two-hop loss

We then consider a situation that involves all three domains. Starting from the origin domain, the destination domain can be reached with either one hop or two hops. The figure below describes an example of this situation with domain \(X\) as the origin and domain \(Z\) as the destination. We refer to the generator loss in this situation as the two-hop loss, and to the domain between the origin and the destination on the two-hop path as the intermediate domain.

In this situation, the generator loss can be computed by the equations below: $$ \mathcal{J}_{cycle_2}(ori, inter, des) = \frac{1}{n} \sum_{i=1}^{n} |G_{ori \rightarrow des}(y^{(i)}) - G_{inter \rightarrow des}(G_{ori \rightarrow inter}(y^{(i)}))|_p $$ $$ \begin{split} \mathcal{J}_{GAN_2}(ori, inter, des) = & \frac{1}{n} \sum_{j=1}^{n}\left(D_{inter}\left(G_{ori \rightarrow inter}\left(y^{(j)}\right)\right)-1\right)^{2} + \\ & \frac{1}{n} \sum_{k=1}^{n}\left(D_{des}\left(G_{inter \rightarrow des}\left(G_{ori \rightarrow inter}\left(y^{(k)}\right)\right)\right)-1\right)^{2} + \\ & \frac{1}{n} \sum_{l=1}^{n}\left(D_{des}\left(G_{ori \rightarrow des}\left(y^{(l)}\right)\right)-1\right)^{2} \end{split} $$ $$ \mathcal{J}_2(ori, inter, des)=\mathcal{J}_{GAN_2}(ori, inter, des) + \mathcal{J}_{cycle_2}(ori, inter, des) $$ where \( inter \) stands for the intermediate domain, and \( \mathcal{J}_{cycle_2}, \mathcal{J}_{GAN_2}, \mathcal{J}_2 \) denote the two-hop cycle consistency loss (measured with the \( p \)-norm), the two-hop GAN loss and the two-hop loss, respectively. Here, the origin can again be any of the three domains and the destination any of the remaining two (which fixes the intermediate domain), giving a total of 6 two-hop losses.
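Under the same toy conventions (flat float vectors, generators keyed by `(source, target)`, discriminators keyed by domain; all names hypothetical), the two-hop loss compares the direct translation with the two-hop translation and applies the least-squares GAN term to all three generated outputs, following the equations above.

```python
def p_norm(v, p=1):
    """p-norm of a flat vector (list of floats)."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def two_hop_loss(batch, ori, inter, des, G, D, p=1):
    """J_2(ori, inter, des) = J_GAN_2 + J_cycle_2 for a batch from domain `ori`."""
    n = len(batch)
    direct = [G[(ori, des)](y) for y in batch]     # G_{ori->des}(y)
    mid = [G[(ori, inter)](y) for y in batch]      # G_{ori->inter}(y)
    indirect = [G[(inter, des)](m) for m in mid]   # G_{inter->des}(G_{ori->inter}(y))
    # Cycle term: the direct and the two-hop translations should agree
    j_cycle = sum(p_norm([a - b for a, b in zip(d, i)], p)
                  for d, i in zip(direct, indirect)) / n
    # GAN term: each generated sample should fool the discriminator of its domain
    j_gan = (sum((D[inter](m) - 1.0) ** 2 for m in mid)
             + sum((D[des](i) - 1.0) ** 2 for i in indirect)
             + sum((D[des](d) - 1.0) ** 2 for d in direct)) / n
    return j_gan + j_cycle

# Toy check: X->Y and Y->Z each add 1, X->Z adds 2, so both paths agree exactly
G = {("X", "Y"): lambda v: [x + 1 for x in v],
     ("Y", "Z"): lambda v: [x + 1 for x in v],
     ("X", "Z"): lambda v: [x + 2 for x in v]}
D_zero = {"Y": lambda v: 0.0, "Z": lambda v: 0.0}  # discriminators that reject everything
loss = two_hop_loss([[0.0, 1.0]], "X", "Y", "Z", G, D_zero)  # 3 GAN terms of 1.0, cycle 0
```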

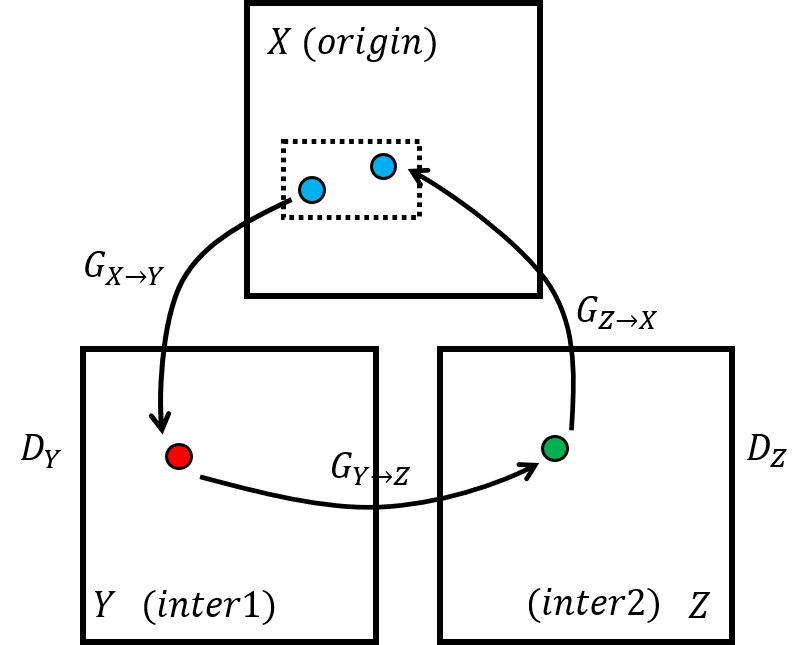

Three-hop loss

Finally, we consider a situation where we start from the origin domain, pass through the other two domains, and return to the origin domain. We refer to the two intermediate domains as inter1 and inter2. The figure below describes an example of this situation with domain \(X\) as the origin domain, \(Y\) as the first intermediate (inter1) domain, and \(Z\) as the second intermediate (inter2) domain. We refer to the generator loss in this situation as the three-hop loss.

In this situation, the generator loss can be computed by the equations below: $$ \mathcal{J}_{cycle_3}(ori, inter1, inter2) = \frac{1}{n} \sum_{i=1}^{n} |y^{(i)} - G_{inter2 \rightarrow ori}(G_{inter1 \rightarrow inter2}(G_{ori \rightarrow inter1}(y^{(i)})))|_p $$ $$ \begin{split} \mathcal{J}_{GAN_3}(ori, inter1, inter2) = & \frac{1}{n} \sum_{j=1}^{n}\left(D_{inter1}\left(G_{ori \rightarrow inter1}\left(y^{(j)}\right)\right)-1\right)^{2} + \\ & \frac{1}{n} \sum_{k=1}^{n}\left(D_{inter2}\left(G_{inter1 \rightarrow inter2}\left(G_{ori \rightarrow inter1}\left(y^{(k)}\right)\right)\right)-1\right)^{2} \end{split} $$ $$ \mathcal{J}_3(ori, inter1, inter2)=\mathcal{J}_{GAN_3}(ori, inter1, inter2) + \mathcal{J}_{cycle_3}(ori, inter1, inter2) $$ where \( inter1 \) and \( inter2 \) stand for the first and second intermediate domains respectively, and \( \mathcal{J}_{cycle_3}, \mathcal{J}_{GAN_3}, \mathcal{J}_3 \) denote the three-hop cycle consistency loss (measured with the \( p \)-norm), the three-hop GAN loss and the three-hop loss, respectively. Here, the origin can again be any of the three domains and the first intermediate domain any of the remaining two (which fixes the second intermediate domain), giving a total of 6 three-hop losses.
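A toy sketch of the three-hop loss under the same assumptions as the earlier hop sketches (flat float vectors, generators keyed by `(source, target)`, discriminators keyed by domain, hypothetical names). As in the equations above, the GAN term covers only the two intermediate outputs; the reconstruction back in the origin domain enters only through the cycle term.

```python
def p_norm(v, p=1):
    """p-norm of a flat vector (list of floats)."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def three_hop_loss(batch, ori, inter1, inter2, G, D, p=1):
    """J_3(ori, inter1, inter2) = J_GAN_3 + J_cycle_3 for a batch from domain `ori`."""
    n = len(batch)
    h1 = [G[(ori, inter1)](y) for y in batch]     # G_{ori->inter1}(y)
    h2 = [G[(inter1, inter2)](a) for a in h1]     # G_{inter1->inter2}(...)
    back = [G[(inter2, ori)](b) for b in h2]      # G_{inter2->ori}(...), back in `ori`
    # Cycle term: going all the way around should reproduce the input
    j_cycle = sum(p_norm([a - b for a, b in zip(y, r)], p)
                  for y, r in zip(batch, back)) / n
    # GAN term on the two intermediate outputs only
    j_gan = (sum((D[inter1](a) - 1.0) ** 2 for a in h1)
             + sum((D[inter2](b) - 1.0) ** 2 for b in h2)) / n
    return j_gan + j_cycle

# Toy check: X->Y and Y->Z add 1, Z->X subtracts 2, so the loop is exact
G = {("X", "Y"): lambda v: [x + 1 for x in v],
     ("Y", "Z"): lambda v: [x + 1 for x in v],
     ("Z", "X"): lambda v: [x - 2 for x in v]}
D_zero = {"Y": lambda v: 0.0, "Z": lambda v: 0.0}
loss = three_hop_loss([[0.0, 1.0]], "X", "Y", "Z", G, D_zero)  # 2 GAN terms of 1.0, cycle 0
```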

The final generator loss for the triplet-domain CycleGAN is then the sum of all one-hop, two-hop and three-hop losses: $$ S = \{X,Y,Z\} $$ $$ \begin{split} \mathcal{J} = & \left(\sum_{ori \in S} \sum_{\substack{des \in S \\ des \neq ori}}\mathcal{J}_1(ori, des)\right) + \\ & \left(\sum_{ori \in S} \sum_{\substack{inter \in S \\ inter \neq ori}} \sum_{\substack{des \in S \\ des \neq ori \\ des \neq inter}} \mathcal{J}_2(ori, inter, des)\right) + \\ & \left(\sum_{ori \in S} \sum_{\substack{inter1 \in S \\ inter1 \neq ori}} \sum_{\substack{inter2 \in S \\ inter2 \neq ori \\ inter2 \neq inter1}} \mathcal{J}_3(ori, inter1, inter2)\right) \end{split} $$
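The index sets of the three sums are exactly the ordered pairs and ordered triples of distinct domains, so the total can be assembled with `itertools.permutations`. In this sketch, `j1`, `j2` and `j3` are hypothetical callables standing in for \( \mathcal{J}_1, \mathcal{J}_2, \mathcal{J}_3 \); only the summation structure is shown.

```python
from itertools import permutations

S = ("X", "Y", "Z")

def total_generator_loss(j1, j2, j3, domains=S):
    """Sum every one-, two- and three-hop term over distinct-domain index tuples."""
    # (ori, des) with des != ori: 6 terms for three domains
    one_hop = sum(j1(ori, des) for ori, des in permutations(domains, 2))
    # (ori, inter, des), all distinct: 6 terms
    two_hop = sum(j2(ori, inter, des) for ori, inter, des in permutations(domains, 3))
    # (ori, inter1, inter2), all distinct: 6 terms
    three_hop = sum(j3(ori, i1, i2) for ori, i1, i2 in permutations(domains, 3))
    return one_hop + two_hop + three_hop

# Weighting each family differently shows 6 terms of each kind
print(total_generator_loss(lambda o, d: 1, lambda o, i, d: 10, lambda o, a, b: 100))  # 666
```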

We train the networks with this loss for 100k iterations (training collapses after 26k iterations), and the results are shown below.