16-726 Learning-Based Image Synthesis

Final Project: May I see your face?

Chang Shi, Cora Zhang, Sanil Pande

Overview

Wearing a face mask has become a common measure for limiting the spread of COVID-19. However, a face mask also hides a large part of the facial expression, which would otherwise support spoken communication. Linguists have confirmed that reading the shape of the lips substantially helps language understanding, and whether a speaker is smiling reveals their emotions. We therefore aim to generate the facial expressions hidden by masks, producing faces that look as natural as possible.

Figure: Goal

Datasets

We use the Flickr-Faces-HQ (FFHQ) dataset, which contains faces without masks, and the Correctly Masked Faces (CMFD) subset of the MaskedFace-Net dataset, which contains faces with masks. The two datasets are paired, so each masked face has an unmasked counterpart.

Figure: Flickr-Faces-HQ Dataset (FFHQ)

Figure: Correctly Masked Faces Dataset (CMFD)

Part 1: CycleGAN

We first naively treated mask removal as a style-domain translation problem. We trained the model on unpaired data and then used only the "mask -> no mask" generator to produce faces without masks.

Figure: Generator

Figure: Discriminator

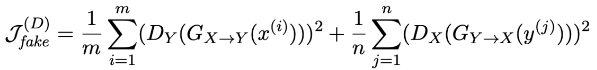

The CycleGAN loss is a combination of four terms together with the cycle-consistency loss.
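The individual terms are not written out in the text. For reference, and as an assumption about what is meant rather than a statement of the exact losses used in this project, the standard CycleGAN objective for a generator $G: X \to Y$ (here, masked to unmasked), a generator $F: Y \to X$, and discriminators $D_X$, $D_Y$ can be written as:

$$
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \, \mathcal{L}_{cyc}(G, F)
$$

$$
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x}\left[ \lVert F(G(x)) - x \rVert_1 \right] + \mathbb{E}_{y}\left[ \lVert G(F(y)) - y \rVert_1 \right]
$$

The weight $\lambda$ and the exact form of the adversarial terms (for example, least-squares versus log loss) are not specified in this report.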

The result, shown below, is poor. Although we meant to remove the mask, the generated faces are tinted blue while the mask region turns skin-colored. Clearly, wearing a mask should not be treated as a "style": intuitively, the covered and uncovered regions need to be handled separately. Paired data may also work better for delicate facial expressions.

Figure: Result

Part 2: Pixel2Pixel

We then decided to try a Pixel2Pixel model on paired training data.

Figure: Generator

Figure: Discriminator

The Pixel2Pixel loss combines a GAN loss and an $L_1$ loss.
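As a sketch of this combination, assuming the standard conditional-GAN formulation with masked input $x$ and paired unmasked target $y$ (the noise input is omitted, and the weight $\lambda$ is not given in this report):

$$
G^* = \arg\min_G \max_D \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G), \qquad \mathcal{L}_{L1}(G) = \mathbb{E}_{x, y}\left[ \lVert y - G(x) \rVert_1 \right]
$$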

The result is shown below. The generated mouth often shows the folds of the mask. One possible reason is that the $L_1$ loss is disruptive here, because the network is trying to “generate a mouth” with respect to the “shape and color of a face mask”. Moreover, we would be better off adopting an architecture that “already knows” there is a mouth under the mask.

Figure: Result

Part 3: StyleGAN2

So we tried StyleGAN2. We did not optimize the generator; instead we used one pretrained on the FFHQ dataset, which “knows” there are a nose and a mouth under the mask. The main optimization is over the latent space (W+ space). We first generate a random face from noise by mapping the noise onto the latent manifold of faces. We then compute the loss with respect to the target face and gradually make the generated face similar to the target. Specifically, the perceptual loss is computed from the conv2 layer of a pretrained VGG network. We tried several weightings of the perceptual loss + $L_2$ combination, and $\lambda=0.8$ gave the best result.

The underlying idea is as follows: we believe a face wearing a mask lacks the latent code for the mouth, so we “borrow” that code from a randomly generated face in order to generate a face that looks like the picture on the right.
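The latent optimization described above can be illustrated with a toy stand-in. This is a minimal sketch, not the project's code: it assumes a frozen linear "generator" `A @ w` in place of the pretrained StyleGAN2 network, uses a plain squared-error loss (the perceptual term is omitted), and updates only the latent code by gradient descent. The names `optimize_latent`, `A`, `lr`, and `steps` are hypothetical.

```python
import numpy as np

def optimize_latent(A, target, w_init, lr=0.1, steps=200):
    """Gradient descent on the latent code w; the generator matrix A stays fixed."""
    w = w_init.copy()
    losses = []
    for _ in range(steps):
        residual = A @ w - target                    # generated image minus target image
        losses.append(float(np.mean(residual ** 2)))
        grad = 2.0 * A.T @ residual / residual.size  # d(mean squared error) / dw
        w -= lr * grad                               # only the latent code is updated
    return w, losses

rng = np.random.default_rng(0)
A = rng.normal(size=(64, 8))       # toy frozen generator: 8-dim latent -> 64 "pixels"
target = A @ rng.normal(size=8)    # a target the toy generator can reproduce
w0 = rng.normal(size=8)            # the "random face" starting point
w_opt, losses = optimize_latent(A, target, w0)
```

In the real setting the generator is nonlinear, so the starting point matters much more than in this convex toy problem.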

Since the $L_1$ loss failed in the Pixel2Pixel model, this time we first tried the network with a pure BCE loss, which produced the nightmarish results below. We found that we should not drop the $L_1$ loss but instead apply it with a mask constraint.

Figure: Result (BCELoss without masking)

So we switched to an MSE loss with masking. For each picture, we manually draw a mask over the blue face mask so that this region is dropped out of backpropagation. The result is shown in the middle and the ground truth on the right. As you can see, the generated face now looks quite natural.

Figure: Result (MSELoss with Masking)
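The masked MSE idea can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the project's implementation: `keep` is a hypothetical binary array that is 0 over the hand-drawn face-mask region and 1 elsewhere, and the gradient is written out by hand to show that covered pixels contribute nothing to the update.

```python
import numpy as np

def masked_mse(generated, target, keep):
    """Mean squared error over pixels where keep == 1; pixels with keep == 0 are ignored."""
    keep = keep.astype(generated.dtype)
    sq_err = keep * (generated - target) ** 2
    return sq_err.sum() / max(keep.sum(), 1.0)

def masked_mse_grad(generated, target, keep):
    """Gradient of masked_mse w.r.t. generated; it is exactly zero under the face mask."""
    keep = keep.astype(generated.dtype)
    return 2.0 * keep * (generated - target) / max(keep.sum(), 1.0)

# Toy 4x4 grayscale "image": the bottom two rows play the role of the face-mask region.
target = np.zeros((4, 4))
generated = np.ones((4, 4))
generated[2:, :] = 5.0     # arbitrary content under the face mask
keep = np.ones((4, 4))
keep[2:, :] = 0.0          # 0 = covered by the face mask, excluded from the loss

loss = masked_mse(generated, target, keep)
grad = masked_mse_grad(generated, target, keep)
```

Because the covered pixels are excluded, the generator is free to fill in a nose and mouth there while still being pulled toward the target in the visible region.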

Some additional results are shown below. As we can see, the generated image may differ from the original in the uncovered region.

Figure: Other Results (MSELoss with Masking)

Because the generated result depends heavily on the first “random face”, extreme failure cases appear when that face is too different from the target face. The failure case below shows that when the target face is female and the random face is male, the result is poor. Sometimes the network also cannot tell whether the color around the face is a hat, clothes, or hair. That is why we get a super stylish man on the right who looks totally different from the target face.

Figure: Failure Case

The differences in the upper half of the face led us to the idea of image blending. We first tried Poisson blending. After stitching the generated lower half of the face back onto the original image, some of the results improve; for example, the girl at the lower left now has a very natural face. However, this works only because some images in the synthetic face mask dataset have a mask smaller than the face. In reality the face mask is larger than the face, so Poisson blending does not work well: it blends part of the background onto the face. We leave more advanced blending methods for future exploration.

Figure: Poisson Blending Results
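To make the behavior of Poisson blending concrete, here is a deliberately simplified 1D sketch in NumPy. It is not the 2D routine used for the results above; the function name `poisson_blend_1d` and its arguments are hypothetical. Inside the region `[start, stop)` the result keeps the second differences of the source, while the values just outside the region are taken from the target. It assumes `1 <= start < stop <= len(target) - 1`.

```python
import numpy as np

def poisson_blend_1d(source, target, start, stop):
    """Blend source[start:stop] into target: keep the source's second differences
    inside the region and the target's values at the region boundary."""
    n = stop - start
    # Discrete Laplacian of the source at each position inside the region.
    lap = source[start - 1:stop - 1] - 2.0 * source[start:stop] + source[start + 1:stop + 1]
    # Tridiagonal system: f[i-1] - 2 f[i] + f[i+1] = lap[i].
    A = np.zeros((n, n))
    idx = np.arange(n)
    A[idx, idx] = -2.0
    A[idx[:-1], idx[:-1] + 1] = 1.0
    A[idx[1:], idx[1:] - 1] = 1.0
    b = lap.copy()
    b[0] -= target[start - 1]   # known boundary value on the left
    b[-1] -= target[stop]       # known boundary value on the right
    out = target.astype(float).copy()
    out[start:stop] = np.linalg.solve(A, b)
    return out

target = np.linspace(0.0, 1.0, 10)
source = target + 3.0           # same shape as the target, with a constant offset
blended = poisson_blend_1d(source, target, 3, 7)
```

Since the boundary values come from the destination image, whatever lies along the seam is propagated into the blended region. This is consistent with the observation above that background pixels bleed onto the face when the mask extends past it.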

Part 4: Future Work

For future work, we can add facial landmark detection to automate the mask-drawing process. We could also design an encoder that maps the masked target image into the latent space, and then keep the sampled latent code close to that encoding. In theory, though, guessing the facial expression under the mask still involves a great deal of randomness.