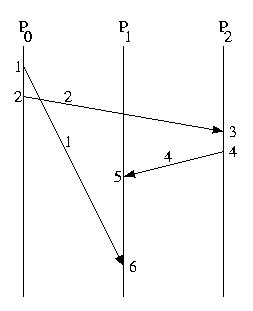

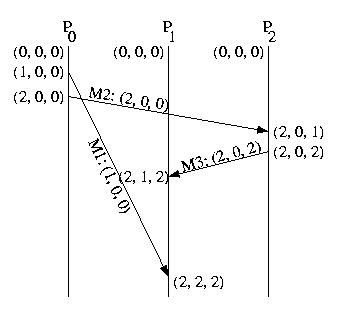

The example below shows several messages transmitted among the hosts of a distributed system. It illustrates each host's understanding of local time and the timestamps placed onto each message.

Causality and Causality Violations

Let's take another look at the diagram above. Notice that the first message sent, from P0 to P1, is the last message received. Notice also that a message is sent from P0 to P2 and another from P2 to P1. The important thing about this situation is that the message from P2 arrives at P1 before the earlier message from P0. This timing problem might prove to be critical.

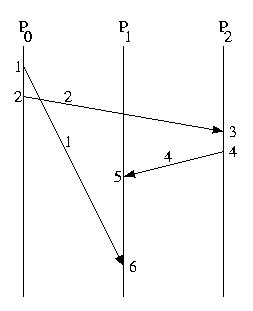

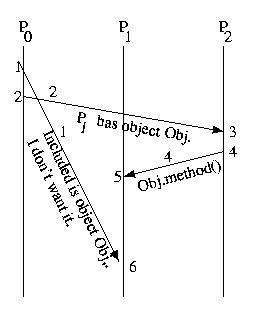

Consider the following situation that might occur if an object migrated from P0 to P1:

P0 gives Obj to P1 and tells P2. In response, P2 sends P1 a request to use that object. Unfortunately, P1 has not yet received the message from P0 -- perhaps there was an error and the message needed to be resent or, perhaps, the communication channel is just slower. But, independent of the cause, "Bang!" P2's request to use the object fails.

This example illustrates a causality violation. A causality violation occurs when a message ordering problem results in one host taking an action based on information that another host has not yet received. In this case P2 is trying to invoke a method on P1, because P2 thinks that P1 has Obj.

In designing systems, we assume that any action a host takes may be affected by any message it has previously received. As a result, we would consider the situation above to be a potential causality violation, even if the message from P2 to P1 turned out to be completely independent of the messages that P2 had received. Colloquially, we don't distinguish between potential causality violations and causality violations that have real consequences. Instead we call them both causality violations -- even if the messages turn out to be independent.

The bottom line is that a causality violation occurs if the sender of a message knows something that the recipient of that message should know (has been sent), but does not know (has not received), by the time that the message is received.

Very shortly, we'll talk about designing a communication mechanism that avoids causality violations. But for the moment, let's ask ourselves, "How can we detect (after the fact) that a causality violation has occurred?"

Lamport time is not sufficient to do this, because it tracks the total number of events in the system. This isn't helpful -- instead, we need a way of determining if messages were sent and received in the same order. In other words, if we receive M2 before M1, but M1 was sent before M2, a (potential) causality violation has occurred. The same is true if one or both of the messages arrived indirectly via other hosts. This is one of the areas where our next topic, vector time, becomes particularly useful.
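One way to see the limitation is with a small sketch. The helper names `local_event` and `receive` are hypothetical, and the update rule is the usual scalar Lamport rule: add one on each local event, and take the maximum plus one on each receive. The two scenarios below end with identical timestamps even though only the first involves a message, so the scalar values alone cannot tell us whether two events are related.

```python
def local_event(clock: int) -> int:
    """Advance a scalar Lamport clock for a local event or a send."""
    return clock + 1


def receive(clock: int, msg_ts: int) -> int:
    """Advance a scalar Lamport clock when a message stamped msg_ts arrives."""
    return max(clock, msg_ts) + 1


# Scenario A: P0 sends a message and P1 receives it (the events are related).
a_p0 = local_event(0)          # the send is stamped 1
a_p1 = receive(0, a_p0)        # the receive is stamped 2
related = (a_p0, a_p1)

# Scenario B: no messages at all (the events are unrelated).
b_p0 = local_event(0)                  # one local event on P0, stamped 1
b_p1 = local_event(local_event(0))     # two local events on P1, stamped 2
unrelated = (b_p0, b_p1)

print(related, unrelated)      # (1, 2) (1, 2)
print(related == unrelated)    # True
```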

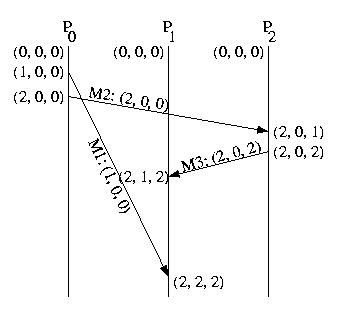

Let's take another look at the earlier example -- but this time, let's label it using vector time:

Comparing Vector Timestamps

"Vector timestamps are equal if, and only if, all corresponding elements are the same."

VT1 = VT2 iff VT1[i] = VT2[i], for every i = 1, ..., N.

"Vector timestamp VT1 is less than or equal to vector timestamp VT2 if, and only if, no element of VT1 is greater than the corresponding element in VT2."

VT1 <= VT2 iff VT1[i] <= VT2[i], for every i = 1, ..., N.

"Vector timestamp VT1 is strictly less than vector timestamp VT2 if, and only if, VT1 <= VT2 (see above) and VT1 is not equal to VT2 (see above)."

VT1 < VT2 iff VT1 <= VT2 and VT1 != VT2.

"VT1 and VT2 represent concurrent events if, and only if, VT1 is neither greater than, less than, nor equal to VT2."

VT1 and VT2 are concurrent iff VT1 !< VT2 and VT1 !> VT2 and VT1 != VT2.
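The comparison rules above can be sketched in a few lines of Python. The function names (`vt_equal`, `vt_leq`, `vt_less`, `vt_concurrent`) are made up for illustration, and timestamps are assumed to be equal-length sequences of integers, one entry per host.

```python
from typing import Sequence

VT = Sequence[int]  # assumed representation: one counter per host


def _same_length(a: VT, b: VT) -> None:
    if len(a) != len(b):
        raise ValueError("vector timestamps must have the same length")


def vt_equal(a: VT, b: VT) -> bool:
    """VT1 = VT2: every corresponding element is the same."""
    _same_length(a, b)
    return all(x == y for x, y in zip(a, b))


def vt_leq(a: VT, b: VT) -> bool:
    """VT1 <= VT2: no element of VT1 exceeds the corresponding element of VT2."""
    _same_length(a, b)
    return all(x <= y for x, y in zip(a, b))


def vt_less(a: VT, b: VT) -> bool:
    """VT1 < VT2: VT1 <= VT2 and the two are not equal."""
    return vt_leq(a, b) and not vt_equal(a, b)


def vt_concurrent(a: VT, b: VT) -> bool:
    """Neither timestamp is less than the other, and they are not equal."""
    return not vt_less(a, b) and not vt_less(b, a) and not vt_equal(a, b)


print(vt_less((1, 0, 0), (2, 0, 2)))        # True
print(vt_concurrent((1, 0, 0), (0, 1, 0)))  # True
```

Note that this is only a partial order: for concurrent timestamps, both `vt_less(a, b)` and `vt_less(b, a)` are false.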

Detecting Causality Violations Using Vector Timestamps

We can detect a causality violation using vector timestamps by comparing the timestamp of a newly received message to the local time. If the message's timestamp is less than the local time vector, a (potential) causality violation has occurred.

Why? For the local time to have advanced such that it is ahead of the timestamp of the newly received message, a prior message must have advanced the local time. The sender of that prior message must have learned of the newly arrived message, directly or indirectly, before it sent its prior message to us. Thus a (potential) causality violation occurred.

Admittedly, this doesn't fix the problem -- but at least we have a way of detecting and logging the problem. This will make it much easier to isolate and debug our system -- or at least to take mitigating action to ensure that the output from the system is correct.
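A minimal sketch of this detect-and-log step might look like the following. The names `is_causality_violation` and `on_receive` and the logger name `"causality"` are assumptions for illustration. The merge step (element-wise maximum) is the usual vector clock update, and whether the receive also counts as a local event is left out here.

```python
import logging

log = logging.getLogger("causality")  # hypothetical logger name


def is_causality_violation(msg_ts, local_time):
    """True if the message's timestamp is strictly less than the local time."""
    if len(msg_ts) != len(local_time):
        raise ValueError("vector timestamps must have the same length")
    no_element_greater = all(m <= l for m, l in zip(msg_ts, local_time))
    return no_element_greater and tuple(msg_ts) != tuple(local_time)


def on_receive(msg_ts, local_time):
    """Check an incoming message, log any violation, and merge the clocks.

    Returns (violation, new_local_time).
    """
    violation = is_causality_violation(msg_ts, local_time)
    if violation:
        log.warning("potential causality violation: message %s < local %s",
                    tuple(msg_ts), tuple(local_time))
    # Element-wise maximum: afterwards we know everything the sender knew.
    merged = tuple(max(m, l) for m, l in zip(msg_ts, local_time))
    return violation, merged


print(on_receive((1, 0, 0), (2, 0, 2)))  # (True, (2, 0, 2))
print(on_receive((0, 1, 0), (2, 0, 2)))  # (False, (2, 1, 2))
```

The second call shows the concurrent case: the message is neither older nor newer than the local time, so nothing is flagged.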

Now, let's consider this familiar example again:

M1's timestamp is (1,0,0). The local time on P1 is (2,0,2). (1,0,0) is less than (2,0,2). This indicates that a causality violation has occurred -- someone who already knew that M1 had been sent got a message to P1 before P1 received M1.

If the timestamps are concurrent, this does not represent a problem -- the messages are unrelated.
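To make the example concrete, here is a small replay of the three-host scenario. The `Process` class is a hypothetical helper, and it assumes one common convention: a host increments its own entry on every send and on every receive. Under that convention, the message from P2 carries (2,0,2), and the receiver's own entry also advances when it accepts that message. The comparison against M1 comes out the same either way.

```python
class Process:
    """One host with a vector clock (hypothetical helper for this sketch)."""

    def __init__(self, pid: int, n_hosts: int) -> None:
        self.pid = pid
        self.clock = [0] * n_hosts

    def send(self) -> tuple:
        # A send is a local event: bump our own entry, then stamp the message.
        self.clock[self.pid] += 1
        return tuple(self.clock)

    def receive(self, ts: tuple) -> bool:
        # Flag the message if its timestamp is strictly less than local time.
        violation = (all(t <= c for t, c in zip(ts, self.clock))
                     and tuple(self.clock) != tuple(ts))
        # Merge what the sender knew, then count the receive as a local event
        # (assumed convention).
        self.clock = [max(t, c) for t, c in zip(ts, self.clock)]
        self.clock[self.pid] += 1
        return violation


p0, p1, p2 = (Process(i, 3) for i in range(3))

m1 = p0.send()          # P0 -> P1, delayed in transit
m2 = p0.send()          # P0 -> P2
p2.receive(m2)
m3 = p2.send()          # P2 -> P1, overtakes m1

early = p1.receive(m3)  # arrives first: nothing to flag
late = p1.receive(m1)   # arrives last: older than what P1 already knows

print(m1, m3)           # (1, 0, 0) (2, 0, 2)
print(early, late)      # False True
```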

Matrix Logical Clocks

Before we leave time to discuss communication, let me mention one more detail. There is actually another type of logical clock that is one step more encompassing than a vector logical clock -- the matrix logical clock. Much like a vector clock maintains the simple logical time for each host, a matrix clock maintains a vector of the vector clocks for each host. Every time a message is exchanged, the sending host tells us not only what it knows about the global state of time, but also what other hosts have told it that they know about the global state of time -- reliable gossip.

This is useful in applications such as checkpointing and recovery, and garbage collection. In these cases, having a lower bound on what another host knows can prove useful by enabling the disposal of unusable objects. In the case of garbage collection, these are objects that no other object can reference. In the case of recovery, they are logs and/or checkpoints that are no longer needed.
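As a rough sketch of how such a lower bound could be used, suppose a host keeps a matrix where row i is its latest knowledge of host i's vector clock. The column-wise minimum is then a time for each host that every host is known to have reached. The names `known_by_all` and `collectable`, and the log-entry format `(origin_host, sequence_number)`, are assumptions for illustration only.

```python
def known_by_all(matrix):
    """Column-wise minimum: for each host j, the latest time of j that
    every host is known to have seen."""
    n = len(matrix)
    return [min(matrix[i][j] for i in range(n)) for j in range(n)]


def collectable(log_entries, matrix):
    """Log entries (origin_host, sequence_number) that every host is known
    to have seen already, and so could be discarded."""
    floor = known_by_all(matrix)
    return [(origin, seq) for origin, seq in log_entries if seq <= floor[origin]]


# matrix[i][j]: what this host believes host i knows about host j's clock.
matrix = [
    [3, 1, 2],
    [2, 1, 0],
    [3, 0, 2],
]
log_entries = [(0, 1), (0, 2), (0, 3), (1, 1), (2, 1)]

print(known_by_all(matrix))              # [2, 0, 0]
print(collectable(log_entries, matrix))  # [(0, 1), (0, 2)]
```

Only the first two entries from host 0 can go: the matrix shows that some host has not yet seen host 0's third event or anything from hosts 1 and 2.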

We'll discuss matrix time in more detail when we discuss checkpointing and recovery -- it is much easier to understand with a clear application.