If you examine the implementation of wait below, you will find that the wait function atomically releases the mutex and puts the thread to sleep. After the thread is signalled and wakes up, it reacquires the mutex. This prevents a lost wake-up, a situation discussed in the section describing the implementation of condition variables.

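    spin_lock s;

    GetLock (condition cv, mutex mx)
    {
        mutex_acquire (mx);
        while (lock == LOCKED)    /* re-check the state after every wake-up */
            wait (cv, mx);        /* releases mx while asleep, reacquires it on wake-up */
        lock = LOCKED;
        mutex_release (mx);
    }

    ReleaseLock (condition cv, mutex mx)
    {
        mutex_acquire (mx);
        lock = UNLOCKED;
        signal (cv);              /* wake one waiter, if there is one */
        mutex_release (mx);
    }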
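For comparison, here is roughly how the same pattern might look in Java, using java.util.concurrent.locks.ReentrantLock and Condition as stand-ins for the mutex and condition variable above. This is only a sketch: the class name CvLock, the isLocked() helper, and the demo() method are made up for illustration.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class: a blocking lock built from a mutex plus a condition variable.
class CvLock {
    private final ReentrantLock mx = new ReentrantLock(); // plays the role of mx
    private final Condition cv = mx.newCondition();       // plays the role of cv
    private boolean locked = false;                       // LOCKED / UNLOCKED state

    void getLock() throws InterruptedException {
        mx.lock();
        try {
            while (locked) {   // re-check the state after every wake-up
                cv.await();    // releases mx while asleep, reacquires it before returning
            }
            locked = true;
        } finally {
            mx.unlock();
        }
    }

    void releaseLock() {
        mx.lock();
        try {
            locked = false;
            cv.signal();       // wake one waiter, if there is one
        } finally {
            mx.unlock();
        }
    }

    boolean isLocked() {
        mx.lock();
        try {
            return locked;
        } finally {
            mx.unlock();
        }
    }

    // Demo: a second thread blocks in getLock() until the first calls releaseLock().
    static boolean demo() {
        CvLock l = new CvLock();
        try {
            l.getLock();
            Thread t = new Thread(() -> {
                try {
                    l.getLock();
                    l.releaseLock();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.start();
            l.releaseLock();
            t.join(5000);
            return !t.isAlive() && !l.isLocked();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("second thread got the lock: " + demo());
    }
}
```

The while loop around await() matters: a woken thread only knows that the state may have changed, so it checks again before taking the lock.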

Condition Variables - Implementation

This is just one implementation of condition variables; others are possible.

Data Structure

The condition variable data structure contains a doubly linked list to use as a queue. It also contains a lock to protect operations on this queue. This lock should be a spin-lock since it will only be held for very short periods of time.

    struct condition {
        proc next;         /* doubly linked list implementation of */
        proc prev;         /* queue for blocked threads */
        mutex listLock;    /* protects queue */
    };

wait()

The wait() operation adds a thread to the list and then puts it to sleep. The mutex that protects the critical section in the calling function is passed as a parameter to wait(). This allows wait() to atomically release the mutex and put the thread to sleep.

If this operation is not atomic and a context switch occurs after the mutex_release (mx) and before the thread goes to sleep, it is possible that another thread will signal before the waiting thread goes to sleep. When the waiting thread is restored to execution, it will suspend itself, but the message to wake it up will be forever gone.

    void wait (condition *c, mutex *mx)
    {
        mutex_acquire (&c->listLock);                /* protect the queue */
        enqueue (&c->next, &c->prev, thr_self());    /* enqueue */
        mutex_release (&c->listLock);                /* we're done with the list */

        /* The suspend and mutex_release() operation should be atomic */
        mutex_release (mx);
        thr_suspend (thr_self());                    /* Sleep 'til someone wakes us */

        mutex_acquire (mx);    /* Woke up -- our turn, get resource lock */
        return;
    }

signal()

The signal() operation gets the next thread from the queue and wakes it up. If the queue is empty, it does nothing.

    void signal (condition *c)
    {
        thread_id tid;

        mutex_acquire (&c->listLock);    /* protect the queue */
        tid = dequeue (&c->next, &c->prev);
        mutex_release (&c->listLock);

        if (tid > 0)
            thr_continue (tid);
        return;
    }

broadcast()

The broadcast operation wakes up every thread waiting for a particular resource. This generally makes sense only with sharable resources. Perhaps a writer just completed so all of the readers can be awakened.

    void broadcast (condition *c)
    {
        thread_id tid;

        mutex_acquire (&c->listLock);    /* protect the queue */
        while (c->next)                  /* queue is not empty */
        {
            tid = dequeue (&c->next, &c->prev);    /* wake one */
            thr_continue (tid);                    /* Make it runnable */
        }
        mutex_release (&c->listLock);    /* done with the queue */
    }
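The queue-based design above can be tried out in Java. The sketch below is not the implementation from these notes: it assumes LockSupport.park()/unpark() in place of thr_suspend()/thr_continue(), and the names ToyCondition, Waiter, and demo() are made up. Because unpark() leaves a permit behind when the target has not parked yet, and each waiter also carries its own signalled flag, a signal that arrives between releasing mx and going to sleep is not lost. The sketch ignores interruption and assumes the caller holds mx exactly once.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.LockSupport;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: a condition variable built from a queue of blocked threads.
class ToyCondition {
    // One queue node per blocked thread.
    private static final class Waiter {
        final Thread thread = Thread.currentThread();
        volatile boolean signalled = false;
    }

    private final Deque<Waiter> queue = new ArrayDeque<>();      // queue for blocked threads
    private final ReentrantLock listLock = new ReentrantLock();  // protects the queue

    // Caller must hold mx (exactly once); mx is held again when await() returns.
    void await(ReentrantLock mx) {
        Waiter w = new Waiter();
        listLock.lock();
        try {
            queue.addLast(w);            // enqueue before releasing mx
        } finally {
            listLock.unlock();
        }
        mx.unlock();                     // release the caller's mutex
        while (!w.signalled) {           // park() may return early, so re-check the flag
            LockSupport.park(this);
        }
        mx.lock();                       // woke up: get the resource lock back
    }

    void signal() {
        Waiter w;
        listLock.lock();
        try {
            w = queue.pollFirst();       // null if the queue is empty
        } finally {
            listLock.unlock();
        }
        if (w != null) {
            w.signalled = true;
            LockSupport.unpark(w.thread);
        }
    }

    void broadcast() {
        listLock.lock();
        try {
            Waiter w;
            while ((w = queue.pollFirst()) != null) {   // queue is not empty
                w.signalled = true;
                LockSupport.unpark(w.thread);           // make it runnable
            }
        } finally {
            listLock.unlock();
        }
    }

    // Demo: one thread waits for a flag; the caller sets the flag and signals.
    static boolean demo() {
        ReentrantLock mx = new ReentrantLock();
        ToyCondition cv = new ToyCondition();
        boolean[] ready = {false};       // protected by mx
        Thread waiter = new Thread(() -> {
            mx.lock();
            try {
                while (!ready[0]) {
                    cv.await(mx);
                }
            } finally {
                mx.unlock();
            }
        });
        waiter.start();
        mx.lock();
        try {
            ready[0] = true;
            cv.signal();
        } finally {
            mx.unlock();
        }
        try {
            waiter.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !waiter.isAlive();        // true if the waiter got past its wait
    }
}
```

Note the order inside await(): the thread is already on the queue when mx is released, so a signaller that runs in the gap will find it there.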

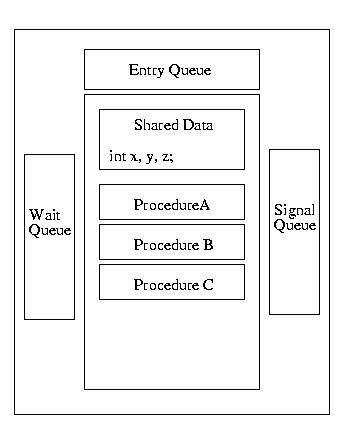

Monitors

A monitor is a synchronization tool designed to make a programmer's life simple. A monitor can be thought of as a conceptual box. A programmer can put functions/procedures/methods into this box and the monitor makes the programmer a very simple guarantee: only one function within the monitor will execute at a time -- mutual exclusion will be guaranteed.

Furthermore, the monitor can protect shared data. Data items declared within the monitor can only be accessed by functions/procedures/methods within the monitor. Therefore mutual exclusion is guaranteed for these data items. Functions/procedures/methods outside of the monitor cannot corrupt them.

If nothing is executing within the monitor, a thread can execute one of its procedures/methods/functions. Otherwise, the thread is put into the entry queue and put to sleep. As soon as a thread exits the monitor, it wakes up the next thread in the entry queue.

The picture gets a bit messier when we consider that threads executing within the monitor may require an unavailable resource. When this happens, the thread waits for this resource, using the wait operation of a condition variable. At this point, another thread is free to enter the monitor.

Now let me suggest that while a second thread is running in the monitor, it frees a resource required by the first. It signals that the resource that the first thread is waiting for has become available. What should happen? Should the first thread be immediately awakened or should the second thread finish first? This situation gives rise to different versions of monitor semantics.

Monitors In Java

The Java programming language provides support for monitors via synchronized methods within a class. We are assured that at most one synchronized method of a particular object can be active at any particular time, even in multi-threaded applications. Java does not require that all methods within a class be synchronized, so not every method of the class is necessarily part of the monitor -- only synchronized methods of a class are protected from concurrent execution. This is obviously an opportunity for a programmer to damage an appendage with a massive and rapidly moving projectile.

Java monitors are fairly limited -- especially when contrasted with monitors using Hoare or Mesa semantics. In Java, there can only be one reason to wait (block) within the monitor, not multiple conditions. When a thread waits, it is made unrunnable. When it has been signaled to wake up, it is made runnable -- it will next run whenever the scheduler happens to run it. Unlike Brinch Hansen (BH) monitors, a signal can occur anywhere in the code. Unlike Hoare semantics, the signaling thread doesn't immediately yield to the signaled thread. Unlike all three, there can only be one reason to wait/signal. In this way, they offer simplified Mesa semantics.
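To make the mutual-exclusion guarantee concrete, here is a small sketch of a class used as a monitor. The class HitCounter and its methods are made up for illustration. The lock taken by a synchronized method belongs to the individual object, so the guarantee holds per HitCounter instance.

```java
// Hypothetical example: only the synchronized methods are part of the monitor.
class HitCounter {
    private int hits = 0;                // shared data protected by the monitor

    synchronized void hit() {            // inside the monitor
        hits++;
    }

    synchronized int total() {           // inside the monitor
        return hits;
    }

    void unsafeHit() {                   // NOT synchronized: outside the monitor,
        hits++;                          // concurrent calls may lose updates
    }

    // Runs several threads that each call hit() perThread times; returns the total.
    static int runThreads(int threads, int perThread) {
        HitCounter c = new HitCounter();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    c.hit();
                }
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return c.total();
    }

    public static void main(String[] args) {
        System.out.println(runThreads(4, 10000));
    }
}
```

With hit() the total always equals threads times perThread. Swapping in unsafeHit() removes that guarantee, which is exactly the kind of self-inflicted injury described above.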

To wait for a condition, a Java thread invokes wait(). To signal a waiting thread to tell it that it can run (the condition upon which it is waiting is satisfied), a Java thread invokes notify(). Notify is actually a funny name -- normally this operation is called signal.
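The following sketch shows wait() and notify() in action and illustrates that the notifying thread keeps running inside the monitor after it calls notify(). The class SignalAndContinueDemo, its fields, and its methods are all made up for illustration. Both threads share the object's single wait set, and each wait() sits in a while loop that re-checks its own condition.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo: after notify(), the notifier continues; the waiter resumes later.
class SignalAndContinueDemo {
    private final List<String> log = new ArrayList<>();
    private boolean waiterWaiting = false;   // set once the waiter is inside the monitor
    private boolean resourceFree = false;    // the condition the waiter needs

    synchronized void waitForResource() throws InterruptedException {
        waiterWaiting = true;
        notify();                            // wake the signaller if it is waiting for us
        while (!resourceFree) {
            wait();                          // gives up the monitor while asleep
        }
        log.add("waiter resumed");
    }

    synchronized void freeResource() throws InterruptedException {
        while (!waiterWaiting) {
            wait();                          // make sure the waiter arrived first
        }
        resourceFree = true;
        notify();                            // waiter becomes runnable, but cannot
        log.add("signaller still running");  // re-enter until we leave the monitor
    }

    static List<String> run() {
        SignalAndContinueDemo m = new SignalAndContinueDemo();
        Thread waiter = new Thread(() -> {
            try {
                m.waitForResource();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread signaller = new Thread(() -> {
            try {
                m.freeResource();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        signaller.start();
        try {
            waiter.join();
            signaller.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        synchronized (m) {
            return new ArrayList<>(m.log);
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Whichever thread enters the monitor first, the log ends up with "signaller still running" ahead of "waiter resumed", because the awakened thread must reacquire the monitor before it can continue.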

Monitor Examples in Java

In class we walked through these examples in Java from Concurrent Programming: The Java Language by Stephen Hartley, published by Oxford University Press in 1998.

The first example is a solution to the Bounded Buffer problem, also known as the Producer-Consumer Problem. This solution supports one producer thread and one consumer thread.

Please notice that the producer signals a waiting consumer if it fills the first slot in the buffer -- this is because the consumer might have blocked because there were no full buffers. The consumer follows a similar practice if it takes the last item in the buffer -- there could be a producer blocked waiting for an available slot in a buffer.

    class BoundedBuffer {      // designed for a single producer thread
                               // and a single consumer thread
        private int numSlots = 0;
        private double[] buffer = null;
        private int putIn = 0, takeOut = 0;
        private int count = 0;

        public BoundedBuffer(int numSlots) {
            if (numSlots <= 0) throw new IllegalArgumentException("numSlots<=0");
            this.numSlots = numSlots;
            buffer = new double[numSlots];
            System.out.println("BoundedBuffer alive, numSlots=" + numSlots);
        }

        public synchronized void deposit(double value) {
            while (count == numSlots)
                try {
                    wait();
                } catch (InterruptedException e) {
                    System.err.println("interrupted out of wait");
                }
            buffer[putIn] = value;
            putIn = (putIn + 1) % numSlots;
            count++;                   // wake up the consumer
            if (count == 1) notify();  // since it might be waiting
            System.out.println(" after deposit, count=" + count + ", putIn=" + putIn);
        }

        public synchronized double fetch() {
            double value;
            while (count == 0)
                try {
                    wait();
                } catch (InterruptedException e) {
                    System.err.println("interrupted out of wait");
                }
            value = buffer[takeOut];
            takeOut = (takeOut + 1) % numSlots;
            count--;                           // wake up the producer
            if (count == numSlots-1) notify(); // since it might be waiting
            System.out.println(" after fetch, count=" + count + ", takeOut=" + takeOut);
            return value;
        }
    }

Fair Reader-Writer Solution

What follows is a fair solution to the reader-writer problem -- it allows the starvation of neither the readers nor the writers. The only tricky part of this code is realizing that starvation of writers by readers is avoided by yielding to earlier requests.

    class Database extends MyObject {
        private int numReaders = 0;
        private int numWriters = 0;
        private int numWaitingReaders = 0;
        private int numWaitingWriters = 0;
        private boolean okToWrite = true;
        private long startWaitingReadersTime = 0;

        public Database() {
            super("rwDB");
        }

        public synchronized void startRead(int i) {
            long readerArrivalTime = 0;
            if (numWaitingWriters > 0 || numWriters > 0) {
                numWaitingReaders++;
                readerArrivalTime = age();
                while (readerArrivalTime >= startWaitingReadersTime)
                    try {wait();} catch (InterruptedException e) {}
                numWaitingReaders--;
            }
            numReaders++;
        }

        public synchronized void endRead(int i) {
            numReaders--;
            okToWrite = numReaders == 0;
            if (okToWrite) notifyAll();
        }

        public synchronized void startWrite(int i) {
            if (numReaders > 0 || numWriters > 0) {
                numWaitingWriters++;
                okToWrite = false;
                while (!okToWrite)
                    try {wait();} catch (InterruptedException e) {}
                numWaitingWriters--;
            }
            okToWrite = false;
            numWriters++;
        }

        public synchronized void endWrite(int i) {
            numWriters--;              // ASSERT(numWriters==0)
            okToWrite = numWaitingReaders == 0;
            startWaitingReadersTime = age();
            notifyAll();
        }
    }