Lecture 1 (January 17, 2000)

Reading

Read Chapters 1-3

Comments on the Syllabus

The Instructors

The course has two instructors because it is divided into two major components: principles and practice. Dave Johnson (who delivered this lecture) is responsible for the principles component of the course: the lectures and exams. Greg Kesden (at the back of the room, on Prof. Johnson's left) is responsible for the practice component of the course: the projects.

Principles vs. Practice

The course contains a rigorous project component because we don't believe that you can really understand the lecture material until you apply it in a practical way. Implementation often unravels misunderstandings and exposes gaps in understanding. It can mean the difference between a self-consistent understanding and a correct (and self-consistent) understanding.

For the most part, Dave Johnson will present the Monday and Wednesday lectures on traditional OS material, and Greg Kesden will present the Friday lectures on the projects or special topics. This is not a hard-and-fast rule; expect plenty of variation throughout the semester.

The TAs

Jason Flinn, Chris Palmer and Benecio Sanchez (at the back of the room, on Dave's right) are the TAs for the course. Office hours and locations will be announced shortly.

Editor's note: Michelle Berger is also a TA this semester.

Prerequisites

An outdated paragraph slipped into this year's syllabus. The correct prerequisite is 15-213. The paragraph in the syllabus is based on prior semesters, before students from 15-213 had worked their way up to 15-412. When the curriculum changes at the lower levels, it is often hard to determine exactly when the effects will be seen in the upper-level courses. But these prerequisites don't really matter, since we don't intend to enforce them.

The real prerequisites for the course are C programming, including pointers, and some basic knowledge of computer architecture. Operating systems talk directly to the hardware architecture, and the projects require extensive and intricate programming in C.

Projects

This is an 18-unit course, with good reason. There are 4 large projects this semester. We have three pieces of advice:

- start early
- work consistently
- finish before the deadline

These projects are large, perhaps the largest you've had to date. Like real operating systems, they are full of pointers. If you aren't absolutely proficient with pointers, learn or relearn them without delay.

Textbook

The textbook this semester is Operating System Concepts, 5th edition, by Silberschatz and Galvin. It was originally published by Addison-Wesley-Longman, but it is now published by John Wiley and Sons. The textbooks in the bookstore may bear either logo, but are otherwise identical.

The Department of Justice felt that Addison-Wesley would be able to wield monopoly-like power in the textbook market after the merger unless it sold off some of its leading titles to a competitor. This book was sold along with several of its other computer science titles. As far as OS books go, they sold the better of their books.

Although this book is our clear favorite, the collection of OS books as a whole is fairly weak. We will only loosely follow the textbook, and we'll announce the readings in class. Please be aware that some lecture material will not be in the textbook, and we will occasionally assign readings from journal articles and other supplements.

Reminder

The projects are large -- start early.

Mid-term Exam

The mid-term exam is tentatively scheduled for Friday, March 3rd, 2000.

Course Schedule

A calendar of course events and reading assignments is available via the Web page. Please check it frequently for updates.

Homework Assignments

There will be 4 homework assignments, spaced approximately evenly throughout the semester.

Collaboration

The projects are done in groups of two. Both members of the group should work on the projects cooperatively and develop a thorough understanding of them. During project demos and written exams, we will ask questions about the projects, such as "How is project feature X implemented?" or "Recall project feature X. How would your implementation change if this feature were changed to Y?" Questions like these are very difficult to answer, especially under time constraints, without having worked on the project.

Although partners may communicate in any level of detail about a project, members of different groups may not. Different groups may only discuss the course material that is the background for the project, not the solution to the project itself.

Homework should be done individually. Again, discussing background course material is permitted, but potential solutions to the assigned problems should not be discussed. You can talk about "how to go about a solution," but not about the solution itself.

Grading Scale

There are only two interesting observations here:

- The projects and the exams contribute equally to your course grade: 50% each.
- You must do well in each component (projects and exams) to pass the course. Passing scores in both are required; students who pass only one component cannot pass the course.

Reminder

The course is 18 units for a reason: start the projects early (and help us find our bugs before they become critical).

Course Communication

There are two bboards for the course. The .announce bboard is used only for us to publish announcements. The other bboard is for communication among the students; we don't monitor it.

The best way to reach us is via the staff-412@cs mailing list. Please email your questions to this list instead of directing them to individual TAs or instructors.

The course web site, http://www.cs.cmu.edu/~412/, is one of the most valuable resources. It contains the course calendar, homework assignments, handouts, and lecture notes.

The lecture notes are not provided as a substitute for attending lectures:

- You can't ask questions while reviewing lecture notes; they're not interactive. (Well, you can ask questions, but we won't be able to hear you...)
- Not everything will be contained in the lecture notes. They are just one person's work (see Greg scribbling away in the back...)

Late Work

In this class, much like the real world, there are deadlines. In general, we don't give extensions or accept late work. You have a tentative schedule of all of this semester's graded activities; please plan accordingly. If you foresee problems arising near due dates, start early and finish early.

If insurmountable problems arise, please speak with us as far in advance as possible. The later we are informed of problems, the less likely we are to make any adjustments. If a crisis arises and you can't speak with us in advance, speak with us as soon as possible afterward. The longer you wait, the less sympathetic we will be.

Definition of an Operating System

This is a course about operating systems, so you would expect us to begin with a definition. But there is no single, clear definition; different people have different ideas. There is, however, a somewhat accepted core of ideas:

-

Operating Systems are not application programs

-

Operating systems do nothing by themselves. Left to themselves, they are

pathetically useless.

-

In this capacity, they are similar to subroutine libraries, like libc.

Libraries do nothing unless they are invoked by programs

-

Operating systems provide an environment to run programs

-

How many people have tried to program hardware without any other software

support? (A few people raise their hands).

-

The OS provides services that make life easier

-

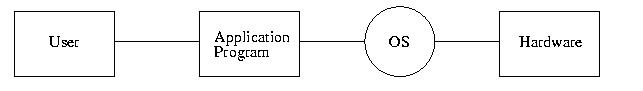

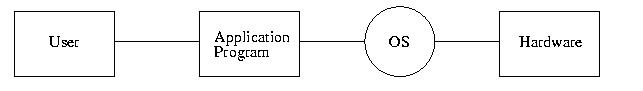

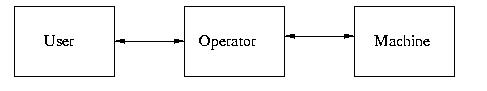

Operating systems act as an intermediary between users and the hardware

-

It is not a real OS if a program can generally talk directly to hardware

-

It is an interesting observation that in DOS and Windows 3.x programs frequently

interact with hardware. Under this definition, DOS and Windows 3.x are

not true operating systems.

-

Although it is a less common practice, Windows 9x programs may interact

directly with the hardware. Under this defintion, they may not be real

operating systems, either.

-

Programs can not interact directly in hardware in the Windows NT environment.

Under this defintion, Windows NT may be the first true operating system

from Microsoft.

Although we haven't actually provided a clear, tight definition of an operating system, hopefully we've given you some ideas.

Goals of Operating Systems

- Make the hardware convenient and easy to use
  - It is much easier to program using OS-provided hardware abstractions than to interact with the hardware directly.
  - Provide a user-friendly way of interacting with the system: a user interface. Examples might include the X Window System or MS-Windows. (Hmm, is this actually part of the OS? More later.)
- Make efficient use of available resources:
  - disk
  - network transmission time
  - the user
- Notice that the above two goals conflict; a balance is needed
  - It takes CPU time to make a system usable and friendly. This overhead hurts efficiency.
  - Years ago, computers were more expensive than users. Now computers are cheap and people are expensive. This means that the trade-off increasingly favors usability over efficiency.

But What Exactly is an Operating System?

There is no single good answer, but there are several possible ones.

Some might suggest that Solaris™ is an example of an operating system. But does that include everything within the shrink-wrapped package? There are many things on the CDs. Or is there a narrower definition of an operating system than this?

Student: Yes.

Instructor: You're exactly right. It can go either way. But we'll take a narrower view.

Consider "root." The root user is a software construct, but it does have a hardware analogue. Modern hardware has features that support the operating system, including privileged instructions. Perhaps certain instructions are only available in kernel mode, or perhaps certain protections or properties can only be set in kernel mode; user programs must live with these settings.

So is the OS anything that runs in kernel mode? What about the mail server? The window system? Compilers?

We haven't provided an exact definition, but hopefully we've helped you to construct a vague idea.

What Do Operating Systems Do?

Abstractions

Operating systems construct abstractions that make the user's life easier. Consider these examples:

- The disk drive by itself is not pleasant for a user to use. It gets worse when multiple users must share the same disk drive, as is the case on most computers.
  - Most operating systems provide an abstraction called a file. In many respects a file is a simple, easy-to-use, virtual disk drive. The file abstraction makes it easier for programs to use disk storage, and helps ensure the privacy and security of the data.
- VM/370 provided virtual card punches and card readers. Programs could use these devices for interprocess communication (IPC): one process could punch virtual cards and feed them to another process's virtual card reader.
- Consider a system with one or several processors. These systems can run several processes at the same time. To achieve this, both memory and CPU time must be divided among the processes. Each process gets a virtual piece (virtual view) of each, and can interact with the system as if it had the whole system to itself.
- Consider terminals. In real hardware, each type of terminal device might be programmed differently, but the OS creates an abstraction that hides this from the user. In fact, the OS provides users with the same interface to many different types of I/O devices. We can copy a regular file to a regular file in much the same way that we can copy a disk to a disk. We can printf to the terminal, a file (disk), or the network, all with the same interface.
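As a minimal sketch of the "same interface" point, the C function below writes through a `FILE *` without knowing what is behind it. `write_greeting` is a hypothetical helper invented for illustration; only the stdio calls it uses are standard C.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: writes one line to whatever stream it is given.
 * It neither knows nor cares whether `out` refers to a terminal, a regular
 * file, or (on POSIX systems, via fdopen() on a socket) a network connection.
 * Returns the number of characters written, or a negative value on error. */
int write_greeting(FILE *out, const char *name)
{
    assert(out != NULL && name != NULL);
    return fprintf(out, "hello, %s\n", name);
}
```

Calling `write_greeting(stdout, "terminal")` prints to the terminal; passing a stream obtained from `fopen` writes the same text to a disk file. The function body is identical in both cases because the OS and the C library hide the device differences behind one interface.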

Resource Manager

An operating system is also a resource manager (mostly of hardware resources, but also of software abstractions, such as files):

- The operating system manages resources and keeps track of who is using which resources and how much of them they are using. It also reclaims resources and recycles them for future use.
- The OS must manage resources because users can't touch the hardware directly. This is desirable, because it allows the OS to function as a layer that can enforce policy: who, what, and how many.
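The bookkeeping described above (who holds what, how many, and reclaiming on exit) can be sketched as a small table. Everything here is a hypothetical illustration: the pool of eight interchangeable units, the per-process limit of three, and the function names are assumptions, not how any particular OS organizes its resources.

```c
#include <assert.h>

#define NUM_UNITS          8   /* hypothetical pool, e.g. 8 tape drives */
#define PER_PROCESS_LIMIT  3   /* hypothetical policy: "how many" */
#define UNIT_FREE        (-1)

/* owner[i] is the id of the process holding unit i ("who"), or UNIT_FREE. */
static int owner[NUM_UNITS];

void pool_init(void)
{
    for (int i = 0; i < NUM_UNITS; i++)
        owner[i] = UNIT_FREE;
}

int units_held_by(int pid)
{
    int count = 0;
    assert(pid >= 0);
    for (int i = 0; i < NUM_UNITS; i++)
        if (owner[i] == pid)
            count++;
    return count;
}

/* Returns the unit granted to `pid`, or -1 if policy denies the request
 * or every unit is already in use. */
int pool_alloc(int pid)
{
    assert(pid >= 0);
    if (units_held_by(pid) >= PER_PROCESS_LIMIT)
        return -1;                       /* enforce the per-process limit */
    for (int i = 0; i < NUM_UNITS; i++) {
        if (owner[i] == UNIT_FREE) {
            owner[i] = pid;              /* record who holds it */
            return i;
        }
    }
    return -1;                           /* pool exhausted */
}

/* Reclaims every unit `pid` holds (e.g. when it exits) so the units can be
 * recycled; returns how many were freed. */
int pool_reclaim(int pid)
{
    int freed = 0;
    assert(pid >= 0);
    for (int i = 0; i < NUM_UNITS; i++) {
        if (owner[i] == pid) {
            owner[i] = UNIT_FREE;
            freed++;
        }
    }
    return freed;
}
```

Because user programs can only ask `pool_alloc` for a unit rather than grab hardware themselves, the limit check is the one place where the "how many" policy gets enforced.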

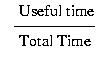

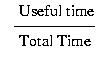

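One goal of the operating system is to increase the utilization of resources:

$$\text{Utilization} = \frac{\text{time the resource spends doing useful work}}{\text{total elapsed time}}$$

For example, the OS should avoid wasting CPU time while waiting for the disk to rotate, or wasting time switching among tasks.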

History of Operating Systems

Early Systems (late 1940's - early 1950's)

There were no operating systems. Computers filled an entire room. Scientists would sign up for time. During their scheduled time, they would enter their program into the computer using binary switches. If they needed less time than they reserved, the extra time was wasted. If they needed more time than they reserved, they had to sign up again. Some time also went unused because it fell at undesirable times of the day (or night).

Input to the systems changed and became more efficient: punch cards, tapes, &c were used. Control cards were required to describe the use of the hardware by the programs. Typically the first control card would tell the system how to read the next cards. The first card was the loader, followed by the program, followed by the data.

But there were still problems with overbooked, underbooked, and unused time slots. And time was wasted shuffling cards, because the decks often had to be changed. Consider multi-pass assemblers, where the source code had to be reprocessed along with the output from prior stages of the assembler.

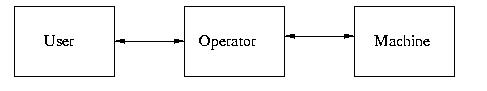

One improvement was the professional operator. The operator was more skilled than the scientist in handling the cards, so less time was wasted handling cards and no time was lost between jobs: when one job ended, the next could be loaded immediately. Some repetitive card-reading became unnecessary, because several jobs using the same code could be run as a batch. If three jobs needed to assemble code, the first pass could be run on all three jobs, and then the second pass on all three jobs.

Simple Batch Systems (early 1950's - 1960's) -- The Resident Monitor

The Resident Monitor

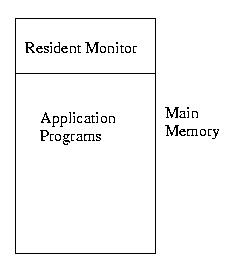

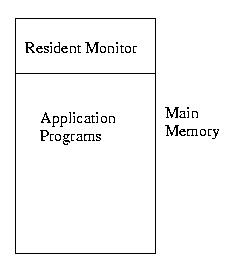

The resident monitor was, in some sense, a software implementation of the human operator: after it was loaded, it remained in memory and ran jobs.

The resident monitor also managed the I/O devices, such as the card reader and card punch, and could shorten the time between jobs (no human delay).

But the resident monitor had to be protected from user programs, both malicious and defective. Either way, programs should only be able to scribble on their own memory space, not on the memory space of the resident monitor. A bug in a program should crash only that program, not the whole machine.

For this reason, control cards were used to describe the resource utilization of a program. They would tell the system exactly which resources a program would use, how it would use them, and when it would use them. The resident monitor would not permit the program to violate these restrictions.

Other improvements included buffering, off-line operation, and spooling:

Buffering

Data could be written to a temporary area en route to an I/O device. To some extent, this decoupled the processing from the I/O. If the program was consistently faster than the device, the buffer would eventually overflow, but otherwise things went smoothly. This shielded programs from the latency of the I/O devices.
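A fixed-size circular buffer is one common way to implement such a temporary area. The sketch below is a hypothetical illustration (the struct layout, names, and tiny 4-byte capacity are assumptions); it shows both the decoupling and the overflow that occurs when the program stays ahead of the device for too long.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define BUF_SIZE 4   /* deliberately tiny so that overflow is easy to see */

/* Circular buffer between a producer (the program) and a consumer (the
 * device). Hypothetical layout, for illustration only. */
struct ring {
    char   data[BUF_SIZE];
    size_t head;    /* next slot the device will take */
    size_t count;   /* bytes currently buffered */
};

/* Program side: returns false on overflow, i.e. the program has run a
 * full buffer ahead of the device and must wait. */
bool ring_put(struct ring *r, char c)
{
    assert(r != NULL);
    if (r->count == BUF_SIZE)
        return false;
    r->data[(r->head + r->count) % BUF_SIZE] = c;
    r->count++;
    return true;
}

/* Device side: returns false if nothing is waiting to be output. */
bool ring_get(struct ring *r, char *out)
{
    assert(r != NULL && out != NULL);
    if (r->count == 0)
        return false;
    *out = r->data[r->head];
    r->head = (r->head + 1) % BUF_SIZE;
    r->count--;
    return true;
}
```

The program can deposit up to `BUF_SIZE` bytes without waiting for the device; only when it gets a full buffer ahead does `ring_put` fail, which is the overflow case described above.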

Off-line Operation

Programs could generate output to tape, a reasonably fast I/O device. Specialized, low-cost equipment could then read the tape and drive slower I/O devices like printers or card punches. This allowed higher CPU utilization by wasting less CPU time waiting for the slowest I/O devices.

Spooling

Spooling is the same thing as buffering, except that it is performed on-line. An input buffer on disk could collect data from a slow device and have it ready for the CPU with lower latency. Programs could generate output to disk, where it could collect until the slower output device could accept it. This increased utilization and eliminated the need for special equipment to drive output devices from off-line storage.

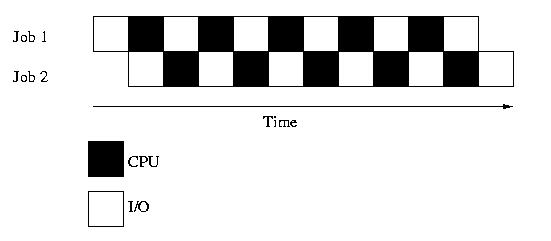

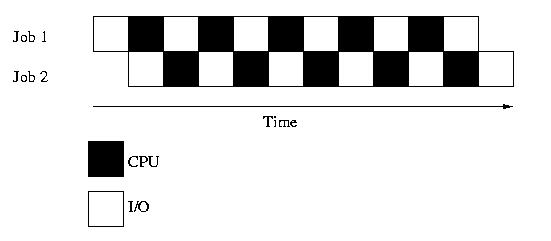

Multiprogramming (1960's - present)

Multiprogramming allows different users to execute jobs simultaneously. (A better definition would discuss multiprogramming in terms of processes, but we'll get there soon enough.)

Why would we want to run multiple jobs simultaneously? Doing so wastes time switching between processes (context switches). The answer is that it allows one job to use the CPU while another job is not using it because it is waiting for an I/O device to store or retrieve data.

But this approach requires that several programs be loaded in memory at the same time, along with the memory manager. It also requires some accounting and better protection. But that's okay: memory is cheaper now than it was in the 40's and 50's.
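A small simulation can make the utilization argument concrete. It is a sketch under simplifying assumptions: one CPU, jobs that alternate CPU and I/O bursts, each job with its own I/O device (so I/O waits can overlap), unit time steps, non-preemptive scheduling, and no context-switch cost. The `struct job` layout and `simulate` function are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_JOBS   8
#define MAX_BURSTS 8

/* Hypothetical job model: bursts alternate CPU, I/O, CPU, ... starting
 * with a CPU burst. Each job is assumed to have its own I/O device. */
struct job {
    int bursts[MAX_BURSTS];
    int nbursts;
    int cur;    /* index of the current burst */
    int left;   /* time units left in the current burst */
};

static bool finished(const struct job *j) { return j->cur >= j->nbursts; }
static bool wants_cpu(const struct job *j) { return j->cur % 2 == 0; }

/* Consume one time unit of the current burst, moving on when it is used up. */
static void advance(struct job *j)
{
    if (--j->left == 0 && ++j->cur < j->nbursts)
        j->left = j->bursts[j->cur];
}

/* Returns CPU utilization and stores the total time in *elapsed. Without
 * multiprogramming a job may not start until the previous one finishes,
 * so the CPU idles during every I/O wait. */
double simulate(struct job *jobs, int n, bool multiprogramming, int *elapsed)
{
    int t = 0, busy = 0, owner = -1;

    assert(n > 0 && n <= MAX_JOBS);
    for (int i = 0; i < n; i++) {
        assert(jobs[i].nbursts > 0 && jobs[i].nbursts <= MAX_BURSTS);
        for (int k = 0; k < jobs[i].nbursts; k++)
            assert(jobs[i].bursts[k] > 0);
        jobs[i].cur = 0;
        jobs[i].left = jobs[i].bursts[0];
    }
    for (;;) {
        int first = -1;                 /* lowest-numbered unfinished job */
        for (int i = 0; i < n && first < 0; i++)
            if (!finished(&jobs[i]))
                first = i;
        if (first < 0)
            break;

        /* Non-preemptive: keep the current owner until its CPU burst ends. */
        if (owner < 0 || finished(&jobs[owner]) || !wants_cpu(&jobs[owner])) {
            int limit = multiprogramming ? n : first + 1;
            owner = -1;
            for (int i = 0; i < limit && owner < 0; i++)
                if (!finished(&jobs[i]) && wants_cpu(&jobs[i]))
                    owner = i;
        }

        /* Note who is waiting on I/O before anyone advances this step. */
        bool in_io[MAX_JOBS];
        for (int i = 0; i < n; i++)
            in_io[i] = !finished(&jobs[i]) && !wants_cpu(&jobs[i]);

        if (owner >= 0) {
            busy++;
            advance(&jobs[owner]);
        }
        for (int i = 0; i < n; i++)
            if (in_io[i])
                advance(&jobs[i]);
        t++;
    }
    *elapsed = t;
    return (double)busy / t;
}
```

With two jobs that each compute for 10 units, wait 10 units for I/O, and compute for 10 more, running them one after the other takes 60 units with the CPU busy for 40 (about 67% utilization). With multiprogramming, each job computes while the other waits, so both finish in 40 units at 100% utilization.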

Timesharing Systems (debut early 1960's, common by 1970's)

The first popular time sharing system was a CMU favorite, UNIX. Before time sharing, separate jobs could run, but they were batched. The user would leave his/her card deck with the operator and wave goodbye, then pick up the output after the job finished. Performance was measured in terms of turnaround time, the length of time between the start of the job and the completion of its output.

Timesharing allows the user to interact with the program during execution, while also allowing multiple jobs to run at the same time. The user can interact and react, control the path of the program, and perform interactive debugging. Windowing systems, &c were developed to improve the look and feel of the interaction.

This is achieved by sharing the resources (like the CPU) among the jobs. Provided the CPU (and other resources) are fast enough, each user can view the entire system as their own. Performance is measured in terms of response time, the length of time between the start of a job and its first output.

Utilization is still a problem. Consider how much fast CPU time a slow user could waste if the CPU waited for him/her to enter information at the keyboard. Other jobs must run during this time. Overhead is also added by switching between jobs when the switch is not mandated by I/O, but is instead required to share the resources among the jobs.
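The difference between turnaround time and response time shows up even in a tiny scheduling sketch. This is a hypothetical model, not how any real scheduler works: all jobs arrive at time 0 and need only CPU time, response time is approximated as the moment a job first gets the CPU, and context-switch overhead is ignored. A quantum of 0 stands for batch-style run-to-completion; a positive quantum gives round-robin time slicing.

```c
#include <assert.h>

#define MAX_JOBS 16

/* Hypothetical model: every job arrives at time 0 and needs only CPU time.
 * quantum == 0: run each job to completion in order (batch style).
 * quantum  > 0: round-robin time slicing.
 * response[i] is when job i first gets the CPU; turnaround[i] is when it
 * completes. Context-switch cost is ignored. */
void schedule(const int *need, int n, int quantum,
              int *response, int *turnaround)
{
    int left[MAX_JOBS];
    int remaining = n, t = 0;

    assert(n > 0 && n <= MAX_JOBS && quantum >= 0);
    for (int i = 0; i < n; i++) {
        assert(need[i] > 0);
        left[i] = need[i];
        response[i] = -1;                       /* has not run yet */
    }
    while (remaining > 0) {
        for (int i = 0; i < n; i++) {           /* visit jobs in a circle */
            if (left[i] == 0)
                continue;                       /* already finished */
            if (response[i] < 0)
                response[i] = t;
            int slice = (quantum == 0 || left[i] < quantum) ? left[i] : quantum;
            t += slice;
            left[i] -= slice;
            if (left[i] == 0) {
                turnaround[i] = t;
                remaining--;
            }
        }
    }
}
```

With jobs needing 30, 10, and 10 units, batch order gives response times of 0, 30, and 40; a quantum of 5 gives 0, 5, and 10. The price is that the long job now finishes at time 50 instead of 30, and a real system would also pay for every extra switch.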

Trends

- Recently, operating systems research has focused on refining the existing tools and models. Improved versions of the same concepts are used.
- CPUs continue to become less expensive, making personal computers, workstations, and even multiprocessors commonplace.
- Communications networks are a part of most computer systems: computers in the same building and around the world communicate with each other.
- Computers are becoming increasingly small and lightweight. Consider notebooks, PDAs, and wearable computers.
- But operating systems research is not dead; there are plenty of new ideas, including new applications and very advanced work. The big-picture concepts, however, are now over twenty years old.

Hardware Support for Operating Systems

Polling vs. Interrupts

Originally, systems performed a busy-wait for I/O devices. Input devices could be polled to determine whether they had more data to deliver, and output devices could be polled to determine whether they could accept more data. The software simply looped, waiting for the device to become ready:

Example:

    while (no character typed yet)
        ;   /* busy-wait */

This was a horribly inefficient use of the CPU, but without any hardware support, it was close to the best that could be done. Perhaps a bit of useful work could be done within the busy-wait loop, but that's about it.

But with hardware support for interrupts, asynchronous signals to the CPU, utilization improved. With interrupts, the device can signal the operating system to let it know that the device is ready. Until that signal is received, the operating system tries to schedule other work that does not require the in-use resource.

An interrupt handler, or interrupt service routine (ISR), might best be described as a special subroutine that is invoked asynchronously by the hardware rather than called by the currently executing program. Whatever the system is doing when an interrupt is received, it stops and the interrupt handler executes. Once the interrupt handler is done, the system returns to what it was doing before. Using this approach, interrupt handlers can handle the slower I/O devices asynchronously, improving CPU utilization.
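Hardware interrupts can't be fielded from an ordinary user program, but standard C signals are a reasonable user-level analogue: the handler runs asynchronously, then the program resumes where it left off. This sketch uses only standard C (`signal`, `raise`, `volatile sig_atomic_t`); the choice of `SIGINT` in the usage and the helper names are assumptions for illustration.

```c
#include <assert.h>
#include <signal.h>

/* Set by the handler, cleared by the main-line code. volatile sig_atomic_t
 * is the type the C standard allows a signal handler to write safely. */
static volatile sig_atomic_t event_pending = 0;

/* The "interrupt handler": do the minimum (record the event) and return. */
static void on_event(int signo)
{
    (void)signo;
    event_pending = 1;
}

/* Install the handler for one signal; returns 0 on success, -1 on error. */
int install_handler(int signo)
{
    assert(signo > 0);
    return signal(signo, on_event) == SIG_ERR ? -1 : 0;
}

/* Called by the main program between units of useful work, instead of
 * spinning on the device: returns 1 (and clears the flag) if an event
 * arrived. An event arriving between the test and the clear is merged
 * with the one being taken. */
int take_event(void)
{
    if (!event_pending)
        return 0;
    event_pending = 0;
    return 1;
}
```

After `install_handler(SIGINT)`, the main program can do its own work and check `take_event()` occasionally; `raise(SIGINT)` simulates the device's interrupt. Keeping the handler tiny and deferring real work to the main line mirrors how interrupt handlers are usually written.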

But interrupt handlers do make the life of a program more complex. Care has to be taken to ensure that the execution of the interrupt service routine doesn't change the state of any resources that the program might be using, including CPU registers. Consider the effects of updating a register within an interrupt handler.

Example:

| Location | Operation | Pseudo-assembly |
|---|---|---|
| Program | x=1 | LOAD REG, 1 |
| | x=x+1 | INC REG, 1 |
| Interrupt Handler | x=x+2 | ADD REG, 2 |

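In the above example, suppose the interrupt arrives between the program's two instructions. The handler's ADD changes the same register that holds x, so the program ends with x = 4 instead of 2: the handler has changed the value of x in a way the program never expected. This is why an interrupt handler must save any registers it uses and restore them before returning.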

Without interrupts, it would be impossible to implement multiprogramming or timesharing. A busy-wait would be required, so one job couldn't run during another job's I/O wait. Without a timer interrupt, time slices can't be created to divide the CPU among jobs.

Interrupt Synchronization

Interrupts themselves must be synchronized. If interrupts could interrupt each other in a disorganized manner, it could become difficult to get anything accomplished.

One approach might be to disallow interrupts during the execution of any interrupt handler. But this might allow low-priority activities to prevent high-priority activities from taking place. Instead, interrupts are assigned a priority. The CPU is said to operate at some priority level, and only interrupts with a higher priority than this level are taken. If one interrupt handler is currently executing and a higher-priority interrupt occurs, the old interrupt handler stops executing until the higher-priority interrupt handler is finished.

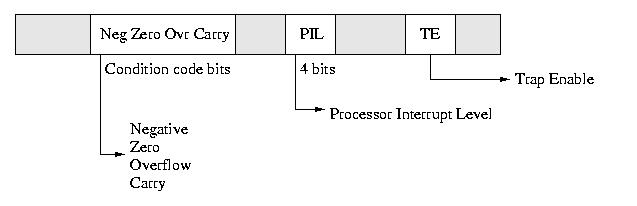

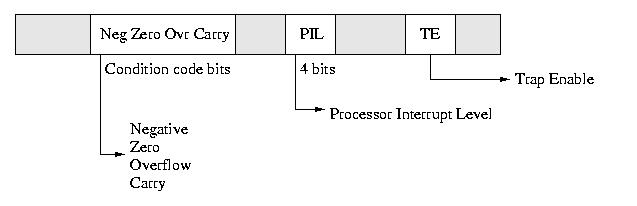

One example is the SPARC Processor State Register (PSR). This is a good time to mention that we discuss these examples to help you reinforce the concepts. We don't expect you to memorize them bit-by-bit.

(Interrupt, trap, and exception actually have different meanings, but the terms are often used interchangeably. The SPARC designers chose to ignore the distinction in naming the Trap Enable bit.)

The Processor Interrupt Level (PIL) is a 4-bit field that indicates the current interrupt level. If the current level is 2 and a level-1 interrupt comes in, we don't take it. But if a level-10 interrupt comes in, we take it.

Student Question: Are interrupts ignored or delayed?

Answer: Delayed. A bit is set recording that the interrupt occurred. But if more than one interrupt occurs at the same level, only one is recorded, since a simple bit-per-level bit mask is used.

Brute-force synchronization can be achieved by setting Trap Enable to 0 or by raising the PIL to a higher number.
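The PIL check and the bit-per-level pending mask can be sketched in a few lines. This is a simplified, hypothetical model, not the exact SPARC behavior: it ignores the Trap Enable bit and other details of the real hardware, and the struct and function names are invented for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Simplified model: a 4-bit interrupt level and one pending bit per
 * level 1..15. Not the exact SPARC semantics. */
struct intr_state {
    unsigned pil;        /* current interrupt level, 0..15 */
    uint16_t pending;    /* bit n set => a level-n interrupt is waiting */
};

/* A device requests an interrupt: only a bit is recorded, so two level-n
 * requests that arrive before either is serviced collapse into one. */
void raise_irq(struct intr_state *s, unsigned level)
{
    assert(level >= 1 && level <= 15);
    s->pending |= (uint16_t)(1u << level);
}

/* Return the highest pending level above the PIL and clear its bit, or 0
 * if every pending interrupt is masked (masked ones stay pending). */
unsigned take_irq(struct intr_state *s)
{
    assert(s->pil <= 15);
    for (unsigned level = 15; level > s->pil; level--) {
        if (s->pending & (1u << level)) {
            s->pending &= (uint16_t)~(1u << level);
            return level;
        }
    }
    return 0;
}
```

With the PIL at 2, a level-1 request is delayed rather than lost and is taken once the PIL drops to 0; two back-to-back level-10 requests are serviced only once; and raising the PIL to 14 masks a new level-5 request, which is the brute-force approach described above.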